Author | Guo Meiting, Li Runzezi

Editor | Zhu Wei Jing

Image source | Tuchong

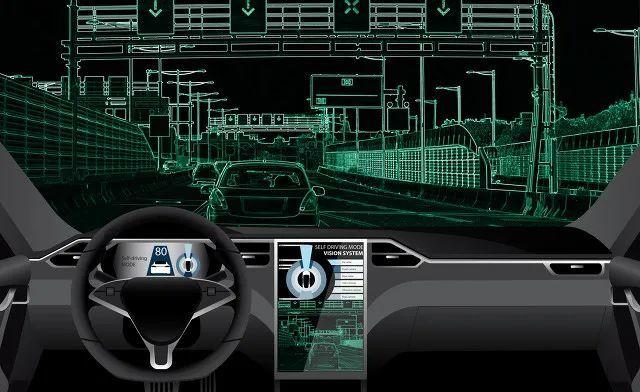

Recently, the Insurance Institute for Highway Safety (IIHS) in the United States launched a safety rating program for partial driving automation systems, assessing whether vehicles that use them have adequate safeguards. The ratings will focus primarily on how well these systems keep drivers' attention on the road.

Reportedly, the driving automation systems on many cars on the market are at Level 2 (L2). This is not true self-driving, and the driver must still actively supervise the vehicle at all times. Misuse of these partially automated systems, or driver inattention while using them, has led to multiple crashes.

For this reason, the IIHS believes that a system with a good safety rating should use multiple safeguards to ensure that the driver keeps their eyes on the road and stays in control of the steering wheel.

Safety ratings for partial automation systems

According to the IIHS website, the ratings grade a partial automation system's safeguards on four levels: good, acceptable, marginal and poor. In general, the IIHS says, a system can earn a good rating only if it keeps the driver's attention on the road and keeps the driver in control of the steering wheel.

To be rated "good," a system should meet several requirements. It should use multiple types of alerts to draw the driver's attention back to the road and to make sure the driver keeps control of the steering wheel. Automatic lane changes must be approved by the driver. The automated functions must not be usable when automatic emergency braking or lane departure prevention/warning is disabled, among other requirements.

Under the definitions of the Society of Automotive Engineers (SAE), driving automation is divided into six levels, L0 to L5. L2 is partial automation, combining adaptive cruise control and lane centering. A driver using a system at this level must stay in control (hands on the steering wheel, eyes on the road, or both).

According to public information, many cars on the market are currently equipped with L2 driver assistance systems, such as Tesla's Autopilot, Volvo's Pilot Assist and Cadillac's Super Cruise.

Vehicles at this level mainly use on-board sensors to collect data on the environment inside and outside the vehicle. They provide the driver with hazard warnings and similar functions and achieve partially automated driving. They typically offer features such as automatic parking, automatic emergency braking, electronic stability control, adaptive cruise control, intersection assistance and lane keeping. This also means that when using L2 assisted driving, drivers must constantly monitor the road and be ready to take control of the vehicle at any time.

Breaking drivers' overreliance

"Until now, even the most advanced systems have required active supervision from the driver," the IIHS said. It added that overhyping by automakers has led drivers to see these systems as "a car that can drive itself." Shockingly, there are records of drivers watching videos, playing mobile games or even taking naps while traveling at highway speeds.

In 2018, a Tesla Model X driver was killed when, with Autopilot engaged, the vehicle accelerated and struck a collapsed highway safety barrier. The National Transportation Safety Board found that the driver was likely distracted by a game on his phone at the time.

The IIHS researchers in charge of the new rating program say that some of these systems often give the impression that they can do more than they actually can. Even when drivers understand the limitations, their attention inevitably wanders. "As humans, it is harder for us to watch and wait for a problem to occur than to drive ourselves."

The National Transportation Safety Board (NTSB) has investigated several crashes involving vehicles equipped with partial automation systems. It found that more than half were caused by drivers' overreliance on these systems.

At the same time, "partially automated" systems may also expose drivers to greater risk and liability. A US court case recently came to light that is reported to be the world's first in which a driver faces felony charges for a fatal crash caused by misusing an L2 driver assistance system. The crash occurred in 2019, with the driver using Tesla's Autopilot. As the car approached a red light, he did not take control in time; Autopilot could not recognize red lights and brake for them. The vehicle ran the light and collided with another car, killing two people. Court documents show that a hearing in the case is scheduled for February 23 this year.

Automakers are trying to reduce these risks through technology. For example, GM's Super Cruise uses a driver attention camera and a display directly in the driver's line of sight to help drivers keep their attention on the road.

Last May, Tesla updated its driver monitoring system to use the cabin camera to make sure drivers stay attentive when using Autopilot and Full Self-Driving (FSD). In December of the same year, Google also published a new autonomous driving patent that determines the driver's attention precisely from the 3D gaze vector of the eyes.

Editor of this issue: Wang Tingting | Intern: Zhang Ke