Although L3 automated driving has not yet reached mass production, automakers (OEMs) remain optimistic about the technology, and the automotive industry as a whole regards autonomous driving as the direction cars will take. So what hazards stand between today's systems and autonomous driving that is ready for mass production? Let's go through them.

Before discussing the risks of autonomous driving, we need to establish how far its development has come. The Society of Automotive Engineers (SAE) divides driving automation into six levels: L0, L1, L2, L3, L4 and L5.

L0, the lowest level, is human driving: as the name suggests, the human does all of the driving, and automated driving plays no part.

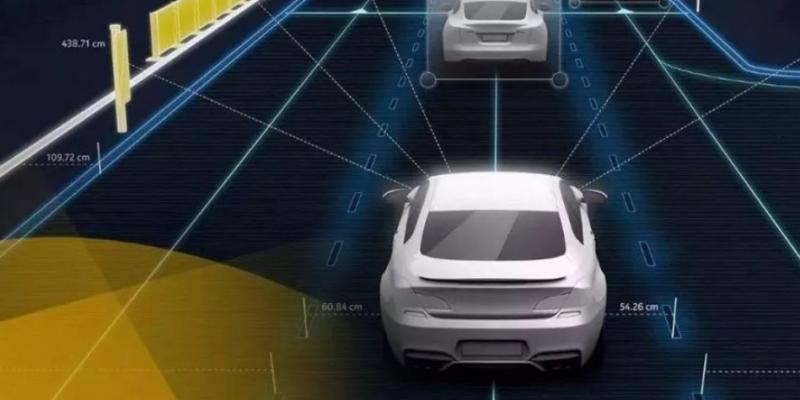

L1 is driver assistance: the system gives the driver relatively simple support, but the driver still operates the vehicle. The most representative technology is adaptive cruise control (ACC), which uses radar mounted on the vehicle body to monitor the road ahead and automatically accelerates, decelerates and brakes to follow the vehicle in front.
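To make the ACC behavior concrete, here is a minimal sketch of one common textbook approach, a constant time-gap follower: the desired distance to the car ahead grows with speed, and the controller accelerates or brakes to close the gap error. Every parameter name and value (`time_gap`, the gains, the limits) is a hypothetical illustration, not taken from any production ACC system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AccParams:
    # Illustrative values only (assumptions), not from any real vehicle.
    set_speed: float = 30.0      # driver-selected cruise speed, m/s
    time_gap: float = 1.8        # desired time headway to the lead vehicle, s
    standstill_gap: float = 5.0  # minimum gap kept at zero speed, m
    k_gap: float = 0.2           # gain on distance error, 1/s^2
    k_speed: float = 0.6         # gain on relative speed, 1/s
    k_cruise: float = 0.3        # gain on speed error when no car is ahead, 1/s
    max_accel: float = 2.0       # comfort acceleration limit, m/s^2
    max_decel: float = -3.5      # strongest braking ACC may request, m/s^2


def acc_command(ego_speed: float,
                lead_distance: Optional[float],
                lead_speed: Optional[float],
                params: Optional[AccParams] = None) -> float:
    """Return a longitudinal acceleration command in m/s^2.

    lead_distance and lead_speed are None when the radar sees no vehicle ahead.
    """
    p = AccParams() if params is None else params
    # Free-cruise term: steer the speed toward the driver's set speed.
    cruise = p.k_cruise * (p.set_speed - ego_speed)
    if lead_distance is None or lead_speed is None:
        accel = cruise
    else:
        # Constant time-gap policy: the faster we go, the larger the gap we want.
        desired_gap = p.standstill_gap + p.time_gap * ego_speed
        follow = (p.k_gap * (lead_distance - desired_gap)
                  + p.k_speed * (lead_speed - ego_speed))
        # Take the more cautious of "cruise" and "follow".
        accel = min(cruise, follow)
    # Clip to the comfort and braking limits.
    return max(p.max_decel, min(p.max_accel, accel))
```

For example, with no car ahead at 20 m/s the command saturates at the 2.0 m/s² comfort limit, while closing on a slower car only 20 m ahead at 25 m/s requests the full -3.5 m/s² of braking. Note that the driver at L1 still has to watch for everything this loop does not model.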

An L2 vehicle can automate some functions, but the driver must monitor the road and be ready to take over the vehicle at any time. L2 vehicles are usually equipped with ACC, a lane keeping system, automatic brake assist and automatic parking. L2 makes driving much easier; on simple roads the driver can even take their hands off the wheel briefly.

At L3, the vehicle can drive itself in specific environments, and the system judges from the road conditions whether it can continue driving automatically or must hand control back to the driver.

L4 delivers the autonomous driving people are pursuing: no driver monitoring or response is needed, and the vehicle makes the correct maneuvers instantly based on its own judgment. Occupants can do whatever they like; using a phone will not cost them license points, and they can even sleep. It is like being driven by a private chauffeur: you only need to get in and get out. However, L4 is restricted to defined driving areas and cannot handle every driving scenario.

L5, the highest level, is fully autonomous driving: as the name suggests, the AI takes over everything, is not limited by the road environment, and judges road conditions and responds to emergencies on its own. L5 is autonomous driving in the complete sense.
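The level descriptions above can be condensed into a small lookup table. This is a simplified reading of the text in this article, not the full SAE definitions; the names `Level`, `Traits` and `LEVELS` are hypothetical. It records, for each level, whether the human must watch the road and whether the human must be ready to take over.

```python
from enum import IntEnum
from typing import List, NamedTuple


class Level(IntEnum):
    L0 = 0
    L1 = 1
    L2 = 2
    L3 = 3
    L4 = 4
    L5 = 5


class Traits(NamedTuple):
    name: str
    driver_monitors_road: bool  # must the human watch the road continuously?
    driver_is_fallback: bool    # must the human be ready to take over?


# Simplified summary of the descriptions above (an assumption-level sketch).
LEVELS = {
    Level.L0: Traits("human driving", True, True),
    Level.L1: Traits("driver assistance", True, True),
    Level.L2: Traits("partial automation", True, True),
    Level.L3: Traits("conditional automation", False, True),
    Level.L4: Traits("high automation", False, False),
    Level.L5: Traits("full automation", False, False),
}


def engaged_but_idle() -> List[str]:
    """Levels where the car does much of the driving, yet a human
    must still be ready to take over at short notice."""
    return [lvl.name for lvl in Level
            if lvl >= Level.L2 and LEVELS[lvl].driver_is_fallback]
```

Calling `engaged_but_idle()` returns `['L2', 'L3']`, exactly the levels where the human has little to do but cannot switch off, which is why the risks below concentrate there.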

At present, the most advanced mass-production vehicles on the market have reached only L2, and although some models are technically capable of L3, regulations and unresolved questions of liability mean their L3 functions have not been fully opened to consumers. Since L4 and L5 are still at the laboratory stage, we will take L2 and L3 functions as the basis for examining how automated driving could create driving hazards.

Fatigue and distraction

As the definitions above show, L2 and L3 require the driver to be ready to take over control of the car, yet the vehicle drives itself for long stretches. This makes people prone to passive fatigue. The mismatch between rarely having to use the controls and constantly having to stay alert to danger lowers the driver's alertness, even after as little as 10 minutes on the road, and in some cases the driver may even fall asleep.

Second, for drivers who must remain on standby, long stretches of automated driving can make a trip very boring. Bored drivers tend to seek stimulation through distracting activities such as using their phones, reading magazines or watching movies. This is especially true if the driver places a high degree of trust in the automated driving system.

Alert system defects

Makers of self-driving cars appear to have recognized this problem and understand that safety requires interaction between the driver and the vehicle. To compensate, they require drivers to keep their hands on the steering wheel while the vehicle is moving, or to touch it periodically, as a sign that they remain alert at all times.

But some drivers have found ways to avoid touching the steering wheel, such as clipping a weighted ring onto it. And even when drivers touch the wheel as required, their eyes may be elsewhere, for example on their phone's screen. If drivers' eyes were on the road whenever their hands were on the wheel, nobody would have bothered to find a way to replace their hands with a water bottle. There is also evidence that long periods of automated driving leave drivers mentally disengaged, so that even a driver who is looking at the lane may not be able to handle what is happening in it.
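The weakness described above can be shown with a toy hands-on-wheel monitor. This sketch assumes the check is a simple steering-torque threshold with escalating warnings; the threshold, the timings and the names `HandsOnMonitor` and `run` are hypothetical and do not describe any particular manufacturer's system.

```python
from dataclasses import dataclass
from typing import Iterable

# Hypothetical thresholds, chosen only for illustration.
TORQUE_THRESHOLD_NM = 0.3   # steering torque treated as "hands on"
WARN_AFTER_S = 10.0         # visual warning
CHIME_AFTER_S = 20.0        # audible warning
DISENGAGE_AFTER_S = 30.0    # hand control back / begin slowing down


@dataclass
class HandsOnMonitor:
    hands_off_time: float = 0.0

    def update(self, steering_torque_nm: float, dt: float) -> str:
        """Advance the monitor by dt seconds and return the alert stage."""
        if abs(steering_torque_nm) >= TORQUE_THRESHOLD_NM:
            # Any sufficient torque resets the timer; the sensor cannot
            # tell a hand from a weight hanging on the rim.
            self.hands_off_time = 0.0
        else:
            self.hands_off_time += dt
        if self.hands_off_time >= DISENGAGE_AFTER_S:
            return "disengage"
        if self.hands_off_time >= CHIME_AFTER_S:
            return "chime"
        if self.hands_off_time >= WARN_AFTER_S:
            return "visual"
        return "ok"


def run(torques: Iterable[float], dt: float = 0.5) -> str:
    """Feed a sequence of torque samples and return the final alert stage."""
    monitor = HandsOnMonitor()
    stage = "ok"
    for torque in torques:
        stage = monitor.update(torque, dt)
    return stage
```

With no torque for 40 s (`run([0.0] * 80)`) the monitor escalates to `"disengage"`, but a weight that applies a steady 0.4 Nm (`run([0.4] * 80)`) keeps it at `"ok"` for the whole trip, even though nobody's hands, or eyes, are engaged. A torque check alone says nothing about where the driver is looking.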

The software is not perfect

Human distraction and inattention lead to negligent driving, but is the self-driving system itself free of faults? For example, might an autonomous vehicle focus its attention or computing resources on one aspect of driving and neglect another that is more critical to driving safety?

The safe operation of an autonomous vehicle depends largely on the software algorithms that drive it. Like human drivers, vehicles run by these algorithms must prioritize the activities most critical to safe driving.

But when we do not yet fully understand what human drivers should pay attention to, and when, how can we design algorithms that direct an autonomous vehicle's attention to the right things? Poorly designed self-driving software can make a vehicle drive as negligently as a careless human driver.

In summary, driver negligence is currently a problem for some vehicles with automated driving features, and the operating logic and underlying architecture of autonomous driving systems are also flawed. Until we can design vehicles that reliably attend to every activity critical to safe driving, higher levels of autonomous driving will not truly arrive.