Reporting by XinZhiyuan

Source: Microsoft Research AI Headlines

Recently, the evaluation results of WMT 2021, the international machine translation competition held under the Association for Computational Linguistics (ACL), were announced. The Microsoft ZCode-DeltaLM model, jointly released by Microsoft Research Asia, the Microsoft translation product team and the Microsoft Turing team, won the WMT 2021 "Large-Scale Multilingual Translation" track. The model is built on DeltaLM, a multilingual pre-trained model supporting hundreds of languages that was created by the machine translation research team at Microsoft Research Asia, and it was trained under Microsoft's ZCode multi-task learning framework. The researchers hope that this multilingual translation model will effectively support translation for more low-resource and zero-resource languages, and that the vision of rebuilding the Tower of Babel will one day be realized.

At the just-concluded WMT 2021, Microsoft Research Asia, the Microsoft translation product team and the Microsoft Turing team joined forces on the "Large-Scale Multilingual Machine Translation" evaluation track, and their Microsoft ZCode-DeltaLM model took first place in all three sub-tasks of the track by a wide margin. Compared with the M2M model with the same number of parameters, Microsoft ZCode-DeltaLM improved by more than 10 BLEU points on the major tasks.

The WMT machine translation competition is recognized by the academic community as the world's top machine translation competition. Since 2006 it has been held 16 times. Each edition is a platform for universities, technology companies and academic institutions around the world to demonstrate their machine translation capabilities, and the series has witnessed the continuous progress of machine translation technology. Its "Large-Scale Multilingual Machine Translation" evaluation track provides development sets and some language data for hundreds of languages, with the aim of promoting research on multilingual machine translation. The track consists of three sub-tasks: one large task, in which a single model must support all 10,302 translation directions among 102 languages, and two small tasks, one focused on translation between English and 5 European languages and the other on translation between English and 5 Southeast Asian languages.
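The figure of 10,302 directions follows from counting ordered (source, target) pairs among 102 languages, that is 102 × 101. A minimal sketch that checks the arithmetic; the language codes are placeholders, not the actual task list:

```python
from itertools import permutations


def count_directions(num_languages: int) -> int:
    """Number of ordered (source, target) pairs with source != target."""
    return num_languages * (num_languages - 1)


# Cross-check the closed form by enumerating the ordered pairs explicitly.
# The codes below are hypothetical placeholders, not the real WMT language list.
languages = [f"lang{i}" for i in range(102)]
directions = list(permutations(languages, 2))

print(count_directions(102))  # 10302
print(len(directions))        # 10302
```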

Official results of Full-Task and Small-Task1 in the WMT 2021 Large-Scale Multilingual Translation Task (BLEU score)

Plan to rebuild the Tower of Babel

According to statistics, there are currently more than 7,000 different languages in the world, and many of them are on the verge of disappearing or have already disappeared. Each language carries a different civilization. Descartes once said, "The difference in language is one of the greatest misfortunes of life." Rebuilding the Tower of Babel, that is, removing the language barriers in economic activity and cultural exchange so that people can communicate freely, has long been a common dream of mankind. In 1887, the Polish-Jewish linguist Zamenhof created a new language based on Latin, called Esperanto. He wanted all the people of the world to learn to use the same language. It was an ideal solution, but Esperanto did not catch on in the end.

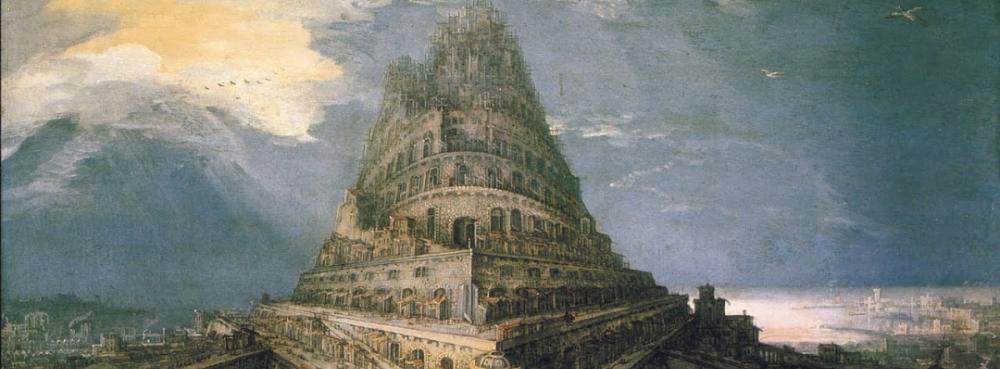

Pieter Bruegel's 1563 oil painting The Tower of Babel. The Bible records that humans first lived together and spoke the same language. As they grew more and more powerful, they wanted to build a tower reaching to heaven, called the Tower of Babel. God was furious when he heard this, and to stop the construction he confounded the human language, so that people spoke different languages and could no longer communicate with each other. The Tower of Babel project failed, and human beings have been scattered all over the world ever since.

Later, computer scientists hoped to implement machine translation through algorithmic models. In the early days, researchers tried to design rules for translating between any two languages, which involved compiling bilingual dictionaries, summarizing translation conversion rules, and building translation knowledge bases. However, the rule-based approach has serious flaws: the rules are coarse-grained, their coverage is low, and the rule base is extremely expensive to maintain. The result is low translation quality, poor robustness, and frequent conflicts and compatibility problems between new and old rules. After that, researchers began experimenting with data-driven approaches to translation, moving from example-based methods to statistical methods and then to the currently popular neural network-based methods.

A data-driven translation approach relies on the quantity and quality of bilingual parallel corpora. Thanks to the development of information technology, data in high-resource languages is relatively easy to obtain, and machine translation systems now produce increasingly high-quality translations for these languages. Such systems can be deployed and commercialized at scale, helping to remove communication barriers between some languages, promoting a variety of cross-language commercial applications, and giving people hope of rebuilding the Tower of Babel.

Despite this, machine translation still faces many difficulties. First, there are still a large number of low-resource languages whose bilingual corpora are hard to obtain, so the translation quality of stand-alone machine translation systems for these languages is very low. Second, building a separate machine translation system for every language is very expensive to develop and maintain.

Dawn of the Tower of Babel: A Multilingual Machine Translation Model

So, is there a better technical way to translate between all languages at once without sacrificing translation quality? To that end, researchers began exploring multilingual machine translation models, which use a single model to translate between all languages. The motivation and advantages of this approach are:

(1) Using a data-driven approach, the encodings of all languages are mapped into one semantic space, and the target language is then generated from that space by a decoding algorithm. Unlike the explicitly constructed Esperanto, this semantic space exists implicitly, and in theory it encodes semantic information about all human languages. This is similar to how the human brain can understand input from multiple modalities (sight, touch, hearing, smell, taste, etc.) and issue corresponding instructions for each of them.

(2) Languages are correlated: many languages are homologous, and many share the same roots. People also often mix several languages in daily use. Although resources are unevenly distributed across languages, training one model on all languages together not only lets knowledge be shared between languages, but also lets the knowledge of high-resource languages help improve the translation quality of low-resource languages.

(3) This method takes full advantage of computer hardware. With advanced deep learning algorithms, translation among dozens, hundreds, or even all languages can be supported using only one model. In this sense, machines have surpassed the translation capabilities of human experts: even according to the Guinness Book of World Records, a single person has mastered at most 32 languages, whereas a machine model can handle far more.

Multilingual machine translation models are a very important research topic at present, and are expected to help people realize their wish to rebuild the Tower of Babel. In recent years, the machine translation research team at Microsoft Research Asia has studied multilingual machine translation models from many angles, including model structure, pre-training methods, parameter initialization, fine-tuning methods, and methods for building large-scale models. The team has accumulated extensive research experience in machine translation and has achieved rich results in translation tasks centered on Chinese, including translation between East Asian languages (such as Chinese, Japanese and Korean), translation of Chinese minority languages and Chinese dialects (such as Cantonese), and translation of Classical Chinese (Wenyan). These machine translation technologies also power several of Microsoft's cross-language products, such as speech translation, cross-language retrieval and cross-language Q&A.

Dominating with DeltaLM + ZCode

Microsoft ZCode-DeltaLM, the multilingual machine translation model that stood out at WMT 2021, was trained under the Microsoft ZCode multi-task learning framework. Its core technology is DeltaLM, a multilingual pre-trained model supporting hundreds of languages that was previously created by the machine translation research team at Microsoft Research Asia. DeltaLM is the latest in a series of large-scale multilingual pre-trained language models developed by Microsoft. As a general-purpose pre-trained generation model with an encoder-decoder architecture, DeltaLM can be used for many downstream tasks (e.g., machine translation, document summarization, question generation) and has shown strong results.

Pre-training a language model usually takes a long time. To improve the training efficiency and effectiveness of DeltaLM, the researchers at Microsoft Research Asia did not train the model parameters from scratch, but initialized them from a previously pre-trained, state-of-the-art encoder model (InfoXLM). Initializing the encoder is simple, but initializing the decoder directly is difficult, because the decoder has an additional cross-attention module compared with the encoder. DeltaLM therefore modifies the traditional Transformer structure and employs a novel interleaved architecture to solve this problem (as shown in the following figure). The researchers added a fully connected layer between the self-attention layer and the cross-attention layer in the decoder. Specifically, the odd-numbered encoder layers are used to initialize the decoder's self-attention, and the even-numbered encoder layers are used to initialize the decoder's cross-attention. With this interleaved initialization, the decoder matches the structure of the encoder and can be initialized in the same way as the encoder.
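The interleaved initialization can be illustrated with a small, framework-free sketch. Strings stand in for weight tensors, and the layer and key names (`ffn_after_self_attn`, `ffn_after_cross_attn`) are hypothetical. Which feed-forward block each decoder sub-layer inherits is an assumption made here for illustration; the article itself only specifies the odd/even mapping for the two attention modules.

```python
from typing import Dict, List

Layer = Dict[str, str]


def make_encoder(num_layers: int) -> List[Layer]:
    # Strings stand in for weight tensors so the mapping is easy to inspect.
    # Layers are numbered from 1 to match the "odd/even layer" wording.
    return [
        {"self_attn": f"enc{i}.self_attn", "ffn": f"enc{i}.ffn"}
        for i in range(1, num_layers + 1)
    ]


def init_interleaved_decoder(encoder: List[Layer]) -> List[Layer]:
    """Build decoder layers from consecutive (odd, even) pairs of encoder layers."""
    if len(encoder) % 2 != 0:
        raise ValueError("need an even number of encoder layers")
    decoder = []
    for odd, even in zip(encoder[0::2], encoder[1::2]):
        decoder.append({
            "self_attn": odd["self_attn"],        # odd layer -> decoder self-attention
            "ffn_after_self_attn": odd["ffn"],    # assumption: FFN comes from the same odd layer
            "cross_attn": even["self_attn"],      # even layer -> decoder cross-attention
            "ffn_after_cross_attn": even["ffn"],  # assumption: FFN comes from the same even layer
        })
    return decoder


encoder = make_encoder(24)
decoder = init_interleaved_decoder(encoder)
print(len(decoder))  # 12
print(decoder[0])
```

Under this mapping, a 24-layer pre-trained encoder yields a 12-layer decoder, which is consistent with the 24-layer encoder and 12-layer decoder configuration mentioned later in the article.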

Schematic diagram of DeltaLM model structure and parameter initialization method

The pre-training of DeltaLM makes full use of multilingual monolingual corpora and parallel corpora. Its training task is to reconstruct randomly selected text spans in single monolingual sentences and in concatenated bilingual sentence pairs, as shown in the following figure.
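A rough sketch of this kind of span reconstruction task is given below. The sentinel format (`<mask_0>`, `<mask_1>`, ...) and the helper name `corrupt_spans` are assumptions for illustration, not DeltaLM's actual preprocessing; spans are passed in explicitly rather than sampled so that the behavior is easy to follow.

```python
from typing import List, Sequence, Tuple


def corrupt_spans(
    tokens: Sequence[str], spans: Sequence[Tuple[int, int]]
) -> Tuple[List[str], List[str]]:
    """Replace each (start, length) span with a sentinel.

    Returns (model_input, target): the input keeps the unmasked tokens, and
    the target lists each sentinel followed by the tokens it replaced.
    """
    model_input: List[str] = []
    target: List[str] = []
    cursor = 0
    for k, (start, length) in enumerate(sorted(spans)):
        if start < cursor or start + length > len(tokens):
            raise ValueError("spans must be in range and non-overlapping")
        sentinel = f"<mask_{k}>"  # hypothetical sentinel format
        model_input.extend(tokens[cursor:start])
        model_input.append(sentinel)
        target.append(sentinel)
        target.extend(tokens[start:start + length])
        cursor = start + length
    model_input.extend(tokens[cursor:])
    return model_input, target


# Monolingual case: mask spans inside a single sentence.
mono = "thank you for inviting me to your party".split()
mono_input, mono_target = corrupt_spans(mono, [(1, 2), (6, 1)])
print(" ".join(mono_input))
print(" ".join(mono_target))

# Bilingual case: concatenate a translation pair, then mask spans on both sides.
pair = "thank you".split() + "vielen dank".split()
pair_input, pair_target = corrupt_spans(pair, [(1, 1), (3, 1)])
print(" ".join(pair_input))
print(" ".join(pair_target))
```

In the bilingual case the model can use the unmasked tokens of one language to recover the masked tokens of the other, which is one plausible reason for concatenating the pair.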

Example of the DeltaLM pre-training tasks

For fine-tuning, the researchers treated multilingual translation as a downstream task of the pre-trained DeltaLM model and fine-tuned it on bilingual parallel data. Unlike fine-tuning for other natural language processing tasks, the training data for multilingual machine translation is very large, so fine-tuning is also very costly. To improve the efficiency of fine-tuning, the researchers used a progressive training method that grows the model from shallow to deep.

The fine-tuning process is divided into two phases. In the first phase, the researchers fine-tuned the DeltaLM architecture, with its 24-layer encoder and 12-layer decoder, directly on all available multilingual corpora. In the second phase, they increased the encoder depth from 24 to 36 layers: the bottom 24 encoder layers reuse the fine-tuned parameters, the top 12 layers are randomly initialized, and training then continues on bilingual data. The deeper encoder expands the capacity of the model, and because the encoder computes in parallel, the added encoder layers do not add much computation time.
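The second phase can be sketched as follows. Lists of floats stand in for layer parameters, and the function name, layer width, and Gaussian initialization with standard deviation 0.02 are illustrative assumptions rather than details from the article.

```python
import random
from typing import List

LayerParams = List[float]


def grow_encoder(
    trained: List[LayerParams], new_depth: int, width: int, seed: int = 0
) -> List[LayerParams]:
    """Keep the trained layers at the bottom and stack randomly initialized layers on top."""
    if new_depth < len(trained):
        raise ValueError("new_depth must be at least the current depth")
    rng = random.Random(seed)
    fresh = [
        [rng.gauss(0.0, 0.02) for _ in range(width)]  # assumed init scheme
        for _ in range(new_depth - len(trained))
    ]
    # Index 0 is the bottom layer; copy the trained layers so that later
    # updates to the grown model do not modify the phase-one checkpoint.
    return [list(layer) for layer in trained] + fresh


# Stand-in for the 24 encoder layers obtained after the first phase.
phase_one_encoder = [[float(i)] * 4 for i in range(24)]
phase_two_encoder = grow_encoder(phase_one_encoder, new_depth=36, width=4)

print(len(phase_two_encoder))                        # 36
print(phase_two_encoder[:24] == phase_one_encoder)   # True
```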

In addition, the researchers at Microsoft Research Asia employed a variety of data augmentation techniques to address data sparsity in many translation directions, further improving the translation performance of the multilingual model. They used monolingual and bilingual corpora to augment the data in three ways:

1) To obtain back-translation data between English and any other language, the researchers used the initial translation model to back-translate English monolingual data as well as monolingual data from other languages;

2) To obtain dual-pseudo-parallel data for non-English directions, the researchers translated the same English text into two languages and paired the results. When a non-English direction was already good enough, they also directly back-translated monolingual corpora in that direction to obtain pseudo-parallel data;

3) The researchers also used a pivot language for data augmentation. Specifically, they back-translated the bilingual data between the pivot language and English, obtaining trilingual data that links the target language to both English and the pivot language.
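The three augmentation steps above can be sketched with a stub translator. Everything here is hypothetical: `stub_translate` only tags the text with a direction instead of calling a real model, and the function names and data layout are assumptions for illustration, not the team's pipeline.

```python
from typing import Callable, Dict, List, Tuple

# (text, source_language, target_language) -> translated text
Translate = Callable[[str, str, str], str]


def stub_translate(text: str, src: str, tgt: str) -> str:
    # Placeholder for the initial translation model: it only tags the direction.
    return f"<{src}->{tgt}> {text}"


def back_translate(
    mono_target: List[str], src: str, tgt: str, translate: Translate
) -> List[Tuple[str, str]]:
    """1) Pair each real target sentence with a synthetic source sentence."""
    return [(translate(y, tgt, src), y) for y in mono_target]


def dual_pseudo(
    english: List[str], lang_a: str, lang_b: str, translate: Translate
) -> List[Tuple[str, str]]:
    """2) Translate the same English text into two languages and pair the outputs."""
    return [(translate(e, "en", lang_a), translate(e, "en", lang_b)) for e in english]


def pivot_triples(
    pivot_en_pairs: List[Tuple[str, str]], pivot: str, target: str, translate: Translate
) -> List[Dict[str, str]]:
    """3) Translate the pivot side of pivot-English data into the target language."""
    return [
        {target: translate(p, pivot, target), "en": e, pivot: p}
        for p, e in pivot_en_pairs
    ]


print(back_translate(["hallo welt"], "en", "de", stub_translate))
print(dual_pseudo(["hello world"], "fr", "de", stub_translate))
print(pivot_triples([("bonjour", "hello")], "fr", "oc", stub_translate))
```

In each case one side of the resulting pair is human-written text and the other is machine output, except in step 2 where both sides are synthetic, which is why the article calls that data "pseudo" on both sides.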

The training data is a complex mix of types (parallel data, synthetic back-translated data, and dual-pseudo-parallel data), and the amount of data varies greatly across languages. To handle this, the researchers used a dynamic adjustment strategy that not only adjusts the priority of each data type, but also applies temperature sampling to balance how often data from different languages is used in training. For example, in the early stage of fine-tuning, more emphasis is placed on learning high-resource language translation, while in later stages translation knowledge is learned more evenly across languages. For each language pair, the researchers also decided, based on performance on the development set, whether to translate directly or to bridge through English as the pivot language.
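A minimal sketch of temperature sampling and of the direct-versus-pivot decision is shown below. The common formulation p_i ∝ (n_i / N)^(1/T) is assumed here, along with a linear temperature schedule from 1.0 to 5.0; the article does not state the exact formula, schedule, or values, and all function names and dataset sizes are hypothetical.

```python
from typing import Dict


def sampling_probs(sizes: Dict[str, int], temperature: float) -> Dict[str, float]:
    """p_i proportional to (n_i / N) ** (1 / T); a larger T flattens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    total = sum(sizes.values())
    weights = {k: (n / total) ** (1.0 / temperature) for k, n in sizes.items()}
    norm = sum(weights.values())
    return {k: w / norm for k, w in weights.items()}


def temperature_at(step: int, total_steps: int, start: float = 1.0, end: float = 5.0) -> float:
    """Assumed linear schedule: low T early (favors high-resource data), higher T later."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return start + frac * (end - start)


def choose_strategy(dev_bleu_direct: float, dev_bleu_pivot: float) -> str:
    """Pick direct or English-pivot translation for a language pair by dev-set BLEU."""
    return "direct" if dev_bleu_direct >= dev_bleu_pivot else "pivot"


# Hypothetical corpus sizes for one high-resource and one low-resource pair.
sizes = {"en-fr": 1_000_000, "en-is": 10_000}
early = sampling_probs(sizes, temperature_at(0, 100))
late = sampling_probs(sizes, temperature_at(100, 100))
print(early)
print(late)
print(choose_strategy(dev_bleu_direct=20.0, dev_bleu_pivot=22.5))  # pivot
```

With T = 1 the sampling probabilities simply follow the data sizes, so the high-resource pair dominates. As T grows, the low-resource pair is sampled more often, which matches the article's description of learning more evenly in later stages.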

Using the methods above, the researchers built the large-scale multilingual system Microsoft ZCode-DeltaLM, and its official results on the hidden test set of WMT 2021 exceeded expectations. As shown in the chart below, the model leads the second-place competitor by an average of 4 BLEU points and is about 10 to 21 BLEU points ahead of the baseline M2M-175 model. Compared with the larger M2M-615 model, its translation quality also leads by 10 to 18 BLEU points.

Summary of evaluation results for the WMT 2021 Large-Scale Multilingual Translation task

Universal models, beyond translation

While the Microsoft researchers are excited about the results achieved at WMT 2021, what is even more exciting is that Microsoft ZCode-DeltaLM is not just a translation model, but a general-purpose pre-trained encoder-decoder language model that can be used for all types of natural language generation tasks. Microsoft ZCode-DeltaLM also performs best on many of the generation tasks in the GEM Benchmark, including summarization (Wikilingua), text simplification (WikiAuto), and structured data-to-text generation (WebNLG). As shown in the figure below, Microsoft ZCode-DeltaLM far outperforms models with more parameters, such as the 3.7-billion-parameter mT5 XL model.

Summary of Microsoft ZCode-DeltaLM evaluation results on the summarization and text simplification tasks in the GEM Benchmark