According to a January 16 report from the Shanghai Institute of Microsystem and Information Technology of the Chinese Academy of Sciences, burial accidents such as earthquakes and landslides cut off visual perception, which hinders efforts to search for and locate trapped people.

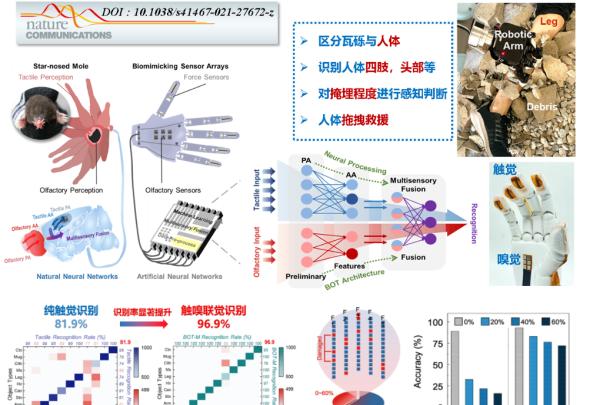

To make the most of the critical rescue window and raise the success and survival rates of search and rescue, the team of Tao Hu, a researcher at the Shanghai Institute of Microsystem and Information Technology of the Chinese Academy of Sciences, set out to meet the need to identify and rescue people trapped in extreme environments where no visual input is available. With support from the national Science and Technology Innovation 2030 "New Generation Artificial Intelligence" major project, and inspired by the "touch-smell fusion" perception of the star-nosed mole, the team integrated MEMS olfactory sensors, a flexible tactile sensor array, and a multimodal machine learning algorithm to build an intelligent manipulator that, like the star-nosed mole, combines touch and smell (pictured).

▲ Bionic intelligent manipulator integrating touch and smell, for emergency rescue in burial scenarios

According to the report, the system relies on high-performance silicon-based MEMS gas sensors, whose sensitivity exceeds that of the human nose by more than an order of magnitude, and pressure sensors, whose detection limit outperforms human touch by more than an order of magnitude. After touching an object, the mechanical fingers capture key features such as its local micromorphology, material hardness, and overall contour, while the palm simultaneously sniffs out the object's odor "fingerprint". A bionic tactile-olfactory synesthesia (BOT) machine learning neural network then processes these signals in real time, allowing the system to identify a human body, determine which body part it is touching, assess the burial state, and remove obstacles, closing the loop for rescue.
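The article does not describe the internals of the BOT network. As a rough illustration of what fusing the two senses can mean, the sketch below uses feature-level (early) fusion: it concatenates a 70-channel tactile reading and a 6-channel olfactory reading into one vector and classifies it with a nearest-centroid rule. Only the channel counts come from the article; the scaling scheme, the classifier, the names `fuse` and `NearestCentroid`, and all sample values are hypothetical stand-ins, not the authors' neural network.

```python
import math
from typing import Dict, List, Sequence

# Channel counts are from the article; everything else is a hypothetical illustration.
N_TACTILE = 70
N_OLFACTORY = 6


def fuse(tactile: Sequence[float], olfactory: Sequence[float]) -> List[float]:
    """Early fusion: scale each modality by 1/sqrt(channels), then concatenate."""
    if len(tactile) != N_TACTILE or len(olfactory) != N_OLFACTORY:
        raise ValueError("unexpected number of sensor channels")
    t_scale = 1.0 / math.sqrt(N_TACTILE)
    o_scale = 1.0 / math.sqrt(N_OLFACTORY)
    return [v * t_scale for v in tactile] + [v * o_scale for v in olfactory]


class NearestCentroid:
    """Assigns a fused vector to the class whose mean training vector is closest."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, samples: List[List[float]], labels: List[str]) -> "NearestCentroid":
        sums: Dict[str, List[float]] = {}
        counts: Dict[str, int] = {}
        for x, y in zip(samples, labels):
            acc = sums.setdefault(y, [0.0] * len(x))
            for i, v in enumerate(x):
                acc[i] += v
            counts[y] = counts.get(y, 0) + 1
        # Centroid = per-channel mean of each class's fused vectors.
        self.centroids = {y: [v / counts[y] for v in acc] for y, acc in sums.items()}
        return self

    def predict(self, x: List[float]) -> str:
        # Euclidean distance to each class centroid; the nearest class wins.
        return min(self.centroids, key=lambda y: math.dist(x, self.centroids[y]))


# Hypothetical readings: uniform pressure levels (soft vs. hard) plus odor profiles.
train = [
    (fuse([0.30] * 70, [0.90, 0.80, 0.10, 0.10, 0.20, 0.10]), "human"),
    (fuse([0.35] * 70, [0.85, 0.75, 0.15, 0.10, 0.20, 0.10]), "human"),
    (fuse([0.90] * 70, [0.10, 0.10, 0.10, 0.20, 0.10, 0.10]), "rock"),
    (fuse([0.95] * 70, [0.10, 0.15, 0.10, 0.20, 0.10, 0.10]), "rock"),
]
model = NearestCentroid().fit([x for x, _ in train], [y for _, y in train])

print(model.predict(fuse([0.32] * 70, [0.88, 0.78, 0.12, 0.10, 0.20, 0.10])))  # human
# Touch alone leans toward "rock" here, but the odor profile pulls the fused vector to "human".
print(model.predict(fuse([0.65] * 70, [0.90, 0.80, 0.10, 0.10, 0.20, 0.10])))  # human
```

Scaling each modality by 1/√n keeps the 70 tactile channels from swamping the 6 olfactory ones in the distance calculation. The second query is a toy version of the complementarity the article describes: an ambiguous touch reading is resolved by smell.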

IT Home learned that researchers at the Shanghai Institute of Microsystem worked with the Shanghai Fire Research Institute of the Ministry of Emergency Management and, drawing on field research with front-line fire rescue units, realistically recreated a scene in which a human body is buried under a pile of rubble. In this environment, the system recognized 11 typical objects, including the human body, with a tactile-olfactory synesthesia recognition accuracy of 96.9%, 15% higher than with a single sense.

Compared with the touch-only perception study (548 sensors) published by MIT in Nature (DOI: 10.1038/s41586-019-1234-z), this work combines touch (70 sensors) and smell (6 sensors) and uses only about 1/7 as many sensors, yet achieves more satisfactory recognition. The reduced sensor scale and sample size make it better suited to rapid response in complex environments with limited resources. In addition, in situations common in real rescues, such as interfering gases or partial damage to the device, the complementarity of the two sensing modalities and the rapid adjustment of the neural network allow the system to maintain good accuracy (>80%).
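The "about 1/7" figure follows directly from the sensor counts in the comparison:

$$
\frac{70 + 6}{548} = \frac{76}{548} \approx 0.139 \approx \frac{1}{7}
$$

Equivalently, the MIT system used roughly 548 / 76 ≈ 7.2 times as many sensors.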

The research, titled "A star-nose-like tactile-olfactory bionic sensing array for robust object recognition in non-visual environments", was published in Nature Communications on January 10, 2022.