Author | John Russell

Translated by | Sambodhi

Planning | Ling Min

At Nvidia's spring GTC conference, Bill Dally, the company's chief scientist and senior vice president of research, gave an overview of Nvidia's R&D organization and detailed some of its current priorities. This year Dally focused on the AI tools that Nvidia is both developing and using itself, a clever form of reverse marketing. For example, Nvidia has used AI to make GPU design more efficient.

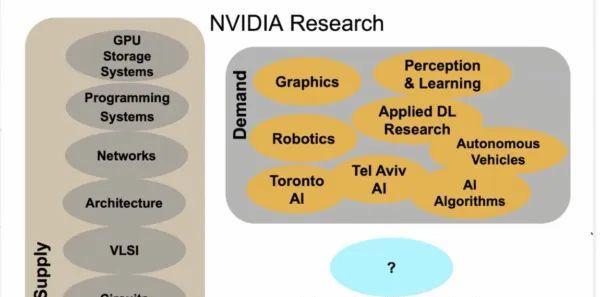

Dally said in this year's talk: "We are a group of about 300 people who try to look ahead of where Nvidia's products are today. We are like high beams trying to illuminate things in the distance. We are loosely divided into two halves. One half provides technology for GPUs. It improves the GPU itself, from circuits and VLSI design to architecture, networks, programming systems, and the storage systems that go into GPUs and GPU systems."

"The other half of Nvidia research tries to develop software systems and techniques that run well on GPUs. We have been pushing computer graphics forward, with three different graphics research groups, along with five different AI groups. Using GPUs to run AI is now a huge undertaking, and it keeps growing. We also have a group dedicated to robotics and driverless cars, and we have some geographically oriented labs, like our AI labs in Toronto and Tel Aviv."

Occasionally, Nvidia pulls a few people out of these groups to pursue an ambitious, long-shot project. One such effort produced Nvidia's real-time ray tracing technology.

As always, Dally's talk overlapped somewhat with his earlier ones, but there was also new material. The group has certainly grown, up from about 175 people in 2019, as work on driverless systems and robotics has expanded. Dally said that about a year ago Nvidia recruited Marco Pavone from Stanford University to lead its new driverless car team. He said little about CPU design, but work in that area has no doubt intensified as well.

What follows is a short, lightly edited excerpt of Dally's comments on Nvidia's growing use of AI in chip design, along with a few supporting slides.

Mapping voltage drops with graph neural networks

"As AI experts, we naturally want to use AI to design better chips. We do this in a few different ways. The first and most obvious is to take existing computer-aided design tools and combine them with AI. For example, we can take a map of where power is consumed in the GPU and predict how far the voltage will drop. This is the so-called IR drop: the voltage drop given by current multiplied by resistance. Doing this with conventional CAD software takes three hours," Dally said.

Because this is an iterative process, Dally explained, the alternative is to train an AI model on the same data.

"We do this over a bunch of designs, and then we can basically feed in the power map, and inference takes only three seconds. Of course, if you count the time for feature extraction, it takes 18 minutes. Either way, we get results very quickly. In a similar case, we use a graph neural network rather than a convolutional neural network to estimate how often each node in the circuit switches, which in turn drives the power input in the previous example. Again, we get accurate power estimates in far less time than traditional methods take."

Predicting parasitics with graph neural networks

Dally said one of his favorite projects uses graph neural networks to predict parasitics. Years ago, he worked as a circuit designer. Back then, circuit design was a very iterative process: you would draw a transistor schematic, but you would not know how it performed until a layout designer took the schematic, laid it out, and extracted the parasitics. Only then could you run the circuit simulation.

"You would go back, modify your schematic, and hand it to the layout designer again, which was a very long, iterative, inhuman, labor-intensive process. What we can do now is train neural networks to predict the parasitics without having to do the layout. This lets circuit designers iterate very quickly, without the manual layout step in the loop. The chart here shows that our predictions of these parasitics are very accurate compared to the ground truth."

Placement and routing challenges

Dally said AI can also predict routing congestion, which is critical to chip layout.

In the normal flow, developers take a netlist and run it through placement and routing, which can be time-consuming and often takes days. Only then do they see the actual congestion and discover that the initial placement is not good enough. They then have to rework it and place the macros differently to avoid the red areas (as shown in the image below).

In those areas, too many wires are trying to pass through a given region, a bit like a traffic jam. "What we can do now is skip the full placement and routing run. We can take the netlist and, using a graph neural network, basically predict where the congestion will occur, with fairly high accuracy. It's not perfect, but it shows where the problem areas are, and we can then act on that and iterate quickly without having to do a full placement and routing run," he said.
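The idea of running a graph neural network over a netlist can be sketched as one round of message passing, in which every cell updates its features from its neighbors. This is a toy illustration, not Nvidia's model: the function `message_passing_step`, the two-element feature vectors, and the hand-picked weight matrices are all assumptions. A real model would learn its weights from designs that have already been laid out and routed, and would add an output layer that turns each node's features into a congestion score.

```python
def matvec(matrix, vector):
    """Multiply a small matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def message_passing_step(features, edges, w_self, w_nbr):
    """One mean-aggregation message-passing layer with a ReLU.

    features: dict mapping node name -> feature vector (list of floats)
    edges:    list of (node, node) pairs, treated as undirected
    w_self, w_nbr: weight matrices (placeholders here, learned in practice)
    """
    # Build an adjacency list from the edge list.
    neighbors = {node: [] for node in features}
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)

    updated = {}
    for node, own in features.items():
        nbrs = neighbors[node]
        dim = len(own)
        if nbrs:
            # Average the neighbors' feature vectors element by element.
            mean = [sum(features[n][k] for n in nbrs) / len(nbrs)
                    for k in range(dim)]
        else:
            mean = [0.0] * dim
        # Combine the node's own features with the aggregated message.
        combined = [s + m for s, m in
                    zip(matvec(w_self, own), matvec(w_nbr, mean))]
        updated[node] = [max(0.0, x) for x in combined]  # ReLU
    return updated


if __name__ == "__main__":
    # Assumed toy netlist: three cells connected in a chain.
    feats = {"g1": [1.0, 0.0], "g2": [0.0, 1.0], "g3": [1.0, 1.0]}
    nets = [("g1", "g2"), ("g2", "g3")]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    half = [[0.5, 0.0], [0.0, 0.5]]
    print(message_passing_step(feats, nets, identity, half))
```

Stacking several such layers lets information travel across more of the netlist, so a cell's prediction can reflect crowding several connections away.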

Automating standard cell migration

Dally said the approaches above all use AI to critique designs made by humans. What is really exciting, he said, is using AI to actually do the design.

As an example, Dally described NVCell, a system that uses a combination of simulated annealing and reinforcement learning to design standard cell libraries. Every new process technology, such as a move from 7nm to 5nm, needs its own cell library. "We actually have thousands of these cells, and they have to be redesigned in the new technology under a very complex set of design rules," Dally said.
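Simulated annealing, one of the two techniques Dally names, can be illustrated with a toy placement problem. The sketch below is a generic textbook-style annealer, not NVCell: the cost function `wirelength`, the single-row placement, the swap move, and the cooling schedule are all assumptions made for illustration, and the talk does not say how NVCell applies annealing.

```python
import math
import random


def wirelength(order, nets):
    """Total distance between connected cells placed in a single row."""
    position = {cell: i for i, cell in enumerate(order)}
    return sum(abs(position[a] - position[b]) for a, b in nets)


def anneal_placement(cells, nets, steps=2000, t_start=2.0, t_end=0.01, seed=0):
    """Search for a low-wirelength ordering by simulated annealing."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    order = list(cells)
    cost = wirelength(order, nets)
    best_order, best_cost = order[:], cost

    for step in range(steps):
        # Geometric cooling from t_start down to t_end.
        temperature = t_start * (t_end / t_start) ** (step / (steps - 1))
        # Propose a move: swap two randomly chosen cells.
        i, j = rng.sample(range(len(order)), 2)
        order[i], order[j] = order[j], order[i]
        new_cost = wirelength(order, nets)
        delta = new_cost - cost
        # Always accept improvements; accept worse moves with a
        # probability that shrinks as the temperature falls.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            cost = new_cost
            if cost < best_cost:
                best_order, best_cost = order[:], cost
        else:
            order[i], order[j] = order[j], order[i]  # undo the swap
    return best_order, best_cost


if __name__ == "__main__":
    # Assumed toy netlist: five cells connected in a chain.
    chain = [("a", "b"), ("b", "c"), ("c", "d"), ("d", "e")]
    print(anneal_placement(["a", "d", "b", "e", "c"], chain))
```

Accepting some worse moves early on is what lets the search escape poor local arrangements, while the falling temperature makes it settle on a good one.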

According to Dally, Nvidia does this mainly by using reinforcement learning to place the transistors. Once the transistors are placed, there are usually many design rule errors, and working through them is much like playing a video game, which is exactly what reinforcement learning is good at.

"Using reinforcement learning on Atari video games is a well-known example. This is like an Atari video game, except that the game is fixing design rule errors in a standard cell. By using reinforcement learning to work through and correct these design rule errors, we can basically complete the design of our standard cells."

As shown in the figure below, the tool completes 92% of the cells in the library with no design rule or electrical rule errors. In addition, 12% of the cells are smaller than the human-designed versions. "In general, across cell complexity, this tool produces cells that are as good as or better than human-designed cells," Dally said.

One benefit for Nvidia is a large saving in labor. Porting a library to a new technology used to take a group of about 10 people roughly a year. Now it can be done by running on multiple GPUs for a few days, and humans can then work on the 8% of cells that the tool did not complete. "In many cases, we end up with a better design. So it saves labor, and it is also better than human design."

Dally's talk covered much more about Nvidia's R&D, which focuses mainly on products rather than basic science. If you are interested, see Dally's 2019 and 2021 talks on Nvidia R&D. You will find that his description of the research organization and how it works has not changed much, but the topics have.

https://www.hpcwire.com/2022/04/18/nvidia-rd-chief-on-how-ai-is-improving-chip-design