Report from Heart of the Machine (Synced)

Editors: Zenan, Xiaozhou

"Deeper neural networks are more difficult to train. We present a residual learning framework to ease the training of networks that are substantially deeper than those used previously." By now, countless researchers have read these opening lines of the abstract closely.

This is a classic paper in computer vision. Li Mu once said that if you are using a convolutional neural network, there is about a 50 percent chance that you are using ResNet or one of its variants.

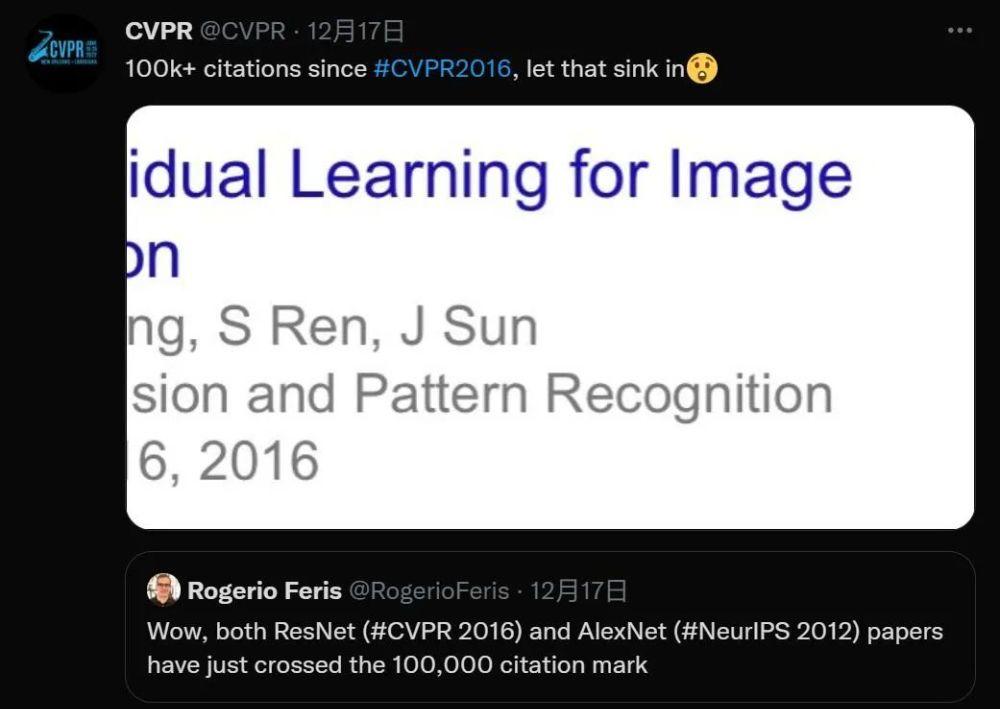

A few days ago, people noticed that the ResNet paper's citation count had quietly passed 100,000, just six years after the paper was submitted.

Deep Residual Learning for Image Recognition won the Best Paper Award at CVPR 2016, the top computer vision conference. ResNet has been cited several times as often as the most-cited NeurIPS paper, Attention Is All You Need. The citation count reflects more than how well ResNet has held up over time. It also shows how much the field of AI, and computer vision in particular, has boomed.

Paper link: https://www.cv-foundation.org/openaccess/content_cvpr_2016/papers/He_Deep_Residual_Learning_CVPR_2016_paper.pdf

The paper's four authors, He Kaiming, Zhang Xiangyu, Ren Shaoqing and Sun Jian, are all now well known in artificial intelligence. When they wrote the paper, all four worked at Microsoft Research Asia (MSRA). MSRA is one of the few purely academic research institutions in industry that receives sustained, heavy investment from a technology giant.

As for the paper itself, residual networks were proposed to address the degradation problem that appears when a deep neural network (DNN) has too many layers. As more layers are added, accuracy first saturates and then degrades rapidly. This degradation is not caused by overfitting, because the training error itself goes up.

Suppose network A has a training error of x. Build network B by stacking several extra layers, which we call C, on top of A. If C passes A's output through unchanged, as an identity mapping, then B computes exactly the same function as A, and its training error is also x. So B's training error should never be higher than A's. If B nonetheless ends up with a higher training error in practice, then learning an identity mapping with the added layers C is evidently not a trivial problem for the optimizer.

To solve this problem, the module in the preceding figure adds a direct shortcut connection from the input to the output, which carries the identity mapping on its own. The added layers C then only need to learn what must be added to the input, that is, the difference between the desired output and the input. Because C learns only this residual, the module is called a residual module.
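The short plain-Python sketch below illustrates the argument above without assuming any deep-learning library. The helper names (`matvec`, `relu`, `plain_block`, `residual_block`) are hypothetical, and biases and batch normalization are left out for brevity. It shows one contrast. When the weights of the added layers are all zero, a residual block passes its non-negative, post-ReLU input through unchanged. A plain block of the same shape collapses the same input to zero.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU."""
    return [max(0.0, a) for a in v]


def plain_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Two stacked layers with no shortcut: relu(W2 relu(W1 x))."""
    return relu(matvec(w2, relu(matvec(w1, x))))


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Residual block: relu(F(x) + x), where F(x) = W2 relu(W1 x).

    The layers only have to learn the residual F(x) = H(x) - x;
    the shortcut adds x back before the final ReLU.
    """
    f = matvec(w2, relu(matvec(w1, x)))
    return relu([fi + xi for fi, xi in zip(f, x)])


if __name__ == "__main__":
    x = [1.0, 2.0, 0.5]  # output of a previous ReLU, so non-negative
    zeros = [[0.0] * 3 for _ in range(3)]
    print(plain_block(x, zeros, zeros))     # [0.0, 0.0, 0.0]
    print(residual_block(x, zeros, zeros))  # [1.0, 2.0, 0.5]
```

This matches the intuition given in the paper. If an identity mapping is close to optimal, a solver can simply push the residual branch's weights toward zero. That is much easier than making a stack of nonlinear layers fit an identity mapping.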

In addition, like GoogLeNet, ResNet places a global average pooling layer before the final classification layer. With these changes, ResNet can be trained at depths of up to 152 layers. It achieves higher accuracy than VGGNet and GoogLeNet while being more computationally efficient than VGGNet. On ImageNet, ResNet-152 reaches a top-5 accuracy of 95.51%, meaning that for 95.51% of images the correct class is among its five highest-scoring predictions.
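The plain-Python sketch below illustrates two ideas from the paragraph above. The first is global average pooling, which reduces each channel of the last feature map to its mean before the classification layer. The second is top-k accuracy, the metric behind the 95.51% figure (with k = 5). The function names and toy numbers are illustrative assumptions and do not come from the paper.

```python
from typing import List, Sequence

Channel = List[List[float]]  # one H x W feature map


def global_average_pool(channels: Sequence[Channel]) -> List[float]:
    """Reduce each H x W channel to its mean: C maps -> vector of length C."""
    return [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in channels
    ]


def linear(weights: Sequence[Sequence[float]], bias: Sequence[float],
           x: Sequence[float]) -> List[float]:
    """Fully connected classification layer: one score per class."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int],
                   k: int = 5) -> float:
    """Fraction of samples whose true label is among the k highest scores."""
    hits = 0
    for s, label in zip(scores, labels):
        top = sorted(range(len(s)), key=lambda i: s[i], reverse=True)[:k]
        hits += label in top
    return hits / len(labels)


if __name__ == "__main__":
    feature_maps = [
        [[1.0, 3.0], [5.0, 7.0]],  # channel 0, mean 4.0
        [[0.0, 0.0], [0.0, 2.0]],  # channel 1, mean 0.5
    ]
    pooled = global_average_pool(feature_maps)
    print(pooled)  # [4.0, 0.5]
    print(linear([[1.0, 0.0], [0.0, 2.0]], [0.0, 1.0], pooled))  # [4.0, 2.0]
```

Because pooling collapses each channel to a single number, the classification layer's size depends only on the number of channels, not on the spatial size of the final feature map.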

ResNet's architecture is similar to VGGNet's and consists mainly of 3x3 convolutional kernels. This makes it possible to build a residual network by adding shortcut connections between layers of a VGG-style network. The figure below shows how a residual network is derived from the early layers of VGG-19.
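3x3 convolutions and shortcuts fit together easily for a simple reason. A 3x3 convolution with stride 1 and one pixel of zero padding keeps the feature map's height and width unchanged, so the input can be added element-wise to the output. The single-channel plain-Python sketch below illustrates this. Multiple channels, biases and batch normalization are omitted, and the function names are hypothetical. When a stage changes the number of channels or downsamples, the paper uses either zero-padded identity shortcuts or projection shortcuts instead. This sketch does not cover those cases.

```python
from typing import List

Grid = List[List[float]]


def conv3x3_same(img: Grid, kernel: Grid) -> Grid:
    """Single-channel 3x3 convolution, stride 1, zero padding 1.

    Like most deep-learning libraries this computes cross-correlation
    (the kernel is not flipped). The output has the same H x W as the input.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:  # outside = zero padding
                        acc += img[ii][jj] * kernel[di + 1][dj + 1]
            out[i][j] = acc
    return out


def add_shortcut(x: Grid, fx: Grid) -> Grid:
    """Identity shortcut: element-wise x + F(x); shapes must match."""
    assert len(x) == len(fx) and len(x[0]) == len(fx[0]), "shape mismatch"
    return [[a + b for a, b in zip(rx, rf)] for rx, rf in zip(x, fx)]


if __name__ == "__main__":
    x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
    box = [[1.0] * 3 for _ in range(3)]
    fx = conv3x3_same(x, box)  # still 2 x 3, so the shortcut can be added
    print(add_shortcut(x, fx))  # [[13.0, 23.0, 19.0], [16.0, 26.0, 22.0]]
```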

Part of the ResNet architecture. Many people say He Kaiming's papers are easy to follow, because you can grasp the ideas just by looking at the figures.

ResNet's strong representational power has improved performance on many computer vision tasks beyond image classification, including object detection and face recognition. Since ResNet was introduced in 2015, many researchers have modified it to create variants suited to specific tasks. This is another important reason for its exceptionally high citation count.

Around the time ResNet passed 100,000 citations, the 2012 AlexNet paper, another classic, also passed 100,000.

AlexNet is a convolutional neural network designed by Alex Krizhevsky. It won the 2012 ImageNet competition and was originally trained on GPUs using CUDA. Its top-5 error rate was more than 10 percentage points lower than that of the previous year's winner and 10.8 percentage points lower than that of the runner-up. The paper's second author is Ilya Sutskever and its third is Turing Award winner Geoffrey Hinton. The three competed as the SuperVision team from the University of Toronto.

Paper link: https://papers.nips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf

AlexNet has 60 million parameters and 650,000 neurons, arranged in 8 layers: 5 convolutional layers and 3 fully connected layers. AlexNet was the first to successfully combine techniques such as ReLU, Dropout and LRN (local response normalization) in a large convolutional neural network.
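The 60 million figure can be checked with simple arithmetic. The sketch below counts weights plus biases layer by layer. The `LAYERS` table is a reconstruction for illustration, based on the layer shapes described in the AlexNet paper. conv2, conv4 and conv5 each see only half of the previous layer's channels because the original network was split across two GPUs. The table also assumes that the final convolutional stage outputs a 6x6x256 map, which feeds the first fully connected layer.

```python
from typing import List, Tuple

# (name, kernel_h, kernel_w, in_channels_per_kernel, out_channels)
# Fully connected layers are written as 1x1 "kernels" over a flattened input.
# Shapes follow the two-GPU layout described in the AlexNet paper (assumed here).
LAYERS: List[Tuple[str, int, int, int, int]] = [
    ("conv1", 11, 11, 3, 96),
    ("conv2", 5, 5, 48, 256),    # grouped: each kernel sees 48 of 96 channels
    ("conv3", 3, 3, 256, 384),   # connects across both GPUs
    ("conv4", 3, 3, 192, 384),   # grouped
    ("conv5", 3, 3, 192, 256),   # grouped
    ("fc6", 1, 1, 6 * 6 * 256, 4096),
    ("fc7", 1, 1, 4096, 4096),
    ("fc8", 1, 1, 4096, 1000),
]


def layer_params(kh: int, kw: int, cin: int, cout: int) -> int:
    """Weights (kh * kw * cin per output channel) plus one bias per output."""
    return kh * kw * cin * cout + cout


def count_params(layers: List[Tuple[str, int, int, int, int]]) -> int:
    """Total trainable parameters over all listed layers."""
    return sum(layer_params(kh, kw, cin, cout) for _, kh, kw, cin, cout in layers)


if __name__ == "__main__":
    for name, kh, kw, cin, cout in LAYERS:
        print(f"{name:6s}{layer_params(kh, kw, cin, cout):>12,d}")
    print(f"total: {count_params(LAYERS):,}")  # total: 60,965,224
```

Most of the budget sits in the fully connected layers: the three of them account for about 58.6 million parameters, while the five convolutional layers hold only about 2.3 million.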

A CVPR 2016 paper and a NeurIPS 2012 paper have both passed 100,000 citations, which highlights how popular AI has become in recent years. It is also worth noting that AlexNet won the 2012 ImageNet Large Scale Visual Recognition Challenge and ResNet won the 2015 edition.

According to Google Scholar, Kaiming He, the first author of ResNet, has published 69 papers in total and has an h-index of 59.

He Kaiming is a well-known researcher in AI. In 2003 he ranked first overall in Guangdong Province's college entrance examination, with a standard score of 900, and was admitted to the fundamental science class of Tsinghua University's Department of Physics. After graduating from that program, he pursued a PhD at the Multimedia Laboratory of the Chinese University of Hong Kong, supervised by Tang Xiaoou. In 2007 he joined the Visual Computing Group at Microsoft Research Asia as an intern, with Sun Jian as his internship supervisor. After receiving his PhD in 2011, he joined Microsoft Research Asia as a researcher. In 2016 he moved to Facebook AI Research (FAIR) as a research scientist.

His research has won several awards. At CVPR, the top computer vision conference, he received the Best Paper Award in 2009 and again in 2016, and one of his 2021 papers was a best paper candidate. He also won the ICCV 2017 Marr Prize for Mask R-CNN and co-authored that year's best student paper.

His most recent work to draw wide attention is "Masked Autoencoders Are Scalable Vision Learners," published in November. It proposes a simple, general self-supervised pre-training method for vision models and may open a new direction for large models in computer vision.