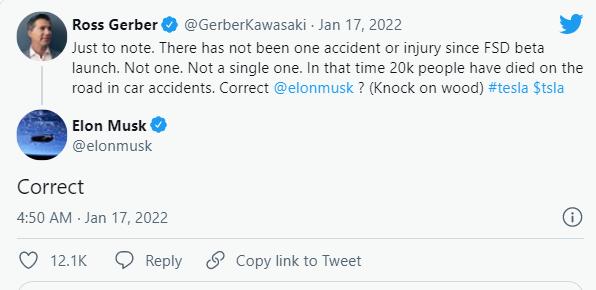

On January 18, Tesla CEO Elon Musk claimed that FSD (Full Self-Driving) Beta had not caused a single accident since its launch. A day earlier, Tesla shareholder Ross Gerber had tweeted that in the more than a year since FSD Beta was released, there had not been a single accident or injury, while about 20,000 people died in traffic accidents in the United States over the same period. Musk shared the tweet and replied emphatically: "That's right."

Just days ago, however, Musk's confident claim was called into question. A video blogger called AI Addict shared footage of driving with FSD in San Jose, California, a city of just over 1 million people. According to the video description, the car was running FSD Beta v10.10 (2021.44.30.15), released on January 5 this year. For most of the video the vehicle performs well under FSD control. At about the 3:30 mark, however, after making a right turn, it fails to recognize the bollards (anti-collision posts) at the side of the road and drives into them without hesitation, startling the people in the car. Fortunately, the driver had been watching the road the whole time and grabbed the steering wheel immediately, so a more serious accident was avoided.

Tesla has, of course, long warned users on its web pages and in the FSD release notes that FSD is in an early stage of testing and must be used with extra caution. It may make the wrong decision at the worst possible moment, so drivers must keep their hands on the wheel at all times and pay extra attention to the road. They need to keep their eyes on the road and be ready to act immediately, especially around blind corners, at intersections, and on narrow roads. Judging by this video, such warnings are still necessary.

The safety of FSD Beta has been questioned before, with different problems appearing in different versions. In version 9.0, for example, the first release after Tesla's switch to a camera-only system, reported problems included failing to recognize roadside trees, bus lanes, and one-way streets, and driving straight into a parking space after a turn.

Critics also argue that Tesla is acting irresponsibly by shipping unfinished features directly to consumers and letting them use the system on public roads.

Tesla's FSD system uses cameras to recognize and react to objects around the car. Under normal conditions it can identify roadside obstacles, traffic cones, special-lane signs, and stop signs. The feature is currently available only to paying owners in the United States.

FSD Beta has been officially available since the end of September 2020. Interested owners can apply through the mobile app, and Tesla decides whether to enable the feature based on the owner's recent driving score; in practice, most owners are able to get access. Tesla monitors and collects driving data while the system is in use and uses it to refine and upgrade the software, which is why FSD updates arrive so frequently. In the autonomous driving industry, the prevailing view is that lidar combined with high-definition maps is the path to self-driving. Tesla has taken the opposite approach, abandoning lidar entirely and relying on what its cameras see in real time.

Three days after the video was uploaded, Tesla had still not explained whether the accident was caused by FSD failing to identify the row of green bollards at the side of the road.

On February 1, 2022, following pressure from the National Highway Traffic Safety Administration (NHTSA), Tesla recalled about 53,000 electric vehicles over a feature that conflicts with traffic law: a "rolling stop" function that let vehicles roll through all-way stop intersections at up to 5.6 miles per hour. Under U.S. traffic rules, a stop sign is treated much like a red light: even when there are no cars or pedestrians around, drivers must come to a complete stop at the white stop line (commonly taught as a stop of about three seconds) and confirm the intersection is clear before continuing. Because Tesla's software instead let the car roll through at low speed, the vehicles were recalled.

Musk then argued that the feature posed no safety issue. "The car just slows down to about 2 mph (3.2 km/h) and, if the view is clear and there are no cars or pedestrians, keeps going," he said.

In mid-January, Musk had just finished claiming that FSD Beta had "never" caused an accident since the program began more than a year earlier. A recent test video from an owner appears to contradict him: because of a misjudgment by FSD Beta, the car drove straight into the bollards at the side of the road. Admittedly, this accident was minor. The car was not badly damaged and no one was hurt, and it is unclear whether an incident like this falls within what Musk counts as an "accident."

NHTSA has also cautioned: "No vehicle available for purchase today is capable of driving itself, and every vehicle requires a human driver to be in control. While advanced driver assistance features can help drivers avoid collisions or traffic accidents, drivers must always remain aware of driving and road conditions."