The veteran photographers' "golden rule" seems to have failed on smartphones.

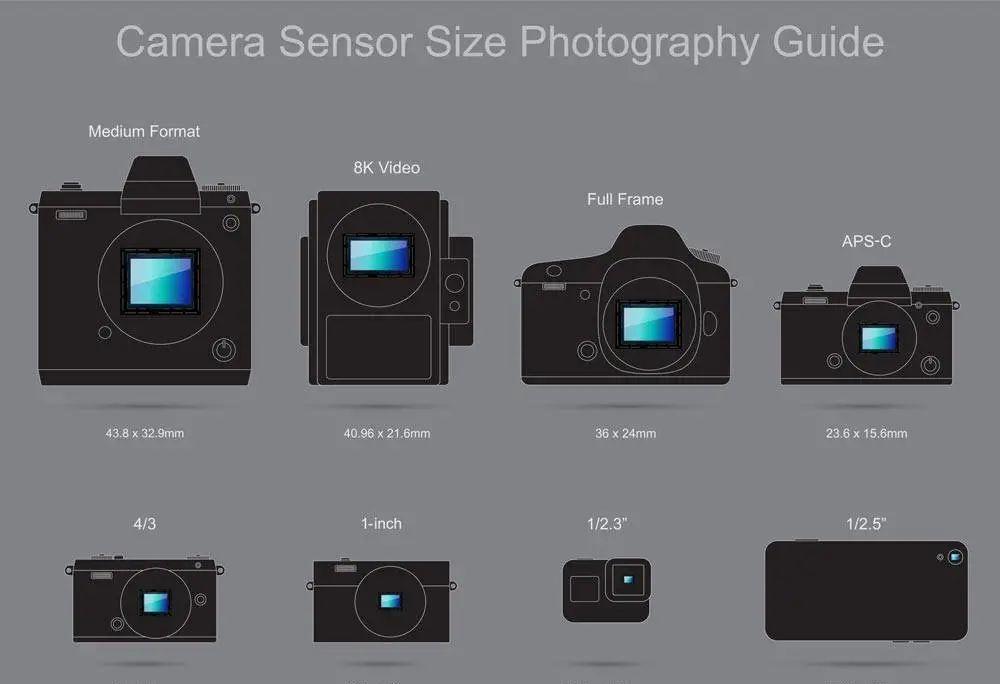

Different "cameras" come with different sensor sizes. Image credit: henrys.com

Smartphone camera sensors have gradually grown larger, but they still have not crossed the one-inch threshold. In other words, on physical specifications alone, phones still fall short of even the entry-level Sony RX100 "Black Card" compacts.

Sony RX100 "Black Card" vs Xiaomi Mi 11 Ultra. Image courtesy of: dpreview

Even the Xiaomi Mi 11 Ultra, which was pitted against the Sony "Black Card" at its launch event, uses a Samsung GN2 main sensor. At 1/1.12 inch, that sensor is still some way short of 1 inch.
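Marketing "inch" sizes do not give a sensor's actual diagonal, so the gap between formats can be hard to picture. The sketch below converts the fraction to a size using an assumed rule of thumb: a "1-inch type" sensor has a diagonal of about 16 mm. This is a legacy video-tube convention and only approximate; it tends to understate very small formats. Area ratios are computed under the further assumption that both sensors share the same aspect ratio. The helper names are hypothetical.

```python
# Assumption: a "1-inch type" sensor has a ~16 mm diagonal (about 13.2 x 8.8 mm).
# This is a legacy convention, not an exact standard, and it is least
# accurate for very small formats.
MM_PER_NOMINAL_INCH = 16.0


def nominal_diagonal_mm(denominator: float) -> float:
    """Approximate diagonal in mm for a '1/denominator inch' sensor."""
    return MM_PER_NOMINAL_INCH / denominator


def area_ratio(denom_a: float, denom_b: float) -> float:
    """Area of sensor A relative to sensor B, assuming the same aspect ratio."""
    # For a fixed aspect ratio, area scales with the square of the diagonal.
    return (nominal_diagonal_mm(denom_a) / nominal_diagonal_mm(denom_b)) ** 2


for label, denom in [("1/2.8 inch", 2.8), ("1/1.12 inch (GN2)", 1.12), ("1 inch", 1.0)]:
    print(f"{label:18s} ~{nominal_diagonal_mm(denom):5.2f} mm diagonal, "
          f"{area_ratio(denom, 1.0):.0%} of 1-inch area")
```

Under these assumptions, a 1/1.12-inch sensor has roughly four-fifths of the area of a 1-inch type. That is close, but still a real gap.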

realme GT2 Pro with an IMX766 main camera

Today, measured by CMOS physical size, the Xiaomi 12 and 12 Pro are still the front-runners among the first wave of Snapdragon 8 Gen 1 phones. Yet sensor size is no longer the main yardstick for judging a phone's imaging system.

Seen this way, the pursuit of an extra-large main sensor seems to have become a thing of the past.

What does "a bigger sensor crushes everything" really mean?

Or rather, what advantages does a larger sensor bring?

Simply put, larger sensors get cleaner photos, better low-light performance, and better "bokeh".

To get the same result, a smaller sensor has to gather more light per unit area: it needs a lower ISO, a longer shutter time and a larger aperture.
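One common way to quantify this is "equivalence". For the same field of view and shutter speed, a small sensor's f-number multiplied by its crop factor gives the full-frame aperture with the same depth of field and total light. Its ISO multiplied by the crop factor squared gives a comparable noise level. The sketch below applies that relation. The f/1.9, ISO 100 and 14.3 mm diagonal values are hypothetical inputs chosen for illustration, not measured specs of any phone.

```python
import math

# Full-frame reference: 36 x 24 mm, diagonal ~43.3 mm.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)


def crop_factor(diagonal_mm: float) -> float:
    """How much smaller a sensor's diagonal is than full frame."""
    return FULL_FRAME_DIAGONAL_MM / diagonal_mm


def full_frame_equivalent(f_number: float, iso: float, diagonal_mm: float) -> tuple[float, float]:
    """Full-frame 'equivalent' aperture and ISO for the same field of view,
    shutter speed and total light: the f-number scales by the crop factor,
    the ISO by its square."""
    c = crop_factor(diagonal_mm)
    return f_number * c, iso * c * c


# Hypothetical phone main camera: f/1.9, ISO 100, ~14.3 mm sensor diagonal.
eq_f, eq_iso = full_frame_equivalent(1.9, 100, 14.3)
print(f"crop ~{crop_factor(14.3):.2f}x -> like f/{eq_f:.1f} at ISO {eq_iso:.0f} on full frame")
```

Equivalence is a simplification. It ignores differences in sensor technology and processing, but it shows why a bright phone lens does not behave like the same f-number on a large sensor.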

"Big" has big drawbacks, and "small" has small advantages. Image courtesy of: dpreview

In terms of image quality, large sensors win decisively, but they come at a price.

To accommodate a larger sensor, cameras and lenses grow severalfold in volume. To spread light evenly across the sensor, lenses need more complex optical designs, which make them much heavier. And all of that costs money.

A side-by-side size comparison of a full-frame system and an M43 (Micro Four Thirds) system. Image credit: wildernessshots

Small sensors are the opposite: size, weight and cost all go down.

Loosely speaking, sensor size scales with image quality, but it also scales with bulk, weight and price.

There is always a sweet spot between the two extremes. In cameras, it is full frame; on smartphones, it may be the one-inch sensor that manufacturers are striving for.

That held true until "computational photography" arrived.

"Computational photography" breaks the deadlock

Whether in a camera or a smartphone, the imaging principle is the same. The lens directs light onto the CMOS sensor, the sensor converts light into electrical signals, and the image signal processor (ISP) then reconstructs an image from those signals.
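The final step of this process, in which an image signal processor (ISP) turns raw sensor data into a viewable image, runs through several stages. The toy sketch below applies three classic stages to a single pixel that is assumed to be already demosaiced: black-level subtraction, white balance and gamma encoding. The black level, white-balance gains and gamma are hypothetical values, not any real sensor's calibration. Real ISPs also demosaic, denoise, sharpen and apply colour and tone mapping.

```python
# Hypothetical calibration values for a 10-bit raw sensor (illustration only).
BLACK_LEVEL = 64             # raw value recorded for "no light"
WHITE_LEVEL = 1023           # maximum 10-bit raw value
WB_GAINS = (2.0, 1.0, 1.5)   # hypothetical R, G, B white-balance gains
GAMMA = 2.2


def isp_pixel(raw_rgb: tuple[int, int, int]) -> tuple[int, int, int]:
    """Convert one demosaiced raw RGB pixel to an 8-bit display value."""
    out = []
    for value, gain in zip(raw_rgb, WB_GAINS):
        # 1. Subtract the black level and normalise to the 0..1 range.
        linear = max(value - BLACK_LEVEL, 0) / (WHITE_LEVEL - BLACK_LEVEL)
        # 2. Apply the white-balance gain, clipping anything above full scale.
        linear = min(linear * gain, 1.0)
        # 3. Gamma-encode for display and quantise to 8 bits.
        out.append(round(255 * linear ** (1 / GAMMA)))
    return (out[0], out[1], out[2])


print(isp_pixel((300, 600, 400)))
```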

Judged by this process alone, a phone has no chance of beating a camera unless it takes an unconventional route.

A camera is very much a tool, whereas a smartphone is geared toward results. Most people use their phones to record things casually, rarely edit afterwards, and expect a one-shot deal: press the shutter and the photo is done.

iPhone 13 Pro vs Pixel 6 Pro. Image courtesy of cnet

As AI computing power in phone SoCs has grown, mobile photography has evolved from simple HDR and multi-frame night-scene stacking to real-time HDR, simulated wide-aperture bokeh and complex night modes.
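Multi-frame night modes rest on a simple statistical idea: averaging N independent noisy exposures of the same scene reduces random noise by roughly a factor of √N. The sketch below demonstrates that with simulated Gaussian noise on one pixel. It assumes perfectly aligned frames and independent noise. Real night modes must also align handheld frames, reject moving objects and handle non-Gaussian noise, none of which is modelled here.

```python
import random
import statistics


def simulate_stack(true_value: float, noise_sigma: float, frames: int,
                   trials: int = 2000, seed: int = 0) -> float:
    """Return the noise (standard deviation) left after averaging `frames`
    noisy exposures of the same, perfectly aligned pixel."""
    rng = random.Random(seed)
    averages = []
    for _ in range(trials):
        # Each frame sees the true value plus independent Gaussian noise.
        samples = [rng.gauss(true_value, noise_sigma) for _ in range(frames)]
        averages.append(statistics.fmean(samples))
    return statistics.pstdev(averages)


for n in (1, 4, 16):
    # Expect roughly 10 / sqrt(n): about 10, 5 and 2.5.
    print(n, round(simulate_stack(100.0, 10.0, n), 2))
```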

In the past, shooting with a camera often meant planning the shot and choosing settings in advance, which gave rise to classic rules of thumb such as the "Sunny 16" rule.

Smartphones, by contrast, are expected to be a cure-all that handles any scene, subject and lighting effortlessly. The traditional acquire, process and reproduce pipeline does not suit a phone's imaging system. Running a series of AI computations and tuning steps before output comes much closer to the results phone users want.
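The classic rule referred to here, usually called "Sunny 16", is a good example of that pre-planned experience. In bright sun at f/16, set the shutter to about 1/ISO seconds. At other apertures, scale the shutter time so the exposure value EV = log₂(N²/t) stays the same. The sketch below encodes that arithmetic; the function names are hypothetical.

```python
import math


def sunny16_shutter(iso: float, f_number: float = 16.0) -> float:
    """Shutter time in seconds suggested by the Sunny 16 rule for bright sun.

    At f/16 the shutter is about 1/ISO seconds; at other apertures the time
    is scaled so the exposure value EV = log2(N^2 / t) stays the same."""
    return (f_number / 16.0) ** 2 / iso


def exposure_value(f_number: float, shutter_s: float) -> float:
    """Exposure value for f-number N and shutter time t (in seconds)."""
    return math.log2(f_number ** 2 / shutter_s)


t16 = sunny16_shutter(100)        # 1/100 s at f/16
t8 = sunny16_shutter(100, 8.0)    # 1/400 s at f/8, two stops faster
print(f"f/16: 1/{1 / t16:.0f} s, f/8: 1/{1 / t8:.0f} s")
print(round(exposure_value(16, t16), 2), round(exposure_value(8, t8), 2))
```

Both settings give the same exposure value. This kind of reasoning is what a phone's automatic pipeline now takes over from the photographer.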

With computational photography now mainstream, camera hardware specifications are no longer the only measure of imaging capability. Imaging has gradually become a test of overall strength, covering both better hardware and better AI algorithms.

A more powerful ISP and stronger AI performance in the SoC now shape a phone's imaging capability alongside the CMOS sensor and the lens.

The rise of self-developed chips

In recent generations of both Apple's A-series chips and Qualcomm's 8-series chips, the neural processing engines have improved several times over, in some cases more than tenfold. That far outpaces the gains in CPU and GPU performance, whether measured by core count or transistor count.

The A15 can perform 15.8 trillion operations per second on its Neural Engine. Image courtesy of Apple

These gains are used throughout the product, and computational photography based on deep learning is one of the most important applications.

Although AI computing power on the SoC has increased significantly, it is still a general-purpose solution. As a result, imaging personality and computational photography results do not differ much between phones built on the same platform.

That is not enough for manufacturers constantly chasing differentiation, so extra chips dedicated to setting a phone's imaging apart have become very common.

The vivo X70 Pro+ with vivo's self-developed V1 ISP built in.

For example, the Surge C1 in the Xiaomi MIX FOLD, the V1 in the vivo X70 series and the MariSilicon X chip coming to the Find X5 series are all aimed at giving each brand's imaging its own character.

When I tried the vivo X70 Pro+, I was impressed by its distinctive color tone and strong low-light performance. When processing images frame by frame, the dedicated chip is also more energy-efficient than the SoC, which saves power.

OPPO's self-developed NPU, MariSilicon X. Image: OPPO

OPPO's MariSilicon X chip likewise aims to deliver more computing power for imaging with a better energy efficiency ratio.

As chip computing power and algorithms keep improving, computational photography, deep learning and AI algorithms will bring more noticeable changes to the final images ordinary users see.

Sony Xperia Pro-I with a one-inch image sensor. Image credit: Petapixel

Meanwhile, going from 1/2.8 inch to 1/1.12 inch, or even to 1 inch, makes little visible difference in many everyday phone shots unless you compare them carefully.

Change the frame of reference and the same holds. Shoot the same scene with lenses of the same specification, and most people cannot tell at a glance whether a photo came from a full-frame or an APS-C camera.

As the saying goes, "what matters most in photography is the head behind the camera", and this is probably the same idea.

Standalone, self-developed ISP chips (or imaging NPUs) and computational photography are what solve this "head behind the camera" problem.

Now that AI is everywhere, traditional hardware specifications are more like the raw ingredients of a dish. Computational photography is the chef who has mastered all the major cuisines and cooks according to the season and the mood. Its importance speaks for itself.

Everyone wants to hold the core technology in their own hands

Google Tensor.

The push to build in-house chips became the main theme of the consumer electronics industry in 2021.

Apple's M-series chips are known for their high energy efficiency and tight integration with the hardware, and they form an ecosystem barrier together with Apple's other products. Google's Tensor in the Pixel 6 Pro is also tied to Android 12 to some extent, but it has not yet formed such a barrier.

Google's custom Tensor chip, combined with Android 12, makes the Pixel 6 series the "smartest Pixel phone".

Then there are the custom ISPs and NPUs from Chinese manufacturers. The chips may be small, but they are enough to give a phone's imaging certain advantages. In time they may even become a barrier that sets the brand apart.

Ever since cameras first appeared on phones, their development has followed the same path as traditional camera makers. Larger sensors, higher pixel counts and wider apertures have always been the main theme. Manufacturers have not hesitated to let camera bumps grow and to give the camera a considerable share of the phone's internal space.

Leitz Phone 1 with a one-inch image sensor. Image credit: Leica

However, as sensors approach the upper limit of what fits in a phone, diminishing returns set in, and the gains in image quality are no longer significant.

Then came "computational photography" and self-developed ISP chips, and the direction of phone imaging changed completely. Computational photography driven by algorithms and machine learning is becoming the main battlefield.

Xiaomi Mi 11 Ultra, Leitz Phone 1, iPhone 12 Pro Max. Image credit: XDA

Large sensors, with their bigger size and higher cost, are no longer manufacturers' first choice. Instead, they prefer to "tune" proven sensors, optimizing algorithms on mature hardware and using independent imaging chips (ISPs, NPUs) to build imaging strengths that competitors cannot easily copy.

As long as the laws of physical optics remain unbroken, new phones released over the next few years will likely focus their imaging on "computational" features. These include AI processing, machine learning, signature color tones and ultra-fast focusing, while sensor size and model will matter less and less.
