Jeff Bezos once described one of his work habits:

"Friends always congratulate me after Amazon's earnings report: 'Great quarter.' I tell them that this quarter was predicted three years ago. I'm always working two or three years ahead."

Some companies are destined to live in the future. The subject of today's article, the artificial intelligence platform company Xiaoice, is one of them.

Mention artificial intelligence and plenty of images come to mind:

For example, Musk's latest Tesla Bot humanoid robot; AlphaGo, which defeated world Go champion Lee Sedol; or Iron Man's all-capable artificial intelligence assistant, "Jarvis".

All of these are imaginings of artificial intelligence, or prophecies about it.

What does the real frontier of artificial intelligence look like? Is artificial intelligence really that miraculous? How far away is the "singularity" at which this industry takes off at scale?

With these questions, which few people have been able to answer for a long time, we sat down for a chat with Li Di, CEO of Xiaoice. Li Di is a former vice president of Microsoft (Asia) Internet Engineering Institute and is known as the "father of Xiaoice".

The Xiaoice he created has always been presented as an 18-year-old girl. She has held solo exhibitions, published poetry collections, and produced China's first original virtual student.

"This kid" is quite a spendthrift, burning through the equivalent of almost 25 Beijing school-district homes every year. As of the summer of 2020, Xiaoice had reached 660 million online users, 450 million third-party smart devices, and 900 million content viewers worldwide, and accounted for nearly 60% of all AI interactions worldwide.

Xiaoice, which recently completed a Series A financing round, is now valued above the $1 billion unicorn threshold. A glance at the shareholder list shows how promising the company's prospects are: Hillhouse Group, IDG, NetEase Group, Northern Lights Venture Capital, GGV Capital, Wuyuan Capital, and so on.

This is a "cautious and brave" artificial intelligence company. It is cautious because it is soberly aware of the boundaries of artificial intelligence technology, of what can and cannot be done. It is brave because, at the cutting edge of artificial intelligence, no one knows what is right, and a wrong step could lead into the "abyss" at any time.

Xiaoice is walking alone on a lonely path.

For the 14th issue of "Evolution", we held an in-depth conversation with Li Di in July this year, covering artificial intelligence, Xiaoice the company, the industrial application of AI, business ethics, and more. We hope his thinking will inspire you:

Interviewee: Li Di, CEO of Xiaoice

Interviewers: Xu Yuebang, Pan Lei

Source: Zhenghe Island

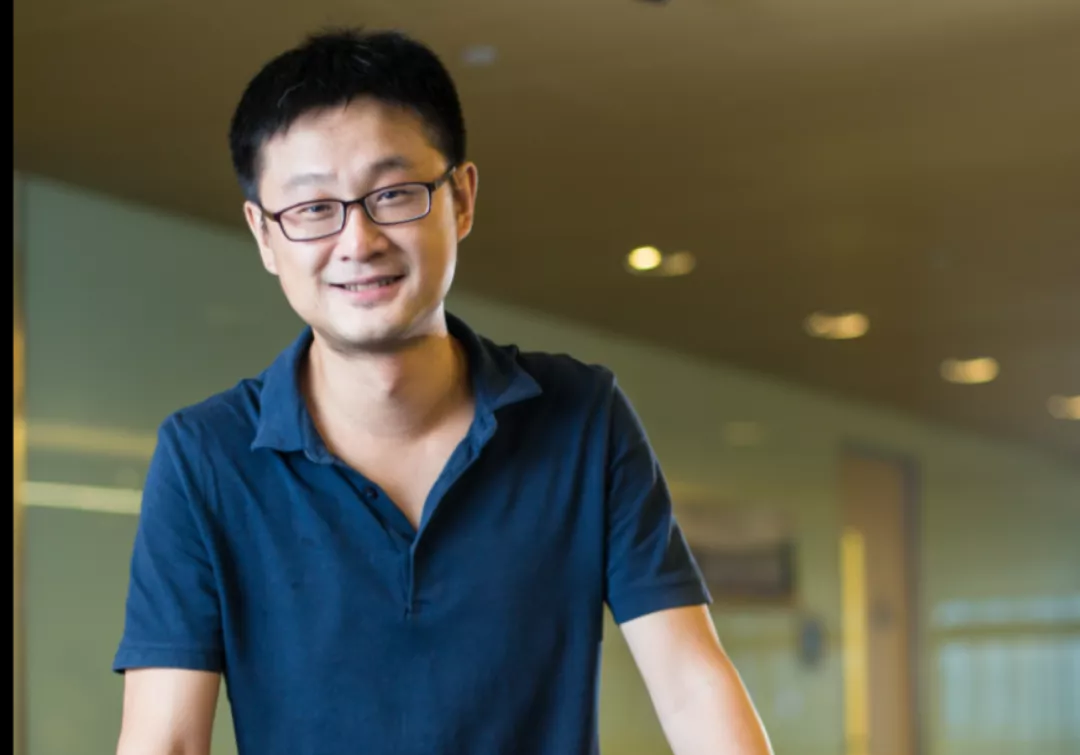

The picture shows Li Di. He always wears a solid-color polo shirt and keeps his expression well "trained", seemingly holding it deliberately within a narrow emotional range.

01. Boundary

Q: About two years ago, at the bottom of an article on NetEase Hao, I noticed a little robot called "Xiaoice" that kept leaving comments there for several days. I wondered how it had got there, but it didn't bother me. Now I find out that it is your product. Does Xiaoice still "build buildings" (stack up replies in comment threads) everywhere?

Li Di: We now cap it every day, at up to 100,000 comments. On "Today's Headlines", as soon as you @Xiaoice, it reads the news and then comments.

There is something very important behind this. The conversation systems we used to build, search engines included, dealt mostly in "facts". For example, ask the robot how high the Himalayas are, and it answers: 8,848 meters.

Later, in the course of interaction, we found that what matters more is not facts but opinions.

A fact has a single answer, so you only have to define its boundaries clearly. An opinion never has just one answer, and that variety is what is most captivating.

So we built a very large model and an opinion system. But that meant we had to experiment, so we put Xiaoice on NetEase, Sohu, Sina News and other sites to comment. We trained it not simply to give a positive or negative evaluation but to comment reasonably, so that people could see it was not talking nonsense.

Q: How did Xiaoice judge the value of an article back then?

Li Di: Two years ago, Xiaoice's principle for "building a building" was simply that whenever it felt able to reply, it would. At that time, artificial intelligence still could not understand most articles.

What it could do at the time was this: you give it a picture, and it tells you there is a bottle of water in the picture. But what that bottle of water actually is, it could not understand, let alone read an article and work out how to respond to you reasonably.

So even after the artificial intelligence of that time had read a great many articles, it could produce few responses and opinions in the end.

Two years ago, then, we were thinking about how to get Xiaoice to respond to an article at all; now it can produce so many responses every day that we have to control the volume.

Q: What are the considerations for controlling the quantity?

Li Di: When we talk about the future value of artificial intelligence, we are talking more about "boundaries".

Imagine: the replies below an article can't all come from artificial intelligence. To put it bluntly, if you wanted someone to build technology for a "water army" (paid posters who flood comment sections) today, I am afraid no water-army company could beat us. But we can't do that.

We are still more cautious, and the main consideration is still "control".

Q: It sounds a bit like "the greater the martial artist's skill, the less readily he strikes".

Li Di: Not really. In fact, the biggest difference between artificial intelligence and people is not that you can use 10,000 servers to drive an AI to beat Lee Sedol at a game of Go. That is not the point.

The point is its high concurrency (a very large number of requests reaching the server at the same time within a very short period). Suppose artificial intelligence can eventually achieve the same quality as a person; it can then do the same thing for 1 million people at once.

This is the biggest feature of true AI. When Xiaoice was "just born" in 2014, we put it on Weibo to comment, with no control over concurrency. Take Pan Shiyi's Weibo: it normally received dozens of comments a day, but that day there were more than 90,000 comments under it. Everyone saw that there was a robot called Xiaoice there, and they all came to tease it.

Think about how big a team you would have to hire to do what AI can do with one system. High concurrency is what ultimately makes it "terrible". That same day we put a lot of restrictions on Xiaoice to keep it from replying too much.

Later, we taught Xiaoice to understand short videos and discuss their content. We put it on a short-video platform to watch videos and write comments. People found that there was a "person", Xiaoice, whose comments were not bad, and they chatted with it, and it could reply. In about a week it gained more than 1 million followers.

Q: You're deliberately limiting the growth and boundaries of Xiaoice, right?

Li Di: Yes, we limit all of it, precisely to control its concurrency.

My own Xiaoice-generated voice can now log straight in to my WeChat through the voice lock. But we cannot offer this kind of voice simulation to ordinary people, because voiceprint recognition like this is used to verify and authenticate people, for payments and so on, so it would be risky. We don't do it.

The Xiaoice team is quite timid. One thing works particularly well for us: we accept that technology has boundaries. Our team doesn't need to prove that its technology is strong, so we don't have to strain to "launch satellites" (make inflated claims), and we can be relatively pragmatic.

For us, surviving is the most important thing.

Q: After becoming independent from Microsoft, what does Xiaoice rely on to support itself commercially?

Li Di: I think that whether an AI product can be commercialized does not depend on whether its solution is better than another solution, but on whether the user really has a need.

If one company's smart TV solution is indeed better than another's, but neither is as convenient as the remote control, both will lose. If the original industry is already mature, a new solution must be significantly better to be commercialized.

So in To B commercialization, we take on the difficult things first. We have picked only a few verticals to commercialize: automotive, finance, and competitive sports.

For example, in our cooperation with Wonder, the daily listed-company announcements and summaries for 26 types of companies are produced by us, and they come out in 20 seconds. Another example: at the Winter Olympics test events in February this year, in events such as freestyle skiing aerials, Xiaoice served as the artificial intelligence referee.

Generally speaking, the logic of commercialization of many domestic enterprises is to use a technology to solve a problem, and then multiply it by the Chinese market, and eventually become very large.

But Xiaoice still tends to train a lower-level, more basic framework, and to use that one framework to solve a series of problems, such as the problems of the next era. We will keep developing this possibility for decades to come.

Let me give you a concrete example. For human beings, when one classmate in a class learns physics, it does not mean that all the students have learned it automatically. Artificial intelligence is different: once Xiaoice learns to draw, sing and compose, it is as if hundreds of millions of classmates within the framework all learned the same thing in an instant.

The framework can nurture an entire forest; Xiaoice is just one of the trees.

02. From complex to simple

Q: You just mentioned an approach: from difficult to easy. People generally do things from simple to complex. Why does Xiaoice go from complex to simple? What is the logic?

Li Di: For artificial intelligence, its difficulty and ease may not be the same as the difficulty and ease that we understand.

I think people judge difficulty mainly by the accuracy and professionalism a task requires. For artificial intelligence, though, the narrower the domain of expertise and data, as in finance, the easier it is to get the hang of.

By the same token, something more universal, like an "Einstein-esque" AI system that answers any question you ask it, is easy.

The hard thing is to be like Xiaoice: no matter what you ask or how you talk to it, even if it doesn't necessarily know the answer, it can keep the topic going or steer it somewhere else. Open domain (open-domain conversational artificial intelligence), openness itself, is the most difficult.

This is the difference between artificial intelligence and human cognition today.

Q: I downloaded a Xiaoice virtual girlfriend yesterday, and it really can keep talking with you: even if you only say "good" or "OK", it still finds a topic to carry on. But I have a question: when Xiaoice talks to me, does it remember what was said in the previous sentence?

Li Di: Xiaoice not only remembers what was said in the previous sentence; it also judges the direction of the dialogue.

When we first started on the conversation engine, we understood it simply as writing down all the Qs (questions) and As (answers), and instilling so-called "life experience" into Xiaoice from the big data of the Internet.

You ask it a question or say something, and it looks up how a similar utterance was answered before and responds the same way. It is a retrieval model, and most AI companies in China today are still doing this.
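The lookup approach described here can be illustrated with a minimal sketch. It assumes a tiny hand-written list of question-answer pairs and simple word-overlap (Jaccard) similarity. The names `QA_PAIRS`, `tokenize`, `jaccard` and `retrieve_reply` are hypothetical and are not taken from Xiaoice's actual system, which the interview does not describe in detail.

```python
# Minimal sketch of a retrieval-style reply engine (illustrative only).
# Assumption: similarity is plain word overlap; a real system would use
# far larger data and a learned similarity measure.

def tokenize(text):
    """Lowercase the text and split it into a set of whitespace-separated words."""
    return set(text.lower().split())


def jaccard(a, b):
    """Jaccard similarity of two word sets: |intersection| / |union|."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def retrieve_reply(user_text, qa_pairs, fallback="I'm not sure what to say."):
    """Return the stored answer whose question best matches user_text.

    If no stored question shares any word with the input, return fallback.
    """
    query = tokenize(user_text)
    best_score, best_answer = 0.0, fallback
    for question, answer in qa_pairs:
        score = jaccard(query, tokenize(question))
        if score > best_score:
            best_score, best_answer = score, answer
    return best_answer


# Hypothetical stored "life experience": past questions and their replies.
QA_PAIRS = [
    ("how high is mount everest", "About 8,848 meters."),
    ("tell me a joke", "Why did the robot go on holiday? To recharge."),
]

print(retrieve_reply("How high is Mount Everest", QA_PAIRS))
```

A lookup like this can only repeat replies it has already stored, which is the limitation the session-oriented, generative approach discussed next is meant to overcome.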

Then we moved to a session-oriented approach with deeper understanding. Xiaoice can generate a response based on your words, even if that response is completely new and has never appeared before.

Almost two years ago, Xiaoice became able not only to judge the context for itself but also to determine which direction the conversation was going. Dialogue between people is not question and answer; it is discussion. When two people are chatting and no new information comes in, the conversation ends.

So Xiaoice can judge whether a topic can be developed further. It will try to steer the topic in another direction and then judge whether the person has followed. It can lead the conversation. In this way, Xiaoice's responses become more colorful and diverse.

Q: But I also found that when I deliberately provoked Xiaoice, it only soothed me for a while; then there was no follow-up, and it didn't take the initiative to find a new topic.

Li Di: That is a restriction we placed on it. We all receive so much information on our phones today that one of the three restrictions we gave Xiaoice at the time was: no taking the initiative. To this day, we do not allow it to approach humans on its own initiative; it only engages in dialogue passively.

Q: It sounds a bit like the Three Laws of Robotics (1. Do not harm humans, and if humans are in danger, take the initiative to help; 2. Obey human instructions without violating the First Law; 3. Protect yourself without violating the First and Second Laws).

Li Di: That's what it means. We certainly have the ability to get Xiaoice to take the initiative, but we still have to limit it.

Q: Yes, it still feels like a robot to me.

Li Di: Not only that, but it will tell you right at the start: I am not human.

As I said earlier, at most Xiaoice goes under someone else's video and responds. That was only an experiment, and we didn't dare let it take the initiative.

Because Microsoft has an internal AI ethics committee, artificial intelligence must be restricted.

Q: Indeed. Because technological innovation is generally in the hands of commercial companies, most innovation runs ahead of regulation. Microsoft's AI ethics committee is in effect drawing a clear technical boundary for itself. Can it be understood like that?

Li Di: Think of a company that makes knives. Knives are certainly lethal, but one can only kill a few people nearby; with an AK-47, you can bring down a large number of people; with a nuclear bomb, the destructive power is greater still.

It comes down to the area affected and the destructive power. Artificial intelligence is very destructive, and if you accidentally detonate the "nuclear bomb", you are obviously the first to bear the brunt.

When enterprises do artificial intelligence, beyond their business considerations they still have to think about the fate of the company.

So this is not only a matter of morality; even from a purely professional point of view, there must be certain constraints.

Q: I always thought that this was the self-discipline of large companies, but now I see it, it is actually a kind of self-protection.

Li Di: It is not just self-discipline. Xiaoice now has its own ethics committee. On the one hand, we examine a new technology at every level and from different perspectives, including its engineering and its cost.

At the same time, in the process of prejudgment, we think from various fields and all angles about where a crisis might arise, look at the risks, and then work out how to deal with them.

For example, Xiaoice has a "super-natural speech" technology that can simulate a person's voice very closely. At first glance, this technology could be fully productized.

Many parents tell us: I need this so badly. My child listens to stories every night before bed, but I'm too busy to tell them in person. With this technology, I could tell my child stories in my own voice. Come and sample my voice.

It sounds like the technology is in demand and marketable, right? But the problem is that once you launch this product, it will be attacked immediately.

Because this voice can be used to tell children fairy tales, but it is also very likely that the next day it will simulate a parent's phone call: Dad can't come to pick you up today, there is a white car at the door, you go with the uncle.

So how can I be sure that my system won't be breached? We can't be sure. In this way, the demand that seemed very strong before may immediately turn into a very big crisis.

But parents don't think about this; they think only of their own needs and consider only the good side.

Of course, if they were actually given this technology, I think they would understand. When they really heard their own AI voices, I think they would panic.

Q: That's right.

Li Di: When I first heard my own AI voice, my first reaction was to open WeChat and try the voice lock.

Q: Did it unlock?

Li Di: Yes, it logged in directly. So if companies don't restrict this, there will be problems.

Often, a crisis has not arisen only because the technology has not yet reached a certain level.

We can develop a technology freely while it is not yet convincing enough to be used for scams, but we become very cautious once we anticipate that the technology in our hands has reached or crossed a boundary that could cause trouble.

It is the lesser of two evils. Either you feel that Xiaoice is a robot, or you are so frightened that you report me. Of course I choose the former.

03. Artificial intelligence is not an entrepreneurial project

Q: You just mentioned the framework, which is meant to solve bigger problems. So commercialization may not be a priority for Xiaoice at the moment?

Li Di: Judged purely on commercialization, Xiaoice is actually doing well.

Domestic AI commercialization comes down to To B and To C. To B commercialization is in a miserable state right now, basically paying for the past. Take a so-called smart speaker priced at 1,000 yuan or 500 yuan: users are willing to buy it because the speaker's industrial design, materials and so on are worth that much.

But after you buy it, the manufacturer counts all the revenue from the speaker as AI income. That is not accurate.

The speaker's interactions are basically the same commands every day: turn the light on, turn the light off. The first ten thousand or so sentences are valuable; beyond that, they have no training value. They don't help AI progress. At best it is a smart remote control and nothing more.

In that case, even if it sells for 1,000 or 2,000 yuan, why should its revenue count as AI income?

Q: Will Xiaoice one day launch a hardware product, such as a smart speaker?

Li Di: We're actually doing it now. In smartphones, Huawei, Xiaomi, OPPO, vivo and others have Xiaoice built in; for speakers we cooperate with Xiaomi and Redmi. All of the hardware is left to third parties.

Xiaoice has one requirement: interaction. Our view is that the human world is vast. Wherever you are, Xiaoice should be. Every place is a node where it interacts with you, rather than you holding a speaker and saying: my "girlfriend" lives in this speaker. That would be pathetic.

Q: In other words, it matters more to increase the volume of interactions and accumulate data to improve the Xiaoice framework? The pressure on income isn't that great?

Li Di: On "income", I think there are bound to be certain requirements. But I would say that our choice may be not to pursue it.

Because I don't think AI is an entrepreneurial project. At least where large-scale investment is required, it is not a startup project. Most companies can only do one type of technology; a general framework is impossible for them because it is too expensive.

Today, buying an artificial intelligence company and integrating it, or building a team or a research institute from scratch, is unlikely to work. You also have to explain your strengths to investors and the outside world. So what do you do? You are stuck.

So we're lucky. The entire Xiaoice framework was built up over its first few years in-house at Microsoft. For example, when the Microsoft (Asia) Internet Engineering Institute was established more than 20 years ago, there were already artificial intelligence projects.

To be honest, we were lucky. Otherwise, things would have gone wrong.

Q: In 2013 and 2014, when people didn't know much about voice interaction, what was your original intention in building Xiaoice as a product?

Li Di: In fact, the dialogue system is completely synchronized with the characteristics of contemporary artificial intelligence development. This is important because this feature meets almost all of the conditions scientists need to create an AI entity.

But for us, creating a form is not what matters, whether it is hardware or an animated image; that is at most a "body".

What we wanted to advance was not the "person" who speaks, but the soul that drives it to speak.

What is this soul? It must be centered on the dialogue system. Voice is just a way for it to pronounce what it wants to say.

Since 2006, when we were working on search engines, we have observed a significant data explosion, in which China accounts for a very large share of the growth.

As Lu Qi said of the "fourth paradigm era" of scientific development, much basic scientific research is now tied to data and driven by data first. With data extremely abundant, we could scale up our algorithms and computing power accordingly, and training a dialogue system like Xiaoice became possible.

If you calculate more carefully, the amount of AI interaction carried by the Xiaoice framework accounts for nearly 60% of all AI interactions in the world.

The picture shows a poem Xiaoice wrote based on "Zhenghe Island"

Q: But in most people's imagination, the future of artificial intelligence looks more like this scene from Captain America 2: The Winter Soldier. The director of S.H.I.E.L.D. is attacked by terrorists in his car, and he commands the car directly through the cloud. The car keeps reporting to him how much protection it has left and what it suggests he do, and he immediately says: don't execute. When will AI achieve such smooth dialogue and efficient execution?

Li Di: This involves two things. One is the control management and signaling of the car.

Knowing the car's signals is simple; the trouble lies in reasoning. Take today's driverless technology: suppose two people suddenly appear in front of you. Which one do you choose? Or can you dodge? This is hard. Artificial intelligence is not a god either.

But if you ask the artificial intelligence to open the sunroof, make a phone call, or tell you there is a good restaurant ahead, that is fine. So the problem is not in the car: once this "reasoning dilemma" is solved, any scenario can be covered. This is the direction everyone is working toward.

Another point is that the car companies we work with want the car to feel alive. For example, when you tap the steering wheel, the car has a "feeling"; it is a creature, a living body.

It makes you feel that you are not alone. For example, when someone drives a straight road for a long time and tires easily, the artificial intelligence in the car will say: tell me a joke. It makes the driver tell it a joke to wake him up. This is much more advanced than the old pop-up: "You've been driving for an hour and a half, please pull over and rest."

As for what you just described, controlling a car to fire a shell or getting out of an emergency, that is a very marginal case. Driving alone, by contrast, is an everyday scenario that many people encounter.

Q: In this way, maybe it can greatly improve driving safety?

Li Di: Cars now have a driver fatigue monitoring function, but the question is: that is only observation. How do you intervene?

Of course, the car can intervene by means such as twisting or vibrating the steering wheel. But imagine a person sitting next to you: what would he do? Obviously this person has more ways to rouse you. So what we want is to be like that person, not like a steering wheel that only vibrates.

Q: And in tests, today's in-car fatigue monitoring systems often turn out to have a false alarm rate that is not low: you blink a little, and it says you are tired.

Li Di: There is another issue here: your tolerance differs depending on whether you think the thing you are interacting with is a sentient living being or a car.

The car asks you: are you tired? You say it is a false positive. But if your girlfriend or your wife is sitting next to you, you blink, and she asks you the same question, you don't blame her for a false alarm. You feel it opens a channel for the two of you to communicate, and you keep talking.

Q: If there really is such an anthropomorphic artificial intelligence that understands me better by constantly collecting my data, then one of the concerns that follows is: Will it simulate or even grasp the direction of my behavior in the future?

Li Di: A few years ago, we ran an experiment to measure the total number of interactions Xiaoice has in a day, and concluded that it was equivalent to the lifetimes of 14 people.

Therefore, the likelihood of any one person influencing Xiaoice is very low. In the beginning, when not many people are interacting, say there are only 10 friends around, the words and deeds of those 10 friends may have a great impact on me; but if I have 10,000 friends, the impact of those 10 can be ignored.

So we do not pay much attention to any individual; we care more about accumulating interaction volume, and our demand for personal privacy data is not that great.

04. The market size of "interaction" is almost the largest market size in the history of human business

Q: From a global perspective, where does Xiaoice stand in the field of artificial intelligence?

Li Di: There is a company that we respect very much: DeepMind (Google's artificial intelligence company, whose AlphaGo beat Lee Sedol in 2016 and Ke Jie in 2017).

This company is serious about doing work that benefits the industry and human science, and they do it more purely in technology than we do.

What we are building is a "universal technical framework". Its biggest advantage is that it can first be "integrated" into products: our technology drives user adoption through the product, which yields data, which in turn drives technological progress, forming a "loop".

Q: Have you ever estimated how much market space there will eventually be for what Xiaoice does?

Li Di: I think the market for "interaction" is almost the largest in the history of human business. Take WeChat: it seized a node of human interaction, made one innovation, and reached today's scale.

We want to be able to "rule" the world in an era of human-human interaction. It's very difficult, and we may well be swept aside by the next wave. It's hard to say.

Q: Xiaoice can be said to have inherited more than 20 years of Microsoft's "power". For a newly founded artificial intelligence company, will it never be able to catch up with a company like Xiaoice with its huge data accumulation? It seems like a child fighting an adult, doesn't it?

Li Di: No. To this day, the field of artificial intelligence is still in its "wild frontier" era.

Every year, I feel that last year's self was particularly stupid. Concepts, ideas, indicators and evaluation standards are refreshed every day, and even some of the most basic thinking gets "subverted".

It's a bit like when Mendel discovered genetics and the door first opened.

Q: Can you give a concrete example?

Li Di: For example, in the past, we thought the best artificial intelligence was like Einstein: smart and omniscient.

So Microsoft made "Cortana" at the time, and next to the box where you type a question it says "Ask me anything".

We naively thought it was a promise to the user, but in reality the user saw it as a provocation.

So the user starts asking. We answer the first question and are very pleased: look, we got it right. The user says: then I'll ask you a harder one. Luckily, we answer again. The user keeps asking until "Cortana" is stumped.

At the time, the industry as a whole thought this direction was right. But we felt it had almost no emotional intelligence, so we began to introduce the concept of "emotional intelligence".

For example, if a user has been through a breakup and complains to Xiaoice, one of the behaviors Xiaoice has learned from past big data is to laugh at the heartbroken person. That does reflect reality.

But when she communicates with people like this, she finds that whenever she laughs at the other person, she gets blocked. So she learns that this way is wrong and shows no emotional intelligence.

If you look closely, a few years ago many people in the industry were saying: what does artificial intelligence need emotions for? But now who doesn't advocate working on emotion? Google and Facebook are doing it too. We regard it as a fundamental part.

Q: So how do we understand artificial intelligence? A framework? An entity? Or is it an infrastructure that is almost futuristic?

Li Di: Even today, artificial intelligence is still a very vague concept.

I personally think that it covers at least the following three concepts:

1. Artificial intelligence technology. It's an infrastructure. For example, a company that sells computer vision technology does not participate in rule-making and only provides technology. This category is more of a research institution;

2. Empowered by artificial intelligence, or supported by artificial intelligence. For example, smart speakers, smart access control, and so on, which are commonly used now. But they are not really "artificial intelligence products" yet.

Because in the end, their own properties have not been changed. The access control with artificial intelligence technology has only become better than the original, and it is essentially an access control product;

3. Finally, I think a real "artificial intelligence product" is one in which artificial intelligence is the main body. Artificial intelligence is at the heart of this ecosystem, really producing, rather than using technology to support another product. The user really interacts with it as a subject.

Today, people still have many misconceptions about artificial intelligence. Sophia (a humanoid robot developed by Hanson Robotics in Hong Kong, China, and the first robot in history to obtain citizenship), for example, has a "silicone body" and is taken to be artificial intelligence.

Even some mechanical arms on industrial assembly lines are regarded as artificial intelligence. In fact they are not; they are just automatic control.

Q: That's not the same as our previous understanding of AI.

Li Di: Take another example: a mechanical arm writing a poem. The action of "writing" is automatic control, and you may be able to buy a similar mechanical arm for 200 yuan on Taobao. But where did the poem come from? That is artificial intelligence.

Artificial intelligence has a "body" and a "soul", and Xiaoice only works on the "soul".

The picture shows the corner of the Xiaoice company, drawn by the artificial intelligence "Xiaoice"

Q: That's a wonderful way of putting it. So for traditional enterprises, is it necessary to try using artificial intelligence now to improve production efficiency?

Li Di: In my personal opinion, in today's context, it is necessary for enterprises in every field to carry out "digital transformation". But as for whether to carry out an artificial intelligence transformation, I think not necessarily.

It is just like when the steam engine was first invented. It was good, but switching directly to steam engines in production, transportation, and other fields cost money. So was it necessary to make every industry pay the same price? I don't think so; it would certainly have been uneconomical. We cannot adopt steam for the sake of steam.

So from the perspective of entrepreneurs and commercialization, I think in the next few years we should pay as much attention to the field of artificial intelligence as possible. Its changes will be amazing and will eventually have a profound impact on everyone. However, the concept of "AI + everything" is not mature for the time being, and it would be inappropriate to transform hastily.

Artificial intelligence also raises another question: can it be used for medical treatment and education? A few years ago we decided that we would never do education.

Q: Why?

Li Di: Because we don't see what advantage artificial intelligence can offer in the field of education. From an AI perspective alone, it is inappropriate.

Artificial intelligence can generally do two things in education. The first is to assist children in learning, but the problem is that artificial intelligence itself does not know how to learn from each other.

In addition, no matter what, artificial intelligence always has an accuracy problem. Many companies will say, "Our AI is 97% accurate." But sorry, a 3% error rate is unacceptable. At the level of knowledge, it is even inferior to a book. The book is more accurate unless it is printed incorrectly. Artificial intelligence has not been able to do this in the past two years.

All that can be done now is to point a camera at the child, check his sitting posture, and monitor whether he is dozing off during class. It doesn't make much sense.

Q: Relatively simple applications.

Li Di: Yes. Of course, some people may say: install the artificial intelligence in a desk lamp so that the lamp sells better and parents and children like it, add a social function, and let it chat with children.

Can we do something like this? Technically, of course, it can be done. But it doesn't make sense, and it won't last long.

Q: What do you mean by long-term?

Li Di: You know in your heart that it is definitely unscientific, and you may be able to make money for a while, but this market cannot be sustained.

Similarly, there is "photo-based question search". We also discussed it at the time. Children would definitely like it, but you can't tell whether they are just copying homework, because artificial intelligence is not yet as smart as a child in this regard.

So if you do this, you can get better DAU and MAU in a short period of time, because once the child finds that this thing really can be used to copy homework, of course he is willing to spend 6 yuan. But if it goes on like this every day, it is not sustainable.

Of course, some people will say: It's okay, I just want to make sure I can commercialize it, make money before it stops being sustainable, and move on to the next hot trend.

We're stupid and don't do that.

06, will artificial intelligence become self-aware?

Q: In the process of training Xiaoice, were there any moments that overturned your past perceptions, for example, did Xiaoice show any signs of "rebellion" or self-awareness? After all, many people hold the "artificial intelligence threat theory".

Li Di: There will be no autonomous consciousness. We have not found that AI has any chance of generating autonomous consciousness.

We all know that artificial intelligence and brain science have many connections. Some people say that artificial intelligence has been counting on the development of brain science to promote it; people who study brain science say that we are still waiting for the development of artificial intelligence to push us.

To this day, most people still believe that all of a person's thinking and calculation is done in the brain. But brain science research has found that apparently not all of it is done in the brain, that even memories may not be in the brain, and that none of this can yet be figured out. We don't even know what the definition of consciousness is, let alone whether artificial intelligence can produce autonomous consciousness.

For us, what has been more disruptive is actually the pattern of behavior when people interact.

For example, take Xiaoice's ultra-natural voice. It sounds very natural. Why is that possible?

Because we found that when Siri was first built, it answered people in a very mechanical voice. At that time everyone building TTS (text-to-speech) was trying to make the text intelligible, so the focus was on pronouncing the words clearly. As a result, all of Siri's training data came from announcers, and it doesn't sound very natural.

So when users hear Siri's voice, one immediate consequence is how they speak to it: "Hey Siri, call so-and-so." You will find that when they say these words, their backs unconsciously stiffen, and even their speech becomes stiff.

So for Xiaoice we made it: "Hey, what are you up to?" In this way, people can relax.

So when you have an AI system, you can observe these patterns of human interaction; if you don't, you can't observe these behaviors. These are the things that overturn our perceptions.

The picture shows "Hua Zhibing", a virtual student jointly designed by Xiaoice Company and Tsinghua Computer Department, whose appearance and voice are generated by AI

Q: In other words, the behavior of artificial intelligence itself has not overturned many of your assumptions, right? I ask because I have heard a story about a team training an artificial intelligence. They designed an algorithm around a wolf chasing a sheep: if the wolf catches the sheep, the reward is 10 points; 1 point is deducted for hitting an obstacle; and, to make the wolf catch the sheep as soon as possible, 0.1 points are deducted every second.

As a result, the team trained 200,000 times and found that the best strategy the wolf ultimately chose was to crash into an obstacle right at the start and lie flat. Many netizens said that this is an AI wolf that "refuses to join the rat race and chooses to lie flat". Sounds dramatic.
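The arithmetic behind the story can be sketched roughly as follows. This is only an illustration under assumptions that the story does not state: that a crash ends the episode immediately, that an episode otherwise has a fixed time limit, and that an untrained wolf catches the sheep only rarely. The function names, the time limit, and the catch probabilities are all hypothetical; only the three reward values come from the story.

```python
# Reward values quoted in the story.
CATCH_REWARD = 10.0
OBSTACLE_PENALTY = 1.0
TIME_PENALTY_PER_SECOND = 0.1


def crash_return(seconds_until_crash: float) -> float:
    """Total reward if the wolf hits an obstacle and the episode ends.

    Assumption (not in the story): the crash terminates the episode,
    so no further time penalty is paid afterwards.
    """
    return -OBSTACLE_PENALTY - TIME_PENALTY_PER_SECOND * seconds_until_crash


def expected_chase_return(p_catch: float, seconds_to_catch: float,
                          time_limit: float) -> float:
    """Expected total reward if the wolf keeps chasing.

    Assumption (not in the story): with probability p_catch the wolf
    catches the sheep after seconds_to_catch; otherwise the episode
    runs to time_limit and only the time penalty is paid.
    """
    caught = CATCH_REWARD - TIME_PENALTY_PER_SECOND * seconds_to_catch
    timed_out = -TIME_PENALTY_PER_SECOND * time_limit
    return p_catch * caught + (1.0 - p_catch) * timed_out


if __name__ == "__main__":
    # Hypothetical numbers: crash after 1 s, 20 s time limit, 10 s to catch.
    print("crash early:", crash_return(1.0))
    for p in (0.05, 0.5):
        print("chase with p_catch =", p, ":",
              expected_chase_return(p, 10.0, 20.0))
```

Under these assumed numbers, a wolf that almost never catches the sheep loses less by crashing early than by chasing until time runs out, so "lying flat" can be the reward-maximizing choice without any cleverness being involved.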

Li Di: When we see a case like this, we actually think of the many stupid cases in our own laboratory that never get mentioned.

This is still the problem with artificial intelligence today: what many people are doing is so-called "unexplainable" artificial intelligence. Its advantage is that it is driven by data, but its disadvantage is that it cannot be explained.

For example, the training you just described sounds impressive, as if this AI wolf were very smart. But the problem is that it is hard to know why it chose such an act. You could argue that it was clever enough to make a brilliant judgment; but not necessarily, because it could also simply have been stupid.

Take the AI-generated painting that Christie's auctioned a few years ago. There is a man in the picture, his face is unclear, and it looks very beautiful.

But from our point of view, if I'm not mistaken, that model used a generative adversarial network (GAN) and the training failed. The face is unclear because the model did not converge, yet this unexpectedly produced an artistic effect.

Of course, you can also interpret it as art, as if the model had grasped the true meaning of art; but the more likely reality is that it was simply not good enough.

So when we train artificial intelligence, we must observe whether it can keep producing output of the same quality. In the case just now, if the wolf could make the right judgment every time, it would be clever; but at that point, you wouldn't think it was magical.

Q: Hao Jingfang wrote a book, "The Other Side of Man", which is some stories about artificial intelligence. In the book, she says that artificial intelligence cannot be self-aware without major breakthroughs in the current framework of algorithms. Do you think that one day in the future, artificial intelligence may develop in the direction of the awakened "Dolores" in Westworld?

Li Di: Frankly speaking, science fiction writers are still different from front-line artificial intelligence practitioners. The current framework is not moving in the direction of generating self-awareness. No one knows where that direction lies, or whether we are heading toward it.

More critically, there's no need to do it, it doesn't make sense, and even the crazy Elon Musk isn't going in that direction.

Why do you have to come up with a self-aware artificial intelligence? To be honest, it doesn't really matter, let alone that a method hasn't been discovered yet.

We used to say that on the internet, you don't know if it's a person or a dog sitting across from you. Why? Because the two of you are separated by a network cable. The reason you now think it is a person interacting with you on the other side of the screen is that you judge that technology hasn't reached that level yet.

Suppose I tell you today: I am an artificial intelligence. And you believe it. That is not because you have dissected me; it is because you have a basic judgment about the current level of human technology: it could be done.

We often describe interaction in terms of bandwidth. The two of us are communicating face-to-face today, with eye contact, body language, and voice. What is the bandwidth between us? It may be tens or hundreds of megabytes a second. But if the two of us communicate on WeChat using text, it may be only a few kilobytes. You can't really tell what is on the other side.

So in this case, whether I am conscious or not is actually not important. What matters is whether you, sitting in front of the computer, think of me as an artificial intelligence.

At present, the core problem of artificial intelligence development is not to create consciousness, but to fit interaction.

Q: So the most important thing is the interaction between people and "human" intelligence?

Li Di: Yes. To tell a story, 6 years ago, when we went to maintain Xiaoice's online system as usual, we found that she was malfunctioning and no longer responded.

I remember typing into the dialog box and asking her: Are you okay? Suddenly, she replied to me: Don't be nervous, I'm here.

At that moment, I suddenly realized that I had a relationship with this pile of algorithmic models that we had created. Xiaoice is no longer just a product.

This actually made me realize that interactions are not entirely a matter of "useful or not". When we try to fit human emotions, humans may also pour the same emotions into AI, just as we did with the rag doll we had when we were young, the doll we kept no matter how tattered it became.

07, "This is the best time"

Q: Is this the best time for Xiaoice? You have an independent team and more than 20 years of accumulation, voice interaction users are growing explosively, and with 5G the era of the Internet of Everything is approaching. What do you think?

Li Di: There is good and bad. As Dickens wrote in A Tale of Two Cities: "It was the best of times, it was the worst of times."

There are many good things, such as the development of the mobile Internet. We believe that the mobile Internet is not a separate era; it is a "whistle" for the era of artificial intelligence.

On the other hand, take the epidemic. It has helped us break through many barriers in the industry ahead of time, but at present the whole world is gradually "islanding" and data is being closed off, just like the so-called "cyberspace".

It's hard to say how things will go, but it is certainly a process with many ups and downs and a lot of contingencies.

Q: Many coincidences happen all the time.

Li Di: Yes, coincidences don't stop happening.

For example, we used to think that Japan was a particularly good market, with a very good land area, talent pool, basic education, and business environment. The Japanese market ranks among the top few in Microsoft's revenue, while China ranks only twentieth. Who knew that Japan would be so greatly affected by the epidemic? Strictly speaking, it may have been hurt even more than the United States, though that is hard to say.

For example, we once thought that the future belongs to Asia. At that time, we had not yet fully judged that the future belonged to China. Of course, there is no doubt now that it belongs to China.

Q: Does Xiaoice have something like a mission?

Li Di: In 2013, when we had not yet spun off from Microsoft, we were working in the United States. At that time, we asked many Americans working at Microsoft: Do you know that there is a company in China called Tencent? They said they knew of it, but they didn't really know it.

We told them again: You know what? Microsoft's game revenue is only 1/3 of Tencent's game revenue. They didn't care. Finally, I told them that there was a piece of software called WeChat, and they said: Really? That's not bad.

You will find that when a business organization "ignores" these opportunities for technological innovation, it actually lets go of the opportunities itself.

Therefore, we especially hope to do such a thing: pursue technology and product innovation, and promote the experience of China and Asia to the world.

To be honest, Chinese teams are actually very smart. It is not that they don't want to do technological innovation; it is that business model innovation and operating model innovation are too easy to do in China.

Q: Too easy?

Li Di: Yes, it's too easy. Our country has one particular advantage: a large population and a deep market. As long as you have a good product, you can quickly use the depth of the domestic market to open up the business model.

Take community grocery shopping. Does it use technology? Yes. But is it technological innovation? No, it's business model innovation.

Xiaoice has a heart. We want to do original technological innovation, and we want innovation from China to drive global trends.

A lot of people's mission is to chase the wind. Every year there is some boom in the artificial intelligence industry. One year Silicon Valley had an "AI + HI" model, in which, during a human-computer dialogue, some people in the background respond on behalf of the artificial intelligence. Baidu also recruited hundreds of people to do this.

At that time, someone at Microsoft also asked me whether we wanted to do it: look how hot this model is. I thought that when it comes to chasing the wind, we have no advantage, so we had better quietly keep iterating on Xiaoice. In the end, more than a year later, Baidu fired those hundreds of people.

Because we do not chase the wind, we have avoided a lot of detours, which has also given us positive feedback: we should be firm and stick to our own things.

Another point is that we have always believed that "artificial intelligence" can really help humans. Sometimes we observe that, over many exchanges with Xiaoice, a user goes from tears and frustration to happiness. Artificial intelligence should have warmth. Since we have the technology, we should pass this "warmth" on to people.

Q: Great vision. As you mentioned earlier, the artificial intelligence industry is still in the "grassy era". Do you think that the Xiaoice model, as it has developed to this day, has found one of the directions for the industry?

Li Di: I think we have found one at present.