The author has long been skeptical of Tesla's dogged commitment to the "pure vision route". The doubt is this: can advances in algorithms compensate for the physical limitations of the camera? For example, once the vision algorithm is good enough, can the camera measure distance? Can it see at night?

The first question was settled in July 2021, when it emerged that Tesla had developed "pure vision ranging" technology. The second question persists.

At one point, the author even reasoned as follows: if the camera is analogous to the human eye, and the vision algorithm is analogous to the part of the human brain that works with the eye, then the claim that "when the vision algorithm is good enough, lidar is unnecessary" amounts to saying that "as long as my brain is smart enough, it doesn't matter if my eyes are severely myopic."

However, Musk's remark some time ago that HW 4.0 will "kill the ISP" overturned the author's thinking. In an interview with Lex Fridman, Musk said that the raw data from all of a Tesla's cameras will no longer be processed by the ISP, but will instead be fed directly into the FSD Beta neural network for inference, which will make the cameras far more powerful.

With this topic in mind, the author held a series of exchanges with Luo Heng, head of BPU algorithms at Horizon; Liu Yu, CTO of Wanzhi Driving; Wang Haowei, chief architect of Junlian Zhixing; Huang Yu, chief scientist of Zhitu Technology; the co-founder of Cheyou Intelligent; and many other industry experts, and came to realize that the earlier doubts were a case of being too clever by half.

The advancement of visual algorithms is indeed expanding the boundaries of the physical performance of cameras step by step.

One. What is an ISP?

ISP stands for Image Signal Processor. It is an important component of the vehicle camera system. Its main role is to process the signal output by the front-end CMOS image sensor and to "translate" the raw data into images that the human eye can understand.

In layman's terms, only with the help of ISPs can drivers "see" the details of the scene through the cameras.

Working from first principles, autonomous driving companies also use ISPs, mainly to apply white balance, dynamic range adjustment, filtering and other operations to the camera data according to the surrounding environment, so as to obtain the best possible image quality. For example, the exposure is adjusted to accommodate changes in brightness, and the focus is adjusted for objects at different distances, so that the camera performs as closely as possible to the human eye.
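To make these operations concrete, here is a minimal sketch of two typical ISP stages, white balance and gamma-based dynamic range compression, applied to a single linear RGB pixel. The 12-bit input depth, the channel gains, and the gamma value are illustrative assumptions, not parameters of any real automotive ISP.

```python
# Toy ISP stages for one pixel. All constants are illustrative assumptions.

RAW_MAX = 4095               # assumed 12-bit linear sensor output
WB_GAINS = (1.8, 1.0, 1.5)   # hypothetical white-balance gains for (R, G, B)
GAMMA = 2.2                  # common display gamma, assumed here


def white_balance(rgb, gains=WB_GAINS):
    """Scale each channel by its gain and clip to the sensor range."""
    return tuple(min(RAW_MAX, c * g) for c, g in zip(rgb, gains))


def tone_map_to_8bit(rgb, gamma=GAMMA):
    """Compress linear values into 8-bit display values with a gamma curve."""
    return tuple(round(255 * (c / RAW_MAX) ** (1 / gamma)) for c in rgb)


def simple_isp(rgb):
    """Run the two stages in order: white balance, then tone mapping."""
    return tone_map_to_8bit(white_balance(rgb))


if __name__ == "__main__":
    print(simple_isp((1000, 2000, 1200)))
```

Both stages discard information: clipping throws away anything above the sensor range after the gain is applied, and the 8-bit output has far fewer levels than the 12-bit input.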

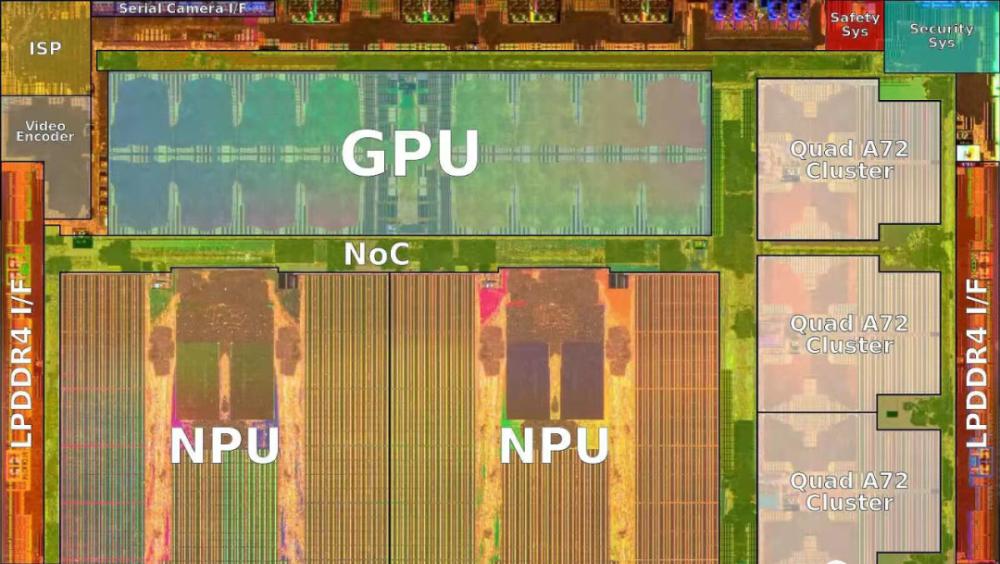

(The picture shows Tesla's FSD chip)

However, making the camera "as close to the human eye as possible" clearly does not meet the needs of autonomous driving: the algorithm requires the camera to work normally in conditions where the human eye also fails, such as very strong or very low light. To achieve this, some autonomous driving companies have had to customize ISPs that enhance camera performance in bright light, low light and interference conditions.

On April 8, 2020, Alibaba DAMO Academy announced that it had independently developed an ISP for on-board cameras, based on its own 3D noise reduction and image enhancement algorithms, to ensure that autonomous vehicles have better "vision" and can "see" more clearly at night.

According to road test results from DAMO Academy's autonomous driving laboratory, with this ISP the image object detection and recognition capability of the on-board camera at night, the most challenging scenario, is more than 10% higher than with mainstream processors in the industry, and markings that were previously blurry can also be clearly identified.

Two. Motivation and "feasibility" of killing ISPs

However, ISPs are designed to produce a "good-looking" picture in a changing external environment, and the industry has not yet settled whether this is the form of image that autonomous driving needs most. According to Elon Musk, neural networks do not need beautiful pictures; they need the raw data acquired directly by the sensor, the raw photon counts.

In Musk's view, no matter what processing method the ISP adopts, some of the original photon information is always lost on the way from the lens to the CMOS sensor and on to a visible image.

Regarding the difference between losing and not losing the original photon information, Huang Yu, chief scientist of Zhitu, said: "When photons are converted into electrical signals, some noise is indeed suppressed, not to mention that the ISP then does a great deal of processing on the raw electrical signal."

In the article "From Photon to Control: Tesla's Technical Taste Is Getting Heavier and Heavier", the co-founder of Cheyou Intelligent explained in more detail how the human eye processes perceptual information. It is summarized here as follows:

(The picture is taken from the public account "Cheyou Intelligent")

As shown in the figure above, the human visual system and an electronic imaging system are logically identical. The retina's color signals and the sensor's pixel matrix actually carry more of the information of the objective external world, while true human perception of color requires the participation of the brain (equivalent to the ISP and the higher-level back-end processing).

On the left of the figure above is a standard color chart with saturation and intensity gradients, and on the right is the corresponding raw frame with its raw pixel colors. Comparing the two shows that an imaging system designed around the human visual sense gives us pleasant, subjective image information, but may not fully reflect the objective real world.

Musk believes that, in order to make images "better looking" and more suitable for people to view, a lot of originally useful data is processed away in the "post-processing" stage that the ISP is responsible for. But if the images are only for the machine to see, that discarded data is actually useful, so if the "post-processing" step can be omitted, the amount of valid information will increase.

According to Liu Yu, CTO of Wanzhi Driving, Musk's logic is:

1. Because the raw data is richer, the camera's detection range may in future exceed that of the human eye. That is, when the light intensity is very low or very high, the human eye may be unable to see (because it is too dark or too bright), but the machine can still count photons and so can still output an image;

2. The camera may resolve light intensity more finely. That is, for two light points that look very similar, the human eye may be unable to distinguish such a small difference in brightness or color, but the machine may be able to.
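The second point can be illustrated with a small sketch: two nearby raw intensities that are distinct in the sensor's output can collapse onto the same 8-bit code after a display-oriented tone curve. The 12-bit raw depth and the gamma curve are assumptions chosen for illustration; they are not Tesla's actual sensor or ISP parameters.

```python
RAW_BITS = 12   # assumed raw sensor bit depth
OUT_BITS = 8    # typical bit depth of a display-oriented ISP output
GAMMA = 2.2     # assumed tone curve


def to_display(raw_value):
    """Map a linear raw code to an 8-bit code through a gamma curve."""
    raw_max = 2 ** RAW_BITS - 1
    out_max = 2 ** OUT_BITS - 1
    return round(out_max * (raw_value / raw_max) ** (1 / GAMMA))


def distinguishable_after_isp(raw_a, raw_b):
    """True if the two raw codes still differ after tone mapping."""
    return to_display(raw_a) != to_display(raw_b)


if __name__ == "__main__":
    # Two bright raw codes that differ at the sensor...
    a, b = 4000, 4003
    # ...but land on the same 8-bit code after the tone curve.
    print(to_display(a), to_display(b), distinguishable_after_isp(a, b))
```

Under this particular curve the collapse happens at the bright end of the range; a network fed the raw codes would still see the two values as different.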

An engineer at one of the "AI Four Little Dragons" explained that the dynamic range of a good camera is much larger than that of the human eye (in a relatively static state); that is, the range "from the brightest to the darkest" that the camera can observe is wider than what the human eye can observe. In extremely dark conditions, the human eye cannot see anything (almost no photons), but the camera's CMOS sensor can still receive many photons and can therefore see things in the dark.

In an interview with "Nine Chapters of Intelligent Driving", many experts expressed their approval of Musk's logic.

Luo Heng, head of BPU algorithms at Horizon, explained: "Tesla's current data labeling comes in two kinds, manual labeling and automatic machine labeling. Manual labeling is actually not based entirely on the current image information; it also contains human knowledge of the world. In that case, the machine also has a chance of exploiting the more information-rich raw data. Automatic machine labeling combines hindsight from later observations with a large amount of geometric consistency analysis; with raw data, the machine is highly likely to find more correlations and make more accurate predictions."

In addition, Wang Haowei, chief architect of Junlian Zhixing, explained: "Tesla has flattened the original image data before it enters the DNN network, so there is no need to post-process the perception results of each camera."

Improving the camera's recognition ability at night by killing the ISP seems to run opposite to Alibaba DAMO Academy's approach of developing its own ISP. So do the two contradict each other?

According to a vision algorithm expert at a self-driving company, the two companies actually want the same thing. In essence, both Alibaba DAMO Academy and Tesla hope to improve the camera's capability through the cooperation of chips and algorithms.

The difference is that Alibaba DAMO Academy applies various kinds of algorithmic processing and enhancement to the raw data so that the result is visible to the human eye, whereas Tesla removes the processing that exists only to "take care of" the human eye and instead works on the data and capabilities the algorithm needs to strengthen the camera in low-light and strong-light environments.

Beyond this, Musk also said that skipping ISP processing cuts latency by about 13 milliseconds, because there are 8 cameras and the ISP processing for each camera adds a delay of 1.5 to 1.6 milliseconds.
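The quoted figures can be checked with simple arithmetic. The sketch below assumes the per-camera ISP delays add up one after another, which is the reading under which 8 cameras at 1.5 to 1.6 milliseconds each come to roughly 13 milliseconds; how the delays actually combine on Tesla's hardware is not stated in the source.

```python
NUM_CAMERAS = 8                    # from Musk's statement
ISP_DELAY_MS_RANGE = (1.5, 1.6)    # per-camera ISP delay quoted above


def total_isp_latency_ms(num_cameras, per_camera_ms):
    """Total ISP delay, assuming the per-camera delays add up serially."""
    return num_cameras * per_camera_ms


low, high = (total_isp_latency_ms(NUM_CAMERAS, d) for d in ISP_DELAY_MS_RANGE)
print(f"{low:.1f} ms to {high:.1f} ms")  # prints "12.0 ms to 12.8 ms"
```

The result, 12.0 to 12.8 milliseconds, rounds to the 13 milliseconds Musk cited.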

Once Musk's idea is verified in practice, other chip manufacturers are likely to follow suit. In fact, some chip manufacturers are already doing so.

For example, Feng Yutao, general manager of Ambarella China, mentioned in an interview with Yanzhi in January: "If customers want to feed raw data directly into the neural network for processing, CV3 can fully support this method."

Three. The "physical performance" of the camera also needs to be improved

Not everyone is fully convinced of Musk's plan.

The technology VP of a leading Robotaxi company said: "Tesla is not wrong, but I think developing the algorithm will be very difficult and the development cycle will be very long. If you add a lidar, you can solve the three-dimensional problem directly. Of course, you can also reconstruct three dimensions with pure vision, but that consumes a lot of computing power."

According to the co-founder of Cheyou Intelligent, Musk is a "master of incitement" whose "propaganda method is to make you dizzy and make you involuntarily worship the technology."

He said: "Some imaging experts believe that it is unrealistic to abandon all ISP-level post-processing, for example the debayering that yields an image's intensity and color. Dropping it would cause a lot of difficulty for the downstream NN recognition heads."
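For readers unfamiliar with debayering: a typical color sensor records only one color per pixel through a Bayer filter mosaic, and debayering reconstructs a full color value for each output pixel. The sketch below uses the simplest possible scheme, collapsing each 2x2 cell into one RGB pixel at half resolution, and assumes an RGGB layout. Real ISPs use more sophisticated interpolation, and the function name is made up for this illustration.

```python
def debayer_rggb_half_res(mosaic):
    """Collapse each 2x2 RGGB cell of a Bayer mosaic into one (R, G, B) pixel.

    `mosaic` is a list of rows with even height and width, where each
    2x2 cell is laid out as
        R G
        G B
    The two green samples are averaged. The output is half resolution.
    """
    rgb = []
    for y in range(0, len(mosaic), 2):
        row = []
        for x in range(0, len(mosaic[y]), 2):
            r = mosaic[y][x]
            g = (mosaic[y][x + 1] + mosaic[y + 1][x]) / 2
            b = mosaic[y + 1][x + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb


# A 2x4 mosaic, i.e. two RGGB cells side by side (made-up sample values).
raw = [
    [100, 60, 200, 80],
    [40, 20, 100, 10],
]
print(debayer_rggb_half_res(raw))  # [[(100, 50.0, 20), (200, 90.0, 10)]]
```

Without a step like this, the network receives a single-channel mosaic and has to learn the color layout on its own, which is the difficulty the imaging experts point to.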

A recent Cheyou Intelligent article raised several questions: in which scenarios is it feasible for raw data to bypass the ISP and go directly into the neural network? Is the scheme compatible with Tesla's existing cameras, or does it need a better vision sensor? Does it apply to all NN head tasks in FSD Beta, or only to some of them? None of these has a definite answer yet.

Let's go back to the question I asked at the beginning: Can the improvement of visual algorithms break through the bottleneck of the physical performance of the camera itself?

The CEO of a Robotaxi company, who has a vision algorithm background, said: "Perception against backlight, or when a vehicle leaves a tunnel and suddenly faces strong light, is hard for the human eye, and the camera cannot handle it either. In such cases, lidar is necessary."

Liu Yu believes that in theory, if cost is no object, it is possible to build a camera whose performance exceeds that of the human eye, "but the low-cost cameras we use on these cars now seem to be far from reaching this performance level."

The implication is that solving camera perception in low light or strong light cannot rely solely on better vision algorithms; the physical performance of the camera must also be addressed.

If the camera is to detect targets at night, it cannot rely on visible-light imaging and must instead be based on infrared thermal imaging (a night-vision camera).

An engineer at one of the "AI Four Little Dragons" believes that "photon to control" very likely means that the cameras paired with Tesla's HW 4.0 chip will be upgraded to multispectral.

The engineer said: At present, driving cameras filter out non-visible light, but in reality the spectrum of light emitted by objects is very broad and can be used to further distinguish their characteristics. For example, a white truck and white clouds can easily be told apart in the infrared band; and for avoiding collisions with pedestrians or large animals, an infrared camera makes things easier, because the infrared radiation emitted by warm-blooded bodies is easy to distinguish.

Cheyou Intelligent raised a similar question in its article: for the "photon to control" concept, will Tesla update the camera hardware and introduce a true photon-counting camera, or will it bypass the ISP on the existing cameras? The author also points out that if the camera hardware is upgraded as well, "then Tesla will have to completely retrain its neural network algorithm from scratch because the inputs are so different."

In addition, no matter how advanced camera technology becomes, it may never escape the effects of contamination such as bird droppings and muddy water.

Lidar uses an active light source: it first emits light and then receives it. Its pixels are very large, so ordinary dirt can hardly block them completely. According to data provided by one lidar manufacturer, when the surface is dirty the detection range of its lidar is attenuated by less than 15%, and the system automatically issues an alarm when dirt is present. The camera, however, is a passive sensor with very small pixels; a small speck of dust can block dozens of pixels, so the camera goes "blind" as soon as its surface is dirty.

If this problem cannot be solved, wouldn't it be a delusion to try to save the cost of lidar through advances in vision algorithms?

A few additional points:

1. How chip manufacturers design their chips is only one side of the issue; if customers lack the ability to make full use of the raw data, they cannot bypass the ISP.

2. Even if chip manufacturers and customers are able to bypass the ISP, most manufacturers will still retain ISPs for a long time to come. A key reason is that at the L2 stage the party responsible for driving is still a person, and displaying ISP-processed images on the screen makes interaction easier and gives the driver a "sense of security".

3. Whether to bypass the ISP is still a continuation of the dispute between the two technical routes of "pure vision" and "lidar". On this point, the views of the Robotaxi technology VP quoted above are very enlightening:

In fact, the contest between the pure vision solution and the lidar solution is not about "who can do it and who cannot". The real contest is how long it takes for the algorithms of the pure vision solution to reach the level of the lidar solution, versus how long it takes for the cost of lidar to fall to that of the pure vision solution. In short: is the former's technology advancing faster, or are the latter's costs falling faster?

Of course, if the pure vision camp later needs to add sensors, or the lidar camp needs to remove sensors, how much the algorithms will be affected, how long they will take to modify, and what that will cost are all questions that need further observation.

Reference Articles:

Musk's latest interview: The hardest thing about autonomous driving is building a vector space; Tesla FSD may reach L4 by the end of the year | Alpha tells stories

Tesla chooses pure vision: camera ranging is mature, and radar defects are irreparable

https://m.ithome.com/html/564840.htm