A high-end automated driving system is a goal that the next generation of intelligent connected vehicles must reach. It has to solve not only how a vehicle drives itself, but also the problems that the current generation of automated driving has left open, in both function development and performance. The recent accident involving a Weilai (NIO) vehicle under automated driving shows that true automated driving requires handling many of today's edge scenarios, which are the scenarios that most affect the functional safety of the system. Likewise, most OEMs want to follow Tesla and use a similar shadow mode for data acquisition and simulation, so how to avoid pitfalls during that development is also worth thinking about.

In addition, high-level automated driving will be built on an SOA-based (service-oriented architecture) development model, so how to make SOA general, efficient, and reliable must also be solved.

This article discusses these three difficult and urgent problems, with the aim of providing a reference for developers.

How to improve collision detection of stationary targets

From a development and testing perspective, we have collected a number of scenarios that are hard to solve or prone to problems, and the recognition of stationary targets is one of them. On the vision side, current production automated driving products rely on monocular or trinocular cameras for detection. This approach has an inherent defect: because it is based on deep-learning machine vision, recognition, classification, and detection sit in the same module and usually cannot be separated. If a target cannot be classified, it often cannot be detected at all. Such missed detections can easily lead to collisions.

To explain why targets go unrecognized and how this type of problem can be addressed, two causes need to be highlighted. The first is that the training dataset cannot cover every target in the real world. Many stationary targets are not standard vehicles; they may be unusually shaped vehicles, fallen rocks, or irregular construction signs and lights. The target types trained during development therefore fall well short of what real driving scenes contain.

The second is that the image lacks texture features. A texture feature is computed statistically over a region containing many pixels; it is often rotation-invariant and strongly resistant to noise. Surfaces with little texture, such as truck cargo boxes or white walls, are therefore hard to recognize by visual means.

It is also worth explaining why deep learning does poorly at recognizing static targets. Machine vision, especially detection based on a monocular camera, tends to remove stationary targets as background so that it can select the moving targets that matter for video understanding. This improves recognition efficiency and also lowers the encoding bit rate. To prevent false detections, moving and stationary targets also have to be separated: with cars parked on both sides of the road, for example, moving targets naturally have higher priority than stationary ones and are recognized first. The separation is usually done with background subtraction, the three-frame difference method, or optical flow. Such algorithms typically take 1-2 seconds, and given the real-time requirements of high-level automated driving, a collision may occur within that time.
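The three-frame difference method mentioned above can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions, not a production algorithm: the function name `three_frame_difference`, the `threshold` value, and the tiny one-row frames are all made up for the example. It shows why a target that does not change between frames ends up treated as background.

```python
from typing import List

Frame = List[List[int]]  # grayscale image, one int (0-255) per pixel


def three_frame_difference(prev: Frame, curr: Frame, nxt: Frame,
                           threshold: int = 25) -> List[List[bool]]:
    """Mark a pixel as moving only if it differs from both the previous
    and the next frame by more than `threshold` (hypothetical value)."""
    mask = []
    for row_p, row_c, row_n in zip(prev, curr, nxt):
        mask.append([
            abs(c - p) > threshold and abs(n - c) > threshold
            for p, c, n in zip(row_p, row_c, row_n)
        ])
    return mask


# A bright object moves from column 0 to column 2; column 4 holds a
# bright object that never moves (a stationary target).
prev_frame = [[200, 0, 0, 0, 200]]
curr_frame = [[0, 200, 0, 0, 200]]
next_frame = [[0, 0, 200, 0, 200]]

moving = three_frame_difference(prev_frame, curr_frame, next_frame)
print(moving)  # [[False, True, False, False, False]]
```

Only the moving object is marked in the middle frame. The stationary bright pixel in the last column produces no difference and is dropped, which is the behavior the paragraph above describes.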

To fix these recognition defects, the weaknesses of deep learning have to be addressed at the root. Machine vision has two main approaches to learning and matching: manual modeling, and deep learning, which is usually used for image recognition and classification. Deep learning works mainly through segmentation and refitting. In principle it has to traverse every pixel, perform billions of multiply-accumulate operations on the trained model, and compare the results under different weights. Unlike human vision, machine vision is not holistic. In essence, deep learning takes sampled data points and, by matching them against existing data, fits a function that approximates the real curve as closely as possible, so that it can recognize the desired environmental targets, infer trends, and give predictions for various problems. Curve fitting carries a risk when representing a given data set, namely fitting error: the algorithm may fail to recognize normal fluctuations in the data and end up treating noise as valid information for the sake of the fit. Therefore, to really improve the recognition of such abnormal targets, it is not wise to rely only on a more capable AI accelerator in the SoC, because the AI accelerator only speeds up the multiply-accumulate (MAC) computation.

To truly solve this kind of recognition or matching error, the next generation of high-performance automated driving systems usually adds multi-sensor fusion (millimeter-wave radar, lidar) or multi-camera detection. Engineers who have developed driver assistance systems know that the current generation of millimeter-wave radar is very sensitive to metal objects, so to avoid false AEB triggers caused by false detections, many stationary targets are usually filtered out. For large trucks or special-purpose vehicles with a high chassis, the target is also often missed because of the mounting height of the radar.

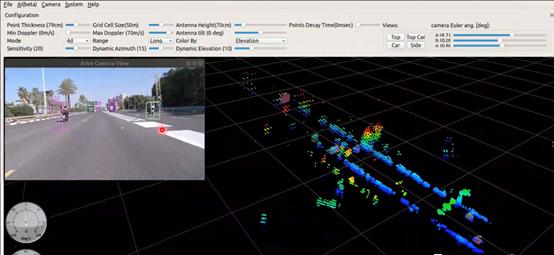

Three-dimensional target reconstruction calls for traditional (non-deep-learning) methods. It can usually be improved by point cloud reconstruction with lidar or high-resolution 4D millimeter-wave radar, or by optical flow tracking with binocular cameras. Lidar-based detection works by transmitting a detection signal (a laser beam) and comparing the signal reflected from the target (the target echo) with the transmitted signal; after appropriate processing, the relevant information about the target is obtained. Point cloud matching of the echo is itself also a deep learning process, but it is faster than the segmentation and matching used in image recognition.

Detecting static targets with binocular vision relies on the disparity image. Because the disparity map is a purely geometric relationship, it can locate a static target more accurately. Monocular vision can often handle detection on bumpy roads, in scenes with strong light-dark contrast, and of distant objects on damaged roads, but its three-dimensional recovery carries a lot of uncertainty. A stereo camera can be combined with deep learning: the three-dimensional point cloud is fused with the RGB and texture information of the image, which helps with recognition and 3D measurement of distant targets.
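The purely geometric nature of disparity can be made concrete with the standard relation for a rectified stereo pair, depth = focal length × baseline / disparity. The sketch below is only an illustration: the function name `depth_from_disparity` and the camera parameters (1000 px focal length, 0.12 m baseline) are assumed values, not figures from any particular system.

```python
def depth_from_disparity(disparity_px: float, focal_px: float,
                         baseline_m: float) -> float:
    """Depth in metres of a point seen by a rectified stereo pair.

    disparity_px: horizontal pixel shift between left and right image
    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centres in metres
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


# Assumed camera: 1000 px focal length, 0.12 m baseline.
near = depth_from_disparity(40.0, focal_px=1000.0, baseline_m=0.12)  # about 3 m
far = depth_from_disparity(4.0, focal_px=1000.0, baseline_m=0.12)    # about 30 m
far_off_by_one = depth_from_disparity(3.0, focal_px=1000.0, baseline_m=0.12)  # about 40 m
print(near, far, far_off_by_one)
```

No classification step is involved, so the result does not depend on the object type. With these assumed parameters, a one-pixel disparity error at long range moves the estimate from about 30 m to about 40 m, which is one reason distant targets benefit from combining stereo with image-based recognition.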

Deep learning detects common road participants more finely and stably, and by combining multiple features it can find them from a greater distance. Stereo vision provides 3D measurement and point-cloud-based detection of all road participants at the same time; it is not limited by object type or by mounting position and attitude, its dynamic ranging is more stable, and it generalizes better. Combining the two, deep learning finds targets at greater distances and stereo vision describes them in three dimensions.

These algorithms rely either on the CPU for logic operations such as Kalman filtering, smoothing, and gradient processing, or on the GPU for deep learning on images. The next generation of high-level automated driving domain controllers therefore needs enough computing power to meet these performance requirements.

Can shadow mode break the deadlock

When developing the next generation of high-end automated driving systems, OEMs and Tier 1 suppliers often cannot cover all the working conditions that arise when the environment changes suddenly. Initial data coverage at this scale depends on high-quality data acquisition and processing, which is usually called data coverage of extreme scenarios. How to collect large amounts of extreme-scenario data and send it back to the automated driving backend is an important problem to solve, and it is also key to judging whether later automated driving systems can break the deadlock.

Tesla's shadow mode pioneered effective data collection. In "shadow mode", while the vehicle is driven manually, the system and its peripheral sensors keep running but take no part in vehicle control; they only verify the decision algorithm. The algorithm makes continuous simulated decisions and compares them with the driver's behavior. Once the two are inconsistent, the scene is judged to be an "extreme working condition" and data backhaul is triggered.
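The comparison step in this definition can be sketched in a few lines. This is a hypothetical illustration only: the `Command` fields, the function name `should_backhaul`, and the tolerance values are assumptions for the example and do not describe Tesla's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Command:
    """One control decision, from the driver or from the shadow algorithm."""
    steering_deg: float
    accel_mps2: float


def should_backhaul(driver: Command, shadow: Command,
                    steer_tol_deg: float = 5.0,
                    accel_tol_mps2: float = 1.0) -> bool:
    """Return True when the shadow decision disagrees with the driver.

    The shadow command is never sent to the actuators; it is only
    compared. The tolerances are assumed values.
    """
    steer_gap = abs(driver.steering_deg - shadow.steering_deg)
    accel_gap = abs(driver.accel_mps2 - shadow.accel_mps2)
    return steer_gap > steer_tol_deg or accel_gap > accel_tol_mps2


# The driver brakes hard while the shadow algorithm would keep cruising.
driver_cmd = Command(steering_deg=0.0, accel_mps2=-4.0)
shadow_cmd = Command(steering_deg=0.5, accel_mps2=0.0)
print(should_backhaul(driver_cmd, shadow_cmd))  # True
```

A small disagreement inside the tolerances returns False, so only scenes where the two decisions clearly diverge would be recorded and sent back.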

However, to use shadow mode well, the following problems need to be addressed.

1. How shadow mode can detect and collect more, and more varied, extreme working conditions, including labeled and unlabeled training scenarios

Shadow mode is usually only one part of data acquisition and processing: apart from triggering data recording through the trajectory difference at the control end, its other working modes are not directly used for data recording. If automated driving is to apply shadow mode efficiently and quickly, deep neural networks have to be deployed alongside the acquisition process and throughout the control chain (the modules for perception, prediction, planning, and control). A more practical shadow mode needs a wider operating range: not only trajectory comparison, but also differences in perceived targets, differences in fused targets, and so on should be able to trigger data recording and backhaul. This requires defining data acquisition units according to the ports actually being collected. These units can work in automated or manual driving mode, serve only as hardware for data acquisition, recording, and backhaul, and do not affect vehicle control.
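One way to picture a wider set of triggers is a small table of independent checks, each looking at a different point in the chain. The sketch below is hypothetical throughout: the snapshot keys, the thresholds, and the names `TRIGGERS` and `fired_triggers` are invented for illustration.

```python
from typing import Callable, Dict, List

Snapshot = Dict[str, float]          # one time step of logged signals
Trigger = Callable[[Snapshot], bool]

# Each entry checks one kind of disagreement. Keys and thresholds are assumed.
TRIGGERS: Dict[str, Trigger] = {
    # planned path versus the path the driver actually took
    "trajectory": lambda s: abs(s["driver_lateral_m"] - s["planned_lateral_m"]) > 0.5,
    # two sensors disagree on how many targets are present
    "perception": lambda s: s["camera_target_count"] != s["radar_target_count"],
    # fused range deviates from the camera-only range
    "fusion": lambda s: abs(s["fused_range_m"] - s["camera_range_m"]) > 2.0,
}


def fired_triggers(snapshot: Snapshot) -> List[str]:
    """Names of all triggers that request recording for this snapshot."""
    return [name for name, check in TRIGGERS.items() if check(snapshot)]


snapshot = {
    "driver_lateral_m": 0.1, "planned_lateral_m": 0.0,
    "camera_target_count": 2, "radar_target_count": 3,
    "fused_range_m": 40.0, "camera_range_m": 45.0,
}
print(fired_triggers(snapshot))  # ['perception', 'fusion']
```

In this example the trajectories agree, so a trajectory-only trigger would record nothing, while the perception and fusion checks still flag the scene.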

2. Whether chip selection and sensor configuration support shadow mode as expected

For automated driving development, we would like shadow mode to be a simple logic operation that consumes few resources and adds no background processing delay while it is active. If the next generation of advanced automated driving is to acquire data through shadow mode, it must consider either an additional chip dedicated to the shadow system, or setting aside a module on one chip of a multi-chip domain controller for shadow algorithm training.

In addition, earlier shadow systems usually ran on L2+ systems, whose sensor types are often limited. Most companies collect data with a 5R1V configuration (five radars and one camera); a slightly more advanced setup may add a single lidar, although domestic companies in or near mass production do not yet have this configuration. Whether data collected with such a sensor configuration can be applied directly to the next generation of high-end automated driving systems is uncertain, because a system with one or a few sensors differs greatly from a richer one in how it anticipates environmental conditions and in what it can execute. The sensor capability of an upgraded high-level system is certainly a step up for control of the whole system. Whether the later system can still use scene data collected at the L2 level, fully or only in part, therefore needs to be redesigned and planned.

3. What criterion should be used to make data backhaul as sound and effective as possible

Shadow mode triggers on the premise that the driver's operation of the vehicle is correct and objective; it assumes that under human driving, the system's judgment of the environment must be inferior to the driver's. But is that really the case? Not always. For example, a driver sees dirt on the road ahead, worries that it will stain the wheels and spoil the car's appearance, and changes lanes to avoid it. The system would not trigger an automatic lane change for this reason. If the driver's control is taken as the standard of correctness, the system must be wrong, and the data acquisition and backhaul triggered at this moment are meaningless or inaccurate. From another point of view, across the whole automated driving control chain, a driver's habitual behavior may be a driving bias, and triggering data acquisition and backhaul on it is really a way to improve the driving experience.

4. Problem localization in shadow mode needs further improvement

Because shadow mode is oriented toward the observable driving end, problems are usually located by tracing back from the execution end. When a problem appears during control execution, the first question is whether the decision-making end is at fault; if it is not, the trace continues back to the trajectory prediction end, and then to the perception end. The perception end is itself a broad concept that covers both raw scene perception and the subsequent fusion system. If scene perception is wrong but the fusion system's robust algorithms prevent the perception error from causing a misjudgment, such abnormal perception scenes need to be screened out separately.

To filter out data for such scenarios, the jumps in the data at each end of the chain have to be recorded continuously, and a large jump between two ends triggers data backhaul; the computation involved is of course very large. At present, the overall perception ability of automated driving systems is still limited. Misperception still leads to miscalculation from time to time, and even correct perception may lead to wrong execution. The shadow system therefore needs finer granularity when collecting "prediction/decision failure" scene data, so that invalid data is neither collected nor sent back, which saves both traffic and storage space.
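One possible reading of this per-stage jump recording is sketched below: each stage logs a comparable quantity over time, and the most upstream stage whose value jumps by more than its limit is reported. The stage list, the logged quantity (range to the lead target), the limits, and the function name `first_jumping_stage` are all assumptions for illustration.

```python
from typing import Dict, List, Optional

# Ordered from upstream to downstream (assumed stage names).
STAGES = ["perception", "fusion", "prediction", "planning"]


def first_jumping_stage(history: Dict[str, List[float]],
                        max_jump: Dict[str, float]) -> Optional[str]:
    """Return the most upstream stage whose logged value jumps between
    two consecutive samples by more than its limit, or None."""
    for stage in STAGES:
        values = history[stage]
        for before, after in zip(values, values[1:]):
            if abs(after - before) > max_jump[stage]:
                return stage
    return None


# Range to the lead target in metres, as seen by each stage over three frames.
history = {
    "perception": [10.0, 10.2, 14.0],   # raw perception jumps by 3.8 m
    "fusion":     [10.0, 10.1, 10.3],   # fusion smooths the error away
    "prediction": [10.0, 10.1, 10.3],
    "planning":   [10.0, 10.1, 10.3],
}
limits = {stage: 1.0 for stage in STAGES}
print(first_jumping_stage(history, limits))  # perception
```

Here the vehicle behaves normally because fusion absorbs the error, yet the perception jump is still found. This is the kind of abnormal perception scene that a trigger based only on the execution end would miss.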

5. Whether the capability to use automated driving data in simulation has been established

After backhaul, the data is used for deep learning and data matching optimization. This requires first building the simulation environment from the scene and then entering the corresponding scene detection parameters into it for algorithm training and optimization. In reality, however, using road test data effectively for simulation is still demanding. Major OEMs, testing agencies, and Tier 1 suppliers either lack this capability or have not yet matured it.

What development problems will the architecture upgrade bring

High-level automated driving has to integrate application scenarios such as vehicle-road collaboration, edge computing, and cloud services, and it needs a degree of scalability, versatility, and autonomous evolution. The current electrical/electronic architecture and software platform architecture struggle to meet these needs, which automotive SOA can address well. SOA originates in the IT field, and the best implementation in the automotive environment should inherit the mature Ethernet-based implementation with high cohesion and low coupling.

As a result, the process of designing a high-level automated driving system based on the SOA architecture focuses on achieving the following functions:

1. Service communication standardization, that is, service-oriented communication

SOME/IP uses the RPC (Remote Procedure Call) mechanism and inherits the client-server model. It lets clients find a server in time and subscribe to the service content they are interested in. A client can access the services a server offers through the request-response and fire-and-forget models, and the server can use notifications to push the content a client has subscribed to, which basically solves the problem of service communication.
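The three interaction patterns named above can be illustrated with a toy in-process model. This is not SOME/IP itself and uses no real SOME/IP library or wire format; the class `ToyService` and all of its method names are hypothetical and exist only to show how the patterns differ from the caller's point of view.

```python
from typing import Callable, Dict, List


class ToyService:
    """In-process stand-in for a server that offers methods and events."""

    def __init__(self) -> None:
        self._methods: Dict[str, Callable[[int], int]] = {}
        self._subscribers: Dict[str, List[Callable[[int], None]]] = {}

    def offer_method(self, name: str, handler: Callable[[int], int]) -> None:
        self._methods[name] = handler

    def request(self, name: str, arg: int) -> int:
        """Request-response: the caller waits for and receives a result."""
        return self._methods[name](arg)

    def fire_and_forget(self, name: str, arg: int) -> None:
        """Fire-and-forget: the call is made, no result is returned."""
        self._methods[name](arg)

    def subscribe(self, event: str, callback: Callable[[int], None]) -> None:
        self._subscribers.setdefault(event, []).append(callback)

    def notify(self, event: str, value: int) -> None:
        """Notification: push a value to every subscriber of the event."""
        for callback in self._subscribers.get(event, []):
            callback(value)


service = ToyService()
service.offer_method("double", lambda x: 2 * x)

received: List[int] = []
service.subscribe("speed_kph", received.append)

print(service.request("double", 21))   # 42
service.fire_and_forget("double", 21)  # no return value is used
service.notify("speed_kph", 30)
print(received)                        # [30]
```

In a real deployment these calls would cross the network between ECUs, with service discovery locating the server first; the toy model leaves all of that out.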

However, SOME/IP, as a communication standard for SOA architectures, has two major drawbacks:

a) Only a relatively basic specification is defined, and application interoperability is difficult to guarantee.

b) It is difficult to cope with big-data, high-concurrency scenarios. Because SOME/IP lacks object serialization capabilities, software interoperability is prone to problems. SOME/IP also does not support shared memory or broadcast-based one-to-many communication, so performance may become an issue in automated driving scenarios.

2. The SOA architecture needs to partition services. Partitioning for the purpose of service reuse and flexible reorganization is the service-oriented reuse and sharing design.

The SOA system and software development process has to be applied to the definition of the vehicle's functional logic, and the architecture leads or takes part in requirements development, function definition, function implementation, subsystem design, component design, and so on. The service-oriented reuse design must run through the whole process and finally be reflected in the function implementation.

Note that service reuse involves cutting up and rebuilding the old system. As the scale grows and new features are added, message-based communication increases, and unexpected situations may start to see long processing and response times, which can cause data access delays. Automated driving systems have extremely high real-time requirements, and this is the biggest limitation on applying SOA.

In addition, when SOA is implemented in software, building the service-based software architecture must fully take into account both the service-oriented communication design and the service-oriented reorganization.

The next generation of high-end automated driving systems will need to answer two questions: where am I, and where am I going? The first relies on map positioning and the second on navigation control. The underlying infrastructure, in turn, is about building service-oriented design capabilities, and this is where SOA architectures come in. How to achieve a breakthrough in the functions of high-level automated driving systems under the new architecture, and raise the overall functional experience and performance to a new level, is what developers need to focus on. Whatever the overall development method, perception performance has to move from quantitative change to qualitative change. Many problems on this road still need to be solved, and they have to be solved one by one.

Reprinted from Yanzhi Intelligent Car. The views in the text are shared for discussion only and do not represent the position of this public account. If there are copyright or other issues, please let us know and we will handle them promptly.
