The history of transportation in China contains two well-known episodes that seem absurd today but are highly revealing:

In 1865, the fourth year of the Tongzhi reign of the Qing Dynasty, the British merchant Durand laid a short railway, about 500 meters long, outside Xuanwu Gate in Beijing. It was China's first railway, and it soon drew gasps from onlookers, who were astonished to see a huge steam locomotive slowly drawing several carriages with no one pulling them.

But unfamiliarity with the steam locomotive also made people afraid. Qing court officials, even more alarmed, broke into a cold sweat and ran to complain to Cixi, claiming that the behemoth Durand had built was an iron dragon that could burrow through heaven and earth and destroy the dragon vein of the Qing Dynasty. Cixi, frightened as well, exclaimed, "This will be the death of my Great Qing!" and immediately ordered it demolished.

Besides this ignorance, there was another reason for opposing railway construction, according to Mao Hongbin, Viceroy of Liangguang: trains would squeeze out the carts and carriages and thereby cut off ordinary people's trade. "Once this (iron) road is opened, it will become a road for foreign trains alone. Chinese carts will find it hard to travel alongside them, let alone withstand their rampage, and the livelihoods of merchants and carriers will inevitably be cut off."

That was the first event.

More than eighty years later, a similar clash between an old means of transport and its replacement took place in Changzhou, Jiangsu Province:

In 1946, Changzhou planned to introduce buses in its urban area, but the city still had 3,000 rickshaw pullers. Urban bus service was bound to threaten their livelihoods, so the 3,000 rickshaw pullers launched a general strike and march.

In October of that year, the strike reached a boiling point. On the afternoon of October 25, a group of pullers on their way to protest at city hall encountered a red-headed Chevrolet bus as they crossed Fuqiao Bridge. The agitated pullers stopped the bus and threw stones at it, and a melee broke out between the two sides. In the chaos, the escorting soldiers opened fire, killing one person on the spot and seriously injuring three, and the local government was forced to suspend urban bus service immediately.

Looking back now, these two events seem hard to understand, but in the age of artificial intelligence their lessons remain profound. First, do not underestimate the public's concerns about, and resistance to, unfamiliar emerging technologies. Second, for a technology to flourish in human society, it must take into account, protect, and even enhance the rights and interests of human beings; it must be people-oriented.

The AI ethics of "development" that SenseTime recently proposed likewise starts from a human perspective.

Unlike earlier approaches to "AI ethics", the ethics of developing AI that SenseTime proposes not only stresses constraining the negative impacts of technology but also emphasizes the positive value of artificial intelligence to society and individuals.

More importantly, SenseTime believes that as AI technology is developed and deployed, "AI ethics" should account for how quickly the industry changes, adopt different governance frameworks at different stages of technological development, and help society develop in a balanced way.

In short: keep pace with the times, put people first, "promote good" and "curb evil".

1. The complexity of AI governance

In recent years, as artificial intelligence has steadily permeated daily life, this remarkable technology has gradually shown the world both its good and its evil sides.

Let's start with the "good" side.

Over the past two years of the COVID-19 pandemic, the widely deployed contactless temperature screening and contactless passage systems have relied on AI perception and recognition technology. AI technologies built into all kinds of hardware and software, such as intelligent image processing, convenient payment, and conversational robots, have also made people's daily lives much easier.

For example, SenseTime has developed smart obstacle-avoidance glasses that give visually impaired people voice prompts about traffic signals and obstacles in their surroundings when they walk outdoors, improving their quality of life.

Artificial intelligence not only delivers very tangible value in practical applications but also has a remarkable impact on academic research. For example, DeepMind recently used machine learning to help mathematicians make progress on two long-standing problems in pure mathematics, work that was published in Nature. There are also many examples of scientists combining AI with basic research in physics, biology, chemistry, and other fields and achieving outstanding results.

At the same time, however, because deep learning algorithms behave as "black boxes" and AI systems can be unstable and hard to control in real-world settings, the harm that misuse or abuse of AI can do to human society should not be underestimated.

Against this backdrop, building an ethical framework for AI has become an unmistakable trend, and various parties have taken on different roles to promote the healthy development of AI technology. According to United Nations statistics, for example, more than 150 reports on AI governance have been published worldwide, and academia has seen a surge of research on emerging topics such as "trustworthy AI", "responsible AI", and "AI for good".

So how should technology companies take responsibility for the sustainable development of AI? As a pioneer in the AI industry, SenseTime has its own answer.

First, should AI technology be developed at all? The answer is undoubtedly yes. As Yang Fan, vice president of SenseTime and chairman of its AI Ethics Committee, put it:

"The simplest, most direct way to solve the ethical problems of technology would seem to be to stop innovating: without technological innovation, many of the problems of digital technology would naturally never arise. But the country must develop, society must progress, and people's quality of life must improve. Developing cutting-edge science and technology matters enormously to families, the nation, and the world. Giving up development out of such concerns would be like refusing to eat for fear of choking."

"Technological development and technological governance are, in essence, a relationship of constraint and balance. Pull the reins too early or too tight, and you hold technology back; tighten them at the right moments as technology evolves, drawing its bottom lines and boundaries, and technology can be developed and applied faster and further. Today AI has left the laboratory, and as general-purpose AI infrastructure such as AI large devices keeps developing, machines will open up more possibilities for us, and ethical governance will matter even more."

Yang Fan also said: "No theory can perfectly explain technology, but we need to understand the boundaries of how it is applied. AI ethical governance serves to avoid the negative impacts of AI technology, steer technology in a positive direction, and promote the sustainable development of companies and the industry; at the same time, it is a social responsibility that the industry's leading companies and all AI practitioners should bear."

Since its founding, SenseTime's mission has not changed: to insist on originality and let AI lead human progress. In other words, as SenseTime deploys AI, the people AI serves should not be limited to a particular group but should include society as a whole.

Yang Fan argues that AI governance is a process of balancing multiple objectives across multiple dimensions. During epidemic prevention, for example, protecting the collective interest has to be weighed against respecting individuals' privacy about where they travel, and health codes have to balance rapidly sorting the population for targeted prevention against the needs of elderly people who struggle with digital products.

Having to balance goals across many dimensions makes AI governance increasingly difficult. To address this, the SenseTime Ethics Committee reviewed mainstream international views on governance together with frontline industry experience and put forward three pillars of AI governance: technical controllability, people-orientation, and sustainable development. Specifically, each pillar comprises:

Sustainable development: protecting the environment, protecting peace, inclusive sharing, open collaboration, social awareness, agile governance...

People-orientation: protecting human rights, protecting privacy, human controllability, fairness and non-discrimination, benefiting humankind...

Technical controllability: verifiable, auditable, lawful, trustworthy, explainable, safe and reliable, open and transparent, accountable...

2. Institutionalizing "technical controllability"

Notably, SenseTime's involvement in "AI ethics" does not stop at paperwork such as initiatives or reports. Through its "key actions", SenseTime was the first to build "AI ethics" governance into the operation of its products and businesses, making it part of the company's management.

In January 2020, SenseTime established its AI Ethics Committee, embedding the concept of "AI governance" in the company's organizational structure.

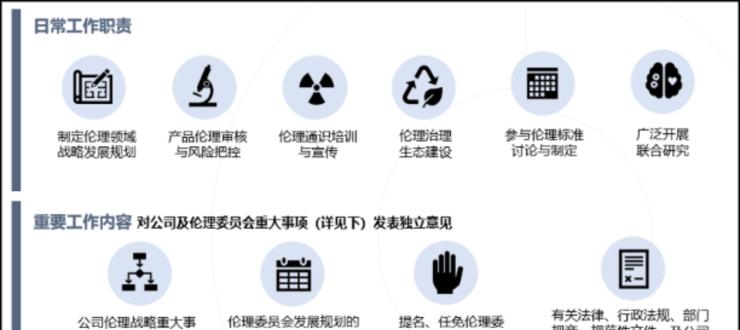

Internally, the committee reviews all of SenseTime's product lines, conducts ethics training for employees to improve their ethical awareness in their daily work, and cooperates with other organizations to jointly carry out research on AI ethics.

Photo note: Introduction to the responsibilities of the SenseTime Ethics Committee

The committee's members include not only core senior staff from within SenseTime but also a number of external experts. To keep the committee's decisions objective and neutral, a mandatory rule requires that external members hold at least one-third of the seats.

In the first half of this year, the committee launched an ethical review system, putting the core governance principle of "technical controllability" into practice through concrete operational checks.

The online system was designed with reference to numerous policies and research results on technology ethics from China and abroad. Organized around three dimensions (data risk, algorithm risk, and social risk), it contains more than ten sections and nearly 30 questions, breaks each project down in detail from the perspectives of respect for human rights, benevolence, fairness, privacy protection, reliability, transparency and explainability, and accountability, and assesses its ethical standing.

Figure: Operation and maintenance of SenseTime's ethical review system

The ethical review is now embedded in the project approval process: reviews are conducted online, and each product under review is assigned a risk level based on its score. Products with high ethical risk are rejected and must suspend development, be taken offline, or be rectified; those that cannot be rectified will not be launched.

Figure: SenseTime's ethical review process
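The article does not publish the system's scoring rules, so the Python sketch below only illustrates the general idea of score-based gating described above: answers grouped under the three named risk dimensions are summed, the total is mapped to a risk level, and high-risk projects are blocked until they are rectified and re-reviewed. Everything beyond the three dimensions is a hypothetical assumption, including the 0 to 4 per-question scale, the two thresholds, and all names (`Answer`, `risk_ratio`, `classify`, `gate`); it is not SenseTime's actual method.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# The three risk dimensions named in the article.
DIMENSIONS = ("data", "algorithm", "social")
# Hypothetical per-question scale: 0 = no risk, 4 = severe risk.
MAX_ITEM_SCORE = 4


@dataclass
class Answer:
    dimension: str   # must be one of DIMENSIONS
    question: str    # hypothetical question text
    risk_score: int  # 0..MAX_ITEM_SCORE


def risk_ratio(answers: list[Answer]) -> float:
    """Return the summed risk score as a fraction of the maximum possible score."""
    if not answers:
        raise ValueError("a review needs at least one answer")
    for a in answers:
        if a.dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {a.dimension}")
        if not 0 <= a.risk_score <= MAX_ITEM_SCORE:
            raise ValueError(f"score out of range: {a.risk_score}")
    total = sum(a.risk_score for a in answers)
    return total / (MAX_ITEM_SCORE * len(answers))


def classify(answers: list[Answer], medium_at: float = 0.3, high_at: float = 0.6) -> RiskLevel:
    """Map the risk ratio to a level; both thresholds are hypothetical."""
    ratio = risk_ratio(answers)
    if ratio >= high_at:
        return RiskLevel.HIGH
    if ratio >= medium_at:
        return RiskLevel.MEDIUM
    return RiskLevel.LOW


def gate(answers: list[Answer]) -> tuple[RiskLevel, bool]:
    """Return the risk level and whether the project may proceed.

    A HIGH result blocks the project; in this sketch it can only proceed
    after it is rectified and a new set of answers is reviewed.
    """
    level = classify(answers)
    return level, level is not RiskLevel.HIGH
```

For example, a project whose three answers score 4, 3, and 4 has a risk ratio of 11/12, so `gate` returns `(RiskLevel.HIGH, False)` and the project would be sent back. Normalizing by the maximum possible score, rather than using a raw sum, keeps the hypothetical thresholds meaningful if the number of questions changes.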

For example, a client once approached SenseTime wanting to build an "AI fortune teller": it would use computer vision to identify body features, palm prints, and other information and automatically predict the user's fortune. Recognizing such features is not technically difficult, but the idea clearly conflicts with SenseTime's values, so even though it could foreseeably have been profitable, the project was not approved.

As Yang Fan puts it: "Only when technology refrains from doing some things can it achieve others." Rejecting a highly profitable AI product because of its risks hits the company's revenue directly, but it is necessary in the long run.

The system now covers all of SenseTime's existing products and newly proposed projects, forming an internal ethical risk-control mechanism that spans a project's whole life cycle, from approval through release to operation. Review results accompany the entire development and release process, and if a product changes significantly at the release stage, it must be reviewed again.

According to SenseTime, since the system launched, 10% of existing products have been ordered to rectify or go offline, and 5% of new projects have been sent back for rectification or rejected, forgoing commercial revenue worth millions of dollars in total.

"It is not enough to have concepts and ideas; AI ethical governance requires both high-level guiding principles and specific, granular evaluation indicators. We built the system to create an AI ethical risk assessment framework based on these indicator dimensions, make the indicators concrete and operational, and support the company's technology governance and risk control," Yang Fan said.

"In practice, the AI ethics management system systematically covers risk identification, assessment, handling, monitoring, and reporting, clarifies each party's risk-control responsibilities across every product's whole life cycle, and makes technology controllable, with rules to follow and evidence to check."

In November, SenseTime received Harvard Business Review's 2021 Ram Charan Management Practice Award for its forward-looking thinking and practice in ethics, becoming the first Chinese company to receive an award from a leading business-school publication for its practice in technology ethics and governance.

3. The AI ethics of "development"

"Technical controllability" is a means, not an end.

Ultimately, the goal of AI companies' technological innovation is not to halt AI research and innovation, but to develop AI in the real world in a healthy and sustainable way that keeps pace with the needs of the times and benefits humanity and society.

This year, alongside ethical review, SenseTime has continued to innovate in AI technology, launching AI infrastructure such as its AI large device and contributing to the industry through both "ethical governance" and "technological innovation".

"Whether in technology itself or in its governance, SenseTime is an open and committed long-termist. AI ethical governance is a topic that requires sustained attention and investment, and the development of AI ethics requires multi-party cooperation and joint exploration."

SenseTime has reportedly partnered with 10 institutions, including Tsinghua University's Institute for AI International Governance, Shanghai Jiao Tong University, Shanghai Artificial Intelligence Laboratory, Shanghai Institute of Science, and the Data Law Alliance, on joint research into agile governance, data security, and the interpretability of AI algorithms. The collaborations advance an average of five research topics each year and have produced more than 10 research reports.

As more and more parties take part, "AI ethics" will no longer be just a slogan but will turn into concrete action, guiding the development of AI and ultimately enabling its full adoption across human society.

Then humans and AI can truly be said to "coexist in harmony". That is the vision of the AI ethics of "development".

Author's Note:

While this article was being written, the U.S. Treasury Department, citing so-called "human rights violations", placed 15 individuals and 10 entities in five countries, including China and Russia, on its sanctions list, SenseTime among them. This is the second time SenseTime has been unjustifiably targeted by the United States since it was added to the Entity List in 2019; notably, the decision was announced on the pricing date of SenseTime's planned Hong Kong IPO. Such a precisely timed blow against SenseTime could hardly be more obvious. This move by the U.S. Treasury Department politicizes normal commercial, scientific, and technological activity and seriously undermines normal market rules and order.

At this point, I recall what Yang Fan, chairman of the SenseTime Ethics Committee, said: "Should we develop AI? Absolutely. The country must develop, society must progress, and people's quality of life must improve. Developing cutting-edge science and technology matters enormously to families, the nation, and the world." May China's hard-tech development flourish!