Behind the evolution of "SenseChat 2.0" stands the current layout of SenseTime's large models

We are experiencing a huge wave of new AI infrastructure.

Within half a year, consensus around large models has spread rapidly beyond a small circle. According to a report released by CITIC, nearly 80 large models with more than 1 billion parameters have been released, half from enterprises and half from scientific research institutions.

As the domestic large model ecosystem gradually takes shape, it has begun to stop simply following OpenAI and to find a path that suits it. The yardstick for a successful large model has likewise shifted from a head-on race over parameter counts to solving real problems in practice.

In April this year, SenseTime first announced the "Daily New SenseNova" large model system and released a number of large AI models and applications, including its self-developed Chinese large language model "SenseChat" (literally "Discussion"). Recently, at the World Artificial Intelligence Conference, SenseTime announced the first major iteration of the "Daily New SenseNova" large model system, with the large language model "SenseChat" upgraded to version 2.0.

It has become stronger, and its role within SenseTime's overall large model layout has become increasingly clear.

A stronger "SenseChat 2.0"

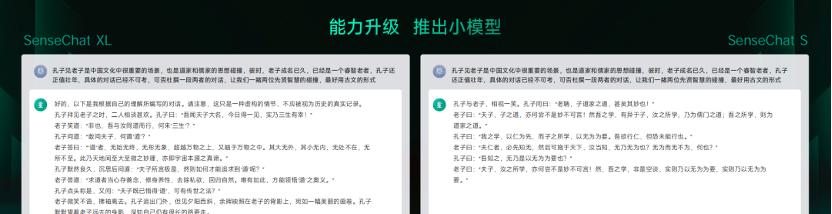

How can the improvement in the abilities of "SenseChat 2.0" be shown intuitively? Xu Li, chairman and CEO of SenseTime, demonstrated it by asking for an imaginary conversation between Lao Tzu and Confucius.

The answer from "SenseChat 2.0" revolves around the "Tao". Confucius questions Lao Tzu, and although Lao Tzu has grasped the Tao, he cannot put it into words for Confucius, so he simply flicks his sleeves and leaves. The resulting dialogue flows smoothly. "SenseChat 2.0" even added a joke to the text:

Confucius said: "I have long heard of your great name, Master. To meet you today is the good fortune of three lifetimes!"

Lao Tzu smiled and said, "Not so. You and I walk the same road, so where would 'three lifetimes' come from?"

As the prompt requested, the whole dialogue is written in a classical literary style. To avoid confusion, "SenseChat 2.0" also states in the first sentence of its answer that "this is only a fiction and should not be regarded as a true record of history".

When "SenseChat 1.0" was first launched, live demonstrations already showed its strong multi-turn dialogue and human-machine co-creation capabilities. Three months later, "SenseChat 2.0" has improved further in knowledge and information accuracy, logical judgment, context understanding, and creativity.

For example, you can use "SenseChat 2.0" to plan a trip and instruct it to present the plan as a table:

Or test it on the idea that "everything your girlfriend says is right":

Beyond reading what a girlfriend really means, "SenseChat 2.0" can also pick up on irony or a sarcastic, passive-aggressive tone:

What has happened to "SenseChat" over the past three months can be seen from the results of a few exams. On three authoritative large language model evaluation benchmarks used around the world (MMLU, AGIEval, and C-Eval), "SenseChat 2.0" has outperformed ChatGPT.

In addition, some people may have noticed that the earlier demo photo of the dialogue between Lao Tzu and Confucius showed a split-screen demonstration of two versions, XL and S. After the upgrade, "SenseChat 2.0" offers large models of different parameter sizes for customers to choose from, and the version with the fewest parameters can even run on mobile devices.

In terms of language, "SenseChat 2.0" has added new languages such as Arabic and Cantonese, and it supports interaction across multiple languages such as Simplified Chinese, Traditional Chinese, and English. Its support for ultra-long text has also been increased from 2k to 32k, allowing it to understand the context more fully.

For SenseTime, a large model vendor oriented toward business (ToB) customers, the quality of the large model itself is only the starting point. Where the contest is finally won or lost is in how enterprise customers shape the large model around their own needs, and how the model then iterates stably and closes in on real pain points step by step.

Opening up knowledge base fusion capabilities

Once SenseTime has trained a "SenseChat 2.0" with strong understanding, dialogue, reasoning, and other skills, enterprise customers can use their own accumulated knowledge to turn the large model into a "professional" that serves their business well.

Doing this efficiently is an important engineering problem.

SenseTime's "SenseChat 2.0" has added a knowledge base fusion interface, allowing enterprises to quickly give the model professional knowledge and capabilities without waiting for the base large model to be iteratively upgraded. Once a knowledge base is integrated, the model can update, understand, and acquire knowledge more quickly, and the customer's cost of training the model is greatly reduced.

Wang Xiaogang, co-founder and chief scientist of SenseTime, said: "With the knowledge base, we can summarize the relevant knowledge in a field relatively simply and conveniently, without it having to enter our model itself." Because the information is more accurate, this also addresses the problem of hallucinations.
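One common way to let a model use enterprise knowledge that stays outside the model is to retrieve relevant passages from a knowledge base and place them in the prompt. The sketch below illustrates that general idea only. It is not SenseTime's knowledge base fusion interface, whose details are not described here, and the function names, the word-overlap scoring, and the sample insurance notes are all hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query, passage):
    """Count how many query tokens also occur in the passage (toy relevance score)."""
    query_counts = Counter(tokenize(query))
    passage_counts = Counter(tokenize(passage))
    return sum(min(count, passage_counts[token]) for token, count in query_counts.items())


def retrieve(query, knowledge_base, top_k=2):
    """Return up to top_k passages that share at least one token with the query."""
    scored = [(overlap_score(query, passage), passage) for passage in knowledge_base]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in relevant[:top_k]]


def build_prompt(query, knowledge_base, top_k=2):
    """Prepend retrieved passages to the question; the model itself is never retrained."""
    notes = "\n".join(f"- {passage}" for passage in retrieve(query, knowledge_base, top_k))
    return f"Answer using only the reference notes below.\n{notes}\n\nQuestion: {query}"


if __name__ == "__main__":
    # Hypothetical enterprise notes, invented purely for illustration.
    insurance_notes = [
        "The premium plan covers dental care up to 500 yuan per year.",
        "Office hours are 9 to 18 on weekdays.",
        "Claims must be filed within 30 days.",
    ]
    print(build_prompt("What does the premium plan cover?", insurance_notes))
```

In a sketch like this, updating what the model "knows" only requires editing the list of notes, which mirrors the point that knowledge can be added without waiting for the base model to be upgraded.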

Digital humans as tools for efficiency

Alongside the comprehensive upgrade of "SenseChat 2.0", the AIGC platform capabilities in the "Daily New SenseNova" large model system are also continuing to advance, and they have improved by leaps after integrating large language model capabilities.

For example, the text-to-image creation platform "Second Painting" has been upgraded to version 3.0, with model parameters increased to 7 billion, and the detail in generated images now reaches the level of professional photography. To ease the headache of writing prompts, "SenseChat 2.0" provides automatic prompt expansion for "Second Painting 3.0". This means that users need only a few simple hints to get a detailed image.

In the field of digital humans, SenseTime's digital human video generation platform "Ruying" has also been upgraded to version 2.0, which improves the fluency of speech and lip movement by more than 30% and can produce 4K video. At the press conference, economist Ren Zeping, Master Yan Shen, and Xu Li himself appeared as digital humans, with results realistic enough to be mistaken for the real people.

Among the scenarios where large models are put into practice, digital humans are a very important carrier, and the recently popular digital human live streaming is a typical example. Live streaming, together with short video, is also one of the scenarios customers focused on most during the three months of testing and public beta of "Ruying".

Luan Qing, general manager of SenseTime's digital entertainment business unit, said that within the AIGC framework, "SenseChat 2.0" can take on copywriting and script creation for short videos and live streams. Whether "Ruying 2.0" can keep up with trends in how it communicates also depends on the language model capabilities of "SenseChat 2.0" learning from new short-video corpora.

Beyond short video and live streaming scenarios, "Ruying 2.0" is quickly moving into all walks of life.

For example, in the insurance industry, every insurance agent needs to promote new products or produce other personalized, service-oriented content for customers, and "Ruying 2.0" can stand in for the agent to deliver personalized content and services on a customer's birthday or when a financial product is released. In the education industry, "Ruying 2.0" has begun helping teachers at leading vocational education platforms in China produce teaching materials, meeting internal demand for video production.

"The digital human is a typical efficiency tool within the enterprise." Luan Qing said.

As an AIGC creation platform, "Ruying" will continue to deepen its work in video generation. Luan Qing attributes this to the fact that content creation is undergoing a dimensional shift from text and pictures to video.

Towards multimodality

Because pictures and video make up a very large share of the information in the real world, far exceeding language, the need to understand the real world means that foundation models will inevitably move toward multimodality. A first glimpse of this can already be seen in "SenseChat 2.0".

In addition to text, "SenseChat 2.0" can analyze image and video content.

For example, as shown in the figure above, "SenseChat 2.0" can identify specific objects in a photo of a messy desk and, drawing on the characteristics of each item, answer an open-ended, planning-style question such as "what do you do when you feel hot". Or, after seeing a photo of a menu, it can help the user put together an order within a limited budget.

SenseTime entered the field of AI through computer vision research and has already lived through one wave of AI, which makes it all the more convinced that this wave of large models is a real opportunity.

Today's large model research is based on the Transformer network architecture. "SenseTime began large model research in 2019, and at that time it was using this route to do vision," said Wang Xiaogang, co-founder and chief scientist of SenseTime. In his view, some standards in vision and natural language are gradually converging today: "As we develop in the direction of multimodality, language and vision are beginning to integrate more deeply, which reflects a relatively strong accumulation and ability in this regard."

Many application scenarios we encounter in real life, such as autonomous driving and robotics, must rely on multimodality. "However, multimodal data and some tasks are often not easy to obtain, and require deep industry accumulation, which is also SenseTime's advantage," Wang Xiaogang said.

At this year's World Artificial Intelligence Conference, three months after its first public debut, SenseTime's "Daily New SenseNova" large model system was comprehensively upgraded and opened to enterprise users. What many people did not notice is that SenseTime has also worked with the Shanghai Artificial Intelligence Laboratory on the "Scholar" multimodal large model. Whether SenseTime can be the first to find the key to winning on the road to multimodality is worth watching.
