Reporting by XinZhiyuan

Source: Specialized

【Introduction to New Zhiyuan】The latest review paper on the interpretability of reinforcement learning.

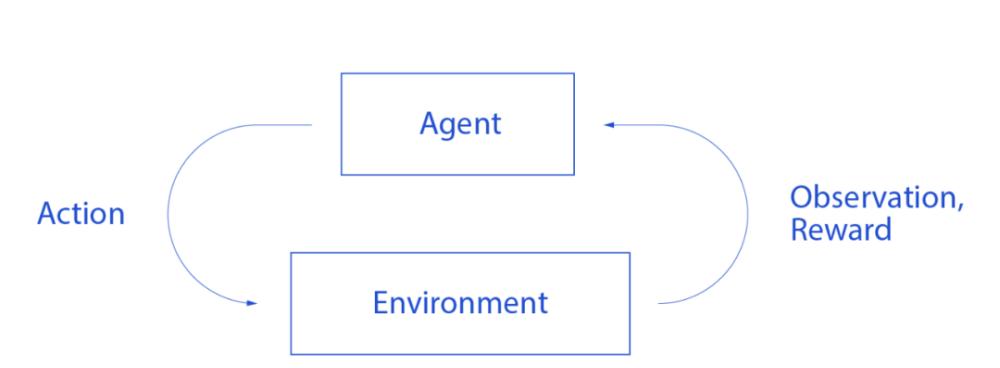

Reinforcement learning, a technique for discovering optimal behavioral strategies from trial and error processes, has become a common method for solving environmental interaction problems.

However, as a class of machine learning algorithms, reinforcement learning also faces the common problem in the field of machine learning, that is, it is difficult to be understood. The lack of interpretability limits the application of reinforcement learning in safety-sensitive fields such as medical treatment and driving, and leads to the lack of universally applicable solutions for reinforcement learning in environmental simulation, task generalization, and other issues.

To overcome this weakness in reinforcement learning, a large number of studies have emerged on explainable reinforcement learning (XRL).

However, there is a lack of consensus among academics about XRL. Therefore, this paper explores the fundamental problems of XRL and reviews the existing work. Specifically, this paper first explores the parent problem, the interpretability of artificial intelligence, which summarizes the existing definitions of the interpretability of artificial intelligence; secondly, constructs a theoretical system in the field of interpretability, thereby describing the common problems of XRL and artificial intelligence interpretability, including defining intelligent algorithms and mechanical algorithms, defining the meaning of interpretation, discussing the factors affecting interpretability, and dividing the intuitiveness of interpretation; and then, according to the characteristics of reinforcement learning itself, the three unique problems of XRL are defined, namely environmental interpretation After that, the existing methods are systematically classified and the latest progress of XRL is reviewed; finally, the potential research directions in the field of XRL are prospected.

http://www.jos.org.cn/jos/article/abstract/6485

Artificial Intelligence (AI) and machine learning (ML) have made breakthroughs in computer vision[1], natural language processing [2], agent strategies[3] and other research fields, and have gradually integrated into human life. Although ML algorithms have good performance for many problems, due to the lack of interpretability of the algorithms, the actual use of the model is often questioned[4][5], especially in safety-sensitive applications, such as autonomous driving, medical treatment, etc. The lack of interpretability has become one of the bottlenecks in machine learning.

Reinforcement Learning (RL) has been shown to be suitable for complex environmental interaction problems[6]-[8], such as robot control[9], game AI[10], etc. However, as a kind of method of machine learning, RL also faces the problem of lack of interpretability, which is mainly manifested in the following four aspects:

(1) Applications in security-sensitive areas are limited. Due to the lack of interpretability, the reliability of RL strategies is difficult to guarantee, and there are security risks. This is a problem that is difficult to ignore in safety-sensitive tasks such as medical treatment, driving, etc. Therefore, in order to avoid the dangers posed by unreliable models, RL is mostly limited to assisting human decision-making in safety-sensitive tasks, such as robot-assisted surgery[11], assisted driving[12], etc.;

(2) Difficulty in learning real-world knowledge. Although current RL applications perform well in some simulation environments, such as OpenAI gym[13], these simulation environments are mainly simple games, which are quite different from the real world. In addition, RL applications can be difficult to avoid overfitting of the environment. When overfitting occurs, the model learns the context of the environment rather than the real knowledge. This leads to the dilemma that, on the one hand, training RL models in the real world is usually expensive, and on the other hand, it is difficult to determine that the model trained in a virtual environment has learned the real law.

(3) Difficulty in generalizing strategies for similar tasks. RL policies are often strongly coupled to environments and are difficult to apply to similar environments. Even in the same environment, small changes in environmental parameters can greatly affect model performance. This problem affects the generalization ability of the model and makes it difficult to determine the performance of the model in similar tasks.

(4) It is difficult to deal with the security risks of the anti-attack. Adversarial attack[14] is an attack technique that generates adversarial samples by adding minor malicious disturbances to the input of the model. For people, the adversarial sample does not affect the judgment, or even is difficult to detect, but for the model, the adversarial sample will cause the output of the model to be greatly biased. Adversarial attacks are extended from deep learning to RL[15][16] and become a security risk for RL calculations. The effectiveness of the counter-attack further exposes the lack of explainability of RL, and further shows that the RL model has not learned the real knowledge.

The explanation is important for both the designer and the user of the model. For the designer of the model, the explanation can reflect the knowledge learned by the model, so that it is convenient to verify whether the model has learned robust knowledge through human experience, so that people can efficiently participate in the design and optimization of the model; For expert users in a specific field, the internal logic of the provided model is explained, and when the model performs better than the person, it is convenient to extract knowledge from the model to guide the person's practice in the field. For ordinary users, explain the reasons for the decision to present the model, so as to deepen the user's understanding of the model and enhance the user's confidence in the model.

Explainable Reinforcement Learning (XRL), or explainable reinforcement learning, is a sub-problem of Explainable Artificial Intelligence (XAI) that is used to enhance people's understanding of the model and optimize model performance, thereby solving the above four types of problems caused by the lack of explainability. There are commonalities between XRL and XAI, and XRL has its own uniqueness.

On the one hand, XRL and XAI have in common. First, the object of explanation is an intelligent algorithm rather than a mechanical algorithm. Mechanical algorithms, such as sorting, lookup, etc., are characterized by complete inputs, fixed solutions, and well-defined solutions. However, because of the incompleteness of the input and the uncertainty of the solution, the intelligent algorithm must find a better solution in the solution space; Secondly, people and models are two key entities that face each other directly. Unlike other techniques, the interpretability approach focuses on people's understanding of the model. Due to the lack of understanding of a large number of regulations and confusing data, the interpretation is usually an abstraction of the internal logic of the model, and this process must be accompanied by the simplification of the model strategy. The difficulty is how to ensure the consistency of the interpretation with the logic of the model body when providing an explanation to the person; Finally, the difficulty of interpretation is relative, and it is determined by the two factors of problem scale and model structure, and these two factors are transformed into each other under certain conditions. For example, simple structure models (such as decision trees, Bayesian networks, etc.) can usually visually show the logical relationship between input and output, but in the face of a huge model composed of a large number of simple structures, its intricate logical relationships still lead to the overall unintelligible model. At the same time, although structurally complex models (such as neural networks) are often difficult to understand, when the model is extremely reduced (such as collapsing the neural network into a composite function with a few variables), the model itself can still be understood.

On the other hand, XRL also has its own uniqueness. Reinforcement learning problems consist of three key factors: environment, task, and agent strategy, so these three key factors must be considered at the same time to solve the XRL problem. Since the development of XRL is still in its infancy, most of the methods are directly inherited from the study of XAI, resulting in existing research focusing on the interpretation of agent strategies, that is, explaining the motivation of agent behavior and the correlation between behavior. However, the lack of understanding of the environment and tasks makes some key problems impossible to solve: the lack of understanding of the environment makes people lack understanding of the internal laws of the environment when faced with complex tasks, resulting in the abstraction of the environmental state to ignore favorable information, making it difficult for the agent to learn the real law; The lack of task interpretation makes the correlation between the task objective and the process state sequence unclear, which is not conducive to the decoupling of the agent strategy and the environment, and affects the generalization ability of the reinforcement learning agent strategy in a similar task or dynamic environment. Therefore, there is a strong correlation between the interpretation of the environment, tasks and strategies, which is an inevitable problem for the implementation of reinforcement learning interpretation.

At present, XRL has become an important topic in the field of AI, although researchers have done a lot of work to improve the interpretability of reinforcement learning models, but the academic community still lacks a consistent understanding of XRL, resulting in the proposed method is difficult to compare. To solve this problem, this article explores the fundamental problems of XRL and summarizes the existing work. First of all, this paper starts from XAI and summarizes its general views as a basis for analyzing XRL problems. Then, the common problems of XRL and XAI are analyzed, and a theoretical system in the field of interpretability is constructed, including defining intelligent algorithms and mechanical algorithms, defining the meaning of interpretation, discussing the factors affecting interpretability, and dividing the intuitiveness of interpretation. Secondly, it explores the uniqueness of XRL problems and proposes unique problems in three XRL areas, including environmental interpretation, task interpretation, and strategy interpretation. Subsequently, the research progress in the existing XRL field is summarized. Existing methods will be classified on the basis of the technical category and the effect of interpretation, and for each classification, the properties of each type of method are determined based on the time the explanation was obtained, the scope of the explanation, the degree of interpretation, and the unique problems of the XRL; Finally, the potential research directions in the field of XRL are looked forward, focusing on the interpretation of the environment and tasks, and the unified evaluation criteria.

1 Summary of the point of view on the interpretability of artificial intelligence

The study of XRL cannot be separated from the foundations of XAI. On the one hand, XRL is a subfield of XAI, and its methods and definitions are closely related, so the existing research on XRL has extensively drawn on the results of XAI in other directions (such as vision); on the other hand, XRL is still in its infancy, and there is little discussion about its specificity, while for XAI, researchers have conducted extensive research and discussion for a long time[17] -[24], which has profound reference significance. Based on the above reasons, this paper explores the problem of explanatoryity from the perspective of XAI, and collates the consensus of the academic community on XAI as the basis for XRL research.

Although scholars have defined XAI from different perspectives, they guide a class of research in specific situations. However, the lack of a precise and uniform definition has led to differences in the academic community's understanding of XAI. This article summarizes the definitions related to XAI and divides them into two categories: metaphysical concept description and metaphysical concept description.

Metaphysical conceptual descriptions use abstract concepts to define interpretability[25] -[28]. These documents use abstract words to describe interpretability algorithms, such as trustworthy, reliability, etc. Credibility means that people trust the decisions made by the model with strong confidence, and reliability means that the model can always maintain its performance in different scenarios. Although such abstract concepts are not precise enough and can only produce intuitive explanations, they can still enable people to accurately understand the goals, objects and functions of interpretability and establish intuitive cognition of interpretability. These concepts suggest that interpretability algorithms have two key entities, namely people and models. In other words, interpretability is a model-oriented, human-targeted technology.

Metaphysical conceptual descriptions are defined from the point of view of philosophy, mathematics, etc., based on the practical meaning of interpretation. For example, Páez et al. [17] argue from a philosophical point of view that the understanding produced by interpretation is not exactly equivalent to knowledge, and that the process of understanding is not necessarily based on reality. We believe that interpretation exists as a medium that enhances one's understanding of a model by presenting its true knowledge or by constructing virtual logic. At the same time, people's understanding of the model does not have to be based on a complete grasp of the model, but only requires mastering the main logic of the model and being able to make cognitive predictions about the results. Doran et al.[29] argue that interpretability systems allow people to not only see, but also study and understand the mathematical reflections between model inputs and outputs. In general, the essence of an AI algorithm is a set of mathematical mappings from input to output, and interpretation is to show such mathematical mappings in a way that humans can understand and study. While mathematical mapping is also a way for people to describe the world, for complex mathematical mappings, such as high-dimensional, multilayer nested functions for representing neural networks, one cannot relate them to the intuitive logic of life. Tjoa et al. [19] argue that interpretability is used to interpret decisions made by algorithms, reveal patterns in the mechanisms in which algorithms operate, and provide coherent mathematical models or derivations for systems. This interpretation is also based on mathematical expressions, reflecting that people understand models more through their decision-making patterns than on mathematical reproducibility.

Some of the ideas differ slightly from the above literature, but they are still instructive. For example, Arrieta et al.[21] argue that interpretability is a passive feature of a model, indicating the extent to which the model is understood by a human observer. This view regards the interpretability of the model as a passive feature, ignoring the possibility of the model actively proposing explanations for the sake of greater explainability. Das et al.[23] argue that interpretation is a way to validate AI agents or AI algorithms. This view tends to focus on the results of the model, the purpose of which is to ensure consistent performance of the model. However, this description ignores the fact that the model itself means knowledge, and interpretability is not only a verification of the results of the model, but also helps to extract knowledge from the model that people have not yet mastered, and promotes the development of human practice. Although there are small discrepancies, the above perspective also raises a unique perspective, for example, the interpretability of the model can be regarded as a feature of the model, and the evaluation of the performance of the model is an important function of interpretation.

While there are many definitions of XAI, the basic concept of XAI in academia as a whole remains consistent. This paper attempts to extract the commonalities as a theoretical basis for studying the XRL problem. Through the analysis of the above literature, we summarize the academic consensus on XAI:

(1) Man and model are two key entities directly confronted by interpretability, and interpretability is a technology that takes the model as the object and the person as the target;

(2) Interpretation exists as a medium of understanding, which can be a real thing, an ideal-constructed logic, or both, so that people can understand the model;

(3) People's understanding of the model does not need to be based on a complete mastery of the model;

(4) Accurately reproducible mathematical derivation is irreplaceable and explainable, and people's understanding of models includes perceptual and rational cognition;

(5) Interpretability is a characteristic of the model that can be used to verify the performance of the model.

2 Common problems between the interpretability of reinforcement learning and the interpretability of artificial intelligence

Based on a summary of the definition of XAI, this section discusses the common problems faced by XRL and XAI. Because of the strong coupling between XRL and XAI, this section applies both to XAI and to XRL.

2.1 Definition of intelligent algorithms and mechanical algorithms

The object of interpretability is an intelligent algorithm, not a mechanical one. The mechanical algorithms in traditional cognition, such as sorting, looking, etc., have fixed algorithm programs in the face of determined task goals. Reinforcement learning, as an intelligent algorithm, finds the optimal strategy in the process of dynamically interacting with the environment and maximizes the rewards. Delineating intelligent and mechanical algorithms can be used to determine what is being interpreted and thus answer the question "what needs to be interpreted". On the one hand, there are differences between intelligent algorithms and mechanical algorithms, and interpretation is only necessary when facing intelligent algorithms; on the other hand, even for reinforcement learning, there is no need to explain all its processes, but should be explained for its parts with intelligent algorithm characteristics, such as action generation, environmental state transfer, etc. Therefore, before discussing the problem of interpretability, it is necessary to distinguish between intelligent algorithms and mechanical algorithms.

This article defines "full knowledge" and "full modeling" according to the degree to which the algorithm acquires known conditions and the completeness of modeling:

Complete knowledge: sufficient knowledge of the task is known to be valid, with the conditions to obtain an optimal solution by mechanical processes;

Full modeling: Complete problem modeling with the computing power required to complete the task;

Complete knowledge is a prerequisite for determining the optimal solution mechanically. For example, to solve the coefficient matrix rank of the linear equation system, full knowledge means that the rank of its augmented matrix is greater than or equal to the rank of the coefficient matrix, at this time according to the current knowledge, to obtain a definite solution or determine its no solution; full modeling means the full use of existing knowledge, in other words, full modeling from the modeler's point of view, indicating the ability to solve the task (including the design ability of the programmer and the hardware computing power) to use all knowledge. For example, in a 19×19 Go game, there is a theoretical optimal solution, but there is not yet enough computing power to obtain the optimal solution in a limited time.

Based on the above definition of full knowledge and full modeling, this paper further proposes the concept of "task complete" to determine the boundary between mechanical and intelligent algorithms:

Task Complete: Full knowledge and fully modeled specific tasks.

The task must be fully modeled with full knowledge. After the conditions for the task are complete, the advantages and disadvantages of the algorithm depend only on the modeling method and the actual needs of the user. The full definition of the task takes into account both knowledge and modeling (Figure 1).

The concept of task complete can be used to distinguish between mechanical and intelligent algorithms. Mechanical algorithms are task-accomplished, specifically, algorithms are known to be sufficiently knowledgeable and have been modeled without simplification. At this point, the algorithm has the conditions to obtain the optimal solution, so the process of the algorithm is deterministic and the obtained solution is predictable. For example, classical sorting algorithms, traditional data queries, 3×3 tic-tac-toe chess game algorithms, etc. are all mechanical algorithms. Intelligent algorithms are not complete tasks, which means that the algorithms do not have sufficient knowledge or take a simplified approach to modeling. Intelligent algorithms cannot directly obtain the optimal solution, and usually look for a better solution in the solution space. Such as algorithms based on greedy strategies, linear regression methods, 19×19 traditional Go strategies, machine learning algorithms, etc.

There may be two things that lead to incomplete tasks, namely incomplete knowledge and incomplete modeling. In the case of incomplete knowledge, the algorithm cannot directly determine the optimal solution, so it can only approximate the optimal solution in the solution space. At this point, the actual role of the intelligent algorithm is to select the solution in the solution space. The factors that lead to incomplete knowledge are usually objective, such as the state of the environment cannot be fully observed, the task goal is unpredictable, the task evaluation index is unknowable, the task is always unknowable, etc.; in the case of incomplete modeling, the algorithm usually ignores some knowledge, resulting in the algorithm process not making full use of knowledge, so that the optimal solution cannot be obtained. The reasons for incomplete modeling have objective and subjective aspects, objective reasons such as modeling deviations, incomplete modeling, etc., subjective reasons include reduced hardware requirements, model speed and so on. In reinforcement learning, not all processes are characterized by incomplete tasks, so only some need to be explained, such as strategy generation, environmental state transfer, etc.

2.2 Definition of "Interpretation"

In Chinese dictionaries, interpretation has the meaning of "analyze and clarify". This is not only in line with the understanding of the word in life, but also close to the meaning of "interpretation" in interpretability studies. However, when it comes to interpretability studies, this meaning appears broad. We hope to combine the understanding of interpretability to refine the meaning of "interpretation" and make it more instructive. Take the reinforcement learning model, for example, which learns the strategy for maximizing rewards, which contains implicit knowledge between the environment, rewards, and agents, while the XRL algorithm explicitly expresses this implicit knowledge. This article treats multiple pieces of knowledge as a set, called a knowledge system, and defines "interpretation" as follows from the perspective of the relationship between knowledge systems:

Explanation: Concise mapping between knowledge bodies. Concise mapping is the expression of the target knowledge without introducing new knowledge;

Specifically, interpretation is the process of converting an expression based on the original knowledge system into an expression of the target knowledge system, using only the knowledge of the target knowledge system without introducing new knowledge. The purpose of the XRL algorithm is to produce an explanation so that the original knowledge system can be concisely expressed by the target knowledge system. In XRL, the original body of knowledge usually refers to the reinforcement learning model, while the target body of knowledge usually refers to the cognition of the person, and the model and the person are the two key entities of interpretability. This article views the original knowledge system as a collection of multiple meta-knowledge and their inferences. to represent meta-knowledge, to represent a body of knowledge, then . Assuming that the knowledge acquired by the agent belongs to the knowledge system, and the knowledge that humans can understand belongs to the knowledge system, then interpretation is the process of transforming the knowledge system into a knowledge system Expression. Concise mapping is necessary for interpretation, and non-concise mapping may make it difficult for the interpretation itself to be understood, which in turn makes the interpretation itself incomprehensible (see 2.3).

In the process of transforming the expression of knowledge, the knowledge to be interpreted may not be fully described through the target knowledge system, and only part of the knowledge can be interpreted. This article uses the concepts of "full explanation" and "partial explanation" to describe this situation:

Fully interpreted: The knowledge to be interpreted is fully expressed by the target knowledge system. Among them, the knowledge to be interpreted belongs to the target knowledge system is a necessary condition;

Partial interpretation: The part of the knowledge to be explained is expressed by the target knowledge system.

Specifically, full and partial explanations describe the inclusions between knowledge systems (Figure 2). Full interpretation is possible only if the body of knowledge to be interpreted is fully contained in the target body of knowledge, otherwise only partial interpretation can be made. In XRL, full interpretation is often not necessary.

On the one hand, the boundary between the knowledge system to be interpreted and the target knowledge system is difficult to determine, resulting in high difficulty and cost of complete interpretation; on the other hand, the realization of the interpretation of the model usually does not need to be based on a complete grasp of the model. Therefore, partial interpretation is the method used in most interpretability studies, that is, only the main decision logic of the algorithm is described.

2.3 Factors Influencing Interpretability

One view is that the traditional ML (RL as a subset) method is easy to explain, and the introduction of deep learning has made explainability short, making ML difficult to interpret, so the essence of ML interpretation is the interpretation of deep learning [21]. This is contrary to the perception of the field of interpretability [28]. This view focuses only on models and ignores man's place in explainability. For man, even a theoretically understandable model, when scaled to a certain extent, still leads to the overall incomprehensibility. The factors influencing interpretability are defined in this article as follows:

Transparency: the degree of conciseness of the structure of the model to be explained;

Model size: the amount of knowledge contained in the model to be interpreted and the degree of diversification of knowledge portfolios;

This article argues that interpretability is a comprehensive description of model component transparency and model scale. Transparency and model size are the two main factors that affect interpretability. Specifically, strong interpretability means high transparency and low complexity, while a single factor, such as high complexity or low transparency, will result in weak interpretability of the model (Figure 3).

In different contexts, the word "transparent" has different meanings. For example, in a software architecture, transparency refers to the degree of abstraction of the underlying process, meaning that the upper-level program does not need to focus on the underlying implementation. Similarly, transparency has different meanings in the field of interpretability, such as the literature [26][27] considering transparency to be the degree to which a model can be understood, equating transparency with interpretability. Taking reinforcement learning as an example, reinforcement learning algorithms based on value tables are usually more interpretable at a certain scale, while the use of deep learning fitted value tables is less interpretable, because the process of generating strategies by querying value tables is in line with people's intuitive understanding, but the neural network propagation process can only be accurately described mathematically, and it is less transparent for people. However, this thinking focuses on the infrastructure in which the model is built, ignoring the difficulty that model size makes interpretation and ignoring the goal of interpretation— people. Therefore, to highlight the impact of model size on interpretation, we only narrowly understand transparency as the degree of conciseness of the structure of the model to be interpreted.

Model size measures the difficulty of interpretation in terms of people's ability to understand. Specifically, assuming that the knowledge in the model is composed of a series of meta-knowledge, the model size represents the degree of diversification of the combination between the total amount of meta-knowledge and the knowledge, and the difficulty of interpretation depends to some extent on the size of the model, and when the model scale exceeds a specific range (human comprehension ability), the model will not be understood. For example, linear additive models, decision tree models, Bayesian models, because the calculation process is concise, allows us to easily understand what results the model is based on, so it is considered easy to understand. However, as the scale of the model grows larger, the logic between the various factors inevitably intertwines and becomes intricate, making it ultimately impossible for us to grasp its master-slave relationship. For large-scale models in simple structures (such as decision tree branches), although all the results are theoretically traceable, when the scale of the model has exceeded the ability of human understanding, the system as a whole will still be unexplainable.

2.4 Degree of interpretability

There is a certain similarity between the human learning process and the reinforcement learning process, so if the human brain is regarded as the most advanced intelligent model at present, then the human understanding of the model is not only the intuitive feeling of the human model, but also the comprehensive evaluation of the reinforcement learning model by an advanced agent. However, an incomprehensible model cannot be effectively evaluated, so the interpretation of the model becomes the medium through which a person understands the model. As a medium between people and models, interpretability arithmetic has two characteristics of mutual balance to varying degrees: proximity to the model and proximity to human perception. Specifically, different interpretations focus more on accurate descriptive models, while others focus more on consistency with human perception. Based on this concept, this article divides interpretability into the following three levels:

(1) Mathematical expression: Explain the model through idealized mathematical deduction. Mathematical expression is the expression of a simplified model using mathematical language. Since the reinforcement learning model is based on mathematical theory, the model can be accurately described and reconstructed through mathematical expression. Although mathematical theoretical systems are an important way for people to describe the world, there are large differences between them and people's general intuition. Taking deep learning as an example, although there are a large number of articles that demonstrate its mathematical rationality, deep learning methods are still considered unexplainable. Thus, mathematical representations can describe models at the microscopic (parametric) level, but are difficult to migrate to human knowledge systems;

(2) Logical expression: Interpreting a model by transforming it into an explicit logical law. Logical expression is the extraction of the principal strategy in the model, that is, ignoring its subtle branches and highlighting the principal logic. On the one hand, logical expression retains the main strategy of the model, so it is similar to the real decision-making result of the model, and the interpretation itself can partially reproduce the decision-making of the model; on the other hand, the logical expression simplifies the model and conforms to human cognition. Logical expression is a more intuitive explanation, but it requires people to have knowledge in a specific field, which is an explanation for human experts, and it is not intuitive enough for the average user;

(3) Perceptual expression: by providing a regular interpretation model that conforms to human intuitive perception. Perceptual expressions are based on models that generate solutions that conform to human perception and are therefore easy to understand because they do not require a person to have knowledge in a specific domain. For example, visualization of key inputs, example comparisons, and other forms of interpretation fall under the category of perceptual expression. However, perceptual representation is usually a great reduction in model strategies, because the model's decisions cannot be reproduced, resulting in only explaining the rationality of the decision.

Among the three levels of interpretability, mathematical expression, as the first level, is also the theoretical basis for building reinforcement learning algorithms. In the case of known parameters of the model, mathematical expressions can usually be more accurate inferences of the results of the model, however, mathematical rationality does not mean that it can be understood; logical expressions are between mathematical expressions and perceptual expressions, which is an approximation of model strategies, but the explanations produced by logical expression methods usually require users to have expertise in specific fields; perceptual expressions screen important factors in model decision-making and present them in a clear, concise form, although the results are easy to understand. However, the ability to refactor the strategy is no longer available. All in all, different interpretations are balanced between approaching the model and approaching human perception, and it is difficult to reconcile.

3 The explanatory nature of reinforcement learning is unique

Unlike other ML approaches, RL problems consist of three key factors: environment, task, and agent. Wherein, the environment is a given black box system with certain internal laws; the task is the objective function of the agent to maximize its average reward; and the strategy is the basis of the agent's behavior and the correlation between a series of behaviors. Based on the three key components of reinforcement learning, this paper summarizes the three unique problems of XRL, namely environmental interpretation, task interpretation, and strategy interpretation. There is a close correlation between the three unique problems, which are inseparable from the entire reinforcement learning process and are directly faced by the realization of reinforcement learning explanations.

4 Research status of explanatory nature of reinforcement learning

Due to the wide range of fields involved in XRL, scholars have started from the perspective of various fields, resulting in large differences in the proposed methods. Therefore, this section summarizes the related methods in two steps. First, according to the technical categories and the presentation of interpretation, the existing methods are divided into five categories: visual and language-assisted interpretation, strategy imitation, interpretable model, logical relationship extraction and strategy decomposition. Then, on the basis of the general classification method (i.e., the time to obtain the explanation, the scope of the explanation), combined with the classification basis proposed herein (that is, the degree of interpretation, the key scientific problems faced), the properties of different category methods are determined.

In the field of interpretability, classification is usually based on two factors: the time when explanation was obtained and the scope of the explanation[31]. Specifically, according to the time of obtaining the explanation, the interpretability method is divided into intrinsic (intrinsic) interpretation and post-hoc (post-hoc) interpretation. Inherent interpretation limits the expression of the model so that the model produces interpretable output as it runs. For example, based on the principles and components of strong interpretability (decision trees, linear models, etc.) to construct a model, or by adding a specific process to the model to produce interpretable output; post-ex post interpretation is to summarize the model's behavior patterns through the analysis of the model behavior, so as to achieve the purpose of interpretation. In general, the intrinsic explanation is the explanation during the policy generation process, specific to a model, while the ex post facto interpretation is the explanation after the policy is generated, independent of the model. According to the scope of interpretation, the interpretability method is divided into global (global) interpretation and local (local) interpretation, global interpretation ignores the microstructure of the model (such as parameters, layers and other factors), from the macro level to provide the interpretation of the model, local interpretation from the microscopic, through the analysis of the microstructure of the model to obtain the interpretation of the model.

In addition to the above general classification of interpretability, this paper divides the interpretability method into three categories: mathematical expression, logical expression and perceptual expression based on the degree of conformity of interpretation with model and human perception (see 2.4). These three types of interpretability methods reflect the differences in the form of interpretation, the degree of approximation of interpretation and model results, and the intuitiveness of interpretation. The previous article (see 3) analyzes the 3 key issues facing XRL, namely environmental interpretation, task interpretation, and policy interpretation. At present, it is difficult for a single XRL method to solve three types of problems at the same time, so we also use this as a basis to distinguish the problems that current XRL methods focus on.

In summary, this article classifies the XRL methods based on "time to obtain explanations", "scope of interpretation", "degree of interpretation", and "key issues" (see Table 1). Due to the variety of algorithms, Table 1 shows only the characteristics of large categories of algorithms, some of which may not be fully compliant

summary

This article focuses on the problems of XRL, discusses the fundamentals of the field, and summarizes existing approaches. At present, a complete and unified consensus has not yet been formed in the field of XRL, and even in the entire field of XAI, the basic views of different studies are quite different and difficult to compare. This paper conducts a more in-depth study on the lack of consensus in this field. First of all, this paper refers to the parent problem of the XRL field- XAI, collects the existing views in the XAI field, and sorts out the more general understanding of the XAI field; Second, based on the definition of the XAI field, discuss the common problems faced by XAI and XRL; Then, combined with the characteristics of reinforcement learning itself, the unique problems faced by XRL are proposed; Finally, the relevant research methods are summarized and the relevant methods are classified. The classification includes methods that the author explicitly identifies as XRL, as well as methods that the authors do not highlight but are actually important for XRL. XRL is currently in its infancy, so there are a number of issues that need to be addressed urgently. This paper focuses on two types of issues: the interpretation of the environment and tasks and the unified evaluation criteria. This paper argues that these two types of problems are the cornerstones of the XRL-like field and are areas of research that deserve attention.

Resources: