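New year, new beginnings! Wishing everyone a turn of fortune and soaring success in the Year of the Ox!

I received a lot of New Year greetings from friends over the holiday and did not reply to each one. Please forgive me!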

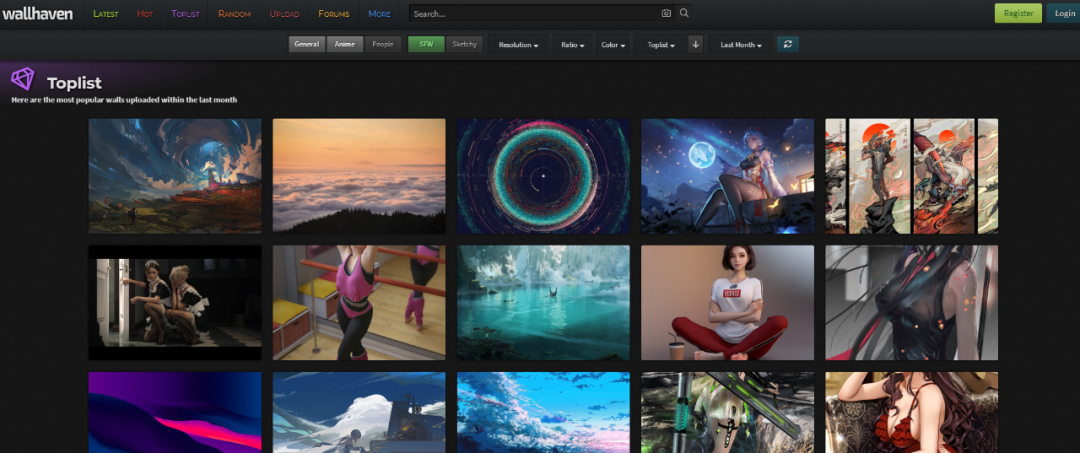

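It has been a long time since I last wrote a crawler, and I am out of practice. On 吾愛 I found a site to practice on: wallhaven, an overseas wallpaper site. This post uses downloading its top-rated images as an example to revisit writing an image crawler in Python. If you are interested, give it a try yourself!

Target URL: https://wallhaven.cc/toplist

A first look at the site makes its pagination scheme easy to see: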

https://wallhaven.cc/toplist?page=1 https://wallhaven.cc/toplist?page=2 https://wallhaven.cc/toplist?page=3

So we can use a Python f-string to build the list-page URL:

    f"https://wallhaven.cc/toplist?page={pagenum}"

where pagenum is the page number.
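
As a minimal sketch of that URL construction, the helper below generates the list-page URLs for the first few pages. The names `BASE_URL` and `build_page_urls` are illustrative and do not appear in the scripts in this post.

```python
BASE_URL = "https://wallhaven.cc/toplist"


def build_page_urls(last_page):
    """Return the toplist URLs for pages 1..last_page (inclusive)."""
    return [f"{BASE_URL}?page={pagenum}" for pagenum in range(1, last_page + 1)]


# Print the URLs of the first three list pages.
for url in build_page_urls(3):
    print(url)
```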

Next, look at the image data.

Thumbnail URL: https://th.wallhaven.cc/small/rd/rddgwm.jpg

Full-size image URL: https://w.wallhaven.cc/full/rd/wallhaven-rddgwm.jpg

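Here too we can use Python string operations to build the image URL. Only the host prefix and the size directory are swapped, so a "th" or "small" that happens to appear inside an image ID is left alone:

    img = imgsrc.replace("//th.", "//w.", 1).replace("/small/", "/full/", 1)
    imgs = img.split('/')
    imgurl = f"{'/'.join(imgs[:-1])}/wallhaven-{imgs[-1]}"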

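There is a possible bug here, though: the thumbnail may end in jpg while the full-size image ends in png. Requesting the full-size image with the jpg suffix then fails, and so does the download. My own handling of this may still have bugs; the brute-force but dependable way is to visit each image's detail page and read the full-size URL from it.

If you have a better way to handle this, please share!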
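
One possible way to handle the suffix problem, sketched under assumptions: build both candidate URLs from the thumbnail and try them in order. It assumes full-size images are only ever jpg or png, as described above, and that a wrong suffix makes the request fail. `candidate_urls`, `first_available`, and the `fetch` parameter are hypothetical names, not part of the scripts in this post.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption: full-size images are served as either .jpg or .png.
EXTENSIONS = (".jpg", ".png")


def candidate_urls(thumb_url):
    """Map a thumbnail URL to possible full-size URLs, thumbnail's suffix first."""
    parts = urlsplit(thumb_url)
    # The path looks like /small/rd/rddgwm.jpg
    _, _size, prefix, filename = parts.path.split("/")
    stem, _, ext = filename.rpartition(".")
    # th.wallhaven.cc -> w.wallhaven.cc (only the first host label changes)
    host = "w." + parts.netloc.split(".", 1)[1]
    ordered = sorted(EXTENSIONS, key=lambda e: e != "." + ext)
    return [
        urlunsplit((parts.scheme, host, f"/full/{prefix}/wallhaven-{stem}{e}", "", ""))
        for e in ordered
    ]


def first_available(urls, fetch):
    """Return (url, body) for the first URL that fetch() retrieves, else None.

    `fetch` is any callable that returns bytes for a URL or raises on failure.
    """
    for url in urls:
        try:
            return url, fetch(url)
        except Exception as exc:
            print(f"{url} failed: {exc}")
    return None


print(candidate_urls("https://th.wallhaven.cc/small/rd/rddgwm.jpg"))
```

With requests, `fetch` could be a small function that calls `requests.get(url, timeout=6)`, then `raise_for_status()`, and returns `r.content`. The file name can then take its suffix from whichever URL succeeded.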

First, the basic version:

    # -*- coding: utf-8 -*-
    # wallhaven toplist image scraper
    # author WeChat: huguo00289

    import requests
    from lxml import etree
    from fake_useragent import UserAgent

    url = "https://wallhaven.cc/toplist?page=1"
    ua = UserAgent().random
    headers = {'user-agent': ua}

    # Fetch the list page and extract the thumbnail URLs.
    html = requests.get(url=url, headers=headers, timeout=6).content.decode('utf-8')
    tree = etree.HTML(html)
    imgsrcs = tree.xpath('//ul/li/figure/img/@src')
    print(len(imgsrcs))
    print(imgsrcs)

    for i, imgsrc in enumerate(imgsrcs, start=1):
        # Swap only the host prefix and the size directory, so a "th" or
        # "small" inside the image ID is left untouched.
        img = imgsrc.replace("//th.", "//w.", 1).replace("/small/", "/full/", 1)
        imgs = img.split('/')
        imgurl = f"{'/'.join(imgs[:-1])}/wallhaven-{imgs[-1]}"
        print(imgurl)
        try:
            r = requests.get(url=imgurl, headers=headers, timeout=6)
            # requests does not raise on a 404 by itself, so force an exception.
            r.raise_for_status()
            with open(f'{i}.jpg', 'wb') as f:
                f.write(r.content)
            print(f"Saved {i}.jpg")
        except Exception as e:
            print(f"Download failed: {e}")
            # Retry once with a png suffix instead of jpg.
            imgurl = imgurl.rsplit('.', 1)[0] + '.png'
            r = requests.get(url=imgurl, headers=headers, timeout=6)
            if r.ok:
                with open(f'{i}.png', 'wb') as f:
                    f.write(r.content)
                print(f"Saved {i}.png")
            else:
                print(f"Skipping image {i}: HTTP {r.status_code}")

The optimized version adds a class, multithreading, and retry on timeout:

    # -*- coding: utf-8 -*-
    # wallhaven toplist image scraper
    # author WeChat: huguo00289

    import threading
    import time

    import requests
    from fake_useragent import UserAgent
    from lxml import etree
    from requests.adapters import HTTPAdapter


    class Top(object):
        def __init__(self):
            self.ua = UserAgent().random
            self.url = "https://wallhaven.cc/toplist?page="

        def get_response(self, url):
            response = requests.get(url=url, headers={'user-agent': self.ua}, timeout=6)
            return response

        def get_third(self, url, num):
            # Session that retries failed connections up to `num` times.
            s = requests.Session()
            s.mount('http://', HTTPAdapter(max_retries=num))
            s.mount('https://', HTTPAdapter(max_retries=num))
            print(time.strftime('%Y-%m-%d %H:%M:%S'))
            try:
                r = s.get(url=url, headers={'user-agent': self.ua}, timeout=5)
                # A 404 does not raise by itself; turn it into an exception.
                r.raise_for_status()
                return r
            except requests.exceptions.RequestException as e:
                print(e)
                print(time.strftime('%Y-%m-%d %H:%M:%S'))
                # Re-raise so the caller can fall back to the other suffix.
                raise

        def get_html(self, response):
            html = response.content.decode('utf-8')
            tree = etree.HTML(html)
            return tree

        def parse(self, tree):
            imgsrcs = tree.xpath('//ul/li/figure/img/@src')
            print(len(imgsrcs))
            return imgsrcs

        def get_imgurl(self, imgsrc):
            # Swap only the host prefix and the size directory.
            img = imgsrc.replace("//th.", "//w.", 1).replace("/small/", "/full/", 1)
            imgs = img.split('/')
            imgurl = f"{'/'.join(imgs[:-1])}/wallhaven-{imgs[-1]}"
            print(imgurl)
            return imgurl

        def down(self, imgurl, imgname):
            # r = self.get_response(imgurl)
            r = self.get_third(imgurl, 3)
            with open(f'{imgname}', 'wb') as f:
                f.write(r.content)
            print(f"Saved {imgname}")
            time.sleep(2)

        def downimg(self, imgsrc, pagenum, i):
            imgurl = self.get_imgurl(imgsrc)
            imgname = f'{pagenum}-{i}{imgurl[-4:]}'
            try:
                self.down(imgurl, imgname)
            except Exception as e:
                print(f"Download failed: {e}")
                # Retry with the other suffix in both the URL and the file name.
                xext = '.png' if imgname.endswith('.jpg') else '.jpg'
                ximgurl = f'{imgurl[:-4]}{xext}'
                ximgname = f'{pagenum}-{i}{xext}'
                try:
                    self.down(ximgurl, ximgname)
                except Exception as e:
                    print(f"Retry failed: {e}")

        def get_topimg(self, pagenum):
            # Single-threaded: download the images of one page in sequence.
            url = f'{self.url}{pagenum}'
            print(url)
            response = self.get_response(url)
            tree = self.get_html(response)
            imgsrcs = self.parse(tree)
            for i, imgsrc in enumerate(imgsrcs, start=1):
                self.downimg(imgsrc, pagenum, i)

        def get_topimgs(self, pagenum):
            # Multithreaded: one thread per image on the page.
            url = f'{self.url}{pagenum}'
            print(url)
            response = self.get_response(url)
            tree = self.get_html(response)
            imgsrcs = self.parse(tree)
            threadings = []
            for i, imgsrc in enumerate(imgsrcs, start=1):
                t = threading.Thread(target=self.downimg, args=(imgsrc, pagenum, i))
                threadings.append(t)
                t.start()
            for x in threadings:
                x.join()
            print("Multithreaded download finished")

        def main(self):
            num = 3
            for pagenum in range(1, num + 1):
                print(f">> Scraping images from page {pagenum}..")
                self.get_topimgs(pagenum)


    if __name__ == '__main__':
        spider = Top()
        spider.main()
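
The optimized version starts one thread per image, so every thumbnail on a page becomes a simultaneous request. As a hedged alternative, and not what the script above does, the standard library's `ThreadPoolExecutor` can cap the number of concurrent downloads. `download_all` and `fake_worker` are hypothetical names used only for this sketch; the stand-in worker simulates one failure so the example runs without network access.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def download_all(jobs, worker, max_workers=8):
    """Run worker(*job) for every job on a bounded pool; return (done, failed)."""
    done, failed = [], []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Remember which job each future belongs to.
        futures = {pool.submit(worker, *job): job for job in jobs}
        for future in as_completed(futures):
            job = futures[future]
            try:
                future.result()  # re-raises any exception from the worker
                done.append(job)
            except Exception as exc:
                print(f"job {job} failed: {exc}")
                failed.append(job)
    return done, failed


def fake_worker(imgsrc, pagenum, i):
    # Stand-in for a real download function; fails for the second image.
    if i == 2:
        raise ValueError("simulated failure")


jobs = [(f"thumb-{i}", 1, i) for i in range(1, 4)]
done, failed = download_all(jobs, fake_worker, max_workers=2)
print(len(done), len(failed))
```

In the class above, the jobs would be `(imgsrc, pagenum, i)` tuples and the worker would be `spider.downimg`. Note that `downimg` catches its own exceptions, so `failed` would stay empty unless it is changed to re-raise.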

Download results

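Bonus

The source code is packaged up, together with two multithreading examples and one multiprocessing example. If you are interested, especially if you want to study multithreading, feel free to grab it: reply “多線程” in the official account's chat window to get it!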

·················END·················

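Hello, I am 二大爺, a migrant worker in the city from an old revolutionary base area and a neither-early-nor-professional webmaster. I like Python, writing, reading, and English, and dabble in amateur programming, self-media, SEO . . .

This official account does not make money; I am just here to make friends.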
