In daily life, we communicate with each other through movement and language. Could communication one day go beyond speech and gesture and take place directly through brain waves?

Absolutely!

This is brain-computer interface technology. As the name suggests, a brain-computer interface establishes a direct connection between the brain and a computer without involving the peripheral nerves and muscles. It records brain activity in real time, "interprets" the information that activity carries, and converts it into instructions that computers and other devices can understand, so that the user can operate a device and accomplish a task by thought alone.

The famous physicist Stephen Hawking had amyotrophic lateral sclerosis (ALS), known in Chinese as the "gradual freezing" disease, and for decades he could express himself only through faint movements of his fingers and the muscles at the corners of his eyes. As brain-computer interface technology develops, computers are interpreting brain activity ever faster and more precisely, and ALS patients like Hawking stand to see their quality of life greatly improved. For everyone else, future "mind control" will also bring great changes to work and life. Professors Gao Shangkai and Gao Xiaorong of the Neural Engineering Laboratory at Tsinghua University School of Medicine lead a team that has been doing brain-computer interface research for 20 years. The reporter recently visited the laboratory to find out how brain-computer interfaces turn thought into action.

<h1>Put on a cap to read "thoughts"</h1>

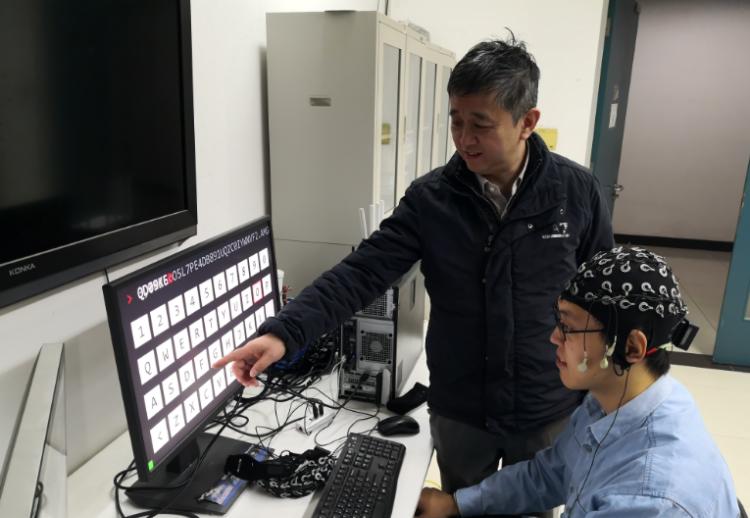

A few days ago, this reporter visited the School of Medicine at Tsinghua University. In the Neural Engineering Laboratory, Professor Gao Xiaorong was guiding students in their research. One student wore a strange-looking cap densely covered with sensors. He stared intently at the computer screen in front of him, where letter tiles kept flashing...

In the Neural Engineering Laboratory of Tsinghua University School of Medicine, researchers conduct a brain-computer interaction experiment.

Gao Xiaorong explained that our brains are active all the time, and that this neural activity continuously generates brain-wave signals. There are currently two main ways to acquire these brain electrical signals, link them to a computer system, and read out the information. One is the invasive brain-computer interface, which records brain activity through electrodes implanted directly in the cerebral cortex by craniotomy. Because it monitors cortical activity directly, the data it collects are of higher quality and support more complex information readout. However, because of safety concerns and surgical trauma, it is still at the laboratory exploration stage.

The other is the non-invasive brain-computer interface that the students are working on. The user only needs to wear an EEG acquisition cap like a hat. "The weaknesses of this approach are also obvious. After all, the brain signals we read have passed through the thick skull, so the signal quality is worse, and the algorithm that accurately interprets these signals becomes particularly important," Gao Xiaorong said. He added that non-invasive brain-computer interfaces are the research direction most commonly pursued by scholars in China.

Gao Xiaorong began brain-computer interface research in 1999, a full 20 years ago this year. His team was the first to propose a brain-computer interface based on steady-state visual evoked potentials (SSVEP), which greatly improved the accuracy of "mind control" and became one of the three main paradigms of non-invasive brain-computer interfaces.

How does this form of brain-computer communication work? Gao Xiaorong explained that the brain's electrical signals tend to follow a rhythmic stimulus. If a person is given a regularly patterned external stimulus, for example by looking at a small square that flashes 15 times per second, the brain signal recorded from the scalp shows a corresponding pattern, rising and falling 15 times per second. So if a person is shown several small squares that flash at different rates, each assigned a meaning in advance, then analyzing the pattern in the brain signal reveals which square the person is looking at and therefore which instruction they want to enter.
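The frequency-matching idea described here can be illustrated with a small simulation. This is a minimal sketch, not the team's algorithm: it assumes a synthetic single-channel signal, a 250 Hz sampling rate, four hypothetical flicker rates and commands, and a plain spectral-peak comparison in place of whatever decoding method the laboratory actually uses.

```python
import numpy as np

def detect_flicker_frequency(signal, sample_rate, candidate_freqs):
    """Return the candidate frequency that carries the most spectral power."""
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    # Look up the power in the FFT bin closest to each candidate flicker rate.
    candidate_power = [power[np.argmin(np.abs(freqs - f))] for f in candidate_freqs]
    return candidate_freqs[int(np.argmax(candidate_power))]

# Hypothetical layout: four squares, each flashing at its own rate (Hz)
# and each assigned a meaning in advance.
commands = {8.0: "left", 10.0: "right", 12.0: "up", 15.0: "down"}

sample_rate = 250                          # Hz (assumed)
t = np.arange(0, 2.0, 1.0 / sample_rate)   # two seconds of data
rng = np.random.default_rng(0)

# Synthetic "scalp signal": a 15 Hz oscillation buried in stronger noise,
# standing in for a user who is looking at the 15 Hz square.
signal = np.sin(2 * np.pi * 15.0 * t) + rng.normal(0.0, 2.0, t.size)

detected = detect_flicker_frequency(signal, sample_rate, list(commands))
print(detected, commands[detected])
```

Even though the noise here is larger than the oscillation itself, the 15 Hz component stands out in the spectrum, which is why the flicker rate of the attended square can be recovered from the recording.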

<h1>"Mind typing" approaches everyday typing speed</h1>

At the World Robot Congress held in Beijing this summer, one special competition attracted much attention. The contestants put on special "helmets" tangled with cables and stared silently at computer screens showing arrays of letters. One after another, letters appeared in the text box on the screen. This was the scene at the World Robot Congress Brain Control Typing Record Challenge.

The Brain Control Typing Skills Challenge at the World Robot Congress, held in Beijing this summer. Through brain-computer interaction, contestants competed at "mind typing".

The contestants used non-invasive brain-computer interfaces to control a computer with their brains and competed at "mind typing". In the end, Wei Siwen from Tianjin University, supported by an algorithm developed by a joint team from the University of Macau and the University of Hong Kong, won the championship with an ideal information transfer rate of 691.55 bits per minute, setting a world record. Gao Xiaorong, deputy leader of the competition's expert group, told the reporter that Wei Siwen's performance was equivalent to outputting one English letter every 0.413 seconds at 100% accuracy. That is already very close to the speed at which we enter text on our phones every day. "Already remarkable!" he said.
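The link between bits per minute and seconds per letter can be checked with a short calculation. The sketch below assumes the commonly used Wolpaw definition of information transfer rate, which the article does not name, and treats the number of selectable targets on the screen as an unknown parameter; the function names are illustrative.

```python
import math

def bits_per_selection(n_targets, accuracy=1.0):
    """Information carried by one selection, in bits (Wolpaw definition, assumed)."""
    if n_targets < 2 or not 0.0 < accuracy <= 1.0:
        raise ValueError("need n_targets >= 2 and 0 < accuracy <= 1")
    bits = math.log2(n_targets)
    if accuracy < 1.0:
        # Penalty terms for imperfect accuracy; both vanish at 100% accuracy.
        bits += accuracy * math.log2(accuracy)
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))
    return bits

def seconds_per_selection(bits_per_minute, n_targets, accuracy=1.0):
    """Time per selection implied by a given information transfer rate."""
    return 60.0 * bits_per_selection(n_targets, accuracy) / bits_per_minute

record = 691.55  # bits per minute, from the article
for n_targets in (26, 27):  # assumed target counts; the article does not state one
    print(n_targets, round(seconds_per_selection(record, n_targets), 3))
```

Under these assumptions, a 27-target layout gives about 0.413 seconds per selection, in line with the figure Gao Xiaorong quoted, while a 26-target layout gives about 0.408 seconds. The actual layout used in the competition is not given in the article.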

Using brain-computer interfaces for communication is also promising in rehabilitation. This year, the work of Gao Xiaorong's team enabled an ALS patient to "speak" with his family again, and the story was shown on television. Wang Jia, a graphic designer, has ALS. He used to love sports and was handsome and cheerful. But as the disease progressed, he lost the ability to take care of himself: he could not stand, move his hands or feet, or even speak, and only his eyes could still move. "In fact, he can hear and think, but he just can't express himself, and that is very painful," Gao Xiaorong said. The team met with Wang Jia's family many times to develop the brain-computer interface algorithm best suited to his physiological characteristics.

On the day of the trial, the research team arrived at Wang Jia's home early, helped him wash his hair, and fitted the "helmet". They powered on the system and opened the program. Wang Jia stared at a character on the screen, his eyes shifting slightly from time to time. Then something remarkable happened. A single word appeared on the screen: wipe. His parents understood at once and wiped the saliva from the corner of his mouth. Right after that, a sentence appeared: Thank you for your concern! Wang Jia's parents wept with excitement. "From now on I can finally communicate with my child!" the elderly couple said.

<h1>Letting robots "work" for the legs</h1>

At the biomedical engineering session of a recent Beijing-wide academic paper conference, Gao Xiaorong presented another remarkable research area: a collaborative control method for intelligent robots based on surface electromyography (EMG) of the calf. Put simply, the robot works on behalf of the legs by capturing the muscles' electrical signals.

In 2014, Gao Xiaorong's team accepted an invitation from a leading Chinese technology company and set out to interpret a person's gait from recorded muscle electrical signals. Devices built on this approach can warn elderly users of a fall risk. The approach can also be used to develop intelligent prostheses and wheelchairs that read the user's leg muscle signals, recognize the intention to move, and drive the prosthetic leg or wheelchair forward or backward, "so that it can greatly improve the quality of life of a large number of people with movement disorders," Gao Xiaorong said.

Muscle electrical (EMG) signals are the electrophysiological signals produced when skeletal muscles receive control information carried by nerve signals from the brain and contract in response. They are recorded from the body surface over the relevant muscle and, with no obstacle such as the skull in the way, are much easier to collect than EEG signals, more reliable, and easier to identify. During development, however, the team found clear shortcomings. First, skeletal muscle fatigues much faster than the brain: after 10 or 20 minutes the muscles begin to tire and the movement becomes distorted, so the EMG signal becomes disordered as well. Second, because the legs move over a much larger range than the head, the recording electrodes cannot stay fully in contact with the muscle, which makes the signal unstable. Third, any shift in electrode position also causes the signal to drift.

In response to these difficulties, Gao Xiaorong and his students put in a great deal of work, including applying signal analysis methods such as transfer learning, and eventually developed a working system.

According to reports, this human-computer interaction system consists of an EMG acquisition module, a pattern recognition module, and a robot control module. The EMG acquisition module amplifies and records the EMG signal during a trial and sends it to the pattern recognition module over Bluetooth; the pattern recognition module processes the signal and passes the result to the robot control module; the robot control module issues the corresponding instruction to the intelligent robot, thereby controlling its movement.
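The three-module structure described above can be sketched as a small pipeline. Everything in this example is an assumption made for illustration: the signals are synthetic rather than real Bluetooth EMG recordings, the root-mean-square feature and nearest-centroid classifier stand in for the team's unspecified pattern recognition method, and the command names are hypothetical.

```python
import numpy as np

# Assumed noise amplitude on two calf channels for each gait (synthetic data only).
CHANNEL_AMPLITUDES = {"forward": (1.0, 0.2), "backward": (0.2, 1.0)}

def acquire_emg(gait, rng, n_samples=200):
    """Stand-in for the EMG acquisition module: returns a (channels, samples) array."""
    return np.stack([rng.normal(0.0, a, n_samples) for a in CHANNEL_AMPLITUDES[gait]])

def extract_features(emg):
    """Root-mean-square amplitude of each channel."""
    return np.sqrt(np.mean(emg ** 2, axis=1))

def recognize(features, centroids):
    """Pattern recognition module: pick the gait whose centroid is nearest."""
    return min(centroids, key=lambda gait: np.linalg.norm(features - centroids[gait]))

def robot_command(gait):
    """Robot control module: map the recognized gait to a (hypothetical) command."""
    return {"forward": "MOVE_FORWARD", "backward": "MOVE_BACKWARD"}[gait]

rng = np.random.default_rng(0)

# "Training": average the features of 20 synthetic trials per gait.
centroids = {
    gait: np.mean([extract_features(acquire_emg(gait, rng)) for _ in range(20)], axis=0)
    for gait in CHANNEL_AMPLITUDES
}

# One new trial flows through acquisition, recognition, and control in turn.
trial = acquire_emg("backward", rng)
command = robot_command(recognize(extract_features(trial), centroids))
print(command)
```

Keeping the three stages as separate functions mirrors the modular design in the text: the acquisition or recognition step could be swapped out without touching the robot control mapping.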

In experiments, 8 subjects each performed 320 gaits (forward, backward, left, and right) under real-time interactive control. The robot's recognition rate exceeded 80% in all experiments, and reached 90% for forward and backward gaits.

<h1>Brain-computer interaction makes mind control a reality</h1>

Gao Xiaorong said that traditional human-computer interaction is mainly hand-based: we tap a keyboard, click a mouse, or touch the screen of a phone or tablet. In recent years, with the development of artificial intelligence, interaction through voice and video images (such as face recognition) has emerged. In the future, brain-computer interaction will gradually take center stage. "We can use our minds to make phone calls, send and receive text messages, and even drive," he said. "In addition, brain-computer interaction also holds a lot of promise for personal privacy protection." In his view, fingerprints and even the iris can be copied, but a person's brain waves cannot, which makes them uniquely secure.

At this year's Brain Control Typing Record Challenge, Wei Siwen achieved a rate of 691.55 bits per minute. Gao Xiaorong said that this record will be broken in the future. According to Moore's Law, computer performance doubles every 18 months, and the efficiency of brain-computer interaction will also increase. His team has calculated that in 10 years the rate of brain-computer interaction may increase more than fourfold, at which point information throughput may no longer be an obstacle to communicating with the mind.

"It seems mysterious and hard to believe, but a lot of research goes from science fiction to science, and then to applied technology. Brain-computer interaction is already moving toward technological applications," Gao Xiaorong said.

Looking ahead, Gao Xiaorong's team is already well into its next line of research. The EEG acquisition device they are developing will no longer be an ugly cap but a delicate, attractive earpiece that clips gently onto the auricle and transmits the collected brain-wave signals wirelessly at high speed, helping us freely control all kinds of wearable smart devices, office equipment, home appliances, cars...

The reporter learned that many universities and research institutions, including Tsinghua University, South China University of Technology, National University of Defense Technology, Tianjin University, Zhejiang University, Xi'an Jiaotong University, and East China University of Science and Technology, are currently engaged in brain-computer interface research, with fruitful results. China ranks second in the number of papers published in the field, behind only the United States, and 9 of the 25 highly cited papers in the field worldwide come from China. On the application side, South China University of Technology has conducted clinical trials with more than 100 severely paralyzed patients, and Tianjin University carried out the first brain-computer interface experiment in space aboard Tiangong-2.

Source: Beijing Daily Client

Author: Zhang Hang

Photograph: Zhang Hang

Executive Producers: Ding Zhaowen, Wang Ran

Editor: Wang Haiping

Process Editor: Sun Yujie