Zhidongxi (WeChat official account: zhidxcom)

Author | Xu Shan

Editor | Yunpeng

After $230 billion in market value evaporated overnight, Zuckerberg has started pulling out cutting-edge "AI black tech" to shore up Meta's value!

Zhidongxi, February 24: At 1 a.m. Beijing time today, Zuckerberg hosted an event called "AI in the metaverse" to lay out Meta's technical roadmap for AI in detail. It was also the first time in 2022 that Zuckerberg set out Meta's focus on speech translation, AI creation, and voice assistants.

At the event, Meta announced that it is developing translation software intended to serve everyone in the world. Its researchers hope to build advanced AI models and a translator that works for all languages, creating a barrier-free communication space in the "metaverse".

Meta also plans to develop a new AI system, BuilderBot. Whatever you describe aloud in the virtual world, the AI renders the corresponding scene for you, which makes AI look a little like "Aladdin's lamp".

In addition, Meta has launched Project CAIRaoke, in which developers have built an end-to-end neural model that supports richer conversations with people, goes a step deeper than earlier simple exchanges, and understands the context in which people are speaking.

The latest earnings report shows that Meta's Reality Labs division, which specializes in the metaverse, lost $10.2 billion in 2021. Can the AI cards Zuckerberg has long held in reserve save the flagging metaverse?

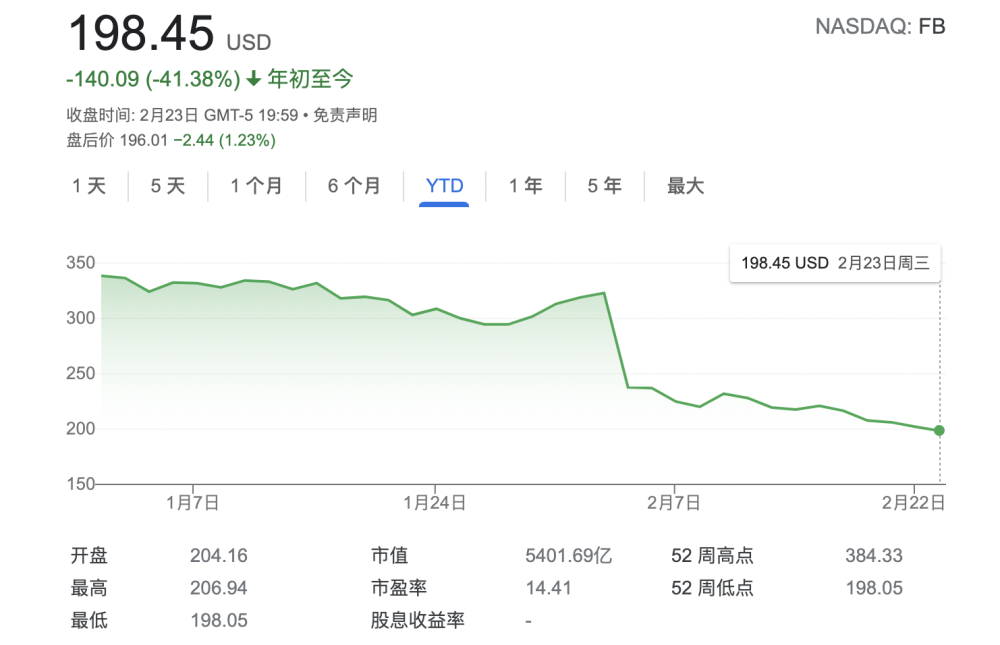

▲ Meta 2021 stock price change chart

First, CEO Zuckerberg: Meta will focus on voice translation and voice assistants

Zuckerberg said that most AI research today focuses on helping AI better understand the real world, but in the future AI will need to help people with tasks such as navigation in both the real world and the virtual world. And because the virtual world is always changing, AI should be able to understand its environment and learn the way humans do.

▲ Meta CEO Zuckerberg

"In the metaverse, you're going to be able to interact visually from anywhere, and that includes your position in 3D space, your face, gestures, and other body language, all of which require different input methods." He believes that AI is one of the key entry points for this data.

Zuckerberg not only introduced the application of AI in the fields of speech translation, voice assistants, and AI creation, but also briefly talked about Meta's contribution to promoting AI technology research.

Currently, Meta is building three AI projects.

The first is an AI system called BuilderBot, which lets people build parts of a virtual world simply by describing them. Meta showed a video of how BuilderBot might look in the future. For example, when a person in the virtual world tells the system, "I want a cloud in the sky," the AI automatically adds a cloud over the island. If the system can be developed successfully, the days of having everything in the virtual world handed to you on request are not far off.

The second project is Meta's effort to build AI that can think like a human. Yann LeCun, chief AI scientist at Meta AI, suggested that the ability to create a "world model" could be key to the project.

"One of the most important challenges for AI today is designing learning paradigms and architectures that enable machines to learn models of the world in a self-supervised manner and then use those models for prediction, reasoning, and planning." He is trying to take relevant concepts from multiple disciplines and combine them with new ideas in machine learning, such as self-supervised learning and joint-embedding architectures.

The third project is related to Meta's "metaverse" plans. At last October's event, Meta showed off conversation software with "universal" translation, and the details behind that demo are now gradually emerging.

At the event, Meta AI announced that it will build a translation tool, and that the project has two parts. The first part is covering every language: Meta is building a new advanced AI model that can learn from languages with fewer training examples and then deliver expert-quality translation for hundreds of languages. The second part is building a universal speech translator: researchers are devising new ways to translate speech from one language into another in real time.

In addition, Zuckerberg also briefly introduced Meta's contributions to privacy protection and data openness.

Meta has partnered with NYU Langone Medical Center on a project called "fastMRI", which uses AI to create MRI images from less data, enabling faster MRI scans.

"We can't really advance scientific research without carefully considering how and when we release data." Zuckerberg also said that when Meta publishes datasets, it treats privacy and fairness as guiding principles.

Second, to build AI models for the metaverse, Meta is developing several kinds of touch sensors

Meta's AI researchers have been discussing for years how to build a rich, representative model. The new model should not only make predictions about the present but also remain applicable in the future.

"We hope that the model can plan and reason over the long term so that it can be a good AI agent in both the real world and the virtual world in the future," said Joelle Pineau, co-managing director of Facebook AI Research.

▲ Joelle Pineau, co-managing director of Facebook AI Research

She also introduced several directions that Meta is focusing on, one of which is robotics.

"Robots can break out of fixed settings such as labs or factories, operate smoothly in homes and offices, and interact naturally with humans," she said. "But we also need robots to improve their ability to perceive the world through touch."

To this end, Meta has been developing new touch sensors. Meta is collaborating with other researchers to create a new sensor that is currently in the prototype stage.

Unlike other sensors, this touch sensor has an outer layer made of a thin film embedded with magnetic particles. When the touch sensor is deformed, the magnetic signal changes.

With these changes, AI technology can infer the magnitude of the force applied to the contact points, and even use self-supervised learning models to automatically calibrate sensors to make them more suitable for a variety of scenarios.

▲ Touch sensor
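The idea of inferring force from a changing magnetic signal can be illustrated with a toy calibration. The sketch below is not Meta's method (the article says the real system relies on learned, self-supervised models). It assumes, purely for illustration, that force varies linearly with the change in the magnetic reading, and the calibration numbers and function names are hypothetical.

```python
from typing import List, Tuple


def fit_linear(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical calibration pairs (made up for this sketch):
# change in magnetic reading (arbitrary units) vs. known applied force (newtons).
delta_b = [0.0, 1.0, 2.0, 3.0, 4.0]
force_n = [0.0, 0.5, 1.0, 1.5, 2.0]

slope, intercept = fit_linear(delta_b, force_n)


def estimate_force(reading_change: float) -> float:
    """Estimate the applied force from a new change in the magnetic reading."""
    return slope * reading_change + intercept


print(estimate_force(2.5))
```

A real skin-like sensor would have many magnetometer channels and a nonlinear response, which is where a learned model replaces the single straight line used here.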

Meta is also developing another digital sensor in collaboration with other research institutes. Its surface is made of a deformable elastic material, and it senses changes in force from changes in the images recorded by a camera inside the sensor.

▲ Digital sensor

Joelle Pineau also said that there is still a big gap between the virtual world and the real world, and that a lot of work remains before a reliable model of the world can be built. In particular, high-resolution virtual environments need to be rendered in real time, from simple objects to the movements of the human body.

In her speech, she also announced that Meta's researchers, in collaboration with Instagram's research team, plan to release a prototype system called Instagram Feed Ranking.

Third, building a "universal" translator that also supports automatic labeling for 100 languages

Machine translation expert Angela Fan believes that language is one of the main ways we understand and interact with the world around us.

She described how, having lived in Canada and worked in Paris, she often felt the far-reaching effects of the language barrier. Because of such barriers to spoken communication, Meta wants everyone to benefit from the latest translation technology. "We want all future technologies to be inclusive by default."

An estimated 2 billion people worldwide speak a native language for which no translation system is available. There are thousands of languages in the world, yet today's translation systems support only about 100.

How to create a translation system that really works for everyone is a question that Meta has been thinking about.

Angela Fan believes that the first step toward more inclusive translation is a system capable of supporting many languages. "If we want to implement multilingual translation, we need to create a separate model for each language direction, so we have to create tens of thousands of models, which is a very complicated thing."
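A quick calculation shows why one model per language direction scales so badly. With N languages there are N × (N − 1) ordered source-to-target directions. The sketch below only does this arithmetic, and the function name is a hypothetical one chosen for illustration.

```python
def pairwise_directions(num_languages: int) -> int:
    """Count ordered (source, target) translation directions among the languages."""
    if num_languages < 2:
        return 0
    return num_languages * (num_languages - 1)


for n in (10, 100, 200):
    print(n, "languages ->", pairwise_directions(n), "directions")
```

At about 100 languages this already gives 9,900 directions, and a couple of hundred languages pushes the count into the tens of thousands, whereas a single multilingual model covers all directions at once.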

To solve this problem, Meta tries to improve model training by exploiting the relationships between language families. Romansh, for example, is a niche language, but it has features in common with other languages, and such connections between languages simplify the development of language models.

"Recently, we won two top translation competitions," she said. "We proved that multilingual systems are indeed better than bilingual systems, and by increasing the size of the model and generating more training data, for example through back-translation, we proved that multilingual translation is a very promising direction."

In addition, Meta can create examples of translated data automatically, without hiring people to translate large amounts of data by hand. Meta currently supports automatic dataset creation for more than 100 languages, and some of the datasets are open source.

Fourth, Meta plans to build a super voice assistant, with continuous decision-making as the key to AI interaction

Alborz Geramifard, senior research manager at Meta AI, said that voice assistants can be divided into three categories.

The first category is the basic voice assistant. For example, when you call customer service to ask about your phone bill, you hear "press 1 for billing information, press 2 for subscription services," and so on. "You often find that the service you want isn't in the list of options."

The second category of voice assistant has some intelligent models behind it, and you may already have one at home. "You might interact with them at home in simple ways, but they can't understand semantic context and can't hold an in-depth conversation."

The third category is the super voice assistant that Meta wants to build. This kind of assistant maintains deeper context and can give users a personalized experience. For example, if you ask it to play a song in the morning while it is raining outside, it will recommend a song that suits the moment.

He also said that comprehension AI and interactive AI are two different concepts. Comprehension AI is a one-way process from input to output, such as transcribing audio into the corresponding text.

▲ The difference between comprehension AI and real-time interactive AI

Interaction is a continuous dialogue between the user and the AI. For example, James might want to send Nick a message saying he'll be five minutes late. After the AI has done so, James decides to change the time to 10 minutes. He can simply continue the conversation to make the change, and the AI completes the updated request. Interactive conversations therefore often involve continuous decision-making.
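The James and Nick exchange can be sketched as state that persists across turns, so that a follow-up only needs to supply what changed. This is a hand-written illustration, not the end-to-end neural model the article attributes to Meta. The class, field, and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MessageTask:
    """Conversation state carried from one user turn to the next."""
    recipient: Optional[str] = None
    delay_minutes: Optional[int] = None
    history: List[str] = field(default_factory=list)

    def apply_turn(self, recipient: Optional[str] = None,
                   delay_minutes: Optional[int] = None) -> str:
        # Only the slots mentioned in this turn are updated;
        # everything else is remembered from earlier turns.
        if recipient is not None:
            self.recipient = recipient
        if delay_minutes is not None:
            self.delay_minutes = delay_minutes
        text = f"To {self.recipient}: I'll be {self.delay_minutes} minutes late."
        self.history.append(text)
        return text


task = MessageTask()
first = task.apply_turn(recipient="Nick", delay_minutes=5)
second = task.apply_turn(delay_minutes=10)  # follow-up turn: only the time changes
print(first)
print(second)
```

A one-way comprehension system would treat the second request in isolation and would not know who the message is for. Keeping state is what lets the follow-up succeed.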

"Our goal is to combine the new model we built with VR/AR equipment to enable more immersive and multimodal forms of interaction through AI."

For example, your voice assistant can help you cook a delicious dish: it lists the ingredients based on your recipe and proactively guides you through every step. And if, while you are adding salt, the super voice assistant notices that you are running low, it helps you place a shopping order. The interaction between you and the super voice assistant becomes closer and closer.

Fifth, with open datasets, Meta strives to eliminate data bias

Last summer, Meta published an article outlining the five pillars of its AI principles, including privacy and security, fairness and inclusion, robustness and safety, and transparency and control.

On AI fairness, Jacqueline Pan, project manager at Facebook AI, said the AI team is working with another internal team to release conversational datasets designed to help assess potential algorithmic bias in AI systems.

The latest dataset will contain more than 45,000 videos of paid participants having unscripted conversations. The participants provide their own ages and genders, so that AI systems can be assessed more impartially.

In addition, Meta provides labels for skin tone and sound, and the dataset is designed to help researchers assess the accuracy of their computer vision and audio models along these dimensions.

In 2020, Meta also set up a teaching collaboration with Georgia Tech to develop AI talent.

Meta's AI researchers work with Georgia Tech faculty to develop and refine a deep learning course that is part of the university's online master's program in computer science. Its primary goal is to help students learn which techniques are used to scale algorithms in the real world.

More than 1,600 students took the course in its first year, and nearly 2,400 students completed it this year. About 85 percent of the students surveyed said they had gained a lot from the course.

Conclusion: The metaverse is a game of chess, and Zuckerberg's move is AI technology

Zuckerberg has had a truly miserable start to 2022.

Since betting heavily on the metaverse, Zuckerberg has become a walking advertisement for it, directly driving the global metaverse craze, while Meta's stock has performed dismally. The technology company, once among the five giants of the US stock market, is now sliding, and its market value has been overtaken by Tesla, NVIDIA, TSMC, and Tencent.

On February 4 this year, Meta announced its latest financial results and disclosed for the first time that losses at its metaverse division had grown sharply, from $4.5 billion in 2019 to $10.2 billion in 2021. Its stock closed down 26 percent that day, wiping out more than $237 billion in market value, the largest single-day drop in the history of the U.S. stock market.

Not only has the stock price plummeted and the market value shrunk, but Zuckerberg himself has fallen out of the top 10 of the global rich list, which is embarrassing indeed.

Clearly, people are still taking a wait-and-see attitude toward the metaverse "big pie" that Zuckerberg has promised. In the early hours of this morning, Zuckerberg took a purely technical angle, presenting a package of cutting-edge AI technologies that still revolves closely around the metaverse blueprint, in order to tell a more convincing story.

But will Wall Street investors buy it? Meta's technical blueprint will take some time to truly support the future of the virtual world woven by Zuckerberg.