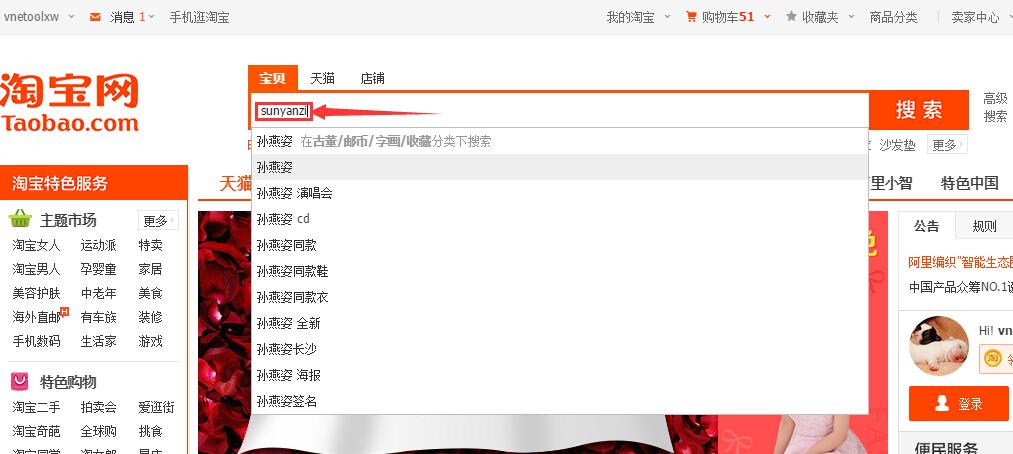

今天來說說拼音檢索,這個功能其實還是用來提升使用者體驗的,别的不說,最起碼避免了使用者切換輸入法,如果能支援中文漢語拼音簡拼,那使用者搜尋時輸入的字元更簡便了,使用者輸入次數少了就是為了給使用者使用時帶來便利。來看看一些拼音搜尋的經典案例:

看了上面幾張圖的功能示範,我想大家也應該知道了拼音檢索的作用以及為什麼要使用拼音檢索了。那接下來就來說說如何實作:

接下來要做的就是要把轉換得到的拼音進行ngram處理,比如:王傑的漢語拼音是wangjie,如果要使用者完整正确的輸入wangjie才能搜到有關“王傑”的結果,那未免有點在考使用者的漢語拼音基礎知識,萬一使用者前鼻音和後鼻音不分怎麼辦,是以我們需要考慮字首查詢或模糊比對,即使用者隻需要輸入wan就能比對到"王"字,這樣做的目的其實還是為了減少使用者操作步驟,用最少的操作步驟達到同樣的目的,那必然是最讨人喜歡的。再比如“孫燕姿”漢語拼音是“sunyanzi”,如果我期望輸入“yanz”也能搜到呢?這時候ngram就起作用啦,我們可以對“sunyanzi”進行ngram處理,假如ngram按2-4個長度進行切分,那得到的結果就是:su un ny

ya an nz zi sun uny nya yan anz nzi suny unya nyan yanz anzi,這樣使用者輸入yanz就能搜到了。但ngram隻适合使用者輸入的搜尋關鍵字比較短的情況下,因為如果使用者輸入的搜尋關鍵字全是漢字且長度為20-30個,再轉換為拼音,個數又要翻個5-6倍,再進行ngram又差不多翻了個10倍甚至更多,因為我們都知道booleanquery最多隻能連結1024個query,是以你懂的。 分出來的gram段會通過chartermattribute記錄在原始term的相同位置,跟同義詞實作原理差不多。是以拼音搜尋至關重要的是分詞,即在分詞階段就把拼音進行ngram處理然後當作同義詞存入chartermattribute中(這無疑也會增加索引體積,索引體積增大除了會額外多占點硬碟空間外,還會對索引重建性能以及搜尋性能有所影響),搜尋階段跟普通查詢沒什麼差別。如果你不想因為ngram後term數量太多影響搜尋性能,你可以試試edgengramtokenfilter進行字首ngram,即ngram時永遠從第一個字元開始切分,比如sunyanzi,按2-8個長度進行edgengramtokenfilter處理後結果就是:su sun suny sunya sunyan sunyanz sunyanzi。這樣處理可以減少term數量,但弊端就是你輸入yanzi就沒法搜尋到了(比對粒度變粗了,沒有ngram比對粒度精确),你懂的。

下面給出一個拼音搜尋的示例程式,代碼如下:

package com.yida.framework.lucene5.pinyin;

import java.io.ioexception;

import net.sourceforge.pinyin4j.pinyinhelper;

import net.sourceforge.pinyin4j.format.hanyupinyincasetype;

import net.sourceforge.pinyin4j.format.hanyupinyinoutputformat;

import net.sourceforge.pinyin4j.format.hanyupinyintonetype;

import net.sourceforge.pinyin4j.format.exception.badhanyupinyinoutputformatcombination;

import org.apache.lucene.analysis.tokenfilter;

import org.apache.lucene.analysis.tokenstream;

import org.apache.lucene.analysis.tokenattributes.chartermattribute;

/**

* 拼音過濾器[負責将漢字轉換為拼音]

* @author lanxiaowei

*

*/

public class pinyintokenfilter extends tokenfilter {

private final chartermattribute termatt;

/**漢語拼音輸出轉換器[基于pinyin4j]*/

private hanyupinyinoutputformat outputformat;

/**對于多音字會有多個拼音,firstchar即表示隻取第一個,否則會取多個拼音*/

private boolean firstchar;

/**term最小長度[小于這個最小長度的不進行拼音轉換]*/

private int mintermlength;

private char[] curtermbuffer;

private int curtermlength;

private boolean outchinese;

public pinyintokenfilter(tokenstream input) {

this(input, constant.default_first_char, constant.default_min_term_lrngth);

}

public pinyintokenfilter(tokenstream input, boolean firstchar) {

this(input, firstchar, constant.default_min_term_lrngth);

public pinyintokenfilter(tokenstream input, boolean firstchar,

int mintermlenght) {

this(input, firstchar, mintermlenght, constant.default_ngram_chinese);

int mintermlenght, boolean outchinese) {

super(input);

this.termatt = ((chartermattribute) addattribute(chartermattribute.class));

this.outputformat = new hanyupinyinoutputformat();

this.firstchar = false;

this.mintermlength = constant.default_min_term_lrngth;

this.outchinese = constant.default_out_chinese;

this.firstchar = firstchar;

this.mintermlength = mintermlenght;

if (this.mintermlength < 1) {

this.mintermlength = 1;

}

this.outputformat.setcasetype(hanyupinyincasetype.lowercase);

this.outputformat.settonetype(hanyupinyintonetype.without_tone);

public static boolean containschinese(string s) {

if ((s == null) || ("".equals(s.trim())))

return false;

for (int i = 0; i < s.length(); i++) {

if (ischinese(s.charat(i)))

return true;

return false;

public static boolean ischinese(char a) {

int v = a;

return (v >= 19968) && (v <= 171941);

public final boolean incrementtoken() throws ioexception {

while (true) {

if (this.curtermbuffer == null) {

if (!this.input.incrementtoken()) {

return false;

}

this.curtermbuffer = ((char[]) this.termatt.buffer().clone());

this.curtermlength = this.termatt.length();

}

if (this.outchinese) {

this.outchinese = false;

this.termatt.copybuffer(this.curtermbuffer, 0,

this.curtermlength);

this.outchinese = true;

string chinese = this.termatt.tostring();

if (containschinese(chinese)) {

this.outchinese = true;

if (chinese.length() >= this.mintermlength) {

try {

string chineseterm = getpinyinstring(chinese);

this.termatt.copybuffer(chineseterm.tochararray(), 0,

chineseterm.length());

} catch (badhanyupinyinoutputformatcombination badhanyupinyinoutputformatcombination) {

badhanyupinyinoutputformatcombination.printstacktrace();

}

this.curtermbuffer = null;

return true;

this.curtermbuffer = null;

public void reset() throws ioexception {

super.reset();

private string getpinyinstring(string chinese)

throws badhanyupinyinoutputformatcombination {

string chineseterm = null;

if (this.firstchar) {

stringbuilder sb = new stringbuilder();

for (int i = 0; i < chinese.length(); i++) {

string[] array = pinyinhelper.tohanyupinyinstringarray(

chinese.charat(i), this.outputformat);

if ((array != null) && (array.length != 0)) {

string s = array[0];

char c = s.charat(0);

sb.append(c);

chineseterm = sb.tostring();

} else {

chineseterm = pinyinhelper.tohanyupinyinstring(chinese,

this.outputformat, "");

return chineseterm;

}

import org.apache.lucene.analysis.tokenattributes.offsetattribute;

* 對轉換後的拼音進行ngram處理的tokenfilter

public class pinyinngramtokenfilter extends tokenfilter {

public static final boolean default_ngram_chinese = false;

private final int mingram;

private final int maxgram;

/**是否需要對中文進行ngram[預設為false]*/

private final boolean ngramchinese;

private final offsetattribute offsetatt;

private int curgramsize;

private int tokstart;

public pinyinngramtokenfilter(tokenstream input) {

this(input, constant.default_min_gram, constant.default_max_gram, default_ngram_chinese);

public pinyinngramtokenfilter(tokenstream input, int maxgram) {

this(input, constant.default_min_gram, maxgram, default_ngram_chinese);

public pinyinngramtokenfilter(tokenstream input, int mingram, int maxgram) {

this(input, mingram, maxgram, default_ngram_chinese);

public pinyinngramtokenfilter(tokenstream input, int mingram, int maxgram,

boolean ngramchinese) {

this.offsetatt = ((offsetattribute) addattribute(offsetattribute.class));

if (mingram < 1) {

throw new illegalargumentexception(

"mingram must be greater than zero");

if (mingram > maxgram) {

"mingram must not be greater than maxgram");

this.mingram = mingram;

this.maxgram = maxgram;

this.ngramchinese = ngramchinese;

if ((!this.ngramchinese)

&& (containschinese(this.termatt.tostring()))) {

this.curgramsize = this.mingram;

this.tokstart = this.offsetatt.startoffset();

if (this.curgramsize <= this.maxgram) {

if (this.curgramsize >= this.curtermlength) {

clearattributes();

this.offsetatt.setoffset(this.tokstart + 0, this.tokstart

+ this.curtermlength);

this.termatt.copybuffer(this.curtermbuffer, 0,

this.curtermlength);

int start = 0;

int end = start + this.curgramsize;

clearattributes();

this.offsetatt.setoffset(this.tokstart + start, this.tokstart

+ end);

this.termatt.copybuffer(this.curtermbuffer, start,

this.curgramsize);

this.curgramsize += 1;

this.curtermbuffer = null;

import java.io.bufferedreader;

import java.io.reader;

import java.io.stringreader;

import org.apache.lucene.analysis.analyzer;

import org.apache.lucene.analysis.tokenizer;

import org.apache.lucene.analysis.core.lowercasefilter;

import org.apache.lucene.analysis.core.stopanalyzer;

import org.apache.lucene.analysis.core.stopfilter;

import org.wltea.analyzer.lucene.iktokenizer;

* 自定義拼音分詞器

public class pinyinanalyzer extends analyzer {

private int mingram;

private int maxgram;

private boolean usesmart;

public pinyinanalyzer() {

super();

this.maxgram = constant.default_max_gram;

this.mingram = constant.default_min_gram;

this.usesmart = constant.default_ik_use_smart;

public pinyinanalyzer(boolean usesmart) {

this.usesmart = usesmart;

public pinyinanalyzer(int maxgram) {

public pinyinanalyzer(int maxgram,boolean usesmart) {

public pinyinanalyzer(int mingram, int maxgram,boolean usesmart) {

@override

protected tokenstreamcomponents createcomponents(string fieldname) {

reader reader = new bufferedreader(new stringreader(fieldname));

tokenizer tokenizer = new iktokenizer(reader, usesmart);

//轉拼音

tokenstream tokenstream = new pinyintokenfilter(tokenizer,

constant.default_first_char, constant.default_min_term_lrngth);

//對拼音進行ngram處理

tokenstream = new pinyinngramtokenfilter(tokenstream, this.mingram, this.maxgram);

tokenstream = new lowercasefilter(tokenstream);

tokenstream = new stopfilter(tokenstream,stopanalyzer.english_stop_words_set);

return new analyzer.tokenstreamcomponents(tokenizer, tokenstream);

package com.yida.framework.lucene5.pinyin.test;

import com.yida.framework.lucene5.pinyin.pinyinanalyzer;

import com.yida.framework.lucene5.util.analyzerutils;

* 拼音分詞器測試

public class pinyinanalyzertest {

public static void main(string[] args) throws ioexception {

string text = "2011年3月31日,孫燕姿與相戀5年多的男友納迪姆在新加坡登記結婚";

analyzer analyzer = new pinyinanalyzer(20);

analyzerutils.displaytokens(analyzer, text);

import org.apache.lucene.document.document;

import org.apache.lucene.document.field.store;

import org.apache.lucene.document.textfield;

import org.apache.lucene.index.directoryreader;

import org.apache.lucene.index.indexreader;

import org.apache.lucene.index.indexwriter;

import org.apache.lucene.index.indexwriterconfig;

import org.apache.lucene.index.term;

import org.apache.lucene.search.indexsearcher;

import org.apache.lucene.search.query;

import org.apache.lucene.search.scoredoc;

import org.apache.lucene.search.termquery;

import org.apache.lucene.search.topdocs;

import org.apache.lucene.store.directory;

import org.apache.lucene.store.ramdirectory;

* 拼音搜尋測試

public class pinyinsearchtest {

public static void main(string[] args) throws exception {

string fieldname = "content";

string querystring = "sunyanzi";

directory directory = new ramdirectory();

analyzer analyzer = new pinyinanalyzer();

indexwriterconfig config = new indexwriterconfig(analyzer);

indexwriter writer = new indexwriter(directory, config);

/****************建立測試索引begin********************/

document doc1 = new document();

doc1.add(new textfield(fieldname, "孫燕姿,新加坡籍華語流行音樂女歌手,剛出道便被譽為華語“四小天後”之一。", store.yes));

writer.adddocument(doc1);

document doc2 = new document();

doc2.add(new textfield(fieldname, "1978年7月23日,孫燕姿出生于新加坡,祖籍中國廣東省潮州市,父親孫耀宏是新加坡南洋理工大學電機系教授,母親是一名教師。姐姐孫燕嘉比燕姿大三歲,任職新加坡巴克萊投資銀行副總裁,妹妹孫燕美小六歲,是新加坡國立大學醫學碩士,燕姿作為家中的第二個女兒,次+女=姿,故取名“燕姿”", store.yes));

writer.adddocument(doc2);

document doc3 = new document();

doc3.add(new textfield(fieldname, "孫燕姿畢業于新加坡南洋理工大學,父親是燕姿音樂的啟蒙者,燕姿從小熱愛音樂,五歲開始學鋼琴,十歲第一次在舞台上唱歌,十八歲寫下第一首自己作詞作曲的歌《someone》。", store.yes));

writer.adddocument(doc3);

document doc4 = new document();

doc4.add(new textfield(fieldname, "華納音樂于2000年6月9日推出孫燕姿的首張音樂專輯《孫燕姿同名專輯》,孫燕姿由此開始了她的音樂之旅。", store.yes));

writer.adddocument(doc4);

document doc5 = new document();

doc5.add(new textfield(fieldname, "2000年,孫燕姿的首張專輯《孫燕姿同名專輯》獲得台灣地區年度專輯銷售冠軍,在台灣賣出30餘萬張的好成績,同年底,發行第二張專輯《我要的幸福》", store.yes));

writer.adddocument(doc5);

document doc6 = new document();

doc6.add(new textfield(fieldname, "2011年3月31日,孫燕姿與相戀5年多的男友納迪姆在新加坡登記結婚", store.yes));

writer.adddocument(doc6);

//強制合并為1個段

writer.forcemerge(1);

writer.close();

/****************建立測試索引end********************/

indexreader reader = directoryreader.open(directory);

indexsearcher searcher = new indexsearcher(reader);

query query = new termquery(new term(fieldname,querystring));

topdocs topdocs = searcher.search(query,integer.max_value);

scoredoc[] docs = topdocs.scoredocs;

if(null == docs || docs.length <= 0) {

system.out.println("no results.");

return;

//列印查詢結果

system.out.println("id[score]\tcontent");

for (scoredoc scoredoc : docs) {

int docid = scoredoc.doc;

document document = searcher.doc(docid);

string content = document.get(fieldname);

float score = scoredoc.score;

system.out.println(docid + "[" + score + "]\t" + content);

我隻貼出了比較核心的幾個類,至于關聯的其他類,請你們下載下傳底下的附件再詳細的看吧。拼音搜尋就說這麼多了,如果你還有什麼問題,請qq上聯系我(qq:7-3-6-0-3-1-3-0-5),或者加我的java技術群跟我們一起交流學習,我會非常的歡迎的。群号:

轉載:http://iamyida.iteye.com/blog/2207080