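In the Java layer, messages are handled by a Looper that runs an endless loop, repeatedly reading a Message from the MessageQueue and dispatching it. You may have noticed, though, that MessageQueue declares several native functions. MessageQueue is in fact implemented twice: once in Java and once in the native layer.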

MessageQueue

MessageQueue initialization

frameworks/base/core/java/android/os/MessageQueue.java

MessageQueue(boolean quitAllowed) {
    mQuitAllowed = quitAllowed;
    // nativeInit() returns the address of the NativeMessageQueue as a long
    mPtr = nativeInit();
}

frameworks/base/core/jni/android_os_MessageQueue.cpp

static jlong android_os_MessageQueue_nativeInit(JNIEnv* env, jclass clazz) {
    // Create the NativeMessageQueue
    NativeMessageQueue* nativeMessageQueue = new NativeMessageQueue();
    if (!nativeMessageQueue) {
        jniThrowRuntimeException(env, "Unable to allocate native queue");
        return 0;
    }

    nativeMessageQueue->incStrong(env);
    return reinterpret_cast<jlong>(nativeMessageQueue);
}

NativeMessageQueue::NativeMessageQueue() :
        mPollEnv(NULL), mPollObj(NULL), mExceptionObj(NULL) {
    // Reuse the native Looper already bound to this thread, or create one and bind it
    mLooper = Looper::getForThread();
    if (mLooper == NULL) {
        mLooper = new Looper(false);
        Looper::setForThread(mLooper);
    }
}
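The pointer-as-handle pattern used by nativeInit can be tried outside of JNI. The following is only a minimal sketch under stated assumptions: NativeQueue, queueInit, queueWake, queueWakeCount and queueDestroy are hypothetical names invented for illustration, and std::int64_t stands in for jlong.

```cpp
#include <cstdint>

// Hypothetical stand-in for NativeMessageQueue; only the handle round trip matters here.
struct NativeQueue {
    int wakeCount = 0;
    void wake() { ++wakeCount; }
};

// Plays the role of nativeInit(): allocate the object and return its address
// as a 64-bit integer (std::int64_t stands in for jlong).
std::int64_t queueInit() {
    NativeQueue* queue = new NativeQueue();
    return reinterpret_cast<std::int64_t>(queue);
}

// Plays the role of nativeWake(): recover the pointer from the handle and use it.
void queueWake(std::int64_t handle) {
    NativeQueue* queue = reinterpret_cast<NativeQueue*>(handle);
    queue->wake();
}

// Reads state back through the handle.
int queueWakeCount(std::int64_t handle) {
    return reinterpret_cast<NativeQueue*>(handle)->wakeCount;
}

// Releases the object; the handle must not be used afterwards.
void queueDestroy(std::int64_t handle) {
    delete reinterpret_cast<NativeQueue*>(handle);
}
```

The Java side only ever stores the integer. A value of 0 can then serve as "no native object", which is what the ptr == 0 check in next() relies on.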

Reading a message from MessageQueue

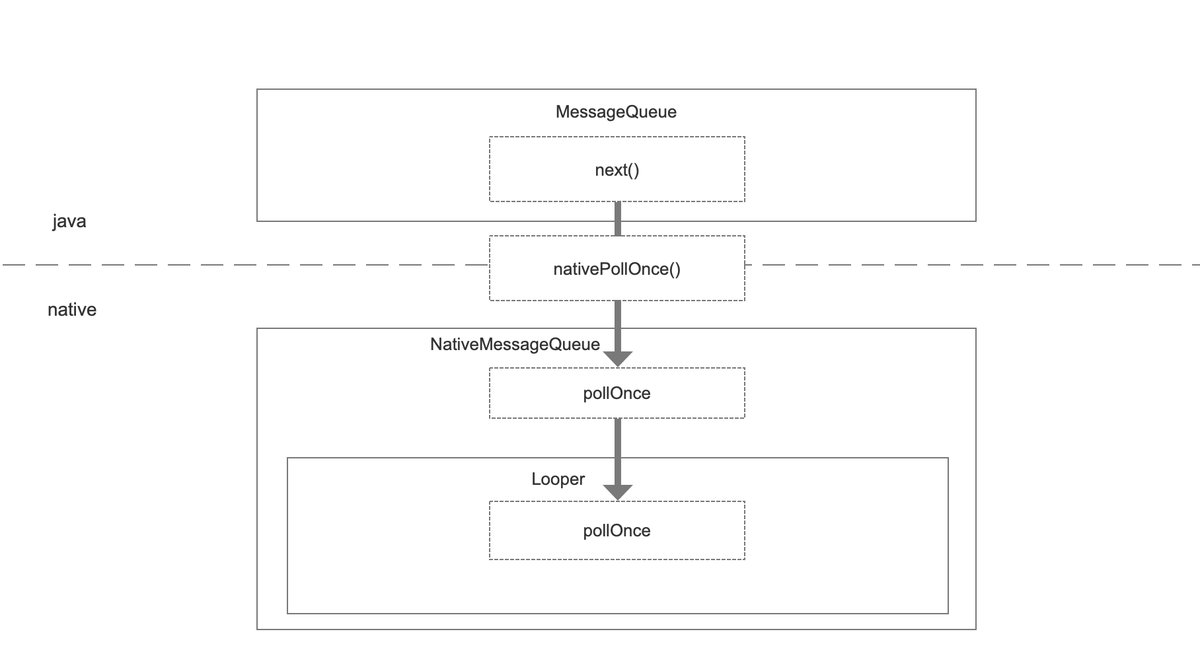

When the Java-layer MessageQueue calls next(), it also gives the native layer a chance to process native messages.

frameworks/base/core/java/android/os/MessageQueue.java

Message next() {
    // Return here if the message loop has already quit and been disposed.
    // This can happen if the application tries to restart a looper after quit
    // which is not supported.
    final long ptr = mPtr;
    if (ptr == 0) {
        return null;
    }

    int pendingIdleHandlerCount = -1; // -1 only during first iteration
    int nextPollTimeoutMillis = 0;
    for (;;) {
        if (nextPollTimeoutMillis != 0) {
            Binder.flushPendingCommands();
        }

        // mPtr holds the NativeMessageQueue pointer; nativePollOnce performs one native poll
        nativePollOnce(ptr, nextPollTimeoutMillis);

        ...
    }
}

frameworks/base/core/jni/android_os_MessageQueue.cpp

static void android_os_MessageQueue_nativePollOnce(JNIEnv* env, jobject obj,
        jlong ptr, jint timeoutMillis) {
    NativeMessageQueue* nativeMessageQueue = reinterpret_cast<NativeMessageQueue*>(ptr);
    nativeMessageQueue->pollOnce(env, obj, timeoutMillis);
}

void NativeMessageQueue::pollOnce(JNIEnv* env, jobject pollObj, int timeoutMillis) {
    mPollEnv = env;
    mPollObj = pollObj;
    // Ask the native Looper to poll once
    mLooper->pollOnce(timeoutMillis);
    mPollObj = NULL;
    mPollEnv = NULL;

    if (mExceptionObj) {
        env->Throw(mExceptionObj);
        env->DeleteLocalRef(mExceptionObj);
        mExceptionObj = NULL;
    }
}

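Adding a message to MessageQueue

If you look at the Java-layer MessageQueue, you will find that adding a message can also call a native function, nativeWake. The wake-up is needed because the thread may be blocked inside the native Looper, which would keep the new message from being handled promptly, so the native Looper has to be woken.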

frameworks/base/core/java/android/os/MessageQueue.java

boolean enqueueMessage(Message msg, long when) {
    ....
    nativeWake(mPtr);
    ...
}

frameworks/base/core/jni/android_os_MessageQueue.cpp

static void android_os_MessageQueue_nativeWake(JNIEnv* env, jclass clazz, jlong ptr) {
    NativeMessageQueue* nativeMessageQueue = reinterpret_cast<NativeMessageQueue*>(ptr);
    nativeMessageQueue->wake();
}

void NativeMessageQueue::wake() {
    mLooper->wake();
}

Looper

As the MessageQueue code above shows, all of the work ends up being handed to the native Looper.

Looper initialization

system/core/libutils/Looper.cpp

Looper::Looper(bool allowNonCallbacks)
    : mAllowNonCallbacks(allowNonCallbacks),
      mSendingMessage(false),
      mPolling(false),
      mEpollRebuildRequired(false),
      mNextRequestSeq(0),
      mResponseIndex(0),
      mNextMessageUptime(LLONG_MAX) {
    // Create the eventfd used to wake the Looper (an eventfd, not a pipe)
    mWakeEventFd.reset(eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC));
    LOG_ALWAYS_FATAL_IF(mWakeEventFd.get() < 0, "Could not make wake event fd: %s", strerror(errno));

    AutoMutex _l(mLock);
    // The native Looper is essentially a wrapper around epoll; set up epoll here
    rebuildEpollLocked();
}

void Looper::rebuildEpollLocked() {
    ...

    // Create the epoll instance
    // Allocate the new epoll instance and register the wake pipe.
    mEpollFd.reset(epoll_create1(EPOLL_CLOEXEC));
    LOG_ALWAYS_FATAL_IF(mEpollFd < 0, "Could not create epoll instance: %s", strerror(errno));

    struct epoll_event eventItem;
    memset(& eventItem, 0, sizeof(epoll_event)); // zero out unused members of data field union
    eventItem.events = EPOLLIN;
    eventItem.data.fd = mWakeEventFd.get();
    // Register the wake eventfd with epoll
    int result = epoll_ctl(mEpollFd.get(), EPOLL_CTL_ADD, mWakeEventFd.get(), &eventItem);
    LOG_ALWAYS_FATAL_IF(result != 0, "Could not add wake event fd to epoll instance: %s",
                        strerror(errno));

    ...
}
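The eventfd-plus-epoll setup above can be reproduced in a few lines on Linux. This is a minimal sketch rather than the Looper implementation: WakeablePoller and the pollerOpen, pollerWait, pollerWake and pollerClose helpers are hypothetical names, and the buffer size of 16 events is an arbitrary choice.

```cpp
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>
#include <cstdint>
#include <cstring>

// Hypothetical helper type: owns one epoll instance and one wake eventfd.
struct WakeablePoller {
    int epollFd = -1;
    int wakeFd = -1;
};

// Release both descriptors if they are open.
void pollerClose(WakeablePoller& p) {
    if (p.wakeFd >= 0) { close(p.wakeFd); p.wakeFd = -1; }
    if (p.epollFd >= 0) { close(p.epollFd); p.epollFd = -1; }
}

// Create the eventfd, create the epoll instance, then register the eventfd
// for EPOLLIN, in the same order as the listing above.
bool pollerOpen(WakeablePoller& p) {
    p.wakeFd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);
    if (p.wakeFd < 0) return false;
    p.epollFd = epoll_create1(EPOLL_CLOEXEC);
    if (p.epollFd < 0) { pollerClose(p); return false; }

    epoll_event item;
    std::memset(&item, 0, sizeof(item));  // zero the unused members of the data union
    item.events = EPOLLIN;
    item.data.fd = p.wakeFd;
    if (epoll_ctl(p.epollFd, EPOLL_CTL_ADD, p.wakeFd, &item) != 0) {
        pollerClose(p);
        return false;
    }
    return true;
}

// Wait up to timeoutMillis; returns the number of ready fds, or -1 on error.
int pollerWait(WakeablePoller& p, int timeoutMillis) {
    epoll_event events[16];
    return epoll_wait(p.epollFd, events, 16, timeoutMillis);
}

// Make the eventfd readable by adding 1 to its counter.
bool pollerWake(WakeablePoller& p) {
    std::uint64_t inc = 1;
    return write(p.wakeFd, &inc, sizeof(inc)) == static_cast<ssize_t>(sizeof(inc));
}
```

With a timeout of 0, pollerWait reports no ready fd until pollerWake has been called. A thread blocked in epoll_wait with a long timeout returns early for the same reason.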

A single Looper poll

system/core/libutils/Looper.cpp

int Looper::pollOnce(int timeoutMillis, int* outFd, int* outEvents, void** outData) {
    int result = 0;
    for (;;) {
        // First hand back any pending Response whose request has an identifier (ident >= 0)
        while (mResponseIndex < mResponses.size()) {
            const Response& response = mResponses.itemAt(mResponseIndex++);
            int ident = response.request.ident;
            if (ident >= 0) {
                int fd = response.request.fd;
                int events = response.events;
                void* data = response.request.data;
#if DEBUG_POLL_AND_WAKE
                ALOGD("%p ~ pollOnce - returning signalled identifier %d: "
                        "fd=%d, events=0x%x, data=%p",
                        this, ident, fd, events, data);
#endif
                if (outFd != nullptr) *outFd = fd;
                if (outEvents != nullptr) *outEvents = events;
                if (outData != nullptr) *outData = data;
                return ident;
            }
        }

        // A non-zero result means pollInner has already run: leave the loop and return it
        if (result != 0) {
#if DEBUG_POLL_AND_WAKE
            ALOGD("%p ~ pollOnce - returning result %d", this, result);
#endif
            if (outFd != nullptr) *outFd = 0;
            if (outEvents != nullptr) *outEvents = 0;
            if (outData != nullptr) *outData = nullptr;
            return result;
        }

        // Wait on epoll and process whatever arrived
        result = pollInner(timeoutMillis);
    }
}

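pollInner reads events from epoll and queues a Response for each registered fd, then handles the due messages in mMessageEnvelopes, and finally invokes the callback of every queued Response whose request has one.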

int Looper::pollInner(int timeoutMillis) {
    ....

    // Read events
    struct epoll_event eventItems[EPOLL_MAX_EVENTS];
    int eventCount = epoll_wait(mEpollFd.get(), eventItems, EPOLL_MAX_EVENTS, timeoutMillis);

    ...

    // Handle events
    for (int i = 0; i < eventCount; i++) {
        int fd = eventItems[i].data.fd;
        uint32_t epollEvents = eventItems[i].events;
        // Wake event
        if (fd == mWakeEventFd.get()) {
            if (epollEvents & EPOLLIN) {
                awoken();
            } else {
                ALOGW("Ignoring unexpected epoll events 0x%x on wake event fd.", epollEvents);
            }
        } else {
            // Ordinary event. Every fd registered with the Looper is stored as a Request
            ssize_t requestIndex = mRequests.indexOfKey(fd);
            if (requestIndex >= 0) {
                int events = 0;
                if (epollEvents & EPOLLIN) events |= EVENT_INPUT;
                if (epollEvents & EPOLLOUT) events |= EVENT_OUTPUT;
                if (epollEvents & EPOLLERR) events |= EVENT_ERROR;
                if (epollEvents & EPOLLHUP) events |= EVENT_HANGUP;
                // Append the event to the mResponses queue
                pushResponse(events, mRequests.valueAt(requestIndex));
            } else {
                ALOGW("Ignoring unexpected epoll events 0x%x on fd %d that is "
                        "no longer registered.", epollEvents, fd);
            }
        }
    }
Done: ;

    // Handle MessageEnvelope messages. The logic mirrors the Java layer: take the
    // handler stored with the message and call its handleMessage function
    mNextMessageUptime = LLONG_MAX;
    while (mMessageEnvelopes.size() != 0) {
        nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);
        const MessageEnvelope& messageEnvelope = mMessageEnvelopes.itemAt(0);
        if (messageEnvelope.uptime <= now) {
            // Remove the envelope from the list.
            // We keep a strong reference to the handler until the call to handleMessage
            // finishes. Then we drop it so that the handler can be deleted *before*
            // we reacquire our lock.
            { // obtain handler
                sp<MessageHandler> handler = messageEnvelope.handler;
                Message message = messageEnvelope.message;
                mMessageEnvelopes.removeAt(0);
                mSendingMessage = true;
                mLock.unlock();

#if DEBUG_POLL_AND_WAKE || DEBUG_CALLBACKS
                ALOGD("%p ~ pollOnce - sending message: handler=%p, what=%d",
                        this, handler.get(), message.what);
#endif
                handler->handleMessage(message);
            } // release handler

            mLock.lock();
            mSendingMessage = false;
            result = POLL_CALLBACK;
        } else {
            // The last message left at the head of the queue determines the next wakeup time.
            mNextMessageUptime = messageEnvelope.uptime;
            break;
        }
    }

    // Release lock.
    mLock.unlock();

    // Invoke the callbacks of the fd events collected in mResponses
    for (size_t i = 0; i < mResponses.size(); i++) {
        Response& response = mResponses.editItemAt(i);
        if (response.request.ident == POLL_CALLBACK) {
            int fd = response.request.fd;
            int events = response.events;
            void* data = response.request.data;
#if DEBUG_POLL_AND_WAKE || DEBUG_CALLBACKS
            ALOGD("%p ~ pollOnce - invoking fd event callback %p: fd=%d, events=0x%x, data=%p",
                    this, response.request.callback.get(), fd, events, data);
#endif
            // Invoke the callback. Note that the file descriptor may be closed by
            // the callback (and potentially even reused) before the function returns so
            // we need to be a little careful when removing the file descriptor afterwards.
            int callbackResult = response.request.callback->handleEvent(fd, events, data);
            if (callbackResult == 0) {
                removeFd(fd, response.request.seq);
            }

            // Clear the callback reference in the response structure promptly because we
            // will not clear the response vector itself until the next poll.
            response.request.callback.clear();
            result = POLL_CALLBACK;
        }
    }
    return result;
}
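The message-handling loop in pollInner boils down to "run everything at the head of a time-sorted queue that is already due, then remember when the next one is due". Below is a simplified sketch of that idea only. Envelope and dispatchDue are hypothetical names, std::function replaces sp<MessageHandler>, and the locking done by the real code is left out.

```cpp
#include <cstdint>
#include <functional>
#include <limits>
#include <vector>

// Hypothetical, simplified envelope: a due time plus the work to run.
struct Envelope {
    std::int64_t uptime;
    std::function<void()> handle;
};

// Runs every envelope at the head of the time-sorted queue whose uptime is <= now.
// Returns the uptime of the first envelope still waiting, or the maximum
// int64 value when the queue is empty (the role LLONG_MAX plays in the listing).
std::int64_t dispatchDue(std::vector<Envelope>& queue, std::int64_t now) {
    while (!queue.empty()) {
        if (queue.front().uptime > now) {
            // The head is not due yet; it determines the next wakeup time.
            return queue.front().uptime;
        }
        // Copy the handler out before erasing, so it stays valid while it runs.
        std::function<void()> handle = queue.front().handle;
        queue.erase(queue.begin());
        handle();
    }
    return std::numeric_limits<std::int64_t>::max();
}
```

The returned value corresponds to mNextMessageUptime. A caller could use it to work out how long the next wait may block.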

Waking the Looper

Waking the Looper is simple: write a value to the mWakeEventFd eventfd.

void Looper::wake() {
#if DEBUG_POLL_AND_WAKE
    ALOGD("%p ~ wake", this);
#endif

    uint64_t inc = 1;
    // TEMP_FAILURE_RETRY repeats the call while it fails with EINTR
    ssize_t nWrite = TEMP_FAILURE_RETRY(write(mWakeEventFd.get(), &inc, sizeof(uint64_t)));
    if (nWrite != sizeof(uint64_t)) {
        if (errno != EAGAIN) {
            LOG_ALWAYS_FATAL("Could not write wake signal to fd %d (returned %zd): %s",
                             mWakeEventFd.get(), nWrite, strerror(errno));
        }
    }
}
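An eventfd is a kernel-maintained 64-bit counter, so several wake() calls made before the Looper gets around to polling collapse into a single readable event. The sketch below illustrates this on Linux. signalWake and drainWake are hypothetical helpers, and since awoken() is not shown in the listings, draining the counter with one read is an assumption about what it does.

```cpp
#include <sys/eventfd.h>
#include <unistd.h>
#include <cerrno>
#include <cstdint>

// Add 1 to the eventfd counter, retrying on EINTR (the effect of TEMP_FAILURE_RETRY).
bool signalWake(int fd) {
    std::uint64_t inc = 1;
    ssize_t n;
    do {
        n = write(fd, &inc, sizeof(inc));
    } while (n < 0 && errno == EINTR);
    return n == static_cast<ssize_t>(sizeof(inc));
}

// Read the counter, which also resets it to zero. Returns the number of wake
// signals that were pending, or 0 when none were (a non-blocking read on a
// zero counter fails with EAGAIN).
std::uint64_t drainWake(int fd) {
    std::uint64_t counter = 0;
    ssize_t n;
    do {
        n = read(fd, &counter, sizeof(counter));
    } while (n < 0 && errno == EINTR);
    return n == static_cast<ssize_t>(sizeof(counter)) ? counter : 0;
}
```

Three signals followed by one drain leave the fd unreadable again, so a burst of enqueued messages costs the poll loop one wakeup rather than three.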

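Adding an fd to the Looper

Registering an fd is also simple: the fd and its parameters are wrapped in a Request, which is stored in mRequests, and the fd is added to the epoll watch set.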

int Looper::addFd(int fd, int ident, int events, const sp<LooperCallback>& callback, void* data) {
    ...

    { // acquire lock
        AutoMutex _l(mLock);

        // Build the request
        Request request;
        request.fd = fd;
        request.ident = ident;
        request.events = events;
        request.seq = mNextRequestSeq++;
        request.callback = callback;
        request.data = data;
        if (mNextRequestSeq == -1) mNextRequestSeq = 0; // reserve sequence number -1

        struct epoll_event eventItem;
        request.initEventItem(&eventItem);

        ssize_t requestIndex = mRequests.indexOfKey(fd);
        if (requestIndex < 0) {
            // New fd: add it to the epoll watch set
            int epollResult = epoll_ctl(mEpollFd.get(), EPOLL_CTL_ADD, fd, &eventItem);
            if (epollResult < 0) {
                ALOGE("Error adding epoll events for fd %d: %s", fd, strerror(errno));
                return -1;
            }
            mRequests.add(fd, request);
        } else {
            // fd already registered: modify its existing epoll registration
            int epollResult = epoll_ctl(mEpollFd.get(), EPOLL_CTL_MOD, fd, &eventItem);
            if (epollResult < 0) {
                if (errno == ENOENT) {
#if DEBUG_CALLBACKS
                    ALOGD("%p ~ addFd - EPOLL_CTL_MOD failed due to file descriptor "
                            "being recycled, falling back on EPOLL_CTL_ADD: %s",
                            this, strerror(errno));
#endif
                    epollResult = epoll_ctl(mEpollFd.get(), EPOLL_CTL_ADD, fd, &eventItem);
                    if (epollResult < 0) {
                        ALOGE("Error modifying or adding epoll events for fd %d: %s",
                                fd, strerror(errno));
                        return -1;
                    }
                    scheduleEpollRebuildLocked();
                } else {
                    ALOGE("Error modifying epoll events for fd %d: %s", fd, strerror(errno));
                    return -1;
                }
            }
            mRequests.replaceValueAt(requestIndex, request);
        }
    } // release lock
    return 1;
}

Adding a message to the Looper

Build a MessageEnvelope and insert it into mMessageEnvelopes, which is kept sorted by uptime.

void Looper::sendMessageAtTime(nsecs_t uptime, const sp<MessageHandler>& handler,
        const Message& message) {
#if DEBUG_CALLBACKS
    ALOGD("%p ~ sendMessageAtTime - uptime=%" PRId64 ", handler=%p, what=%d",
            this, uptime, handler.get(), message.what);
#endif

    size_t i = 0;
    { // acquire lock
        AutoMutex _l(mLock);

        // Find the insertion point that keeps the list sorted by uptime
        size_t messageCount = mMessageEnvelopes.size();
        while (i < messageCount && uptime >= mMessageEnvelopes.itemAt(i).uptime) {
            i += 1;
        }

        MessageEnvelope messageEnvelope(uptime, handler, message);
        mMessageEnvelopes.insertAt(messageEnvelope, i, 1);

        // No wake is needed while the Looper is busy sending a message:
        // it recomputes the next wakeup time once it has finished
        if (mSendingMessage) {
            return;
        }
    } // release lock

    // Wake the poll loop only when the new message became the head of the queue
    if (i == 0) {
        wake();
    }
}
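The insertion rule in sendMessageAtTime has two properties worth seeing in isolation: messages with equal uptime keep their sending order, and a wake is only needed when the new message lands at index 0 while no message is being sent. The following is a minimal sketch, assuming a plain std::vector in place of the Looper's own container. TimedMessage, insertByUptime and needsWake are hypothetical names.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical, simplified message: a due time and an identifier.
struct TimedMessage {
    std::int64_t uptime;
    int what;
};

// Inserts msg after every queued message whose uptime is <= msg.uptime, so the
// queue stays sorted and equal uptimes stay in first-in, first-out order.
// Returns the index at which msg was inserted.
std::size_t insertByUptime(std::vector<TimedMessage>& queue, const TimedMessage& msg) {
    std::size_t i = 0;
    while (i < queue.size() && msg.uptime >= queue[i].uptime) {
        ++i;
    }
    queue.insert(queue.begin() + static_cast<std::ptrdiff_t>(i), msg);
    return i;
}

// Mirrors the two checks at the end of sendMessageAtTime: wake only when the
// new message is the head and the loop is not in the middle of sending.
bool needsWake(std::size_t index, bool sendingMessage) {
    return index == 0 && !sendingMessage;
}
```

The linear scan is fine for short queues. A binary search with std::upper_bound would find the same position in a sorted vector.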