Academician Huang responds to Altman's $7 trillion chip plan: he laughed

Yuyang and Cressy, reporting from Aofei Temple

QbitAI | WeChat official account QbitAI

Sam Altman was recently reported to be seeking $7 trillion to take on Nvidia and reshape the global semiconductor landscape.

Right on its heels, Jensen Huang responded: come on, that's an exaggeration.

His answer also came with a touch of sarcasm (just kidding):

($7 trillion) can obviously buy all the GPUs.

If you assume that computers aren't going to get any faster, you might conclude that we need 14 planets, 3 galaxies, and 4 suns to fuel it all. But computer architecture is still advancing.

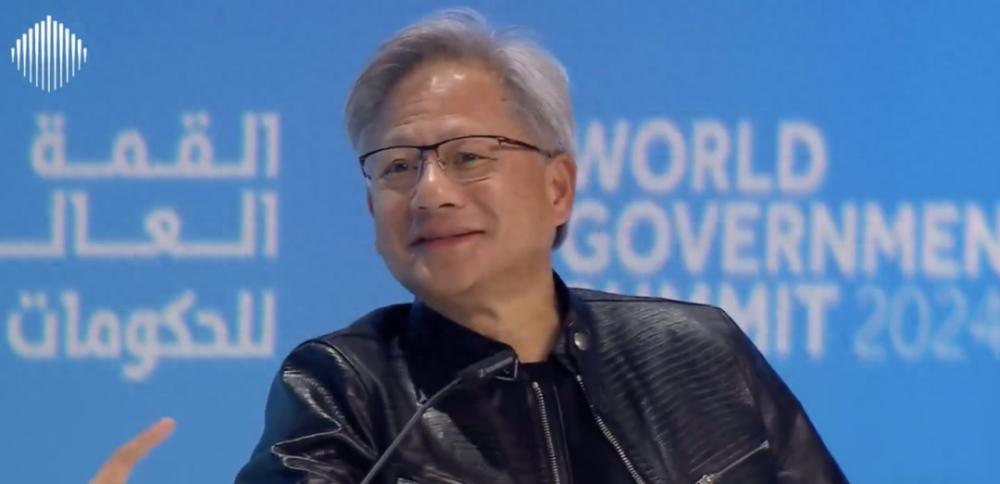

△ Image source: World Government Summit

In short, Huang believes that more efficient, lower-cost chips will keep arriving, which makes Altman's massive "$7 trillion" investment less necessary.

That said, Huang didn't rule anything out. He also stressed that investment in AI will keep growing for the foreseeable future, and predicted that the size of AI data centers will double within five years.

In fact, ever since the news of Altman's $7 trillion plan broke, netizens have been feasting on the gossip.

According to Gartner's estimate, the global semiconductor industry's total revenue for 2023 comes to $533 billion, and $7 trillion is roughly 13 times that figure.
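For readers who want to check the ratio, here is a minimal Python sketch using only the two figures quoted above (the constant names are illustrative, not taken from any source):

```python
# Figures as reported in the article, in US dollars.
PLAN_USD = 7_000_000_000_000         # the reported $7 trillion fundraising target
INDUSTRY_2023_USD = 533_000_000_000  # Gartner's 2023 global semiconductor revenue estimate

# How many times larger the plan is than a full year of industry revenue.
ratio = PLAN_USD / INDUSTRY_2023_USD
print(f"$7T is {ratio:.1f}x the 2023 industry revenue")
```

It prints a ratio of about 13.1, so the $7 trillion target is roughly 13 times the 2023 industry revenue.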

Netizens reckon this money would be enough not only to swallow Nvidia, TSMC, Intel, Samsung, Qualcomm, Broadcom, AMD, ASML, and a string of other leading semiconductor companies in one go, but also to buy a Meta with what's left over.

So what did Huang share this time? For anyone interested, here is the transcript~ (compiled by Kimi and ChatGPT, with help from human editors)

Jensen Huang: The most important AI event of last year was Llama 2

Moderator: I want to start with a question that has been on my mind: how many GPUs can $7 trillion buy?

Jensen Huang: Obviously, all GPUs.

Moderator: I'd love to ask Sam this question; it's a really big number (laughs). There's certainly no shortage of ambition. But how should governments plan for AI today? What do you suggest?

Jensen Huang: First of all, this is an amazing time, because we are at the beginning of a new industrial revolution. Earlier revolutions were driven by the steam engine, electricity, the PC, and the Internet, which brought the information revolution; now it is artificial intelligence.

What is unprecedented is that we are going through two transitions at the same time: the end of general-purpose computing and the beginning of accelerated computing.

Using CPU computing as the foundation for all work is no longer viable. CPU-centric general-purpose computing has carried us for 60 years, ever since the IBM System/360 was released in 1964. We have ridden this wave of technology for 60 years, and now we are at the beginning of accelerated computing.

If you want computing that is sustainable, energy-efficient, high-performance, and cost-effective, you can no longer rely on general-purpose computing. You need domain-specific acceleration, and that is the foundation driving the growth of accelerated computing. It enables a new type of application: artificial intelligence.

The question is, which is the cause and which is the effect? First of all, accelerated computing makes new types of applications possible, and a great many applications are being accelerated today.

Now that we are at the beginning of this new era, what will happen next?

Today, the total value of data centers worldwide is about $1 trillion. Over the next 4-5 years, this will grow to $2 trillion, and these data centers will be where the world's software runs. All of it will be accelerated, and this accelerated computing architecture is ideal for the next generation of software, generative AI. That is the core change happening right now.
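Huang gives no annual growth rate, but if one assumes (our assumption, not his) that the doubling from $1 trillion to $2 trillion compounds smoothly, the implied yearly growth follows from start × (1 + r)^n = end. A minimal Python sketch; `implied_cagr` is a hypothetical helper:

```python
# Implied compound annual growth rate (CAGR) for growing from `start` to `end`:
# start * (1 + r) ** years == end  =>  r = (end / start) ** (1 / years) - 1
START_USD_TRILLION = 1.0  # approximate current value of data centers, per Huang
END_USD_TRILLION = 2.0    # projected value in 4-5 years, per Huang


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


for years in (4, 5):
    rate = implied_cagr(START_USD_TRILLION, END_USD_TRILLION, years)
    print(f"{years} years -> {rate:.1%} per year")
```

Under that assumption, doubling in 4 years implies about 18.9% growth per year, and doubling in 5 years about 14.9%.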

As general-purpose computing is replaced, keep in mind that the performance of the architecture keeps improving. So you can't just assume you'll be buying more computers; you also have to assume that computers will get faster, which means the computing resources actually needed are smaller than they might seem. Otherwise, if you assume computers won't get any faster, you might conclude that we need 14 planets, 3 galaxies, and 4 suns to fuel it all.

One of our greatest contributions over the past 10 years has been advancing computing and artificial intelligence by a factor of 1 million. So whatever demand you think will drive the world, it's important to consider that computing will become 1 million times faster and more efficient.
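To put the "factor of 1 million" in perspective, here is a rough Python sketch that assumes, purely for illustration, that the gain compounded evenly across the 10 years; real progress came in uneven steps:

```python
import math

TOTAL_SPEEDUP = 1_000_000  # the "factor of 1 million" Huang cites
YEARS = 10                 # over the past 10 years

# If every year contributed the same factor f, then f ** YEARS == TOTAL_SPEEDUP,
# so f is the YEARS-th root of the total speedup.
per_year = TOTAL_SPEEDUP ** (1 / YEARS)

# Time for performance to double at that steady rate, converted to months.
doubling_time_months = 12 * math.log(2) / math.log(per_year)

print(f"~{per_year:.2f}x per year, doubling about every {doubling_time_months:.1f} months")
```

It prints roughly 3.98x per year, which corresponds to performance doubling about every 6 months.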

Moderator: Regarding the fear of AI taking over the world, I think we need to clarify what is real and what is hype. What do you think is the biggest problem right now?

Jensen Huang: Very good question. First of all, we must develop new technologies safely; that is absolutely right. Aircraft, automobiles, manufacturing systems, medicine: all of these industries are heavily regulated today. Those regulations must be extended and strengthened to account for AI reaching us through products and services.

Meanwhile, some interest groups are trying to scare people and mystify AI in order to discourage others from engaging with the technology. I think this is a mistake; we want to democratize AI technology.

If you ask me what the most important AI event of the past year was, I'd say Llama 2, an open-source model. Or Falcon, another excellent model. And Mistral, and so on. All of these rest on transparency and explainability. Thanks to these open-source models, a wide range of innovations in safety, alignment, guardrails, reinforcement learning, and much more has become possible.

Getting everyone involved in advancing AI is probably the most important thing to do. Trying to convince people that AI is too complex, too dangerous, and too mysterious, and that only two or three people in the world can do it, is, I think, a huge mistake.

Moderator: Do you think the next AI era will continue to be built on GPUs, and what breakthroughs do you think will be made in the future?

Jensen Huang: Actually, almost all of the world's largest companies are developing chips in-house. Google, AWS, Microsoft, and Meta are all making their own chips.

Nvidia GPUs are in the spotlight because they are the only platform open to everyone.

It is one unified architecture covering every domain. Our CUDA architecture can adapt to any emerging architectural pattern, whether CNNs, RNNs, LSTMs, or now Transformers. Today, many different architectures are being created, such as Vision Transformers and bird's-eye-view Transformers, and all of them can be developed on Nvidia GPUs.

Moderator: What characterizes AI is that it has evolved enormously in a very short time, so the infrastructure used five years ago can be very different from what is used today.

But Jensen's point is an important one: Nvidia always has a place in it.

Moderator: Let's change the topic and talk about education instead of AI for a while. At the forefront of technology, what should people focus on in terms of education, what should people learn, and how should they educate their children?

Jensen Huang: Wow, that's a good question, but my answer may sound like the exact opposite of what you'd expect.

You may remember that over the last 10 or 15 years, almost everyone asked this question in a formal setting would say that everyone should learn computer science and programming.

In reality, it's almost the exact opposite, because our job is to create computing technology so that nobody has to program (in the traditional sense), and so that everyone in the world becomes a programmer.

This is the miracle of artificial intelligence. For the first time, we have closed the (programming) technology gap and made AI accessible to far more people, which is why AI is being talked about almost everywhere.

For the first time, everyone in a company can become a technology expert; now is the perfect moment for the technology divide to close.

Problems that once required specialists to solve, in fields such as digital biology, youth education, manufacturing, or agriculture, are now accessible to everyone.

Because people now have computers that do what they are told, helping humans automate their work and raise productivity and efficiency, I think this is a great time. Of course, people need to learn to use these tools right away, and that is a pressing issue.

It is also important to realize that participating in AI is now easier than ever before, and that society has a responsibility to upskill everyone. I am sure this upskilling process will be enjoyable and full of surprises.

Moderator: So, if I were choosing a college major, what advice would you give me?

Jensen Huang: I would start with one of the most complex sciences to understand, which I think is biology, especially human biology.

It is not only broad but also complex and difficult to understand, and, crucially, it can have a huge impact.

We call this field the life sciences, and the medicine-related part of it is called drug discovery.

But in fields like computer science, no one says "car discovery," "computer discovery," or "software discovery"; we call it engineering.

Every year, our software, chips, and infrastructure get better than the year before, but progress in the life sciences has been sporadic.

If I could choose again, I would recognize that the engineering of the life sciences, life engineering, is coming, and that it will become an engineering discipline rather than a purely scientific one.

So I hope today's young people will enjoy working with proteins, enzymes, and materials, using engineering to make them more energy-efficient, lighter, more durable, and more sustainable.

In the future, all these inventions will be part of engineering, not scientific discoveries.

One More Thing

On Monday, Nvidia's market capitalization briefly surpassed Amazon's, making it the fourth most valuable company on the U.S. stock market. The top three are Microsoft, Apple, and Google's parent company Alphabet.

However, Amazon regained the fourth position at the close, closing with a market capitalization of $1.79 trillion, while Nvidia closed with a market capitalization of about $1.78 trillion.

Since the beginning of 2024, Nvidia's stock price has climbed by nearly 50% due to the strong global demand for chips. According to estimates, Nvidia's market capitalization has increased by about $600 billion since the beginning of this year, exceeding the increase in the last seven months of 2023.