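Contents

- Docker's 4 network modes
  - bridge mode
  - container mode
  - host mode
  - none mode
- Creating network namespaces
  - Creating namespaces with ip netns
  - Operating on a netns
  - Moving devices
    - veth pair
    - Creating a veth pair
    - Communicating between Network Namespaces
    - Renaming a veth device
- Configuring the four network modes
  - bridge mode configuration
  - none mode configuration
  - container mode configuration
  - host mode configuration
- Common container operations
  - Manually specifying the DNS a container uses
  - Manually injecting hostname-to-IP mappings into /etc/hosts
  - Publishing container ports
  - Customizing the network properties of the docker0 bridge
  - Connecting to Docker remotely
  - Creating a custom Docker bridge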

Docker's 4 network modes

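| Network mode | Option | Description |
|---|---|---|
| host | --network host | The container shares the host's Network Namespace |
| container | --network container:NAME_OR_ID | The container shares the Network Namespace of another container |
| none | --network none | The container has its own Network Namespace, but no networking is configured in it: no veth pair or bridge connection is allocated, no IP is assigned, and so on |
| bridge | --network bridge | The default mode |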

bridge mode

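When the Docker daemon starts, it creates a virtual bridge named docker0 on the host, and every Docker container started on this host is attached to it. A virtual bridge works like a physical switch, so all containers on the host are connected through it into a single layer-2 network.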

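Docker allocates an IP address from the docker0 subnet to the container and sets docker0's IP address as the container's default gateway. It creates a pair of virtual NICs (a veth pair) on the host, places one end inside the new container and names it eth0 (the container's NIC), and keeps the other end on the host under a name like vethxxx, attaching that device to the docker0 bridge. You can see this with the brctl show command.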
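The address assignment described above can be pictured with Python's standard ipaddress module. This is a simplified model, not Docker's actual IPAM code: it assumes the bridge takes the first usable address of the subnet and containers receive the following addresses in order, which matches the 172.17.0.1 gateway on docker0 and the 172.17.0.2 nginx container seen in this article, but it ignores address reuse. The function name allocate is hypothetical.

```python
import ipaddress


def allocate(subnet: str, count: int) -> tuple:
    """Hypothetical sequential allocator returning (gateway, [container IPs]).

    Assumes the bridge (e.g. docker0) takes the first usable host address
    and each new container takes the next one. Docker's real IPAM also
    reuses released addresses, which this sketch ignores.
    """
    net = ipaddress.ip_network(subnet)
    hosts = net.hosts()  # lazy iterator over usable host addresses
    gateway = str(next(hosts))  # becomes the containers' default gateway
    containers = [str(next(hosts)) for _ in range(count)]
    return gateway, containers


if __name__ == "__main__":
    gw, ips = allocate("172.17.0.0/16", 2)
    print(gw, ips)  # 172.17.0.1 ['172.17.0.2', '172.17.0.3']
```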

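bridge mode is Docker's default network mode: if you omit the --network option, the container uses bridge mode. When you use docker run -p, Docker actually adds DNAT rules to iptables to implement port forwarding. You can view them with iptables -t nat -vnL.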
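To make the DNAT mechanism concrete, the sketch below builds (but does not run) the argument list for one iptables rule of the kind that typically appears in the DOCKER chain of the nat table after a port is published with -p. The exact rules Docker installs differ between versions, so the rule shape is an assumption, and dnat_rule is a hypothetical helper.

```python
def dnat_rule(host_port: int, container_ip: str, container_port: int,
              proto: str = "tcp", bridge: str = "docker0") -> list:
    """Return iptables arguments for a DNAT rule resembling the one Docker
    adds for `-p host_port:container_port` (illustrative, not executed)."""
    return [
        "iptables", "-t", "nat", "-A", "DOCKER",
        "!", "-i", bridge,             # ignore packets arriving from the bridge itself
        "-p", proto, "-m", proto,
        "--dport", str(host_port),     # destination port on the host
        "-j", "DNAT",                  # rewrite the destination address
        "--to-destination", f"{container_ip}:{container_port}",
    ]


if __name__ == "__main__":
    print(" ".join(dnat_rule(32769, "172.17.0.2", 80)))
```

The printed line can be compared with the rules listed by iptables -t nat -S DOCKER on a host that has a published port; actually running such a command requires root, and Docker normally manages these rules itself.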

# IP forwarding must be enabled (1 means enabled)

[root@host ~]# cat /proc/sys/net/ipv4/ip_forward

1

# Create a container

[root@host ~]# docker run -it nginx /bin/bash

[root@host ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b3843c8ae4d9 nginx "/bin/bash" 36 minutes ago Up 36 minutes 80/tcp bold_spence

[root@host ~]# iptables -t nat -vnL

Chain PREROUTING (policy ACCEPT 72 packets, 13929 bytes)

pkts bytes target prot opt in out source destination

5 260 DOCKER all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT 72 packets, 13929 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 707 packets, 52946 bytes)

pkts bytes target prot opt in out source destination

0 0 DOCKER all -- * * 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT 707 packets, 52946 bytes)

pkts bytes target prot opt in out source destination

0 0 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

Chain DOCKER (2 references)

pkts bytes target prot opt in out source destination

0 0 RETURN all -- docker0 * 0.0.0.0/0 0.0.0.0/0

# Start a centos container and access the nginx container from it

[root@host ~]# docker run -it centos /bin/bash

[root@container /]# curl http://172.17.0.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

container mode

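In this mode, the new container shares a Network Namespace with an existing container rather than with the host. The new container does not create its own NIC or configure its own IP; instead it shares the IP address, port range, and so on with the specified container. Apart from networking, the two containers remain isolated in other respects, such as the filesystem and the process list. Processes in the two containers can communicate through the lo device.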
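One way to confirm that two containers really share a Network Namespace is to compare the /proc/PID/ns/net symlinks of their processes: processes in the same namespace show the same net:[inode] value. The sketch below assumes a Linux host; a container's PID can be obtained with docker inspect -f '{{.State.Pid}}' NAME, and reading another user's /proc entries generally requires root. The function names are hypothetical.

```python
import os


def netns_id(pid: int) -> str:
    """Return the Network Namespace identifier of a process, e.g. 'net:[4026531992]'."""
    return os.readlink(f"/proc/{pid}/ns/net")


def same_netns(pid_a: int, pid_b: int) -> bool:
    """True if both processes are in the same Network Namespace."""
    return netns_id(pid_a) == netns_id(pid_b)


if __name__ == "__main__":
    me = os.getpid()
    print(netns_id(me))
    print(same_netns(me, me))  # a process always shares its own namespace
```

Applied to the PIDs of the nginx (8491d65371b4) and centos (92ed3b3c2687) containers in the example that follows, same_netns should return True.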

# Start a centos container in container mode

[root@host ~]# docker run -it --network=container:8491d65371b4 centos /bin/bash

# The centos container has no network settings of its own

[root@host ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

92ed3b3c2687 centos "/bin/bash" 3 minutes ago Up 3 minutes jovial_snyder

8491d65371b4 nginx "nginx -g 'daemon of…" 9 minutes ago Up 9 minutes 80/tcp optimistic_montalcini

[root@host ~]# docker inspect 92ed3b3c2687

...

"NetworkSettings": {

"Bridge": "",

"SandboxID": "",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Ports": {},

"SandboxKey": "",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "",

"Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "",

"IPPrefixLen": 0,

"IPv6Gateway": "",

"MacAddress": "",

"Networks": {}

}

...

# Create a directory in the centos container

[root@container /]# ls

bin dev etc home lib lib64 lost+found media mnt opt proc root run sbin srv sys tmp usr var

[root@container /]# mkdir 123

[root@container /]# ls

123 dev home lib64 media opt root sbin sys usr

bin etc lib lost+found mnt proc run srv tmp var

# The directory 123 does not appear in the nginx container

root@container:/# ls

bin boot dev etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

host mode

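If a container is started in host mode, it does not get a Network Namespace of its own but shares the host's. The container does not create its own virtual NIC or configure its own IP; it uses the host's IP address and ports directly. Other aspects of the container, such as the filesystem and the process list, remain isolated from the host.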

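A host-mode container can communicate with the outside world using the host's IP address, and services inside the container can listen on the host's ports directly, without NAT. The main advantage of host mode is better network performance; the drawbacks are that ports already in use on the Docker host cannot be used by the container, and network isolation is poor.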
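The port-conflict drawback is ordinary socket behaviour: in host mode the container's processes bind in the host's namespace, so a second listener on an occupied port fails with EADDRINUSE. The sketch below (hypothetical helper port_in_use, Linux or another Unix assumed) reproduces that with two sockets in one process, the first standing in for a service already running on the host.

```python
import errno
import socket


def port_in_use(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if a TCP bind on (host, port) fails because the port is taken."""
    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        s.bind((host, port))
    except OSError as exc:
        if exc.errno == errno.EADDRINUSE:
            return True
        raise  # other errors (e.g. permission denied) are not "in use"
    finally:
        s.close()
    return False


if __name__ == "__main__":
    # Stand-in for a service that is already listening on the host.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0: the kernel picks a free port
    listener.listen()
    taken = listener.getsockname()[1]
    print(taken, port_in_use(taken, "127.0.0.1"))  # the second bind is refused
    listener.close()
```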

# Port 80 is not in use on the host

[root@host ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 5 *:873 *:*

LISTEN 0 128 :::22 :::*

LISTEN 0 100 ::1:25 :::*

LISTEN 0 5 :::873 :::*

# Start nginx in host mode

[root@host ~]# docker run -d --network=host nginx

b52a048ed2e01e0ba25896b8b7e724789d91e6f634cc4dfb7421a8d9f8ef1e57

# The host is now listening on port 80

[root@host ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:80 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 5 *:873 *:*

LISTEN 0 128 :::22 :::*

LISTEN 0 100 ::1:25 :::*

LISTEN 0 5 :::873 :::*

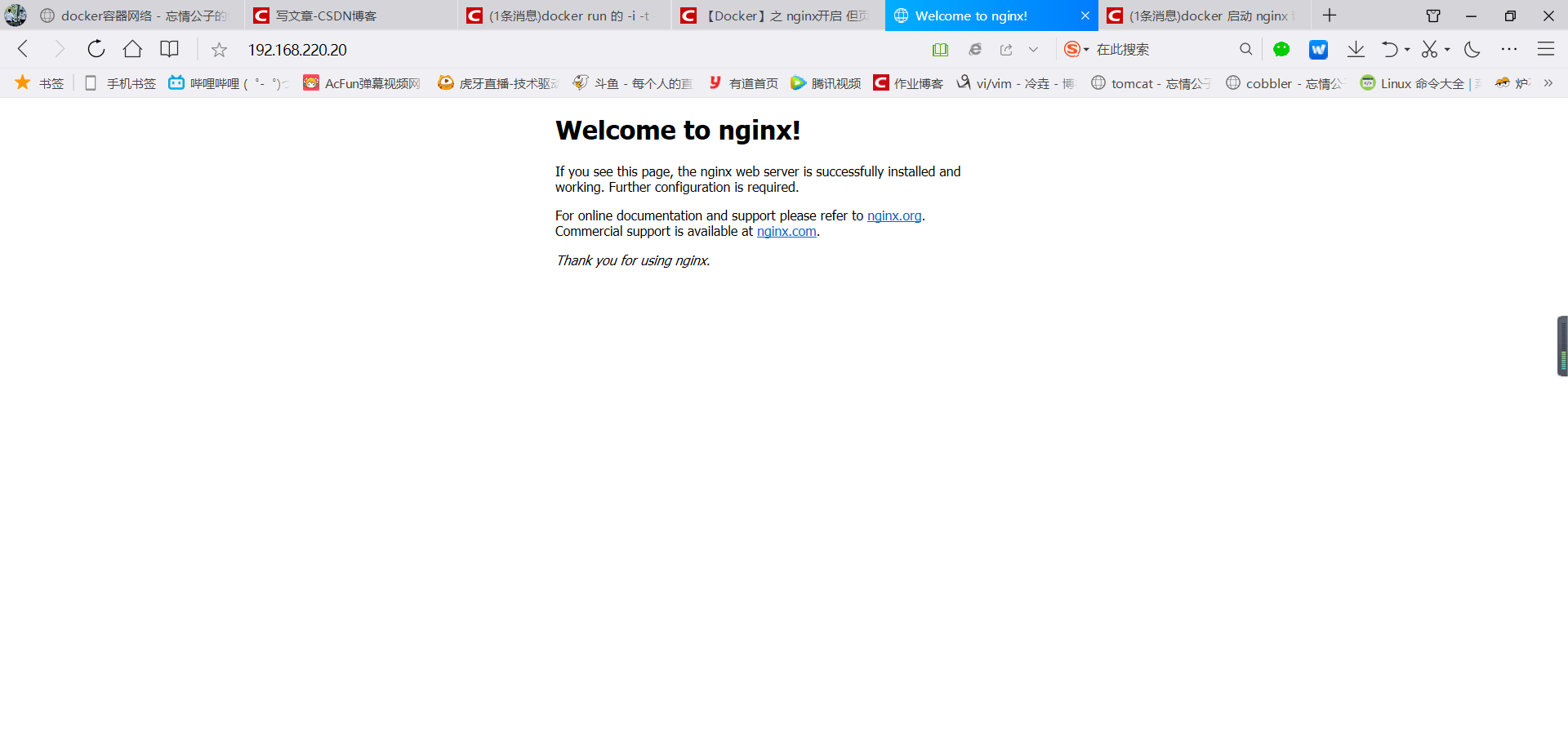

The nginx site is also reachable through the host's IP address.

none mode

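In none mode, a Docker container has its own Network Namespace, but Docker performs no network configuration for it. In other words, the container has no NIC, IP address, routes, or similar settings; we have to add a NIC and configure an IP for it ourselves.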

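In this mode the container has only the lo loopback interface and no other NICs. none mode is selected when the container is created, with --network none. A container on such a network cannot reach the outside world, and this closed network helps keep the container secure.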

Use cases:

- Starting a container just to process data, such as converting data formats
- Background computation and processing tasks
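To check from inside a process which interfaces its Network Namespace contains, Python's socket.if_nameindex() (available on Unix) is enough. Assuming Python is available in the image, running this sketch in a none-mode container would be expected to list only lo, while on the host it lists every interface. The helper name interface_names is hypothetical.

```python
import socket


def interface_names() -> list:
    """Names of the network interfaces visible in the current Network Namespace."""
    return [name for _index, name in socket.if_nameindex()]


if __name__ == "__main__":
    names = interface_names()
    print(names)
    print("loopback only" if names == ["lo"] else "has other interfaces")
```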

# Start a centos container in none mode

[root@host ~]# docker run --network=none -it centos /bin/bash

[root@container /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

# No IP address

# Inspect the container

[root@host ~]# docker inspect f4c096312345

...

"Networks": {

"none": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "f312ddeef6847b34befd9207049ff97c6a16fa9cb299ae2d2d97814a6226d442",

"EndpointID": "efde2a34fee8d5bc99340685269bdb21abe081dc5bc833c3ec97b38288d5aead",

"Gateway": "",

"IPAddress": "",

"IPPrefixLen": 0,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "",

"DriverOpts": null

}

# No IPv4 information

Creating network namespaces

Creating namespaces with ip netns

[root@host ~]# ip netns list

[root@host ~]# ip netns add ns0

[root@host ~]# ip netns list

ns0

A newly created Network Namespace appears under the /var/run/netns/ directory. If a namespace with the same name already exists, the command fails with the error Cannot create namespace file "/var/run/netns/ns0": File exists.

[root@host ~]# ls /var/run/netns/

ns0

[root@host ~]# ip netns add ns0

Cannot create namespace file "/var/run/netns/ns0": File exists
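Because ip netns add records each namespace as a file under /var/run/netns/, listing that directory gives the same names as ip netns list. The sketch below assumes that location (on most current distributions /var/run is a link to /run) and returns an empty list when the directory has not been created yet. Docker keeps its own container namespaces elsewhere (under /var/run/docker/netns), which is why they do not show up here. The function name list_netns is hypothetical.

```python
from pathlib import Path


def list_netns(run_dir: str = "/var/run/netns") -> list:
    """Return the names of namespaces created with `ip netns add`, sorted."""
    d = Path(run_dir)
    if not d.is_dir():  # normally created by the first `ip netns add`
        return []
    return sorted(p.name for p in d.iterdir())


if __name__ == "__main__":
    print(list_netns())
```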

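Each Network Namespace has its own independent network resources, such as NICs, a routing table, an ARP table, and iptables rules.

Operating on a netns

The ip command provides the ip netns exec subcommand, which runs a command inside the specified Network Namespace.

View the NICs of the newly created Network Namespace:

[root@host ~]# ip netns exec ns0 ip addr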

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

As shown, a new Network Namespace contains a lo loopback interface by default, and it is down at this point. Pinging the loopback address now fails with Network is unreachable:

[root@host ~]# ip netns exec ns0 ping 127.0.0.1

connect: Network is unreachable

Bring the lo loopback interface up with the following command:

[root@host ~]# ip netns exec ns0 ip link set lo up

[root@host ~]# ip netns exec ns0 ping 127.0.0.1

PING 127.0.0.1 (127.0.0.1) 56(84) bytes of data.

64 bytes from 127.0.0.1: icmp_seq=1 ttl=64 time=0.047 ms

64 bytes from 127.0.0.1: icmp_seq=2 ttl=64 time=0.073 ms

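Moving devices

Devices such as veth can be moved between Network Namespaces. Because a device can belong to only one Network Namespace at a time, once it has been moved it is no longer visible in its original namespace.

veth devices can be moved, whereas many other devices (such as lo, vxlan, ppp, and bridge devices) cannot.

veth pair

A veth pair (Virtual Ethernet Pair) is a pair of connected ports: every packet that enters one end comes out of the other, and vice versa.

veth pairs were introduced so that different Network Namespaces can communicate directly; a veth pair connects two Network Namespaces to each other.

Creating a veth pair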

[root@host ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:b9:3c:6d brd ff:ff:ff:ff:ff:ff

inet 192.168.220.20/24 brd 192.168.220.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::59a:c315:2825:665f/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:fe:7e:5c:ed brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:feff:fe7e:5ced/64 scope link

valid_lft forever preferred_lft forever

[root@host ~]# ip link add type veth

[root@host ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:b9:3c:6d brd ff:ff:ff:ff:ff:ff

inet 192.168.220.20/24 brd 192.168.220.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::59a:c315:2825:665f/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:fe:7e:5c:ed brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:feff:fe7e:5ced/64 scope link

valid_lft forever preferred_lft forever

10: veth0@veth1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN qlen 1000

link/ether a2:d8:72:1d:30:c2 brd ff:ff:ff:ff:ff:ff

11: veth1@veth0: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 4e:c3:09:83:e9:ee brd ff:ff:ff:ff:ff:ff

A new veth pair now exists on the system, connecting the two virtual NICs veth0 and veth1. The pair is still down (not yet enabled).

Communicating between Network Namespaces

Next, we use the veth pair to let two different Network Namespaces communicate. We already created a Network Namespace named ns0; now create another one named ns1:

[root@host ~]# ip netns add ns1

[root@host ~]# ip netns list

ns1

ns0

Then move veth0 into ns0 and veth1 into ns1:

[root@host ~]# ip link set veth0 netns ns0

[root@host ~]# ip link set veth1 netns ns1

Then assign an IP address to each end of the veth pair and bring them up:

[root@host ~]# ip netns exec ns0 ip link set veth0 up

[root@host ~]# ip netns exec ns0 ip addr add 10.0.0.1/24 dev veth0

[root@host ~]# ip netns exec ns0 ip addr

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

10: veth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP qlen 1000

link/ether a2:d8:72:1d:30:c2 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet 10.0.0.1/24 scope global veth0

valid_lft forever preferred_lft forever

inet6 fe80::a0d8:72ff:fe1d:30c2/64 scope link

valid_lft forever preferred_lft forever

[root@host ~]# ip netns exec ns1 ip link set lo up

[root@host ~]# ip netns exec ns1 ip link set veth1 up

[root@host ~]# ip netns exec ns1 ip addr add 10.0.0.2/24 dev veth1

[root@host ~]# ip netns exec ns1 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

11: veth1@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP qlen 1000

link/ether 4e:c3:09:83:e9:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.0.0.2/24 scope global veth1

valid_lft forever preferred_lft forever

inet6 fe80::4cc3:9ff:fe83:e9ee/64 scope link

valid_lft forever preferred_lft forever

Check the state of the veth pair:

[root@host ~]# ip netns exec ns0 ip a

10: veth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 32:c4:23:dd:a7:1c brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet 10.0.0.1/24 scope global veth0

valid_lft forever preferred_lft forever

inet6 fe80::30c4:23ff:fedd:a71c/64 scope link

valid_lft forever preferred_lft forever

[root@host ~]# ip netns exec ns1 ip a

11: veth1@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether a2:52:52:cd:54:62 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.0.0.2/24 scope global veth1

valid_lft forever preferred_lft forever

inet6 fe80::a052:52ff:fecd:5462/64 scope link

valid_lft forever preferred_lft forever

This shows that the veth pair is up and each veth device has been assigned its IP address. Now try to reach the IP address in ns0 from ns1:

[root@host ~]# ip netns exec ns1 ping 10.0.0.1

PING 10.0.0.1 (10.0.0.1) 56(84) bytes of data.

64 bytes from 10.0.0.1: icmp_seq=1 ttl=64 time=0.072 ms

64 bytes from 10.0.0.1: icmp_seq=2 ttl=64 time=0.082 ms

The veth pair successfully enables network communication between two different Network Namespaces.
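The whole procedure above can be summarized as a fixed sequence of ip commands. The sketch below only generates that sequence (a dry run) so it can be reviewed or pasted into a root shell; it uses the explicit form ip link add veth0 type veth peer name veth1 instead of letting the kernel choose the names. The function name and its parameters are hypothetical.

```python
def veth_link_commands(ns_a: str, ns_b: str, ip_a: str, ip_b: str,
                       dev_a: str = "veth0", dev_b: str = "veth1") -> list:
    """Return the ip(8) commands that connect two namespaces with a veth pair."""
    cmds = [f"ip netns add {ns}" for ns in (ns_a, ns_b)]
    cmds.append(f"ip link add {dev_a} type veth peer name {dev_b}")
    for ns, dev, addr in ((ns_a, dev_a, ip_a), (ns_b, dev_b, ip_b)):
        cmds += [
            f"ip link set {dev} netns {ns}",  # move this end into the namespace
            f"ip netns exec {ns} ip addr add {addr} dev {dev}",
            f"ip netns exec {ns} ip link set {dev} up",
        ]
    return cmds


if __name__ == "__main__":
    for cmd in veth_link_commands("ns0", "ns1", "10.0.0.1/24", "10.0.0.2/24"):
        print(cmd)
```

After running the generated commands as root, ip netns exec ns1 ping 10.0.0.1 should succeed as in the transcript.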

Renaming a veth device

[root@host ~]# ip netns exec ns0 ip link set veth0 down

[root@host ~]# ip netns exec ns0 ip link set dev veth0 name eth0

[root@host ~]# ip netns exec ns0 ifconfig -a

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.0.1 netmask 255.255.255.0 broadcast 0.0.0.0

inet6 fe80::30c4:23ff:fedd:a71c prefixlen 64 scopeid 0x20<link>

ether 32:c4:23:dd:a7:1c txqueuelen 1000 (Ethernet)

RX packets 12 bytes 928 (928.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 20 bytes 1576 (1.5 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@host ~]# ip netns exec ns0 ip link set eth0 up
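Renaming is done with ip link set dev OLD name NEW while the device is down, as in the transcript. The kernel also rejects names that are too long or contain certain characters; the sketch below approximates the checks in the Linux kernel's dev_valid_name() (at most 15 bytes, not "." or "..", no "/", ":" or whitespace). It is an approximation for illustration, and valid_ifname is a hypothetical helper.

```python
IFNAMSIZ = 16  # kernel buffer size for interface names, including the trailing NUL


def valid_ifname(name: str) -> bool:
    """Approximate the Linux kernel's dev_valid_name() check."""
    if not name or len(name.encode()) >= IFNAMSIZ:
        return False  # empty, or longer than 15 bytes
    if name in (".", ".."):
        return False
    return not any(ch in "/:" or ch.isspace() for ch in name)


if __name__ == "__main__":
    for candidate in ("eth0", "veth0", "a-very-long-interface", "eth 0"):
        print(candidate, valid_ifname(candidate))
```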

Configuring the four network modes

bridge mode configuration

[root@host ~]# docker run -it --name t1 --rm busybox

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:0A:00:00:02

inet addr:10.0.0.2 Bcast:10.0.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:6 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:508 (508.0 B) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # exit

[root@host ~]# docker container ls -a

# Adding --network bridge when creating a container has the same effect as omitting --network

[root@host ~]# docker run -it --name t1 --network bridge --rm busybox

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:0A:00:00:02

inet addr:10.0.0.2 Bcast:10.0.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:6 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:508 (508.0 B) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # exit

none mode configuration

[root@host ~]# docker run -it --name t1 --network none --rm busybox

/ # ifconfig -a

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # exit

container mode configuration

Start the first container:

[root@host ~]# docker run -it --name b1 --rm busybox

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:0A:00:00:02

inet addr:10.0.0.2 Bcast:10.0.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:6 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:508 (508.0 B) TX bytes:0 (0.0 B)

Start the second container:

[root@host ~]# docker run -it --name b2 --rm busybox

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:0A:00:00:03

inet addr:10.0.0.3 Bcast:10.0.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:6 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:508 (508.0 B) TX bytes:0 (0.0 B)

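The container named b2 has the IP address 10.0.0.3, which differs from the first container's, so the two do not share a network. If we change how the second container is started, b2 gets the same IP as b1: the two containers share an IP but not a filesystem.

[root@host ~]# docker run -it --name b2 --rm --network container:b1 busybox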

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:0A:00:00:02

inet addr:10.0.0.2 Bcast:10.0.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:648 (648.0 B) TX bytes:0 (0.0 B)

Now create a directory in the b1 container:

/ # mkdir /tmp/data

/ # ls /tmp

data

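Checking /tmp in the b2 container shows that this directory does not exist there, because the filesystems are isolated; only the network is shared.

Deploy a site in the b2 container: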

/ # echo 'hello world' > /tmp/index.html

/ # ls /tmp

index.html

/ # httpd -h /tmp

/ # netstat -antl

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 :::80 :::* LISTEN

Access this site from the b1 container through the local address:

/ # wget -O - -q 127.0.0.1:80

hello world

So in container mode, the containers relate to each other like two different processes on the same host.
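The b1/b2 experiment (httpd in one container, wget 127.0.0.1 in the other) behaves like two programs talking over the loopback interface of a single host. The sketch below reproduces that with a server thread and a client in one Python process; it is only an analogy for the shared Network Namespace, not a Docker API, and the function names are hypothetical.

```python
import socket
import threading


def serve_once(server: socket.socket, body: bytes) -> None:
    """Accept one connection, send `body`, then close (closing marks the end of the reply)."""
    conn, _addr = server.accept()
    with conn:
        conn.sendall(body)


def fetch_over_loopback(body: bytes) -> bytes:
    """Start a one-shot server on 127.0.0.1 and read its reply as a client would."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # any free port on the loopback interface
        server.listen()
        port = server.getsockname()[1]
        worker = threading.Thread(target=serve_once, args=(server, body))
        worker.start()
        with socket.create_connection(("127.0.0.1", port)) as client:
            # read until the server closes the connection
            reply = b"".join(iter(lambda: client.recv(1024), b""))
        worker.join()
    return reply


if __name__ == "__main__":
    print(fetch_over_loopback(b"hello world\n").decode(), end="")
```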

host mode configuration

Specify host as the network mode directly when starting the container:

[root@host ~]# docker run -it --name b2 --rm --network host busybox

/ # ifconfig

docker0 Link encap:Ethernet HWaddr 02:42:06:25:98:91

inet addr:10.0.0.1 Bcast:10.0.255.255 Mask:255.255.0.0

inet6 addr: fe80::42:6ff:fe25:9891/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:55 errors:0 dropped:0 overruns:0 frame:0

TX packets:82 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:8339 (8.1 KiB) TX bytes:7577 (7.3 KiB)

ens33 Link encap:Ethernet HWaddr 00:0C:29:01:78:90

inet addr:192.168.10.144 Bcast:192.168.10.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe01:7890/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:55301 errors:0 dropped:0 overruns:0 frame:0

TX packets:26269 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:63769938 (60.8 MiB) TX bytes:2672449 (2.5 MiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:42 errors:0 dropped:0 overruns:0 frame:0

TX packets:42 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:4249 (4.1 KiB) TX bytes:4249 (4.1 KiB)

vethffa4d46 Link encap:Ethernet HWaddr 06:4F:68:16:6E:B0

inet6 addr: fe80::44f:68ff:fe16:6eb0/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:648 (648.0 B)

If we now start an HTTP site in this container, we can open it in a browser directly through the host's IP.

Common container operations

View the container's hostname:

[root@host ~]# docker run -it --name t1 --network bridge --rm busybox

/ # hostname

7769d784c6da

Inject a hostname when the container starts:

[root@host ~]# docker run -it --name t1 --network bridge --hostname wangqing --rm busybox

/ # hostname

wangqing

/ # cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

10.0.0.2 wangqing # injecting a hostname automatically adds a hostname-to-IP mapping

/ # cat /etc/resolv.conf

# Generated by NetworkManager

search localdomain

nameserver 192.168.10.2 # DNS is also configured automatically to use the host's DNS

/ # ping www.baidu.com

PING www.baidu.com (182.61.200.7): 56 data bytes

64 bytes from 182.61.200.7: seq=0 ttl=127 time=26.073 ms

64 bytes from 182.61.200.7: seq=1 ttl=127 time=26.378 ms

Manually specifying the DNS a container uses

[root@host ~]# docker run -it --name t1 --network bridge --hostname wangqing --dns 114.114.114.114 --rm busybox

/ # cat /etc/resolv.conf

search localdomain

nameserver 114.114.114.114

/ # nslookup -type=a www.baidu.com

Server: 114.114.114.114

Address: 114.114.114.114:53

Non-authoritative answer:

www.baidu.com canonical name = www.a.shifen.com

Name: www.a.shifen.com

Address: 182.61.200.6

Name: www.a.shifen.com

Address: 182.61.200.7
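The effect of --dns is visible in the container's /etc/resolv.conf. A small parser like the following (a hypothetical helper, assuming the usual one-directive-per-line resolv.conf format) extracts the nameserver entries, for example to check that 114.114.114.114 was applied.

```python
def nameservers(resolv_conf: str) -> list:
    """Return the addresses from `nameserver` lines, in file order."""
    servers = []
    for line in resolv_conf.splitlines():
        fields = line.split()
        # comment lines start with '#' or ';', so their first field never matches
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


if __name__ == "__main__":
    sample = "search localdomain\nnameserver 114.114.114.114\n"
    print(nameservers(sample))  # ['114.114.114.114']
```

Inside a container, nameservers(open("/etc/resolv.conf").read()) would list the servers actually in use.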

Manually injecting hostname-to-IP mappings into /etc/hosts

[root@host ~]# docker run -it --name t1 --network bridge --hostname wangqing --add-host www.a.com:1.1.1.1 --rm busybox

/ # cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

1.1.1.1 www.a.com

10.0.0.2 wangqing
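Both --hostname and --add-host end up as plain lines in /etc/hosts. The sketch below (hypothetical helper parse_hosts) reads such content into a name-to-address dictionary; when a name appears on several lines it keeps the first, which is the usual behaviour of file-based host lookups.

```python
def parse_hosts(text: str) -> dict:
    """Map each hostname in /etc/hosts-style text to its IP address."""
    mapping = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)  # first occurrence wins
    return mapping


if __name__ == "__main__":
    sample = "127.0.0.1 localhost\n1.1.1.1 www.a.com\n10.0.0.2 wangqing\n"
    print(parse_hosts(sample)["www.a.com"])  # 1.1.1.1
```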

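Publishing container ports

docker run has a -p option that maps an application port in the container to the host, so that external hosts can reach the application inside the container by accessing a port on the host.

The -p option can be used multiple times, and each port it publishes should be a port the container actually listens on.

Formats of the -p option:

- -p CONTAINER_PORT maps the given container port to a dynamic port on all host addresses
- -p HOST_PORT:CONTAINER_PORT maps the container port to the given host port
- -p IP::CONTAINER_PORT maps the given container port to a dynamic port on the given host IP
- -p IP:HOST_PORT:CONTAINER_PORT maps the given container port to the given port on the given host IP

A dynamic port is a randomly chosen port; use the docker port command to see the actual mapping.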
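The -p forms (CONTAINER_PORT, HOST_PORT:CONTAINER_PORT, IP::CONTAINER_PORT, IP:HOST_PORT:CONTAINER_PORT) differ only in how many colon-separated fields they contain. The parser below is a sketch of that rule for IPv4 addresses with an optional /tcp or /udp suffix; it is not Docker's own parser and deliberately ignores port ranges and IPv6 addresses. PortMapping and parse_publish are hypothetical names.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: str              # "" means all host addresses (0.0.0.0)
    host_port: Optional[int]  # None means a dynamic (random) host port
    container_port: int
    proto: str


def parse_publish(spec: str) -> PortMapping:
    """Split a -p value such as '192.168.10.144::80' into its parts."""
    addr, _, proto = spec.partition("/")
    parts = addr.split(":")
    if len(parts) == 1:    # CONTAINER_PORT
        ip, host, cport = "", "", parts[0]
    elif len(parts) == 2:  # HOST_PORT:CONTAINER_PORT
        ip = ""
        host, cport = parts
    elif len(parts) == 3:  # IP::CONTAINER_PORT or IP:HOST_PORT:CONTAINER_PORT
        ip, host, cport = parts
    else:
        raise ValueError(f"unsupported -p value: {spec!r}")
    return PortMapping(ip, int(host) if host else None, int(cport), proto or "tcp")


if __name__ == "__main__":
    for spec in ("80", "80:80", "192.168.10.144::80", "192.168.10.144:8080:80/udp"):
        print(spec, "->", parse_publish(spec))
```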

[root@host ~]# docker run --name web --rm -p 80 nginx

This command keeps running in the foreground, so open a new terminal and check which host port the container's port 80 has been mapped to:

[root@host ~]# docker port web

80/tcp -> 0.0.0.0:32769

So the container's port 80 is published on host port 32769. Access this port on the host to check whether the site inside the container is reachable:

[root@host ~]# curl http://127.0.0.1:32769

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

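The iptables firewall rules are generated automatically when the container is created and removed automatically when the container is deleted.

Map a container port to a random port on a specified IP:

[root@host ~]# docker run --name web --rm -p 192.168.10.144::80 nginx

Check the port mapping from another terminal:

[root@host ~]# docker port web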

80/tcp -> 192.168.10.144:32768

Map a container port to a specified port on the host:

[root@host ~]# docker run --name web --rm -p 80:80 nginx

Check the port mapping from another terminal:

[root@host ~]# docker port web

80/tcp -> 0.0.0.0:80

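Customizing the network properties of the docker0 bridge

To customize the network properties of the docker0 bridge, edit the /etc/docker/daemon.json configuration file: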

{

"bip": "192.168.1.5/24",

"fixed-cidr": "192.168.1.5/25",

"fixed-cidr-v6": "2001:db8::/64",

"mtu": 1500,

"default-gateway": "10.20.1.1",

"default-gateway-v6": "2001:db8:abcd::89",

"dns": ["10.20.1.2","10.20.1.3"]

}

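The core option is bip (bridge IP), which sets the IP address of the docker0 bridge itself; the bridge's other network parameters, such as its subnet and netmask, are derived from this address.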
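What "derived from bip" means can be checked with Python's ipaddress module: the address part becomes docker0's own IP, and the prefix gives the bridge subnet, netmask, and broadcast address. The sketch also checks that fixed-cidr lies inside that subnet, since the container allocation pool is expected to be part of the bridge subnet. bridge_settings is a hypothetical helper, and the values are the ones from the daemon.json example above.

```python
import ipaddress


def bridge_settings(bip: str, fixed_cidr: str) -> dict:
    """Derive docker0-style parameters from a bip value and check fixed-cidr."""
    iface = ipaddress.ip_interface(bip)  # address + prefix, e.g. 192.168.1.5/24
    pool = ipaddress.ip_network(fixed_cidr, strict=False)  # host bits allowed, as in the example
    if not pool.subnet_of(iface.network):
        raise ValueError(f"fixed-cidr {pool} is outside the bridge subnet {iface.network}")
    return {
        "bridge_ip": str(iface.ip),
        "subnet": str(iface.network),
        "netmask": str(iface.netmask),
        "broadcast": str(iface.network.broadcast_address),
        "container_pool": str(pool),
    }


if __name__ == "__main__":
    for key, value in bridge_settings("192.168.1.5/24", "192.168.1.5/25").items():
        print(f"{key}: {value}")
```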

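Connecting to Docker remotely

dockerd works in a client/server (C/S) model and by default listens only on a Unix socket (/var/run/docker.sock). To use a TCP socket as well, add the following to the /etc/docker/daemon.json configuration file and then restart the docker service: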

"hosts": ["tcp://0.0.0.0:2375", "unix:///var/run/docker.sock"]

On the client, pass the -H|--host option to the docker command to specify which host's Docker containers to control:

docker -H 192.168.10.145:2375 ps
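The "hosts" line shown above is a key that has to sit inside the single top-level JSON object of daemon.json, next to any existing settings. A minimal sketch of merging it into an existing configuration with the json module follows; add_tcp_listener is a hypothetical helper. One caveat: on systemd-based installs the docker unit usually already passes -H fd:// to dockerd, and Docker's documentation notes that configuring hosts in both places prevents the daemon from starting, so the unit's -H flag would also need to be removed.

```python
import json


def add_tcp_listener(config: dict,
                     tcp: str = "tcp://0.0.0.0:2375",
                     unix: str = "unix:///var/run/docker.sock") -> dict:
    """Return a copy of a daemon.json dict whose "hosts" lists both sockets."""
    merged = dict(config)  # keep existing keys such as "bip" or "dns"
    hosts = list(merged.get("hosts", []))
    for endpoint in (tcp, unix):
        if endpoint not in hosts:  # avoid duplicates on repeated runs
            hosts.append(endpoint)
    merged["hosts"] = hosts
    return merged


if __name__ == "__main__":
    existing = {"dns": ["10.20.1.2", "10.20.1.3"]}
    print(json.dumps(add_tcp_listener(existing), indent=2))
```

Writing the result back to /etc/docker/daemon.json (for example with json.dump) requires root, followed by a restart of the docker service.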

Creating a custom Docker bridge

Create an additional custom bridge, separate from docker0:

[root@host ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

413997d70707 bridge bridge local

0a04824fc9b6 host host local

4dcb8fbdb599 none null local

[root@host ~]# docker network create -d bridge --subnet "192.168.2.0/24" --gateway "192.168.2.1" br0

b340ce91fb7c569935ca495f1dc30b8c37204b2a8296c56a29253a067f5dedc9

[root@host ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

b340ce91fb7c br0 bridge local

413997d70707 bridge bridge local

0a04824fc9b6 host host local

4dcb8fbdb599 none null local
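Before running docker network create with --subnet and --gateway, the pair can be sanity-checked with the ipaddress module. The sketch below (hypothetical check_bridge_network) rejects a gateway that is not a usable host of the subnet and, assuming the sequential allocation observed in this article, returns the first address a container would receive, 192.168.2.2 for br0.

```python
import ipaddress


def check_bridge_network(subnet: str, gateway: str) -> str:
    """Validate a --subnet/--gateway pair; return the first non-gateway host address."""
    net = ipaddress.ip_network(subnet)  # strict parsing: host bits must be zero
    gw = ipaddress.ip_address(gateway)
    if gw not in net or gw in (net.network_address, net.broadcast_address):
        raise ValueError(f"gateway {gw} is not a usable host address in {net}")
    for host in net.hosts():
        if host != gw:
            return str(host)  # assumed to be the first container's address
    raise ValueError(f"{net} has no address left for containers")


if __name__ == "__main__":
    print(check_bridge_network("192.168.2.0/24", "192.168.2.1"))  # 192.168.2.2
```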

Create a container using the new custom bridge:

[root@host ~]# docker run -it --name b1 --network br0 busybox

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:C0:A8:02:02

inet addr:192.168.2.2 Bcast:192.168.2.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:11 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:926 (926.0 B) TX bytes:0 (0.0 B)

Create another container using the default bridge:

[root@host ~]# docker run --name b2 -it busybox

/ # ls

bin dev etc home proc root sys tmp usr var

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:0A:00:00:02

inet addr:10.0.0.2 Bcast:10.0.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:6 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:508 (508.0 B) TX bytes:0 (0.0 B)