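Although I don't plan to develop MapReduce programs in Java in any depth, after the past few days of study I feel that without writing a few MapReduce programs myself, I may never really understand some of Hadoop's ideas. When using Pig or Hive, most of the time you don't even notice that HDFS is there.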

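I found a piece of code online that reads and writes HDFS, taken from the book *Hadoop in Action* (《HADOOP實戰》). Since I have no prior Java programming background, today's goal was mainly to set up a Java development environment in which the copied code compiles and runs.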

Most of the material I found online talks about hadoop-eclipse-plugin, but unfortunately I could not find it anywhere for Hadoop 2.2.0. I did find one on CSDN, but it would not work in my environment. Then I came across http://stackoverflow.com/questions/20021169/hadoop-2-2-0-eclipse-plugin, which says: "There is no eclipse plugin for 2.2.0 its still in development stage but you cna try intellj which is also nice dev tool for java".

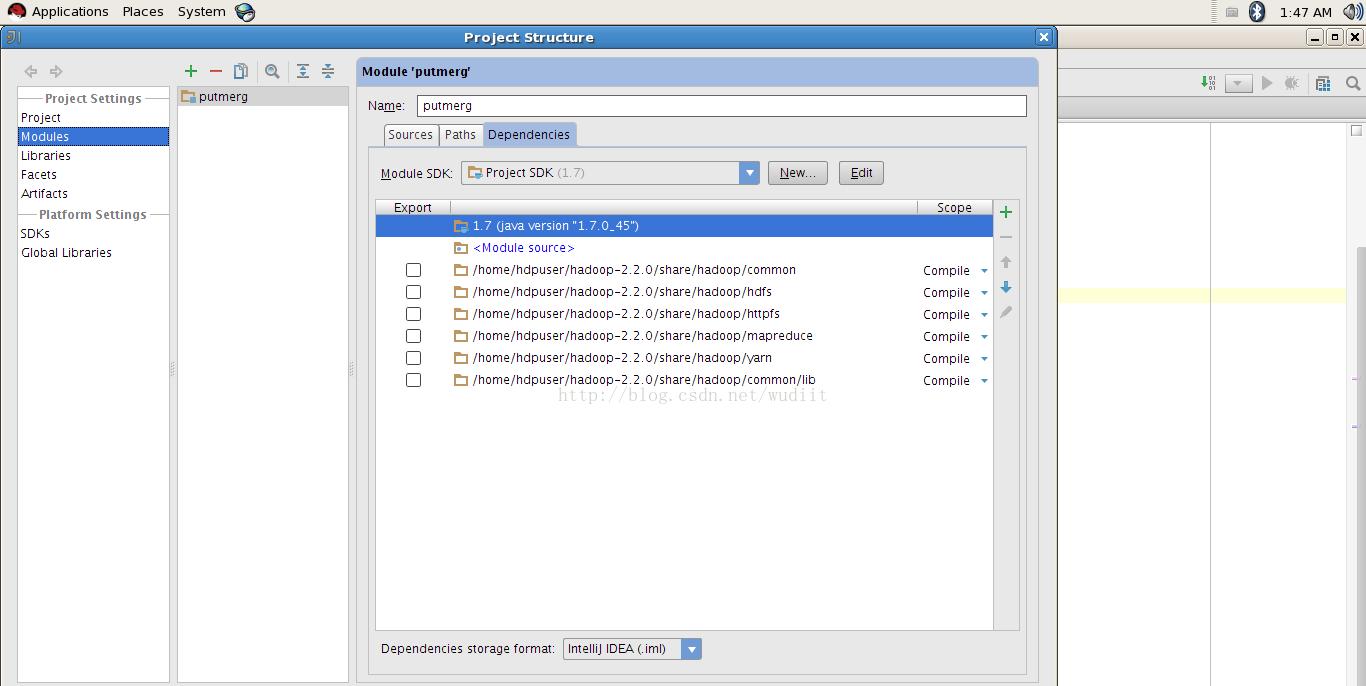

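Following the link to http://vichargrave.com/intellij-project-for-building-hadoop-the-definitive-guide-examples/, I downloaded IntelliJ IDEA 13 and configured the project as described in that article. It felt much like setting up includes when compiling C.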

Then I pasted in the following code, read through it to understand what it does, and added comments:

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class PutMerge {

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.err.println("Usage: PutMerge <local input dir> <HDFS output file>");
            System.exit(1);
        }

        Configuration conf = new Configuration();
        FileSystem hdfs = FileSystem.get(conf);       // default file system from the cluster config (HDFS here)
        FileSystem local = FileSystem.getLocal(conf); // FileSystem object for the local file system

        Path inputDir = new Path(args[0]);  // argument 1: local input directory
        Path hdfsFile = new Path(args[1]);  // argument 2: target file in HDFS

        try {
            FileStatus[] inputFiles = local.listStatus(inputDir); // list the files in the input directory

            // try-with-resources closes the HDFS output stream even if a copy fails
            try (FSDataOutputStream out = hdfs.create(hdfsFile)) {
                // read each file in inputDir and append its bytes to the HDFS file
                for (FileStatus status : inputFiles) {
                    if (status.isDirectory()) {
                        continue; // skip subdirectories
                    }
                    System.out.println(status.getPath().getName());
                    try (FSDataInputStream in = local.open(status.getPath())) {
                        byte[] buffer = new byte[256];
                        int bytesRead;
                        while ((bytesRead = in.read(buffer)) > 0) {
                            out.write(buffer, 0, bytesRead);
                        }
                    }
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```
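To experiment with the copy loop without a running cluster, here is a minimal sketch of the same merge logic using only the JDK's `java.nio.file` API, with a local output file standing in for the HDFS file. The class name `LocalPutMerge` and the sorting by file name are my own assumptions, not from the book: as far as I know, Hadoop's `listStatus` (like most directory listings) does not promise any particular order, so sorting makes the merge order predictable.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical local-only version of PutMerge: both input and output are local files.
public class LocalPutMerge {

    // Concatenates every regular file in inputDir (sorted by name) into outputFile.
    // Returns the merged file names in merge order.
    public static List<String> merge(Path inputDir, Path outputFile) throws IOException {
        List<Path> inputs = new ArrayList<>();
        try (DirectoryStream<Path> dir = Files.newDirectoryStream(inputDir)) {
            for (Path p : dir) {
                if (Files.isRegularFile(p)) { // skip subdirectories
                    inputs.add(p);
                }
            }
        }
        Collections.sort(inputs); // directory listings have no guaranteed order

        List<String> merged = new ArrayList<>();
        byte[] buffer = new byte[4096];
        try (OutputStream out = Files.newOutputStream(outputFile)) {
            for (Path p : inputs) {
                merged.add(p.getFileName().toString());
                try (InputStream in = Files.newInputStream(p)) {
                    int bytesRead;
                    // read() returns -1 at end of stream
                    while ((bytesRead = in.read(buffer)) != -1) {
                        out.write(buffer, 0, bytesRead);
                    }
                }
            }
        }
        return merged;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.err.println("Usage: LocalPutMerge <inputDir> <outputFile>");
            System.exit(1);
        }
        for (String name : merge(Paths.get(args[0]), Paths.get(args[1]))) {
            System.out.println(name);
        }
    }
}
```

Usage would be `java LocalPutMerge ~/input merged.txt`, printing the file names as they are merged, just like the HDFS version.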

Compile it. This produces the corresponding PutMerge.class file, placed in /home/hdpuser/IdeaProjects/putmerg/out/production/putmerg.

Then package it:

```
jar cvf PutMerge.jar -C /home/hdpuser/IdeaProjects/putmerg/out/production/putmerg .
```

This produces PutMerge.jar.
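`jar cvf PutMerge.jar -C dir .` changes into `dir` and stores everything under it, so the class ends up at the root of the JAR. The manifest `jar` writes here has no `Main-Class`, which is why the class name is passed on the `hadoop jar` command line; as I understand Hadoop's RunJar, when the manifest does name a `Main-Class`, the first argument after the JAR is treated as a program argument instead. Below is a rough sketch of the packaging step with `java.util.jar` (it stores files only, not directory entries); `JarFromDir` and its optional `mainClass` parameter are hypothetical additions of mine.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.Attributes;
import java.util.jar.JarEntry;
import java.util.jar.JarOutputStream;
import java.util.jar.Manifest;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class JarFromDir {

    // Rough equivalent of `jar cf jarFile -C dir .`: every regular file under dir
    // becomes an entry named by its path relative to dir. mainClass may be null.
    public static List<String> build(Path dir, Path jarFile, String mainClass) throws IOException {
        Manifest manifest = new Manifest();
        Attributes attrs = manifest.getMainAttributes();
        // Without Manifest-Version the JDK writes no main attributes at all.
        attrs.put(Attributes.Name.MANIFEST_VERSION, "1.0");
        if (mainClass != null) {
            attrs.put(Attributes.Name.MAIN_CLASS, mainClass);
        }

        List<Path> files;
        try (Stream<Path> walk = Files.walk(dir)) {
            files = walk.filter(Files::isRegularFile).sorted().collect(Collectors.toList());
        }

        List<String> names = new ArrayList<>();
        try (OutputStream os = Files.newOutputStream(jarFile);
             JarOutputStream jar = new JarOutputStream(os, manifest)) {
            for (Path f : files) {
                // JAR entry names always use '/' as the separator
                String name = dir.relativize(f).toString().replace('\\', '/');
                jar.putNextEntry(new JarEntry(name));
                Files.copy(f, jar); // copy the file's bytes into the current entry
                jar.closeEntry();
                names.add(name);
            }
        }
        return names;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java JarFromDir <classDir> <jarFile> [MainClass]
        String mainClass = args.length > 2 ? args[2] : null;
        for (String name : build(Paths.get(args[0]), Paths.get(args[1]), mainClass)) {
            System.out.println("added: " + name);
        }
    }
}
```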

Next, I created an input directory under /home/hdpuser containing two files:

```
$ ls input
testdt  tetdt2
$ cat input/*
wudi 123 aaa
cg 321 bbb
mm 111 ccc
a b c
x y z
```

Test run:

```
$ hadoop jar PutMerge.jar PutMerge ~/input /output
testdt
tetdt2
```

Check the generated output file in HDFS:

```
$ hadoop fs -cat /output
wudi 123 aaa
cg 321 bbb
mm 111 ccc
a b c
x y z
```
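Checking the output by eye is fine for five lines. Here is a small sketch that automates the check, assuming the HDFS file has first been copied back with `hadoop fs -get /output merged.txt`, and that the inputs were merged in file-name order (true for this run, but the original PutMerge does not sort its input list). `MergeCheck` is a hypothetical name.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

public class MergeCheck {

    // True if `merged` is byte-for-byte the concatenation of the regular
    // files in inputDir, taken in file-name order.
    public static boolean matches(Path inputDir, Path merged) throws IOException {
        List<Path> inputs = new ArrayList<>();
        try (DirectoryStream<Path> s = Files.newDirectoryStream(inputDir)) {
            for (Path p : s) {
                if (Files.isRegularFile(p)) {
                    inputs.add(p);
                }
            }
        }
        Collections.sort(inputs);

        ByteArrayOutputStream expected = new ByteArrayOutputStream();
        for (Path p : inputs) {
            expected.write(Files.readAllBytes(p));
        }
        return Arrays.equals(expected.toByteArray(), Files.readAllBytes(merged));
    }
}
```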

Then old habits kicked in: I still wanted to compile the Java file from the command line. After reading up on it, I did the following.

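Step 1. Find out which JAR contains each of the classes imported in the code. (With no Java background, I could not tell from the API documentation at http://hadoop.apache.org/docs/current/api/org/apache/hadoop/fs/package-summary.html which JAR these classes live in, so I could only guess from their names.)

Luckily, my first guess, hadoop-common-2.2.0.jar, was right; listing its contents with `jar tvf` confirmed it: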

```
$ pwd
/home/hdpuser/hadoop-2.2.0/share/hadoop/common
$ ls
hadoop-common-2.2.0.jar  hadoop-common-2.2.0-tests.jar  hadoop-nfs-2.2.0.jar  jdiff  lib  sources  templates
$ jar tvf hadoop-common-2.2.0.jar | grep -w conf
     0 Fri Dec 06 21:35:02 CST 2013 org/apache/hadoop/conf/
   953 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$ParsedTimeDuration$5.class
  2470 Fri Dec 06 21:35:00 CST 2013 org/apache/hadoop/conf/ReconfigurableBase.class
   531 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Reconfigurable.class
  1901 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/ReconfigurationException.class
  3014 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$ParsedTimeDuration.class
  2351 Fri Dec 06 21:35:02 CST 2013 org/apache/hadoop/conf/ReconfigurationUtil.class
   451 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$NegativeCacheSentinel.class
   719 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/conf/Configurable.class
   770 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$IntegerRanges$Range.class
  3857 Fri Dec 06 21:35:02 CST 2013 org/apache/hadoop/conf/ConfServlet.class
   675 Fri Dec 06 21:35:02 CST 2013 org/apache/hadoop/conf/ReconfigurationUtil$PropertyChange.class
 46708 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration.class
   980 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$Resource.class
  2032 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$DeprecatedKeyInfo.class
  8355 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/ReconfigurationServlet.class
  2126 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$IntegerRanges$RangeNumberIterator.class
   950 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$ParsedTimeDuration$7.class
   951 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$ParsedTimeDuration$6.class
   953 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$ParsedTimeDuration$4.class
   958 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$ParsedTimeDuration$1.class
   959 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$ParsedTimeDuration$2.class
   236 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$1.class
   561 Fri Dec 06 21:35:02 CST 2013 org/apache/hadoop/conf/ConfServlet$BadFormatException.class
   959 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$ParsedTimeDuration$3.class
  1231 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/conf/Configured.class
  3611 Fri Dec 06 21:34:58 CST 2013 org/apache/hadoop/conf/Configuration$IntegerRanges.class
$ jar tvf hadoop-common-2.2.0.jar | grep -w FileSystem
  2347 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/fs/FileSystem$Cache$Key.class
  1535 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/fs/FileSystem$Cache$ClientFinalizer.class
   586 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/fs/FileSystem$3.class
  6182 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/fs/FileSystem$Cache.class
 46009 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/fs/FileSystem.class
  2764 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/fs/FileSystem$5.class
  2850 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/fs/FileSystem$Statistics.class
  1255 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/fs/FileSystem$1.class
  2541 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/fs/FileSystem$4.class
  1263 Fri Dec 06 21:34:56 CST 2013 org/apache/hadoop/fs/FileSystem$2.class
  1022 Fri Dec 06 21:34:56 CST 2013 META-INF/services/org.apache.hadoop.fs.FileSystem
```
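The `jar tvf | grep` check works because a class named `a.b.C` is stored in a JAR as the entry `a/b/C.class`, with nested classes stored as `Outer$Inner.class`, as the listing shows. A minimal sketch of the same check in Java, using `java.util.zip.ZipFile` (a JAR is a ZIP file); `JarClassCheck` is a hypothetical helper name, and nested classes must be given in their `Outer$Inner` form.

```java
import java.io.IOException;
import java.util.zip.ZipFile;

public class JarClassCheck {

    // org.apache.hadoop.fs.FileSystem -> org/apache/hadoop/fs/FileSystem.class
    public static String entryName(String className) {
        return className.replace('.', '/') + ".class";
    }

    // True if the JAR at jarPath contains the given fully qualified class.
    public static boolean containsClass(String jarPath, String className) throws IOException {
        try (ZipFile jar = new ZipFile(jarPath)) {
            return jar.getEntry(entryName(className)) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        // Usage: java JarClassCheck <jar> <fully.qualified.ClassName>...
        for (int i = 1; i < args.length; i++) {
            System.out.println(args[i] + " -> " + containsClass(args[0], args[i]));
        }
    }
}
```

For example, `java JarClassCheck hadoop-common-2.2.0.jar org.apache.hadoop.fs.FileSystem org.apache.hadoop.conf.Configuration` checks two of the imports in one go.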

Step 2. Compile from the command line in the project's src directory. This produced 6 warnings, which I simply ignored.

```
$ javac -classpath /home/hdpuser/hadoop-2.2.0/share/hadoop/common/hadoop-common-2.2.0.jar PutMerge.java
/home/hdpuser/hadoop-2.2.0/share/hadoop/common/hadoop-common-2.2.0.jar(org/apache/hadoop/fs/FSDataInputStream.class): warning: Cannot find annotation method 'value()' in type 'LimitedPrivate': class file for org.apache.hadoop.classification.InterfaceAudience not found
/home/hdpuser/hadoop-2.2.0/share/hadoop/common/hadoop-common-2.2.0.jar(org/apache/hadoop/fs/FSDataOutputStream.class): warning: Cannot find annotation method 'value()' in type 'LimitedPrivate'
/home/hdpuser/hadoop-2.2.0/share/hadoop/common/hadoop-common-2.2.0.jar(org/apache/hadoop/fs/FileSystem.class): warning: Cannot find annotation method 'value()' in type 'LimitedPrivate'
/home/hdpuser/hadoop-2.2.0/share/hadoop/common/hadoop-common-2.2.0.jar(org/apache/hadoop/fs/FileSystem.class): warning: Cannot find annotation method 'value()' in type 'LimitedPrivate'
/home/hdpuser/hadoop-2.2.0/share/hadoop/common/hadoop-common-2.2.0.jar(org/apache/hadoop/fs/FileSystem.class): warning: Cannot find annotation method 'value()' in type 'LimitedPrivate'
/home/hdpuser/hadoop-2.2.0/share/hadoop/common/hadoop-common-2.2.0.jar(org/apache/hadoop/fs/Path.class): warning: Cannot find annotation method 'value()' in type 'LimitedPrivate'
6 warnings
```
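The warnings say that `org.apache.hadoop.classification.InterfaceAudience`, whose annotations the Hadoop classes carry, is not on the compile classpath; missing annotation classes only produce warnings, which is why compilation still succeeds. As far as I know, that class ships in a separate hadoop-annotations JAR (in a 2.2.0 tarball I would expect it under `share/hadoop/common/lib`), so adding that directory to the classpath should silence the warnings. `javac` also accepts a `dir/*` wildcard classpath entry, and `hadoop classpath` prints the classpath Hadoop itself uses. The sketch below only makes the idea explicit by joining every JAR in the given directories into one `-classpath` value; `ClasspathBuilder` is a hypothetical name.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class ClasspathBuilder {

    // Joins every *.jar directly inside each directory into one classpath string,
    // separated by the platform's path separator (':' on Linux).
    public static String build(List<Path> dirs) throws IOException {
        List<String> jars = new ArrayList<>();
        for (Path dir : dirs) {
            List<String> inDir = new ArrayList<>();
            try (DirectoryStream<Path> s = Files.newDirectoryStream(dir, "*.jar")) {
                for (Path p : s) {
                    inDir.add(p.toString());
                }
            }
            Collections.sort(inDir); // stable, readable order within each directory
            jars.addAll(inDir);
        }
        return String.join(File.pathSeparator, jars);
    }

    public static void main(String[] args) throws IOException {
        List<Path> dirs = new ArrayList<>();
        for (String a : args) {
            dirs.add(Paths.get(a));
        }
        System.out.println(build(dirs));
    }
}
```

After compiling it, something like `javac -classpath "$(java ClasspathBuilder ~/hadoop-2.2.0/share/hadoop/common ~/hadoop-2.2.0/share/hadoop/common/lib)" PutMerge.java` would pass both directories' JARs to javac.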

Step 3. The corresponding class file was generated; after packaging it into a JAR, it ran fine under Hadoop.

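Summary:

Today's work actually had little to do with Hadoop itself; it was really just about compiling Java.

I had never played with Java and never planned to touch it; I still prefer relatively "low-level" things like C. Hadoop does offer a way to write code in C++, but somehow it never feels quite right. The next step is to write some MapReduce programs; without doing that, Hadoop loses much of its fun and hands-on experience.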