Preface

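The previous post covered the training process, but the networks that ship with the project are all fairly large, which makes deployment on edge devices difficult. This post therefore records how to add a new network and train with it, using RepVGG as the example; MobileNet, ShuffleNet, and similar networks are configured the same way.

It also covers extracting a subset of the PA100k attributes to use as a custom dataset for training. For a dataset you have labeled yourself, prepare it in the same format.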

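1. Adding a new network: RepVGG

The network used in this project is not very different from a classification network. Looking at how it is built, it is the same convolution + fully connected structure, so adding a network is straightforward: only the backbone needs to be replaced.

Add a repvgg.py file under models/backbone with the content below. Only two backbones are exposed: RepVGG_A0 and RepVGG_A0_m: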

```python
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F

from models.registry import BACKBONE

__all__ = ['RepVGG_A0', 'RepVGG_A0_m']


def conv_bn(in_channels, out_channels, kernel_size, stride, padding, groups=1):
    # Bias-free convolution followed by BatchNorm (used for the 3x3 and 1x1 branches)
    result = nn.Sequential()
    result.add_module('conv', nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                        kernel_size=kernel_size, stride=stride, padding=padding,
                                        groups=groups, bias=False))
    result.add_module('bn', nn.BatchNorm2d(num_features=out_channels))
    return result


class RepVGGBlock(nn.Module):

    def __init__(self, in_channels, out_channels, kernel_size,
                 stride=1, padding=0, dilation=1, groups=1, padding_mode='zeros', deploy=False):
        super(RepVGGBlock, self).__init__()
        self.deploy = deploy  # True: build the single fused conv used for deployment
        self.groups = groups
        self.in_channels = in_channels

        assert kernel_size == 3
        assert padding == 1

        padding_11 = padding - kernel_size // 2  # padding of the 1x1 branch

        self.nonlinearity = nn.ReLU()  # activation

        if deploy:
            self.rbr_reparam = nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                         kernel_size=kernel_size, stride=stride, padding=padding,
                                         dilation=dilation, groups=groups, bias=True,
                                         padding_mode=padding_mode)
        else:
            # The identity branch only exists when input and output shapes match
            self.rbr_identity = nn.BatchNorm2d(num_features=in_channels) \
                if out_channels == in_channels and stride == 1 else None
            self.rbr_dense = conv_bn(in_channels=in_channels, out_channels=out_channels,
                                     kernel_size=kernel_size, stride=stride, padding=padding, groups=groups)
            self.rbr_1x1 = conv_bn(in_channels=in_channels, out_channels=out_channels,
                                   kernel_size=1, stride=stride, padding=padding_11, groups=groups)
            # print('RepVGG Block, identity = ', self.rbr_identity)

    def forward(self, inputs):
        if hasattr(self, 'rbr_reparam'):
            return self.nonlinearity(self.rbr_reparam(inputs))

        if self.rbr_identity is None:
            id_out = 0
        else:
            id_out = self.rbr_identity(inputs)

        return self.nonlinearity(self.rbr_dense(inputs) + self.rbr_1x1(inputs) + id_out)

    # This func derives the equivalent kernel and bias in a DIFFERENTIABLE way.
    # You can get the equivalent kernel and bias at any time and do whatever you want,
    # for example, apply some penalties or constraints during training, just like you do to the other models.
    # May be useful for quantization or pruning.
    def get_equivalent_kernel_bias(self):
        kernel3x3, bias3x3 = self._fuse_bn_tensor(self.rbr_dense)   # fuse the 3x3 conv with its BN
        kernel1x1, bias1x1 = self._fuse_bn_tensor(self.rbr_1x1)     # fuse the 1x1 conv with its BN
        kernelid, biasid = self._fuse_bn_tensor(self.rbr_identity)  # fuse the identity (3x3 kernel, center = 1) with its BN
        return kernel3x3 + self._pad_1x1_to_3x3_tensor(kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid

    def _pad_1x1_to_3x3_tensor(self, kernel1x1):
        if kernel1x1 is None:
            return 0
        else:
            return F.pad(kernel1x1, [1, 1, 1, 1])

    def _fuse_bn_tensor(self, branch):
        if branch is None:
            return 0, 0
        if isinstance(branch, nn.Sequential):
            kernel = branch.conv.weight
            running_mean = branch.bn.running_mean
            running_var = branch.bn.running_var
            gamma = branch.bn.weight
            beta = branch.bn.bias
            eps = branch.bn.eps
        else:
            assert isinstance(branch, nn.BatchNorm2d)
            if not hasattr(self, 'id_tensor'):
                # Build the 3x3 kernel that is equivalent to an identity mapping
                input_dim = self.in_channels // self.groups
                kernel_value = np.zeros((self.in_channels, input_dim, 3, 3), dtype=np.float32)
                for i in range(self.in_channels):
                    kernel_value[i, i % input_dim, 1, 1] = 1
                self.id_tensor = torch.from_numpy(kernel_value).to(branch.weight.device)
            kernel = self.id_tensor
            running_mean = branch.running_mean
            running_var = branch.running_var
            gamma = branch.weight
            beta = branch.bias
            eps = branch.eps
        std = (running_var + eps).sqrt()
        t = (gamma / std).reshape(-1, 1, 1, 1)
        return kernel * t, beta - running_mean * gamma / std

    def repvgg_convert(self):
        kernel, bias = self.get_equivalent_kernel_bias()
        return kernel.detach().cpu().numpy(), bias.detach().cpu().numpy()


class RepVGG(nn.Module):

    def __init__(self, num_blocks, num_classes=1000, width_multiplier=None, override_groups_map=None, deploy=False):
        super(RepVGG, self).__init__()

        assert len(width_multiplier) == 4

        self.deploy = deploy
        self.override_groups_map = override_groups_map or dict()

        assert 0 not in self.override_groups_map

        self.in_planes = min(64, int(64 * width_multiplier[0]))
        self.out_planes = int(512 * width_multiplier[3])

        self.stage0 = RepVGGBlock(in_channels=3, out_channels=self.in_planes, kernel_size=3,
                                  stride=2, padding=1, deploy=self.deploy)
        self.cur_layer_idx = 1
        self.stage1 = self._make_stage(int(64 * width_multiplier[0]), num_blocks[0], stride=2)
        self.stage2 = self._make_stage(int(128 * width_multiplier[1]), num_blocks[1], stride=2)
        self.stage3 = self._make_stage(int(256 * width_multiplier[2]), num_blocks[2], stride=2)
        self.stage4 = self._make_stage(int(512 * width_multiplier[3]), num_blocks[3], stride=2)
        # The classification head is disabled because the module is used as a backbone only
        # self.gap = nn.AdaptiveAvgPool2d(output_size=1)
        # self.linear = nn.Linear(int(512 * width_multiplier[3]), num_classes)

    def _make_stage(self, planes, num_blocks, stride):
        strides = [stride] + [1] * (num_blocks - 1)
        blocks = []
        for stride in strides:
            cur_groups = self.override_groups_map.get(self.cur_layer_idx, 1)
            blocks.append(RepVGGBlock(in_channels=self.in_planes, out_channels=planes, kernel_size=3,
                                      stride=stride, padding=1, groups=cur_groups, deploy=self.deploy))
            self.in_planes = planes
            self.cur_layer_idx += 1
        return nn.Sequential(*blocks)

    def forward(self, x):
        out = self.stage0(x)
        out = self.stage1(out)
        out = self.stage2(out)
        out = self.stage3(out)
        out = self.stage4(out)
        # out = self.gap(out)
        # out = out.view(out.size(0), -1)
        # out = self.linear(out)
        return out


optional_groupwise_layers = [2, 4, 6, 8, 10, 12, 14, 16, 18, 20, 22, 24, 26]
g2_map = {l: 2 for l in optional_groupwise_layers}
g4_map = {l: 4 for l in optional_groupwise_layers}


def create_RepVGG_A0(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[2, 4, 14, 1], num_classes=num_classes,
                  width_multiplier=[0.75, 0.75, 0.75, 2.5], override_groups_map=None, deploy=deploy)


def create_RepVGG_A0_m(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[2, 4, 14, 1], num_classes=num_classes,
                  width_multiplier=[0.75, 0.75, 0.75, 1], override_groups_map=None, deploy=deploy)


def create_RepVGG_A0_s(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[2, 4, 14, 1], num_classes=num_classes,
                  width_multiplier=[0.75, 0.75, 0.5, 1], override_groups_map=None, deploy=deploy)


def create_RepVGG_A1(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[2, 4, 14, 1], num_classes=num_classes,
                  width_multiplier=[1, 1, 1, 2.5], override_groups_map=None, deploy=deploy)


def create_RepVGG_A2(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[2, 4, 14, 1], num_classes=num_classes,
                  width_multiplier=[1.5, 1.5, 1.5, 2.75], override_groups_map=None, deploy=deploy)


def create_RepVGG_B0(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[4, 6, 16, 1], num_classes=num_classes,
                  width_multiplier=[1, 1, 1, 2.5], override_groups_map=None, deploy=deploy)


def create_RepVGG_B1(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[4, 6, 16, 1], num_classes=num_classes,
                  width_multiplier=[2, 2, 2, 4], override_groups_map=None, deploy=deploy)


# The grouped variants also accept num_classes so that repvgg_model_convert can rebuild them
def create_RepVGG_B1g2(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[4, 6, 16, 1], num_classes=num_classes,
                  width_multiplier=[2, 2, 2, 4], override_groups_map=g2_map, deploy=deploy)


def create_RepVGG_B1g4(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[4, 6, 16, 1], num_classes=num_classes,
                  width_multiplier=[2, 2, 2, 4], override_groups_map=g4_map, deploy=deploy)


def create_RepVGG_B2(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[4, 6, 16, 1], num_classes=num_classes,
                  width_multiplier=[2.5, 2.5, 2.5, 5], override_groups_map=None, deploy=deploy)


def create_RepVGG_B2g2(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[4, 6, 16, 1], num_classes=num_classes,
                  width_multiplier=[2.5, 2.5, 2.5, 5], override_groups_map=g2_map, deploy=deploy)


def create_RepVGG_B2g4(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[4, 6, 16, 1], num_classes=num_classes,
                  width_multiplier=[2.5, 2.5, 2.5, 5], override_groups_map=g4_map, deploy=deploy)


def create_RepVGG_B3(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[4, 6, 16, 1], num_classes=num_classes,
                  width_multiplier=[3, 3, 3, 5], override_groups_map=None, deploy=deploy)


def create_RepVGG_B3g2(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[4, 6, 16, 1], num_classes=num_classes,
                  width_multiplier=[3, 3, 3, 5], override_groups_map=g2_map, deploy=deploy)


def create_RepVGG_B3g4(num_classes=1000, deploy=False):
    return RepVGG(num_blocks=[4, 6, 16, 1], num_classes=num_classes,
                  width_multiplier=[3, 3, 3, 5], override_groups_map=g4_map, deploy=deploy)


func_dict = {
    'RepVGG-A0': create_RepVGG_A0,
    'RepVGG-A0_s': create_RepVGG_A0_s,
    'RepVGG-A0_m': create_RepVGG_A0_m,
    'RepVGG-A1': create_RepVGG_A1,
    'RepVGG-A2': create_RepVGG_A2,
    'RepVGG-B0': create_RepVGG_B0,
    'RepVGG-B1': create_RepVGG_B1,
    'RepVGG-B1g2': create_RepVGG_B1g2,
    'RepVGG-B1g4': create_RepVGG_B1g4,
    'RepVGG-B2': create_RepVGG_B2,
    'RepVGG-B2g2': create_RepVGG_B2g2,
    'RepVGG-B2g4': create_RepVGG_B2g4,
    'RepVGG-B3': create_RepVGG_B3,
    'RepVGG-B3g2': create_RepVGG_B3g2,
    'RepVGG-B3g4': create_RepVGG_B3g4,
}


def get_RepVGG_func_by_name(name):
    return func_dict[name]


# Use like this:
# train_model = create_RepVGG_A0(deploy=False)
# train train_model
# deploy_model = repvgg_model_convert(train_model, create_RepVGG_A0, save_path='repvgg_deploy.pth')
def repvgg_model_convert(model: torch.nn.Module, build_func, save_path=None, num_classes=1000):
    converted_weights = {}
    for name, module in model.named_modules():
        if hasattr(module, 'repvgg_convert'):
            kernel, bias = module.repvgg_convert()
            converted_weights[name + '.rbr_reparam.weight'] = kernel
            converted_weights[name + '.rbr_reparam.bias'] = bias
        elif isinstance(module, torch.nn.Linear):
            converted_weights[name + '.weight'] = module.weight.detach().cpu().numpy()
            converted_weights[name + '.bias'] = module.bias.detach().cpu().numpy()
        else:
            print(name, type(module))
    del model

    deploy_model = build_func(num_classes=num_classes, deploy=True)
    for name, param in deploy_model.named_parameters():
        print('deploy param: ', name, param.size(), np.mean(converted_weights[name]))
        param.data = torch.from_numpy(converted_weights[name]).float()

    if save_path is not None:
        torch.save(deploy_model.state_dict(), save_path, _use_new_zipfile_serialization=False)

    return deploy_model


def _load_pretrained(model, model_path):
    # Keep only checkpoint tensors whose name and shape match the model.
    # load_state_dict(strict=False) tolerates missing or unexpected keys, but it still
    # raises on a shape mismatch (e.g. stage4 of RepVGG_A0_m against the A0 checkpoint).
    pretrained_params = torch.load(model_path, map_location=torch.device("cpu"))
    model_state = model.state_dict()
    pretrained_params = {k: v for k, v in pretrained_params.items()
                         if k in model_state and model_state[k].shape == v.shape}
    model.load_state_dict(pretrained_params, strict=False)


@BACKBONE.register("repvgg_a0")
def RepVGG_A0(model_path="/home/cai/project/Rethinking_of_PAR/model/RepVGG-A0-train.pth"):
    model = create_RepVGG_A0()
    if model_path is not None:
        _load_pretrained(model, model_path)
    return model


@BACKBONE.register("repvgg_a0_m")
def RepVGG_A0_m(model_path="/home/cai/project/Rethinking_of_PAR/model/RepVGG-A0-train.pth"):
    model = create_RepVGG_A0_m()
    if model_path is not None:
        _load_pretrained(model, model_path)
    return model
```

Pay attention to these two parts: the pretrained weights have to be loaded when the network is created. Other networks are handled in a similar way:

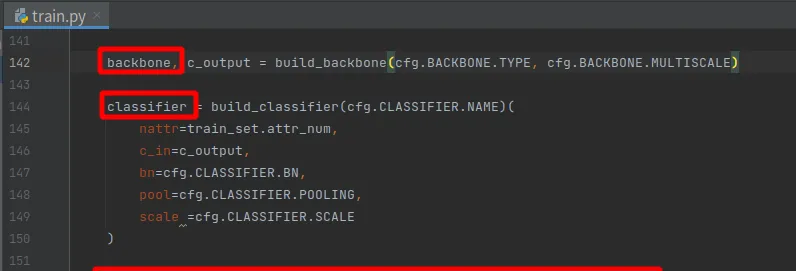
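As a side note on the listing above, `_fuse_bn_tensor` folds each BatchNorm into the convolution in front of it, using W' = W * gamma / std and b' = beta - mean * gamma / std, with std = sqrt(var + eps). The following is a minimal sketch of that identity in plain Python for a single scalar weight. The function names and the numeric values are made up for illustration and do not come from the project or from any checkpoint.

```python
import math


def fuse_conv_bn_scalar(weight, gamma, beta, running_mean, running_var, eps=1e-5):
    """Fold an inference-mode BatchNorm into a bias-free scalar 'convolution'."""
    std = math.sqrt(running_var + eps)
    fused_weight = weight * gamma / std
    fused_bias = beta - running_mean * gamma / std
    return fused_weight, fused_bias


def conv_then_bn(x, weight, gamma, beta, running_mean, running_var, eps=1e-5):
    """Reference path: convolution followed by BatchNorm with running statistics."""
    y = weight * x
    return gamma * (y - running_mean) / math.sqrt(running_var + eps) + beta


# Illustrative values only (hypothetical, not from a trained model)
params = dict(weight=0.8, gamma=1.5, beta=-0.2, running_mean=0.3, running_var=2.0)
fused_w, fused_b = fuse_conv_bn_scalar(**params)

for x in (-1.0, 0.0, 2.5):
    reference = conv_then_bn(x, **params)
    fused = fused_w * x + fused_b
    # Both paths agree up to floating-point rounding
    assert math.isclose(reference, fused, rel_tol=1e-9, abs_tol=1e-12)
```

Because the fused form is again a plain convolution with a bias, the three branches of a block can then be summed into one 3x3 kernel, which is what `get_equivalent_kernel_bias` does.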

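Next, add the output channel counts of the last layer of these two networks to models/model_factory.py:

Import the new backbone in train.py:

Finally, modify the config file to add the repvgg_a0 network, and training can be run: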
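The channel count of the last stage follows from the `width_multiplier` passed to `RepVGG`: `stage4` outputs `int(512 * width_multiplier[3])` channels. A small sketch of that arithmetic, assuming the constructor shown in repvgg.py (the helper name `stage_widths` is mine and is not part of the project):

```python
def stage_widths(width_multiplier):
    """Output channels of stage0..stage4, mirroring the arithmetic in RepVGG.__init__."""
    base = [64, 128, 256, 512]
    stage0 = min(64, int(64 * width_multiplier[0]))
    return [stage0] + [int(b * m) for b, m in zip(base, width_multiplier)]


print(stage_widths([0.75, 0.75, 0.75, 2.5]))  # RepVGG_A0   -> [48, 48, 96, 192, 1280]
print(stage_widths([0.75, 0.75, 0.75, 1]))    # RepVGG_A0_m -> [48, 48, 96, 192, 512]
```

The last entry of each list is the value the classifier head has to accept as its input width.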
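The import matters because a decorator such as `@BACKBONE.register("repvgg_a0")` only runs when the module that contains it is imported. The project's models/registry.py is not shown in this post, so the following is only a sketch of how a decorator-based registry typically works; the `Registry` class and the `build_dummy` function are hypothetical stand-ins and may differ from the real implementation.

```python
class Registry(dict):
    """Hypothetical name -> builder mapping that is filled in by a decorator."""

    def register(self, name):
        def decorator(builder):
            self[name] = builder  # runs when the defining module is imported
            return builder
        return decorator


BACKBONE = Registry()


@BACKBONE.register("repvgg_a0")
def build_dummy():
    # Stand-in for the real RepVGG_A0 builder
    return "repvgg_a0 backbone"


# The name used in a config file can now be resolved to the builder function
assert "repvgg_a0" in BACKBONE
print(BACKBONE["repvgg_a0"]())  # -> repvgg_a0 backbone
```

If the module defining a backbone is never imported, its name is simply absent from the registry and the lookup by config name fails.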

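2. Custom dataset

The PA100k dataset contains 26 attributes. Some of them are of no use to me and I do not want to train on all of them, so I extract 18 of the attributes for training. How should the data be prepared?

First, convert the PA100k .mat annotation file to txt files with the following script: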

```python
import pandas as pd
from scipy import io


def mat2txt(data, key):
    # Dump one entry of the .mat annotation to <key>.txt (CSV text with a header row)
    subdata = data[key]
    dfdata = pd.DataFrame(subdata)
    dfdata.to_csv("/home/cai/data/PA100K/%s.txt" % key, index=False)


if __name__ == "__main__":
    data = io.loadmat("/home/cai/data/PA100K/annotation.mat")
    key_list = ["attributes", "test_images_name", "test_label",
                "train_images_name", "train_label",
                "val_images_name", "val_label"]
    for key in key_list:
        mat2txt(data, key)
```

This produces the following files:

Then use the following script to remove the unwanted attributes and generate new txt files:

```python
# Generate the label files from the txt files
import os

txts = [["train_images_name", "train_label"],
        ["test_images_name", "test_label"],
        ["val_images_name", "val_label"]]
txt_path = "/home/cai/data/PA100K"
remove_idx = [12, 15, 16, 17, 18, 19, 20, 25]  # indices of the attributes to drop

for name_txt, label_txt in txts:
    with open(os.path.join(txt_path, name_txt + ".txt"), "r") as file1:
        name_lines = file1.readlines()
    with open(os.path.join(txt_path, label_txt + ".txt"), "r") as file2:
        label_lines = file2.readlines()

    # Writes train.txt / test.txt / val.txt to the current working directory
    with open(name_txt.split("_")[0] + ".txt", "w") as save_file:
        for i in range(1, len(name_lines)):  # row 0 is the CSV header
            image_name = name_lines[i].strip().split("'")[1]
            labels = label_lines[i].strip().split(",")
            # Every earlier pop shifts the remaining items left by one, hence ind - offset
            for offset, ind in enumerate(remove_idx):
                labels.pop(ind - offset)
            save_file.write("/home/cai/data/PA100K/PA100k/data/" + image_name
                            + "\t" + ",".join(labels) + "\n")
```

This produces three files, train.txt, test.txt, and val.txt, with the content shown below. For a dataset you annotated yourself, convert it to this format:
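The `ind - i` offset in the removal loop is easy to get wrong, so here is a small self-contained check of the index arithmetic. It uses the integers 0 to 25 as stand-ins for the 26 PA100k label columns; the helper name `drop_columns` is mine and is not part of the project.

```python
REMOVE_IDX = [12, 15, 16, 17, 18, 19, 20, 25]  # same indices as in the script above


def drop_columns(row, remove_idx):
    """Remove the given original column indices from one label row."""
    row = list(row)
    for offset, ind in enumerate(sorted(remove_idx)):
        row.pop(ind - offset)  # earlier pops shifted this item left by `offset`
    return row


original_columns = list(range(26))  # stand-ins for the 26 PA100k label columns
kept = drop_columns(original_columns, REMOVE_IDX)
print(len(kept))  # -> 18
print(kept)       # -> [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 13, 14, 21, 22, 23, 24]
```

The offset trick only works when the indices are processed in ascending order, which is why the sketch sorts them first.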

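Then write format_mydata.py based on dataset/pedes_attr/preprocess/format_pa100k.py. Before the pkl is generated, the dataset attributes need to be reordered; the reordering rules are described in dataset/pedes_attr/annotation.md. Reordering is optional: to skip it, call generate_data_description with reorder=False. The places that need to be modified are marked in the code: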

```python
import os
import pickle
import random

import numpy as np
from easydict import EasyDict

np.random.seed(0)
random.seed(0)

# Attribute names, in the order they appear in the label file
classes_name = ['Female', 'AgeOver60', 'Age18-60', 'AgeLess18', 'Front', 'Side', 'Back', 'Hat', 'Glasses',
                'HandBag', 'ShoulderBag', 'Backpack', 'ShortSleeve', 'LongSleeve', 'LongCoat',
                'Trousers', 'Shorts', 'Skirt&Dress']

# The new attributes need to be reordered; these are the indices in the reordered
# sequence (see the previous post if this is unclear)
group_order = [7, 8, 12, 13, 14, 15, 16, 17, 9, 10, 11, 1, 2, 3, 0, 4, 5, 6]
# Resulting order:
# ['Hat', 'Glasses', 'ShortSleeve', 'LongSleeve', 'LongCoat', 'Trousers', 'Shorts', 'Skirt&Dress',
#  'HandBag', 'ShoulderBag', 'Backpack', 'AgeOver60', 'Age18-60', 'AgeLess18', 'Female',
#  'Front', 'Side', 'Back']


def make_dir(path):
    if not os.path.exists(path):
        os.mkdir(path)


def generate_data_description(save_dir, label_txt, reorder):
    """
    create a dataset description file, which consists of images, labels
    """
    image_name = []
    image_label = []
    with open(label_txt, "r") as file:
        for line in file:
            # Each line: <image path>\t<comma-separated 0/1 labels>
            fields = line.rstrip("\n").split("\t")
            image_name.append(fields[0])
            image_label.append(list(map(int, fields[1].split(","))))

    dataset = EasyDict()
    dataset.description = 'pa100k'
    dataset.reorder = 'group_order'
    dataset.root = os.path.join(save_dir, 'data')
    dataset.image_name = image_name
    dataset.label = np.array(image_label)
    dataset.attr_name = classes_name

    dataset.label_idx = EasyDict()
    dataset.label_idx.eval = list(range(len(classes_name)))
    if reorder:
        dataset.label_idx.eval = group_order

    # Split sizes: adjust these to your own dataset; the values below are the PA100k split
    dataset.partition = EasyDict()
    dataset.partition.train = np.arange(0, 80000)
    dataset.partition.val = np.arange(80000, 90000)
    dataset.partition.test = np.arange(90000, 100000)
    dataset.partition.trainval = np.arange(0, 90000)

    # Per-attribute positive ratio, used for loss weighting
    dataset.weight_train = np.mean(dataset.label[dataset.partition.train], axis=0).astype(np.float32)
    dataset.weight_trainval = np.mean(dataset.label[dataset.partition.trainval], axis=0).astype(np.float32)

    with open(os.path.join(save_dir, 'dataset_all.pkl'), 'wb+') as f:
        pickle.dump(dataset, f)


if __name__ == "__main__":
    # save_dir = '/mnt/data1/jiajian/datasets/attribute/PA100k/'
    # Dataset root: images in MyData/data, output written to MyData/dataset_all.pkl
    save_dir = '/home/cai/data/PA100K/MyData/'
    # train.txt, val.txt and test.txt merged into label.txt; the partition indices above
    # assume the lines are in the order train, val, test
    label_txt = "/home/cai/project/Rethinking_of_PAR/data/MyData/label.txt"
    generate_data_description(save_dir, label_txt, reorder=True)
```

After making these changes, add the name of your own dataset to this file; mine is named MyData:
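Going back to the reordering in format_mydata.py: when adapting `group_order` to a different attribute list, it helps to verify that it is a valid permutation and that it yields the intended order. A minimal check in plain Python, using the two lists from the script above:

```python
classes_name = ['Female', 'AgeOver60', 'Age18-60', 'AgeLess18', 'Front', 'Side', 'Back',
                'Hat', 'Glasses', 'HandBag', 'ShoulderBag', 'Backpack',
                'ShortSleeve', 'LongSleeve', 'LongCoat', 'Trousers', 'Shorts', 'Skirt&Dress']
group_order = [7, 8, 12, 13, 14, 15, 16, 17, 9, 10, 11, 1, 2, 3, 0, 4, 5, 6]

# Every attribute index must appear exactly once
assert sorted(group_order) == list(range(len(classes_name)))

# Entry k of the reordered list is the attribute at original index group_order[k]
reordered = [classes_name[i] for i in group_order]
print(reordered[:3])   # -> ['Hat', 'Glasses', 'ShortSleeve']
print(reordered[-4:])  # -> ['Female', 'Front', 'Side', 'Back']
```

A duplicated or missing index would silently evaluate one attribute twice and skip another, so the permutation assert is the part worth keeping.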

Create mydata.yaml under configs, adapting the content from the pa100k config. An example follows, with the new network and dataset added:

python train.py -c configs/pedes_baseline/mydata.yaml