Author | Jason Brownlee

Compiled by | WeChat official account editorial team

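Which machine learning algorithm should you use?

This is one of the most frustrating questions in applied machine learning.

A recent paper by Randal Olson and colleagues attempts to answer it and offers guidance on which algorithms and parameters to try.

In this post, you will learn about a study that evaluated many machine learning algorithms across a large number of machine learning datasets, along with the recommendations drawn from it.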

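The Paper

In 2017, Randal Olson et al. published a paper titled “Data-driven Advice for Applying Machine Learning to Bioinformatics Problems”.

Paper: https://arxiv.org/abs/1708.05070

The goal of their work was to address the question every practitioner faces when starting a predictive modeling problem, namely:

Which algorithm should I use?

The authors describe this problem as choice overload: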

Although having several readily-available ML algorithm implementations is advantageous to bioinformatics researchers seeking to move beyond simple statistics, many researchers experience “choice overload” and find difficulty in selecting the right ML algorithm for their problem at hand.

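They approach the problem by running a sample of algorithms across a large sample of machine learning datasets to see which algorithms and parameters generally work best.

The paper describes its work as: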

… a thorough analysis of 13 state-of-the-art, commonly used machine learning algorithms on a set of 165 publicly available classification problems in order to provide data-driven algorithm recommendations to current researchers

Machine Learning Algorithms

A total of 13 different algorithms were chosen for the study. Algorithms were chosen to provide a mix of types or underlying assumptions. The goal was to represent the most common classes of algorithms used in the literature, as well as recent state-of-the-art algorithms.

The complete list of 13 algorithms is provided below:

- Gaussian Naive Bayes (GNB)

- Bernoulli Naive Bayes (BNB)

- Multinomial Naive Bayes (MNB)

- Logistic Regression (LR)

- Stochastic Gradient Descent (SGD)

- Passive Aggressive Classifier (PAC)

- Support Vector Classifier (SVC)

- K-Nearest Neighbor (KNN)

- Decision Tree (DT)

- Random Forest (RF)

- Extra Trees Classifier (ERF)

- AdaBoost (AB)

- Gradient Tree Boosting (GTB)

The scikit-learn library was used to implement these algorithms.

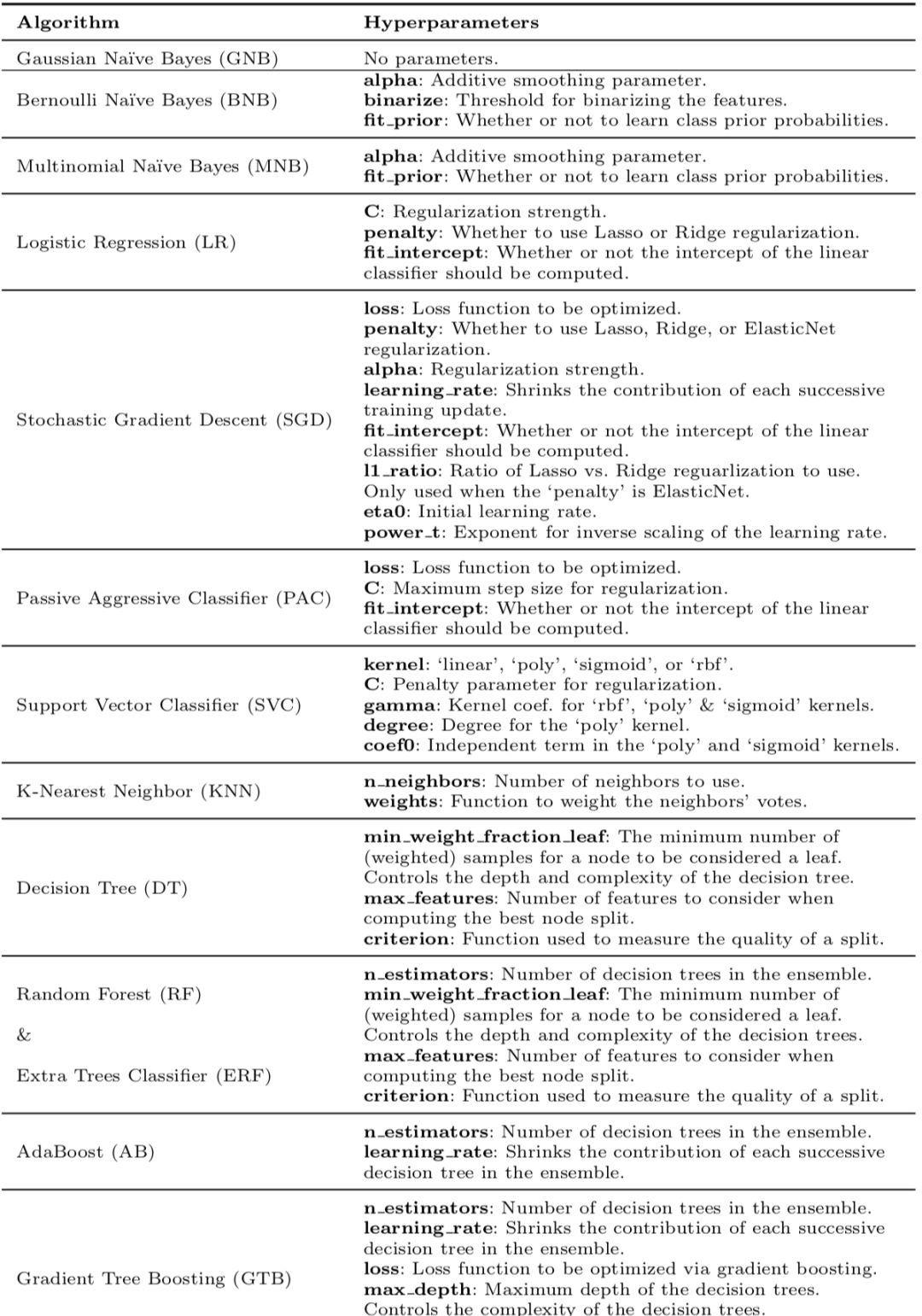

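Each algorithm has zero or more hyperparameters, and each algorithm was tuned using a fixed grid search across sensible values.

The paper provides a table of the algorithms and the hyperparameters evaluated.

Algorithms were evaluated using 10-fold cross-validation and the balanced accuracy measure.

Cross-validation was not repeated, which may introduce some statistical noise into the results.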
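The evaluation setup described above can be sketched with scikit-learn roughly as follows. This is a minimal illustration, not the paper's code: the dataset is a stand-in bundled with scikit-learn, the parameter grid is made up for the example, the stratified shuffled folds are an assumption, and scikit-learn's `balanced_accuracy` scorer may not match the paper's definition of balanced accuracy exactly.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.tree import DecisionTreeClassifier

# Stand-in dataset bundled with scikit-learn (not one of the PMLB datasets).
X, y = load_breast_cancer(return_X_y=True)

# Illustrative grid only; the grids actually used are listed in the paper.
param_grid = {
    "max_depth": [2, 4, 8, None],
    "min_samples_leaf": [1, 5, 10],
}

# A single pass of 10-fold cross-validation (no repeats).
# Stratification and shuffling are assumptions made for this sketch.
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)

search = GridSearchCV(
    estimator=DecisionTreeClassifier(random_state=0),
    param_grid=param_grid,
    scoring="balanced_accuracy",
    cv=cv,
)
search.fit(X, y)

print("Best parameters:", search.best_params_)
print("Best mean balanced accuracy:", round(search.best_score_, 3))
```

Because the folds are evaluated only once, rerunning with a different `random_state` can shift the scores slightly, which is the kind of noise the unrepeated cross-validation may introduce.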

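Machine Learning Datasets

A selection of 165 standard machine learning problems was chosen for the study.

Many of the problems come from the field of bioinformatics, although not all of the datasets belong to this field.

All of the prediction problems are classification problems with two or more classes.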

The algorithms were compared on 165 supervised classification datasets from the Penn Machine Learning Benchmark (PMLB). […] PMLB is a collection of publicly available classification problems that have been standardized to the same format and collected in a central location with easy access via Python.

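The datasets come from the Penn Machine Learning Benchmark (PMLB) collection. You can learn more about it in the GitHub project: https://github.com/EpistasisLab/penn-ml-benchmarks

All datasets were standardized before models were fit.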
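One way to standardize features before fitting is sketched below, assuming scikit-learn's `StandardScaler` (zero mean, unit variance per feature) and a bundled stand-in dataset. Wrapping the scaler in a pipeline refits it on the training portion of each fold; whether the study scaled inside or outside the folds is not stated here, so treat that choice as an assumption of this sketch.

```python
from sklearn.datasets import load_wine
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Stand-in multi-class dataset bundled with scikit-learn (not from PMLB).
X, y = load_wine(return_X_y=True)

# The scaler is fit on each training fold only, then applied to the held-out fold.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

scores = cross_val_score(model, X, y, cv=10, scoring="balanced_accuracy")
print("Mean balanced accuracy:", round(scores.mean(), 3))
```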

Analysis of Results

The entire experimental design consisted of over 5.5 million ML algorithm and parameter evaluations in total, resulting in a rich set of data that is analyzed from several viewpoints…

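The algorithms were ranked by performance on each dataset, and then the mean rank of each algorithm was calculated.

This gives a rough, easy-to-understand picture of which algorithms did well or poorly on average.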
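The ranking procedure can be sketched in plain Python as follows. The scores are made-up numbers for illustration only, not results from the paper, and the tie handling (tied algorithms share the average rank) is an assumption of this sketch.

```python
from statistics import mean

# Hypothetical balanced-accuracy scores, invented for this example.
scores = {
    "dataset_a": {"GTB": 0.91, "RF": 0.90, "LR": 0.85, "MNB": 0.70},
    "dataset_b": {"GTB": 0.80, "RF": 0.82, "LR": 0.78, "MNB": 0.79},
    "dataset_c": {"GTB": 0.95, "RF": 0.93, "LR": 0.94, "MNB": 0.60},
}


def rank_algorithms(dataset_scores):
    """Return {algorithm: rank}; rank 1 is the best score, ties share the average rank."""
    ordered = sorted(dataset_scores, key=dataset_scores.get, reverse=True)
    ranks = {}
    i = 0
    while i < len(ordered):
        # Extend j to cover every algorithm tied with the one at position i.
        j = i
        while j + 1 < len(ordered) and dataset_scores[ordered[j + 1]] == dataset_scores[ordered[i]]:
            j += 1
        shared_rank = ((i + 1) + (j + 1)) / 2
        for name in ordered[i:j + 1]:
            ranks[name] = shared_rank
        i = j + 1
    return ranks


per_dataset_ranks = [rank_algorithms(s) for s in scores.values()]
algorithms = per_dataset_ranks[0].keys()
mean_ranks = {a: mean(r[a] for r in per_dataset_ranks) for a in algorithms}

# A lower mean rank means better average performance.
for name, rank in sorted(mean_ranks.items(), key=lambda kv: kv[1]):
    print(f"{name}: {rank:.2f}")
```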

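The results showed that gradient boosting and random forest had the lowest mean ranks (the best performance), while the Naive Bayes approaches had the highest mean ranks (the worst performance).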

The post-hoc test underlines the impressive performance of Gradient Tree Boosting, which significantly outperforms every algorithm except Random Forest at the p < 0.01 level.

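The paper presents these results for all of the algorithms in a chart.

No single algorithm performs best or worst.

This is well known to machine learning practitioners, but it is hard for beginners in the field to grasp.

You must test a suite of algorithms on a given dataset to see what works best.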

… it is worth noting that no one ML algorithm performs best across all 165 datasets. For example, there are 9 datasets for which Multinomial NB performs as well as or better than Gradient Tree Boosting, despite being the overall worst- and best-ranked algorithms, respectively. Therefore, it is still important to consider different ML algorithms when applying ML to new datasets.

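In addition, choosing the right algorithm is not enough. You must also choose the right configuration of that algorithm for your dataset.

Selecting the right ML algorithm and tuning its parameters is vitally important for most problems.

The study found that tuning an algorithm improved its performance by anywhere from 3% to 50%, depending on the algorithm and the dataset.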

The results demonstrate why it is unwise to use default ML algorithm hyperparameters: tuning often improves an algorithm’s accuracy by 3-5%, depending on the algorithm. In some cases, parameter tuning led to CV accuracy improvements of 50%.

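The paper includes a chart showing how much parameter tuning improved each algorithm.

Not all algorithms are needed.

The study found that five algorithms with specific parameter settings achieved top 1% performance on 106 of the 165 datasets tested.

These five algorithms are recommended: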

- Gradient Boosting

- Random Forest

- Support Vector Classifier

- Extra Trees

- Logistic Regression

The paper provides a table of these algorithms, including the recommended parameter settings and the number of datasets covered, i.e. where the algorithm and configuration achieved top 1% performance.

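Practical Findings

The paper has two findings that are valuable for practitioners, especially those who are just starting out with machine learning algorithms or are unsure where to begin.

1. Use Ensemble Trees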

The analysis demonstrates the strength of state-of-the-art, tree-based ensemble algorithms, while also showing the problem-dependent nature of ML algorithm performance.

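2. Spot Check and Tune

No one can look at your problem and tell you which algorithm to use.

You must test a suite of parameters for each algorithm to see which approach works best for your specific problem.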

In addition, the analysis shows that selecting the right ML algorithm and thoroughly tuning its parameters can lead to a significant improvement in predictive accuracy on most problems, and is thus a critical step in every ML application.
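A spot check of the five recommended algorithm families might look like the sketch below. It uses scikit-learn defaults rather than the paper's recommended parameter settings, a bundled stand-in dataset, and a standardization step in front of every model; all of these are assumptions made for illustration. In practice, each candidate would then be tuned with a grid search.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import (
    ExtraTreesClassifier,
    GradientBoostingClassifier,
    RandomForestClassifier,
)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Stand-in dataset bundled with scikit-learn (not one of the PMLB datasets).
X, y = load_breast_cancer(return_X_y=True)

# Default settings, not the configurations recommended in the paper.
candidates = {
    "Gradient Boosting": GradientBoostingClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(random_state=0),
    "Support Vector Classifier": SVC(),
    "Extra Trees": ExtraTreesClassifier(random_state=0),
    "Logistic Regression": LogisticRegression(max_iter=1000),
}

results = {}
for name, clf in candidates.items():
    model = make_pipeline(StandardScaler(), clf)
    fold_scores = cross_val_score(model, X, y, cv=10, scoring="balanced_accuracy")
    results[name] = fold_scores.mean()

# Print the candidates from best to worst mean balanced accuracy.
for name, score in sorted(results.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {score:.3f}")
```

The ordering on one dataset says little about another, so the comparison is worth repeating for each new problem.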

For more discussion of this topic, see: https://machinelearningmastery.com/a-data-driven-approach-to-machine-learning/

Further Reading

If you would like to go deeper, here are more resources on the topic:

- Data-driven Advice for Applying Machine Learning to Bioinformatics Problems(https://arxiv.org/abs/1708.05070)

- scikit-learn benchmarks on GitHub(https://github.com/rhiever/sklearn-benchmarks)

- Penn Machine Learning Benchmarks(https://github.com/EpistasisLab/penn-ml-benchmarks)

- Quantitative comparison of scikit-learn’s predictive models on a large number of machine learning datasets: A good start(https://crossinvalidation.com/2017/08/22/quantitative-comparison-of-scikit-learns-predictive-models-on-a-large-number-of-machine-learning-datasets-a-good-start/)

- Use Random Forest: Testing 179 Classifiers on 121 Datasets(https://machinelearningmastery.com/use-random-forest-testing-179-classifiers-121-datasets/)

Original article: https://machinelearningmastery.com/start-with-gradient-boosting/