Author | [email protected] (Zhihu)

Source | https://zhuanlan.zhihu.com/p/411651933

Editor | 計算機視覺工坊 (Computer Vision Workshop)

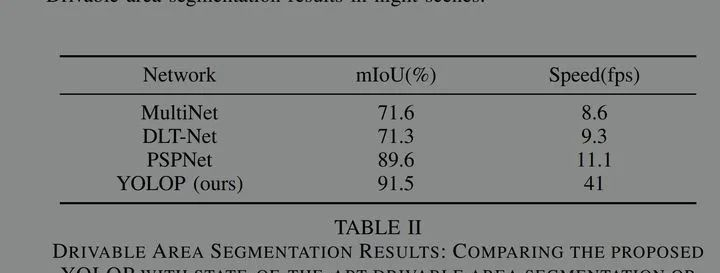

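Huazhong University of Science and Technology (HUST) recently open-sourced YOLOP, a panoptic driving perception model that builds on YOLOv4. Like YOLOv4, it uses CSP, SPP and similar modules; unlike YOLOv4, YOLOP adds two branches alongside the Detect head, one for drivable-area segmentation and one for lane-line segmentation. The model structure is simple and achieves good results.

The official code is available at:

https://github.com/hustvl/YOLOP

My fork contains the converted ONNX files and the conversion code.

This article does not describe the model architecture. Instead, it briefly records how I productionized YOLOP in C++. First, here is the complete C++ project code:

https://github.com/DefTruth/lite.ai.toolkit/blob/main/ort/cv/yolop.cpp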

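Converting to ONNX

To make the model ONNX-compatible, Focus and Detect in common.py first need to be modified. To avoid confusion with the original common.py, I named the modified file common2.py. The modules that need attention are DepthSeperabelConv2d, Focus and Detect.

Modifying DepthSeperabelConv2d

The original code references a BN_MOMENTUM variable that is not defined anywhere, so using it as-is raises an error (issues#19). I therefore removed this variable. The modified version is: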

```python
import torch.nn as nn


class DepthSeperabelConv2d(nn.Module):
    """
    DepthSeperable Convolution 2d with residual connection
    """

    def __init__(self, inplanes, planes, kernel_size=3, stride=1, downsample=None, act=True):
        super(DepthSeperabelConv2d, self).__init__()
        # BN_MOMENTUM removed: it is undefined in the original code (issues#19)
        self.depthwise = nn.Sequential(
            nn.Conv2d(inplanes, inplanes, kernel_size, stride=stride, groups=inplanes, padding=kernel_size // 2,
                      bias=False),
            nn.BatchNorm2d(inplanes)
        )
        # self.depthwise = nn.Conv2d(inplanes, inplanes, kernel_size,
        #                            stride=stride, groups=inplanes, padding=1, bias=False)
        # self.pointwise = nn.Conv2d(inplanes, planes, 1, bias=False)
        self.pointwise = nn.Sequential(
            nn.Conv2d(inplanes, planes, 1, bias=False),
            nn.BatchNorm2d(planes)
        )
        self.downsample = downsample
        self.stride = stride
        try:
            self.act = nn.Hardswish() if act else nn.Identity()
        except AttributeError:  # older PyTorch versions have no nn.Hardswish
            self.act = nn.Identity()

    def forward(self, x):
        # residual = x
        out = self.depthwise(x)
        out = self.act(out)
        out = self.pointwise(out)
        if self.downsample is not None:
            # as in the original code, residual is computed but never added to out
            residual = self.downsample(x)
        out = self.act(out)
        return out
```

Modifying Detect

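The original implementation writes directly into tensor memory (in-place slice assignment), which makes the converted model misbehave: it runs, but the results are wrong. See also my engineering notes for yolov5 (ort_yolov5.zh.md) and tiny_yolov4 (ort_tiny_yolov4.zh.md). I therefore modified the Detect module to make it ONNX-compatible, replacing the in-place slice assignments in the inference path with torch.cat.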
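To illustrate the change, here is a minimal NumPy sketch of the per-level box decode. It is not the author's code, and `decode_inplace`, `decode_concat` and `make_grid` here are hypothetical helpers. `decode_inplace` mirrors the original pattern of overwriting slices of `y`; `decode_concat` mirrors the modified version, which builds `xy` and `wh` as new arrays and concatenates them. Numerically the two are identical. The difference only matters when PyTorch traces the in-place slice assignment into an ONNX graph, which, as described above, gave wrong results after conversion.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def make_grid(nx, ny):
    # grid[..., 0] is the column (x) index, grid[..., 1] the row (y) index; shape (1, 1, ny, nx, 2)
    yv, xv = np.meshgrid(np.arange(ny), np.arange(nx), indexing="ij")
    return np.stack((xv, yv), axis=2).reshape(1, 1, ny, nx, 2).astype(np.float32)


def decode_inplace(p, grid, anchor_grid, stride):
    # original style: overwrite slices of y in place
    y = sigmoid(p)
    y[..., 0:2] = (y[..., 0:2] * 2.0 - 0.5 + grid) * stride
    y[..., 2:4] = (y[..., 2:4] * 2.0) ** 2 * anchor_grid
    return y


def decode_concat(p, grid, anchor_grid, stride):
    # export-friendly style: compute xy and wh as new arrays, then concatenate
    y = sigmoid(p)
    xy = (y[..., 0:2] * 2.0 - 0.5 + grid) * stride
    wh = (y[..., 2:4] * 2.0) ** 2 * anchor_grid
    return np.concatenate((xy, wh, y[..., 4:]), axis=-1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    na, ny, nx, no = 3, 4, 5, 6  # 3 anchors, 4x5 feature map, no = 5 + 1 class
    p = rng.standard_normal((1, na, ny, nx, no)).astype(np.float32)
    # first-level YOLOP anchors in pixels, shaped (1, na, 1, 1, 2) for broadcasting
    anchor_grid = np.array([[3, 9], [5, 11], [4, 20]], dtype=np.float32).reshape(1, na, 1, 1, 2)
    a = decode_inplace(p, make_grid(nx, ny), anchor_grid, 8.0)
    b = decode_concat(p, make_grid(nx, ny), anchor_grid, 8.0)
    print(a.shape, np.allclose(a, b))  # (1, 3, 4, 5, 6) True
```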

```python
import torch
import torch.nn as nn


class Detect(nn.Module):
    stride = None  # strides computed during build

    def __init__(self, nc=13, anchors=(), ch=()):  # detection layer
        super(Detect, self).__init__()
        self.nc = nc  # number of classes
        self.no = nc + 5  # number of outputs per anchor
        self.nl = len(anchors)  # number of detection layers 3
        self.na = len(anchors[0]) // 2  # number of anchors 3
        self.grid = [torch.zeros(1)] * self.nl  # init grid
        a = torch.tensor(anchors).float().view(self.nl, -1, 2)  # (nl=3,na=3,2)
        self.register_buffer('anchors', a)  # shape(nl,na,2)
        self.register_buffer('anchor_grid', a.clone().view(self.nl, 1, -1, 1, 1, 2))  # shape(nl=3,1,na=3,1,1,2)
        self.m = nn.ModuleList(nn.Conv2d(x, self.no * self.na, 1) for x in ch)  # output conv
        self.inplace = True  # use in-place ops (e.g. slice assignment); not used in this version

    def forward(self, x):
        z = []  # inference output
        for i in range(self.nl):
            x[i] = self.m[i](x[i])  # conv (bs,na*no,ny,nx)
            bs, _, ny, nx = x[i].shape
            # x(bs,255,20,20) to x(bs,3,20,20,nc+5) (bs,na,ny,nx,no=nc+5=4+1+nc)
            x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()
            if not self.training:  # inference
                # if self.grid[i].shape[2:4] != x[i].shape[2:4] or self.onnx_dynamic:
                #     self.grid[i] = self._make_grid(nx, ny).to(x[i].device)
                self.grid[i] = self._make_grid(nx, ny).to(x[i].device)
                y = x[i].sigmoid()  # (bs,na,ny,nx,no=nc+5=4+1+nc)
                # build xy and wh as new tensors and concatenate, instead of in-place slice assignment
                xy = (y[..., 0:2] * 2. - 0.5 + self.grid[i]) * self.stride[i]  # xy (bs,na,ny,nx,2)
                wh = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i].view(1, self.na, 1, 1, 2)  # wh (bs,na,ny,nx,2)
                y = torch.cat((xy, wh, y[..., 4:]), -1)  # (bs,na,ny,nx,2+2+1+nc=xy+wh+conf+cls_prob)
                z.append(y.view(bs, -1, self.no))  # y (bs,na*ny*nx,no=2+2+1+nc=xy+wh+conf+cls_prob)
        return x if self.training else (torch.cat(z, 1), x)
        # torch.cat(z, 1) (bs,na*ny*nx*nl,no=2+2+1+nc=xy+wh+conf+cls_prob)

    @staticmethod
    def _make_grid(nx=20, ny=20):
        yv, xv = torch.meshgrid([torch.arange(ny), torch.arange(nx)])
        return torch.stack((xv, yv), 2).view((1, 1, ny, nx, 2)).float()
```

Modifying Focus

For YOLOP I did not modify Focus: with newer versions of PyTorch (1.8.0) and onnx (1.8.0), the exported ONNX model had no problems and ran inference correctly.
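For reference, Focus is a space-to-depth slicing step followed by a convolution. The following minimal NumPy sketch covers only the slicing part and assumes YOLOP's Focus uses the same four strided slices as YOLOv5's. `focus_slice` is a hypothetical name, and the trailing convolution is omitted. Each 2x2 pixel neighbourhood is moved into the channel dimension, so a (N, C, H, W) input becomes (N, 4C, H/2, W/2).

```python
import numpy as np


def focus_slice(x):
    """Space-to-depth as in YOLOv5-style Focus (without the trailing conv).

    x: (N, C, H, W) with even H and W -> (N, 4C, H/2, W/2)
    """
    return np.concatenate(
        [x[..., ::2, ::2],    # even rows, even cols
         x[..., 1::2, ::2],   # odd rows, even cols
         x[..., ::2, 1::2],   # even rows, odd cols
         x[..., 1::2, 1::2]],  # odd rows, odd cols
        axis=1,
    )


if __name__ == "__main__":
    x = np.arange(1 * 3 * 4 * 4, dtype=np.float32).reshape(1, 3, 4, 4)
    print(focus_slice(x).shape)  # (1, 12, 2, 2)
```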

Next, modify MCnet so that the model outputs the three result tensors we want.
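As a sanity check on these three outputs, their expected shapes can be computed from the input size. This minimal sketch assumes three detection levels with strides 8, 16 and 32. That is what the layer config gives, since layers 17, 20 and 23 sit at 1/8, 1/16 and 1/32 of the input. It also assumes 3 anchors per level and 6 values per anchor (xywh, obj_conf and one class). Both segmentation heads are upsampled back to full resolution. `yolop_output_shapes` is a hypothetical helper, not part of the YOLOP code.

```python
def yolop_output_shapes(height, width, strides=(8, 16, 32), num_anchors=3, num_classes=1):
    """Expected shapes of (det_out, drive_area_seg, lane_line_seg) for a 1x3xHxW input."""
    num_rows = sum(num_anchors * (height // s) * (width // s) for s in strides)
    det_out = (1, num_rows, 5 + num_classes)  # cx, cy, w, h, obj_conf, cls_conf
    seg = (1, 2, height, width)               # per-pixel background / foreground scores
    return det_out, seg, seg


if __name__ == "__main__":
    for size in (320, 640, 1280):
        print(size, yolop_output_shapes(size, size)[0])
    # 320 (1, 6300, 6)
    # 640 (1, 25200, 6)
    # 1280 (1, 100800, 6)
```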

export_onnx.py

```python
import torch
import torch.nn as nn
from lib.models.common2 import Conv, SPP, Bottleneck, BottleneckCSP, Focus, Concat, Detect, SharpenConv
from torch.nn import Upsample
from lib.utils import check_anchor_order
from lib.utils import initialize_weights
import argparse
import onnx
import onnxruntime as ort
import onnxsim
import math
import cv2

# The lane-line and drivable-area segmentation branches share no information and are not linked to each other
YOLOP = [
    [24, 33, 42],  # Det_out_idx, Da_Segout_idx, LL_Segout_idx
    [-1, Focus, [3, 32, 3]],  # 0
    [-1, Conv, [32, 64, 3, 2]],  # 1
    [-1, BottleneckCSP, [64, 64, 1]],  # 2
    [-1, Conv, [64, 128, 3, 2]],  # 3
    [-1, BottleneckCSP, [128, 128, 3]],  # 4
    [-1, Conv, [128, 256, 3, 2]],  # 5
    [-1, BottleneckCSP, [256, 256, 3]],  # 6
    [-1, Conv, [256, 512, 3, 2]],  # 7
    [-1, SPP, [512, 512, [5, 9, 13]]],  # 8 SPP
    [-1, BottleneckCSP, [512, 512, 1, False]],  # 9
    [-1, Conv, [512, 256, 1, 1]],  # 10
    [-1, Upsample, [None, 2, 'nearest']],  # 11
    [[-1, 6], Concat, [1]],  # 12
    [-1, BottleneckCSP, [512, 256, 1, False]],  # 13
    [-1, Conv, [256, 128, 1, 1]],  # 14
    [-1, Upsample, [None, 2, 'nearest']],  # 15
    [[-1, 4], Concat, [1]],  # 16 #Encoder
    [-1, BottleneckCSP, [256, 128, 1, False]],  # 17
    [-1, Conv, [128, 128, 3, 2]],  # 18
    [[-1, 14], Concat, [1]],  # 19
    [-1, BottleneckCSP, [256, 256, 1, False]],  # 20
    [-1, Conv, [256, 256, 3, 2]],  # 21
    [[-1, 10], Concat, [1]],  # 22
    [-1, BottleneckCSP, [512, 512, 1, False]],  # 23
    [[17, 20, 23], Detect,
     [1, [[3, 9, 5, 11, 4, 20], [7, 18, 6, 39, 12, 31], [19, 50, 38, 81, 68, 157]], [128, 256, 512]]],
    # Detection head 24: from_(features from specific layers), block, nc(num_classes) anchors ch(channels)
    [16, Conv, [256, 128, 3, 1]],  # 25
    [-1, Upsample, [None, 2, 'nearest']],  # 26
    [-1, BottleneckCSP, [128, 64, 1, False]],  # 27
    [-1, Conv, [64, 32, 3, 1]],  # 28
    [-1, Upsample, [None, 2, 'nearest']],  # 29
    [-1, Conv, [32, 16, 3, 1]],  # 30
    [-1, BottleneckCSP, [16, 8, 1, False]],  # 31
    [-1, Upsample, [None, 2, 'nearest']],  # 32
    [-1, Conv, [8, 2, 3, 1]],  # 33 Driving area segmentation head
    [16, Conv, [256, 128, 3, 1]],  # 34
    [-1, Upsample, [None, 2, 'nearest']],  # 35
    [-1, BottleneckCSP, [128, 64, 1, False]],  # 36
    [-1, Conv, [64, 32, 3, 1]],  # 37
    [-1, Upsample, [None, 2, 'nearest']],  # 38
    [-1, Conv, [32, 16, 3, 1]],  # 39
    [-1, BottleneckCSP, [16, 8, 1, False]],  # 40
    [-1, Upsample, [None, 2, 'nearest']],  # 41
    [-1, Conv, [8, 2, 3, 1]]  # 42 Lane line segmentation head
]


class MCnet(nn.Module):
    def __init__(self, block_cfg):
        super(MCnet, self).__init__()
        layers, save = [], []
        self.nc = 1  # traffic or not
        self.detector_index = -1
        self.det_out_idx = block_cfg[0][0]
        self.seg_out_idx = block_cfg[0][1:]
        self.num_anchors = 3
        self.num_outchannel = 5 + self.nc  # dx,dy,dw,dh,obj_conf+cls_conf

        # Build model
        for i, (from_, block, args) in enumerate(block_cfg[1:]):
            block = eval(block) if isinstance(block, str) else block  # eval strings
            if block is Detect:
                self.detector_index = i
            block_ = block(*args)
            block_.index, block_.from_ = i, from_
            layers.append(block_)
            save.extend(x % i for x in ([from_] if isinstance(from_, int) else from_) if x != -1)  # append to savelist
        assert self.detector_index == block_cfg[0][0]

        self.model, self.save = nn.Sequential(*layers), sorted(save)
        self.names = [str(i) for i in range(self.nc)]

        # set stride and anchors for the detector
        Detector = self.model[self.detector_index]  # detector
        if isinstance(Detector, Detect):
            s = 128  # 2x min stride
            # for x in self.forward(torch.zeros(1, 3, s, s)):
            #     print (x.shape)
            with torch.no_grad():
                model_out = self.forward(torch.zeros(1, 3, s, s))
                detects, _, _ = model_out
                Detector.stride = torch.tensor([s / x.shape[-2] for x in detects])  # forward
            # print("stride"+str(Detector.stride ))
            Detector.anchors /= Detector.stride.view(-1, 1, 1)  # Set the anchors for the corresponding scale
            check_anchor_order(Detector)
            self.stride = Detector.stride
            # self._initialize_biases()

        initialize_weights(self)

    def forward(self, x):
        cache = []
        out = []
        det_out = None
        for i, block in enumerate(self.model):
            if block.from_ != -1:
                x = cache[block.from_] if isinstance(block.from_, int) \
                    else [x if j == -1 else cache[j] for j in block.from_]  # calculate concat detect
            x = block(x)
            if i in self.seg_out_idx:  # save drivable-area / lane-line segmentation results
                # m = nn.Sigmoid()
                # out.append(m(x))
                out.append(torch.sigmoid(x))
            if i == self.detector_index:
                # det_out = x
                if self.training:
                    det_out = x
                else:
                    det_out = x[0]  # (torch.cat(z, 1), input_feat) if test
            cache.append(x if block.index in self.save else None)
        return det_out, out[0], out[1]  # det, da, ll
        # (1,na*ny*nx*nl,no=2+2+1+nc=xy+wh+obj_conf+cls_prob), (1,2,h,w) (1,2,h,w)

    def _initialize_biases(self, cf=None):  # initialize biases into Detect(), cf is class frequency
        # https://arxiv.org/abs/1708.02002 section 3.3
        # cf = torch.bincount(torch.tensor(np.concatenate(dataset.labels, 0)[:, 0]).long(), minlength=nc) + 1.
        # m = self.model[-1]  # Detect() module
        m = self.model[self.detector_index]  # Detect() module
        for mi, s in zip(m.m, m.stride):  # from
            b = mi.bias.view(m.na, -1)  # conv.bias(255) to (3,85)
            b[:, 4] += math.log(8 / (640 / s) ** 2)  # obj (8 objects per 640 image)
            b[:, 5:] += math.log(0.6 / (m.nc - 0.99)) if cf is None else torch.log(cf / cf.sum())  # cls
            mi.bias = torch.nn.Parameter(b.view(-1), requires_grad=True)


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument('--height', type=int, default=640)  # height
    parser.add_argument('--width', type=int, default=640)  # width
    args = parser.parse_args()

    do_simplify = True

    device = 'cuda' if torch.cuda.is_available() else 'cpu'
    model = MCnet(YOLOP)
    checkpoint = torch.load('./weights/End-to-end.pth', map_location=device)
    model.load_state_dict(checkpoint['state_dict'])
    model.eval()

    height = args.height
    width = args.width
    print("Load ./weights/End-to-end.pth done!")
    onnx_path = f'./weights/yolop-{height}-{width}.onnx'
    inputs = torch.randn(1, 3, height, width)

    print(f"Converting to {onnx_path}")
    torch.onnx.export(model, inputs, onnx_path,
                      verbose=False, opset_version=12, input_names=['images'],
                      output_names=['det_out', 'drive_area_seg', 'lane_line_seg'])
    print('convert', onnx_path, 'to onnx finish!!!')

    # Checks
    model_onnx = onnx.load(onnx_path)  # load onnx model
    onnx.checker.check_model(model_onnx)  # check onnx model
    print(onnx.helper.printable_graph(model_onnx.graph))  # print

    if do_simplify:
        print(f'simplifying with onnx-simplifier {onnxsim.__version__}...')
        model_onnx, check = onnxsim.simplify(model_onnx, check_n=3)
        assert check, 'assert check failed'
        onnx.save(model_onnx, onnx_path)

    x = inputs.cpu().numpy()
    try:
        sess = ort.InferenceSession(onnx_path)
        for ii in sess.get_inputs():
            print("Input: ", ii)
        for oo in sess.get_outputs():
            print("Output: ", oo)
        print('read onnx using onnxruntime success')
    except Exception as e:
        print('read failed')
        raise e

"""
PYTHONPATH=. python3 ./export_onnx.py --height 640 --width 640
PYTHONPATH=. python3 ./export_onnx.py --height 1280 --width 1280
PYTHONPATH=. python3 ./export_onnx.py --height 320 --width 320
"""
```

Testing with onnxruntime in Python

test_onnx.py

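Here I reimplemented the inference logic. Its results match the original demo.py, but it uses only numpy and OpenCV for pre- and post-processing instead of torchvision's transform module, which makes the Python logic easier to reproduce in C++. To keep the logic simple, I also wrote an equivalent resize_unscale function to replace the original letterbox_for_img. That function is not very engineering-friendly, and I have always felt copyMakeBorder adds little here. resize_unscale preserves the aspect ratio of the original image when resizing; a plain resize that ignores the aspect ratio makes the inference results worse.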
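The arithmetic behind resize_unscale, and behind mapping boxes back to the original image, is easy to check by hand. Take a 1280x720 frame and a 640x640 input. Then r = min(640/720, 640/1280) = 0.5, the resized image is 640x360, and the 280 rows of padding split into dh = 140 at the top (and the same at the bottom). A box predicted on the padded canvas is mapped back by subtracting (dw, dh) and dividing by r. The pure-Python sketch below uses hypothetical helpers `letterbox_params` and `unscale_box`. It follows the Python version's rounding; the C++ version below floors instead.

```python
def letterbox_params(img_h, img_w, target_h=640, target_w=640):
    """Scale ratio and padding as computed by resize_unscale (aspect ratio preserved)."""
    r = min(target_h / img_h, target_w / img_w)
    new_unpad_w = int(round(img_w * r))
    new_unpad_h = int(round(img_h * r))
    dw = (target_w - new_unpad_w) // 2  # left padding
    dh = (target_h - new_unpad_h) // 2  # top padding
    return r, dw, dh, new_unpad_w, new_unpad_h


def unscale_box(box, r, dw, dh):
    """Map an (x1, y1, x2, y2) box from the padded canvas back to the original image."""
    x1, y1, x2, y2 = box
    return ((x1 - dw) / r, (y1 - dh) / r, (x2 - dw) / r, (y2 - dh) / r)


if __name__ == "__main__":
    r, dw, dh, w, h = letterbox_params(720, 1280)
    print(r, dw, dh, w, h)  # 0.5 0 140 640 360
    print(unscale_box((100, 240, 200, 300), r, dw, dh))  # (200.0, 200.0, 400.0, 320.0)
```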

```python
import os
import cv2
import torch
import argparse
import onnxruntime as ort
import numpy as np
from lib.core.general import non_max_suppression


def resize_unscale(img, new_shape=(640, 640), color=114):
    shape = img.shape[:2]  # current shape [height, width]
    if isinstance(new_shape, int):
        new_shape = (new_shape, new_shape)

    canvas = np.zeros((new_shape[0], new_shape[1], 3))
    canvas.fill(color)
    # Scale ratio (new / old) new_shape(h,w)
    r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

    # Compute padding
    new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))  # w,h
    new_unpad_w = new_unpad[0]
    new_unpad_h = new_unpad[1]
    pad_w, pad_h = new_shape[1] - new_unpad_w, new_shape[0] - new_unpad_h  # wh padding

    dw = pad_w // 2  # divide padding into 2 sides
    dh = pad_h // 2

    if shape[::-1] != new_unpad:  # resize
        img = cv2.resize(img, new_unpad, interpolation=cv2.INTER_AREA)

    canvas[dh:dh + new_unpad_h, dw:dw + new_unpad_w, :] = img

    return canvas, r, dw, dh, new_unpad_w, new_unpad_h  # (dw,dh)


def infer_yolop(weight="yolop-640-640.onnx",
                img_path="./inference/images/7dd9ef45-f197db95.jpg"):

    ort.set_default_logger_severity(4)
    onnx_path = f"./weights/{weight}"
    ort_session = ort.InferenceSession(onnx_path)
    print(f"Load {onnx_path} done!")

    outputs_info = ort_session.get_outputs()
    inputs_info = ort_session.get_inputs()

    for ii in inputs_info:
        print("Input: ", ii)
    for oo in outputs_info:
        print("Output: ", oo)

    print("num outputs: ", len(outputs_info))

    save_det_path = "./pictures/detect_onnx.jpg"
    save_da_path = "./pictures/da_onnx.jpg"
    save_ll_path = "./pictures/ll_onnx.jpg"
    save_merge_path = "./pictures/output_onnx.jpg"

    img_bgr = cv2.imread(img_path)
    height, width, _ = img_bgr.shape

    # convert to RGB
    img_rgb = img_bgr[:, :, ::-1].copy()

    # resize & normalize; take the input size from the model (e.g. 320, 640 or 1280)
    input_h, input_w = inputs_info[0].shape[2:4]
    canvas, r, dw, dh, new_unpad_w, new_unpad_h = resize_unscale(img_rgb, (input_h, input_w))

    img = canvas.copy().astype(np.float32)  # (H,W,3) RGB
    img /= 255.0
    # normalize per channel while the layout is still HWC (channel is the last axis)
    img[:, :, 0] -= 0.485
    img[:, :, 1] -= 0.456
    img[:, :, 2] -= 0.406
    img[:, :, 0] /= 0.229
    img[:, :, 1] /= 0.224
    img[:, :, 2] /= 0.225
    img = np.ascontiguousarray(img.transpose(2, 0, 1))  # (3,H,W)
    img = np.expand_dims(img, 0)  # (1,3,H,W)

    # inference: (1,n,6) (1,2,H,W) (1,2,H,W)
    det_out, da_seg_out, ll_seg_out = ort_session.run(
        ['det_out', 'drive_area_seg', 'lane_line_seg'],
        input_feed={"images": img}
    )

    det_out = torch.from_numpy(det_out).float()
    boxes = non_max_suppression(det_out)[0]  # [n,6] [x1,y1,x2,y2,conf,cls]
    boxes = boxes.cpu().numpy().astype(np.float32)

    if boxes.shape[0] == 0:
        print("no bounding boxes detected.")
        return

    # scale coords to original size.
    boxes[:, 0] -= dw
    boxes[:, 1] -= dh
    boxes[:, 2] -= dw
    boxes[:, 3] -= dh
    boxes[:, :4] /= r

    print(f"detect {boxes.shape[0]} bounding boxes.")

    img_det = img_rgb[:, :, ::-1].copy()
    for i in range(boxes.shape[0]):
        x1, y1, x2, y2, conf, label = boxes[i]
        x1, y1, x2, y2, label = int(x1), int(y1), int(x2), int(y2), int(label)
        img_det = cv2.rectangle(img_det, (x1, y1), (x2, y2), (0, 255, 0), 2, 2)

    cv2.imwrite(save_det_path, img_det)

    # select da & ll segment area.
    da_seg_out = da_seg_out[:, :, dh:dh + new_unpad_h, dw:dw + new_unpad_w]
    ll_seg_out = ll_seg_out[:, :, dh:dh + new_unpad_h, dw:dw + new_unpad_w]

    da_seg_mask = np.argmax(da_seg_out, axis=1)[0]  # (?,?) (0|1)
    ll_seg_mask = np.argmax(ll_seg_out, axis=1)[0]  # (?,?) (0|1)
    print(da_seg_mask.shape)
    print(ll_seg_mask.shape)

    color_area = np.zeros((new_unpad_h, new_unpad_w, 3), dtype=np.uint8)
    color_area[da_seg_mask == 1] = [0, 255, 0]
    color_area[ll_seg_mask == 1] = [255, 0, 0]
    color_seg = color_area

    # convert to BGR
    color_seg = color_seg[..., ::-1]
    color_mask = np.mean(color_seg, 2)
    img_merge = canvas[dh:dh + new_unpad_h, dw:dw + new_unpad_w, :]
    img_merge = img_merge[:, :, ::-1]

    # merge: resize to original size
    img_merge[color_mask != 0] = \
        img_merge[color_mask != 0] * 0.5 + color_seg[color_mask != 0] * 0.5
    img_merge = img_merge.astype(np.uint8)
    img_merge = cv2.resize(img_merge, (width, height),
                           interpolation=cv2.INTER_LINEAR)
    for i in range(boxes.shape[0]):
        x1, y1, x2, y2, conf, label = boxes[i]
        x1, y1, x2, y2, label = int(x1), int(y1), int(x2), int(y2), int(label)
        img_merge = cv2.rectangle(img_merge, (x1, y1), (x2, y2), (0, 255, 0), 2, 2)

    # da: resize to original size
    da_seg_mask = da_seg_mask * 255
    da_seg_mask = da_seg_mask.astype(np.uint8)
    da_seg_mask = cv2.resize(da_seg_mask, (width, height),
                             interpolation=cv2.INTER_LINEAR)

    # ll: resize to original size
    ll_seg_mask = ll_seg_mask * 255
    ll_seg_mask = ll_seg_mask.astype(np.uint8)
    ll_seg_mask = cv2.resize(ll_seg_mask, (width, height),
                             interpolation=cv2.INTER_LINEAR)

    cv2.imwrite(save_merge_path, img_merge)
    cv2.imwrite(save_da_path, da_seg_mask)
    cv2.imwrite(save_ll_path, ll_seg_mask)

    print("detect done.")


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument('--weight', type=str, default="yolop-640-640.onnx")
    parser.add_argument('--img', type=str, default="./inference/images/9aa94005-ff1d4c9a.jpg")
    args = parser.parse_args()

    infer_yolop(weight=args.weight, img_path=args.img)

"""
PYTHONPATH=. python3 ./test_onnx.py --weight yolop-640-640.onnx --img ./inference/images/9aa94005-ff1d4c9a.jpg
"""
```

The test results are as follows:

C++ ONNXRuntime inference implementation

yolop.cpp

```cpp
//
// Created by DefTruth on 2021/9/14.
//

#include "yolop.h"
#include "ort/core/ort_utils.h"

using ortcv::YOLOP;

void YOLOP::resize_unscale(const cv::Mat &mat, cv::Mat &mat_rs,
                           int target_height, int target_width,
                           YOLOPScaleParams &scale_params)
{
  if (mat.empty()) return;
  int img_height = static_cast<int>(mat.rows);
  int img_width = static_cast<int>(mat.cols);

  mat_rs = cv::Mat(target_height, target_width, CV_8UC3,
                   cv::Scalar(114, 114, 114));
  // scale ratio (new / old) new_shape(h,w)
  float w_r = (float) target_width / (float) img_width;
  float h_r = (float) target_height / (float) img_height;
  float r = std::fmin(w_r, h_r);
  // compute padding
  int new_unpad_w = static_cast<int>((float) img_width * r); // floor (the Python version rounds)
  int new_unpad_h = static_cast<int>((float) img_height * r); // floor
  int pad_w = target_width - new_unpad_w; // >=0
  int pad_h = target_height - new_unpad_h; // >=0

  int dw = pad_w / 2;
  int dh = pad_h / 2;

  // resize with unscaling
  cv::Mat new_unpad_mat = mat.clone();
  cv::resize(new_unpad_mat, new_unpad_mat, cv::Size(new_unpad_w, new_unpad_h));
  new_unpad_mat.copyTo(mat_rs(cv::Rect(dw, dh, new_unpad_w, new_unpad_h)));

  // record scale params.
  scale_params.r = r;
  scale_params.dw = dw;
  scale_params.dh = dh;
  scale_params.new_unpad_w = new_unpad_w;
  scale_params.new_unpad_h = new_unpad_h;
  scale_params.flag = true;
}

Ort::Value YOLOP::transform(const cv::Mat &mat_rs)
{
  cv::Mat canva = mat_rs.clone();
  cv::cvtColor(canva, canva, cv::COLOR_BGR2RGB);
  // (1,3,640,640) 1xCXHXW
  ortcv::utils::transform::normalize_inplace(canva, mean_vals, scale_vals); // float32
  return ortcv::utils::transform::create_tensor(
      canva, input_node_dims, memory_info_handler,
      input_values_handler, ortcv::utils::transform::CHW);
}

void YOLOP::detect(const cv::Mat &mat,
                   std::vector<types::Boxf> &detected_boxes,
                   types::SegmentContent &da_seg_content,
                   types::SegmentContent &ll_seg_content,
                   float score_threshold, float iou_threshold,
                   unsigned int topk, unsigned int nms_type)
{
  if (mat.empty()) return;
  float img_height = static_cast<float>(mat.rows);
  float img_width = static_cast<float>(mat.cols);
  const int target_height = input_node_dims.at(2);
  const int target_width = input_node_dims.at(3);

  // resize & unscale
  cv::Mat mat_rs;
  YOLOPScaleParams scale_params;
  this->resize_unscale(mat, mat_rs, target_height, target_width, scale_params);
  if ((!scale_params.flag) || mat_rs.empty()) return;

  // 1. make input tensor
  Ort::Value input_tensor = this->transform(mat_rs);
  // 2. inference scores & boxes.
  auto output_tensors = ort_session->Run(
      Ort::RunOptions{nullptr}, input_node_names.data(),
      &input_tensor, 1, output_node_names.data(), num_outputs
  ); // det_out, drive_area_seg, lane_line_seg
  // 3. rescale & fetch da|ll seg.
  std::vector<types::Boxf> bbox_collection;
  this->generate_bboxes_da_ll(scale_params, output_tensors, bbox_collection,
                              da_seg_content, ll_seg_content, score_threshold,
                              img_height, img_width);
  // 4. hard|blend nms with topk.
  this->nms(bbox_collection, detected_boxes, iou_threshold, topk, nms_type);
}

void YOLOP::generate_bboxes_da_ll(const YOLOPScaleParams &scale_params,
                                  std::vector<Ort::Value> &output_tensors,
                                  std::vector<types::Boxf> &bbox_collection,
                                  types::SegmentContent &da_seg_content,
                                  types::SegmentContent &ll_seg_content,
                                  float score_threshold, float img_height,
                                  float img_width)
{
  Ort::Value &det_out = output_tensors.at(0); // (1,n,6=5+1=cxcy+cwch+obj_conf+cls_conf)
  Ort::Value &da_seg_out = output_tensors.at(1); // (1,2,640,640)
  Ort::Value &ll_seg_out = output_tensors.at(2); // (1,2,640,640)
  auto det_dims = output_node_dims.at(0); // (1,n,6)
  const unsigned int num_anchors = det_dims.at(1); // n = ?

  float r = scale_params.r;
  int dw = scale_params.dw;
  int dh = scale_params.dh;
  int new_unpad_w = scale_params.new_unpad_w;
  int new_unpad_h = scale_params.new_unpad_h;

  // generate bounding boxes.
  bbox_collection.clear();
  unsigned int count = 0;
  for (unsigned int i = 0; i < num_anchors; ++i)
  {
    float obj_conf = det_out.At<float>({0, i, 4});
    if (obj_conf < score_threshold) continue; // filter first.

    unsigned int label = 1; // 1 class only
    float cls_conf = det_out.At<float>({0, i, 5});
    float conf = obj_conf * cls_conf; // cls_conf (0.,1.)
    if (conf < score_threshold) continue; // filter

    float cx = det_out.At<float>({0, i, 0});
    float cy = det_out.At<float>({0, i, 1});
    float w = det_out.At<float>({0, i, 2});
    float h = det_out.At<float>({0, i, 3});

    types::Boxf box;
    // de-padding & rescaling
    box.x1 = ((cx - w / 2.f) - (float) dw) / r;
    box.y1 = ((cy - h / 2.f) - (float) dh) / r;
    box.x2 = ((cx + w / 2.f) - (float) dw) / r;
    box.y2 = ((cy + h / 2.f) - (float) dh) / r;
    box.score = conf;
    box.label = label;
    box.label_text = "traffic car";
    box.flag = true;
    bbox_collection.push_back(box);

    count += 1; // limit boxes for nms.
    if (count > max_nms)
      break;
  }
#if LITEORT_DEBUG
  std::cout << "detected num_anchors: " << num_anchors << "\n";
  std::cout << "generate_bboxes num: " << bbox_collection.size() << "\n";
#endif

  // generate da && ll seg.
  da_seg_content.names_map.clear();
  da_seg_content.class_mat = cv::Mat(new_unpad_h, new_unpad_w, CV_8UC1, cv::Scalar(0));
  da_seg_content.color_mat = cv::Mat(new_unpad_h, new_unpad_w, CV_8UC3, cv::Scalar(0, 0, 0));
  ll_seg_content.names_map.clear();
  ll_seg_content.class_mat = cv::Mat(new_unpad_h, new_unpad_w, CV_8UC1, cv::Scalar(0));
  ll_seg_content.color_mat = cv::Mat(new_unpad_h, new_unpad_w, CV_8UC3, cv::Scalar(0, 0, 0));

  for (int i = dh; i < dh + new_unpad_h; ++i)
  {
    // row ptr.
    uchar *da_p_class = da_seg_content.class_mat.ptr<uchar>(i - dh);
    uchar *ll_p_class = ll_seg_content.class_mat.ptr<uchar>(i - dh);
    cv::Vec3b *da_p_color = da_seg_content.color_mat.ptr<cv::Vec3b>(i - dh);
    cv::Vec3b *ll_p_color = ll_seg_content.color_mat.ptr<cv::Vec3b>(i - dh);

    for (int j = dw; j < dw + new_unpad_w; ++j)
    {
      // argmax
      float da_bg_prob = da_seg_out.At<float>({0, 0, i, j});
      float da_fg_prob = da_seg_out.At<float>({0, 1, i, j});
      float ll_bg_prob = ll_seg_out.At<float>({0, 0, i, j});
      float ll_fg_prob = ll_seg_out.At<float>({0, 1, i, j});
      unsigned int da_label = da_bg_prob < da_fg_prob ? 1 : 0;
      unsigned int ll_label = ll_bg_prob < ll_fg_prob ? 1 : 0;

      if (da_label == 1)
      {
        // assign label for pixel(i,j)
        da_p_class[j - dw] = 1 * 255; // 255 indicate drivable area, for post resize
        // assign color for detected class at pixel(i,j).
        da_p_color[j - dw][0] = 0;
        da_p_color[j - dw][1] = 255; // green
        da_p_color[j - dw][2] = 0;
        // assign names map
        da_seg_content.names_map[255] = "drivable area";
      }

      if (ll_label == 1)
      {
        // assign label for pixel(i,j)
        ll_p_class[j - dw] = 1 * 255; // 255 indicate lane line, for post resize
        // assign color for detected class at pixel(i,j).
        ll_p_color[j - dw][0] = 0;
        ll_p_color[j - dw][1] = 0;
        ll_p_color[j - dw][2] = 255; // red
        // assign names map
        ll_seg_content.names_map[255] = "lane line";
      }
    }
  }
  // resize to original size.
  const unsigned int h = static_cast<unsigned int>(img_height);
  const unsigned int w = static_cast<unsigned int>(img_width);
  // da_seg_mask 255 or 0
  cv::resize(da_seg_content.class_mat, da_seg_content.class_mat,
             cv::Size(w, h), cv::INTER_LINEAR);
  cv::resize(da_seg_content.color_mat, da_seg_content.color_mat,
             cv::Size(w, h), cv::INTER_LINEAR);
  // ll_seg_mask 255 or 0
  cv::resize(ll_seg_content.class_mat, ll_seg_content.class_mat,
             cv::Size(w, h), cv::INTER_LINEAR);
  cv::resize(ll_seg_content.color_mat, ll_seg_content.color_mat,
             cv::Size(w, h), cv::INTER_LINEAR);

  da_seg_content.flag = true;
  ll_seg_content.flag = true;
}

void YOLOP::nms(std::vector<types::Boxf> &input, std::vector<types::Boxf> &output,
                float iou_threshold, unsigned int topk, unsigned int nms_type)
{
  if (nms_type == NMS::BLEND) ortcv::utils::blending_nms(input, output, iou_threshold, topk);
  else if (nms_type == NMS::OFFSET) ortcv::utils::offset_nms(input, output, iou_threshold, topk);
  else ortcv::utils::hard_nms(input, output, iou_threshold, topk);
}
```

See yolop-cpp-demo for the test cases.
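The nms step delegates to ortcv::utils::hard_nms, blending_nms or offset_nms, whose code is not shown in this article. For orientation only, here is a minimal Python sketch of plain greedy (hard) NMS with a top-k cap, the fallback branch. This is the textbook algorithm, not the lite.ai.toolkit implementation. `iou` and `hard_nms` are hypothetical names, the default thresholds are arbitrary, and boxes are `(x1, y1, x2, y2, score)` tuples.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2, ...) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def hard_nms(boxes, iou_threshold=0.45, topk=100):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it too much, repeat."""
    remaining = sorted(boxes, key=lambda b: b[4], reverse=True)
    kept = []
    while remaining and len(kept) < topk:
        best = remaining.pop(0)
        kept.append(best)
        remaining = [b for b in remaining if iou(best, b) <= iou_threshold]
    return kept


if __name__ == "__main__":
    boxes = [
        (10, 10, 50, 50, 0.9),
        (12, 12, 52, 52, 0.8),      # IoU ~0.82 with the first box -> suppressed
        (100, 100, 140, 140, 0.7),  # separate object -> kept
    ]
    print(hard_nms(boxes, iou_threshold=0.5))
    # [(10, 10, 50, 50, 0.9), (100, 100, 140, 140, 0.7)]
```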

Latest update: the PR has been merged into the official YOLOP repository, so the converted ONNX files can now be downloaded directly from YOLOP.

