I. Environment preparation and installation

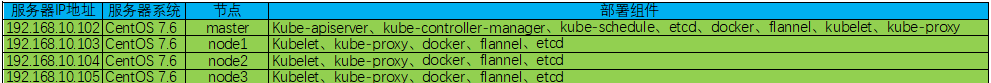

Server environment preparation:

yum -y install epel-release yum-utils device-mapper-persistent-data lvm2 wget
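Two of the repo files below, CentOS-Base-7.repo and docker-ce.repo, contain yum variables such as `$releasever` and `$basearch`. These are meant to be expanded by yum, not by the shell. With an unquoted heredoc delimiter (`<< EOF`), the shell substitutes them first. Because they are not shell variables, they become empty strings, which produces broken URLs like `.../centos/7//stable`. The sketch below shows the difference in a throwaway directory; the file names are illustrative only.

```shell
#!/usr/bin/env bash
# Demonstrates unquoted vs. quoted heredoc delimiters.
# Writes only to a temporary directory; nothing under /etc is touched.
set -eo pipefail

demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT

unset basearch   # yum variables are not shell variables

# Unquoted delimiter: the shell replaces $basearch (empty) before writing the file.
cat > "$demo_dir/unquoted.repo" << EOF
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/stable
EOF

# Quoted delimiter: the text is written verbatim, so yum can expand $basearch later.
cat > "$demo_dir/quoted.repo" << 'EOF'
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/stable
EOF

echo "unquoted: $(cat "$demo_dir/unquoted.repo")"
echo "quoted:   $(cat "$demo_dir/quoted.repo")"
```

For this reason, those two files should be written with a quoted delimiter (`<< 'EOF'`). kubernetes.repo and google-cloud-sdk.repo contain no `$` variables, so either form works for them.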

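#yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Prepare the required yum repositories. Configure them on the master, then run the command below to copy them to the other 4 nodes (after the repo files further down have been created).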

[root@localhost yum.repos.d]# for i in {103..106};do scp CentOS-Base-7.repo kubernetes.repo docker-ce.repo root@192.168.10.$i:/etc/yum.repos.d/;done

CentOS yum repository:

cat > /etc/yum.repos.d/CentOS-Base-7.repo << 'EOF'

[base]

name=CentOS-$releasever - Base - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#released updates

[updates]

name=CentOS-$releasever - Updates - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful

[extras]

name=CentOS-$releasever - Extras - mirrors.aliyun.com

baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that extend functionality of existing packages

[centosplus]

name=CentOS-$releasever - Plus - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/centosplus/$basearch/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#contrib - packages by Centos Users

[contrib]

name=CentOS-$releasever - Contrib - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/contrib/$basearch/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

EOF

Kubernetes yum repository (Aliyun mirror):

cat > /etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

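Alternatively, use the Kubernetes repository from Google. This writes the same kubernetes.repo file, so use one source or the other: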

cat > /etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

Docker CE yum repository (Aliyun mirror):

cat > /etc/yum.repos.d/docker-ce.repo << 'EOF'

[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-debuginfo]

name=Docker CE Stable - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/stable

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-source]

name=Docker CE Stable - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/stable

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-edge]

name=Docker CE Edge - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/edge

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-edge-debuginfo]

name=Docker CE Edge - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/edge

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-edge-source]

name=Docker CE Edge - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/edge

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test]

name=Docker CE Test - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-debuginfo]

name=Docker CE Test - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-source]

name=Docker CE Test - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly]

name=Docker CE Nightly - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-debuginfo]

name=Docker CE Nightly - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-source]

name=Docker CE Nightly - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

EOF

Google Cloud SDK yum repository:

tee -a /etc/yum.repos.d/google-cloud-sdk.repo << EOM

[google-cloud-sdk]

name=Google Cloud SDK

baseurl=https://packages.cloud.google.com/yum/repos/cloud-sdk-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOM

Build the yum metadata cache:

yum clean all

yum makecache fast

Install etcd and Kubernetes with yum:

yum -y install etcd kubernetes google-cloud-sdk

To install the latest Docker release:

curl -fsSL https://get.docker.com/ | sh

Note: the Docker version must be one that your Kubernetes release supports.

Create the key directory for Kubernetes and etcd:

mkdir -p /wdata/kubernetes/ssl

On the master, create an SSH key for passwordless login. Run ssh-keygen and press Enter at every prompt:

[root@localhost ~]# ssh-keygen

[root@localhost ~]# for i in {103..106};do ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.10.$i;done

II. Start the services
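Sections II to IV apply one `systemctl` verb (start, enable, status) to the same two lists of units: seven units on the master and four on the workers. The sketch below keeps those lists in one place. It assumes the unit names used in this guide. `manage_units` and the `DRY_RUN` switch are illustrative helpers, not part of systemd.

```shell
#!/usr/bin/env bash
# Sketch: apply one systemctl verb to the units this guide runs on the
# master or on the worker nodes (unit lists taken from sections II-IV).
set -eo pipefail

MASTER_UNITS=(etcd docker kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy)
WORKER_UNITS=(etcd docker kubelet kube-proxy)

# manage_units VERB ROLE   (ROLE: master | worker)
# With DRY_RUN=1 the commands are printed instead of executed.
manage_units() {
  local verb=$1 role=$2 unit rc=0
  local -a units
  case "$role" in
    master) units=("${MASTER_UNITS[@]}") ;;
    worker) units=("${WORKER_UNITS[@]}") ;;
    *) echo "unknown role: $role" >&2; return 1 ;;
  esac
  for unit in "${units[@]}"; do
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
      echo "systemctl $verb $unit"
    else
      # Keep going if one unit fails (e.g. "status" on a stopped unit),
      # but remember the failure for the return code.
      systemctl "$verb" "$unit" || rc=$?
    fi
  done
  return "$rc"
}
```

Typical usage is `manage_units start master` on the master and `manage_units enable worker` on each worker. `DRY_RUN=1 manage_units status worker` only prints the commands. If a unit fails, the loop continues, but the function still returns non-zero.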

On the master node, run:

systemctl start etcd

systemctl start docker

systemctl start kube-apiserver

systemctl start kube-controller-manager

systemctl start kube-scheduler

systemctl start kubelet

systemctl start kube-proxy

On all worker (slave) nodes, run:

systemctl start etcd

systemctl start docker

systemctl start kubelet

systemctl start kube-proxy

III. Enable the services at boot

On the master node:

systemctl enable etcd

systemctl enable docker

systemctl enable kube-apiserver

systemctl enable kube-controller-manager

systemctl enable kube-scheduler

systemctl enable kubelet

systemctl enable kube-proxy

On all worker nodes:

systemctl enable etcd

systemctl enable docker

systemctl enable kubelet

systemctl enable kube-proxy

IV. Check service status

On the master node:

systemctl status etcd

systemctl status docker

systemctl status kube-apiserver

systemctl status kube-controller-manager

systemctl status kube-scheduler

systemctl status kubelet

systemctl status kube-proxy

On all worker nodes:

systemctl status etcd

systemctl status docker

systemctl status kubelet

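systemctl status kube-proxy

V. On all nodes, edit /lib/systemd/system/docker.service and add the following line above ExecStart=... . Docker 1.13 and later set the iptables FORWARD chain policy to DROP, which can block forwarded pod traffic; this rule accepts it again.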

[root@localhost ~]# vim /lib/systemd/system/docker.service

ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT

Reload systemd and restart Docker:

[root@localhost ~]# systemctl daemon-reload && systemctl restart docker && systemctl status docker

Alternatively, edit the file on the master and distribute it to the other nodes:

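for i in {103..106};do scp /lib/systemd/system/docker.service root@192.168.10.$i:/lib/systemd/system/ && ssh root@192.168.10.$i 'systemctl daemon-reload && systemctl restart docker';done

VI. Set the Kubernetes kernel parameters on all nodes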

cat > /etc/sysctl.d/k8s.conf <<EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

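Load the br_netfilter module first, because the net.bridge.* keys exist only while it is loaded. Then apply the settings:

[root@localhost ~]# modprobe br_netfilter

[root@localhost ~]# sysctl -p /etc/sysctl.d/k8s.conf

VII. Install the CFSSL tools on the master

CFSSL is used to create the TLS certificates.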

[root@localhost ~]# export CFSSL_URL="https://pkg.cfssl.org/R1.2"

[root@localhost ~]# wget "${CFSSL_URL}/cfssl_linux-amd64" -O /usr/local/bin/cfssl

[root@localhost ~]# wget "${CFSSL_URL}/cfssljson_linux-amd64" -O /usr/local/bin/cfssljson

[root@localhost ~]# wget "${CFSSL_URL}/cfssl-certinfo_linux-amd64" -O /usr/local/bin/cfssl-certinfo

[root@localhost ~]# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

[root@localhost ~]# export PATH=/usr/local/bin:$PATH

VIII. Disable SELinux

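sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

This change takes effect after a reboot. To switch SELinux to permissive mode right away, also run:

setenforce 0

IX. Edit the system hosts file and add the following 5 lines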

[root@localhost ~]# vim /etc/hosts

192.168.10.102 master

192.168.10.103 node1

192.168.10.104 node2

192.168.10.105 node3

192.168.10.106 node4

Or:

cat >> /etc/hosts <<EOF

192.168.10.102 master

192.168.10.103 node1

192.168.10.104 node2

192.168.10.105 node3

192.168.10.106 node4

EOF

X. Create the cluster CA and certificates (on the master node)

Configure the CA. All of the following steps are done in the /wdata/kubernetes/ssl directory:

[root@master ~]# cd /wdata/kubernetes/ssl

[root@master ssl]# cfssl print-defaults config > config.json

[root@master ssl]# cfssl print-defaults csr > csr.json

Based on the format of config.json, create the following ca-config.json file:

# Expiry is set to 87600h (10 years)

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

Create the CA certificate signing request, ca-csr.json:

cat >ca-csr.json<<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

],

"ca": {

"expiry": "87600h"

}

}

EOF

Generate the CA certificate and private key:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

ls ca*

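ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem

Create the Kubernetes certificate signing request file kubernetes-csr.json, replacing the IPs and host names with your own. If pods will reach the API server through the in-cluster kubernetes Service, the hosts list also needs the first IP of --service-cluster-ip-range (10.254.0.1 here) and the names kubernetes, kubernetes.default, kubernetes.default.svc and kubernetes.default.svc.cluster.local.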


cat > kubernetes-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.10.102",

"192.168.10.103",

"192.168.10.104",

"192.168.10.105",

"192.168.10.106",

"master",

"node1",

"node2",

"node3",

"node4"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Generate the kubernetes certificate and private key:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

ls kubernetes*

kubernetes.csr  kubernetes-csr.json  kubernetes-key.pem  kubernetes.pem

Create the admin certificate signing request file admin-csr.json:

cat >admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

Generate the admin certificate and key:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

ls admin*

admin.csr  admin-csr.json  admin-key.pem  admin.pem

Create the kube-proxy certificate signing request file kube-proxy-csr.json:

cat > kube-proxy-csr.json<<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Generate the kube-proxy client certificate and key:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

ls kube-proxy*

kube-proxy.csr  kube-proxy-csr.json  kube-proxy-key.pem  kube-proxy.pem

Distribute the certificates: copy the generated certificate and key files (the .pem files) to /etc/kubernetes/ssl on every machine.

mkdir -p /etc/kubernetes/ssl

cp /wdata/kubernetes/ssl/*.pem /etc/kubernetes/ssl

cd /etc/kubernetes/ssl

for i in {103..106};do ssh 192.168.10.$i 'mkdir -p /etc/kubernetes/ssl' && scp *.pem 192.168.10.$i:/etc/kubernetes/ssl/;done

Create the kubectl kubeconfig file:

export KUBE_APISERVER="https://192.168.10.102:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=false --server=${KUBE_APISERVER}

# Set the client authentication parameters

kubectl config set-credentials admin --client-certificate=/etc/kubernetes/ssl/admin.pem --embed-certs=false --client-key=/etc/kubernetes/ssl/admin-key.pem

# Set the context parameters

kubectl config set-context kubernetes --cluster=kubernetes --user=admin

# Set the default context

kubectl config use-context kubernetes

Create the TLS Bootstrapping Token:

export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

cat > token.csv << EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
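Each line of token.csv has the form `token,user,uid,"group"`. The `head | od | tr` pipeline produces 32 lowercase hex characters from 16 random bytes. The sketch below writes the same kind of file into a scratch directory so its format can be checked. `make_token_csv` is a hypothetical helper, and the sketch deliberately leaves the real BOOTSTRAP_TOKEN variable alone.

```shell
#!/usr/bin/env bash
# Sketch: generate a bootstrap token file in a scratch directory and show it.
set -eo pipefail

# make_token_csv DIR -> writes DIR/token.csv and prints the token
make_token_csv() {
  local dir=$1 token
  # 16 random bytes -> od prints four 8-digit hex words -> spaces removed
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$dir/token.csv"
  echo "$token"
}

scratch=$(mktemp -d)
trap 'rm -rf "$scratch"' EXIT

demo_token=$(make_token_csv "$scratch")
echo "token: $demo_token"
cat "$scratch/token.csv"
```

The token in the real token.csv must match the one passed to `kubectl config set-credentials kubelet-bootstrap --token=...`. Generate BOOTSTRAP_TOKEN once and use it for both in the same shell session.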

cp token.csv /etc/kubernetes/

Create the kubelet bootstrapping kubeconfig file:

cd /etc/kubernetes

export KUBE_APISERVER="https://192.168.10.102:6443"

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=${KUBE_APISERVER} --kubeconfig=bootstrap.kubeconfig

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=bootstrap.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Create the kube-proxy kubeconfig file:

export KUBE_APISERVER="https://192.168.10.102:6443"

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=${KUBE_APISERVER} --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Distribute the two kubeconfig files to /etc/kubernetes/ on every node:

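for i in {103..106};do scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.10.$i:/etc/kubernetes/;done

That completes the certificates and keys. Many readers will find the number of keys and certificates confusing at this point, so here is a summary of what each one is for.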

The generated certificate and key files are:

ca-key.pem

ca.pem

kubernetes-key.pem

kubernetes.pem

kube-proxy.pem

kube-proxy-key.pem

admin.pem

admin-key.pem

The components and the files each one uses:

etcd: ca.pem, kubernetes-key.pem, kubernetes.pem;

kube-apiserver: ca.pem, kubernetes-key.pem, kubernetes.pem;

kubelet: ca.pem;

kube-proxy: ca.pem, kube-proxy-key.pem, kube-proxy.pem;

kubectl: ca.pem, admin-key.pem, admin.pem;

kube-controller-manager: ca-key.pem, ca.pem
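The sketch below checks whether a node actually received every file listed above, for example after the `scp` loop earlier in this section. It assumes the /etc/kubernetes/ssl layout used in this guide. `check_pems` is an illustrative helper.

```shell
#!/usr/bin/env bash
# Sketch: report which certificate/key files used in this guide are missing or empty.
set -eo pipefail

REQUIRED_PEMS=(
  ca.pem ca-key.pem
  kubernetes.pem kubernetes-key.pem
  kube-proxy.pem kube-proxy-key.pem
  admin.pem admin-key.pem
)

# check_pems [DIR] -> prints each missing/empty file, returns 1 if any were found
check_pems() {
  local dir=${1:-/etc/kubernetes/ssl} f missing=0
  for f in "${REQUIRED_PEMS[@]}"; do
    if [[ ! -s "$dir/$f" ]]; then
      echo "missing or empty: $dir/$f"
      missing=1
    fi
  done
  return "$missing"
}
```

Run `check_pems` on each node; it defaults to /etc/kubernetes/ssl. Worker nodes strictly need fewer files (kubelet uses only ca.pem). The distribution loop copies all of them, though, so checking the full list is the simpler test.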

With this summary, the purpose of each file should be clear. Next, we install the etcd cluster.

XI. Deploy etcd on all nodes

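Kubernetes stores all of its data in etcd. Next we build a five-node etcd cluster on master and node1 to node4, the members listed in etcd.conf below. We already created plenty of TLS certificates, so etcd simply reuses the kubernetes certificate. Run the following on all nodes.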

Since etcd was already installed with yum above, steps 1 and 2 below can be skipped.

1. Download the etcd release binaries

wget https://github.com/coreos/etcd/releases/download/v3.1.5/etcd-v3.1.5-linux-amd64.tar.gz

tar -xvf etcd-v3.1.5-linux-amd64.tar.gz

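mv etcd-v3.1.5-linux-amd64/etcd* /usr/local/bin

2. Update the etcd.service file

The unit below runs /usr/bin/etcd, the path used by the yum package. If you installed from the tarball in step 1, point ExecStart at /usr/local/bin/etcd instead.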

[root@master ~]# vim /lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

EnvironmentFile=-/etc/etcd/etcd.conf

User=etcd

# set GOMAXPROCS to number of processors

#ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /usr/bin/etcd --name=\"${ETCD_NAME}\" --data-dir=\"${ETCD_DATA_DIR}\" --listen-client-urls=\"${ETCD_LISTEN_CLIENT_URLS}\""

ExecStart=/usr/bin/etcd \
  --name=${ETCD_NAME} \
  --data-dir=${ETCD_DATA_DIR} \
  --listen-client-urls=${ETCD_LISTEN_CLIENT_URLS} \
  --listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
  --advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
  --initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
  --initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
  --initial-cluster-state=${ETCD_INITIAL_CLUSTER_STATE} \
  --initial-cluster=${ETCD_INITIAL_CLUSTER}

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Parameter notes (these also apply to the later sections):

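name: the member's name

data-dir: the member's data directory

listen-peer-urls: the URL(s) this member listens on for traffic from the other members

listen-client-urls: the address(es) serving clients, e.g. http://ip:2379,http://127.0.0.1:2379; clients connect here to talk to etcd

initial-advertise-peer-urls: this member's peer URL(s), advertised to the other members of the cluster

initial-cluster: all members of the cluster, in the form node1=http://ip1:2380,node2=http://ip2:2380,… . Note: node1 is the name set by that member's --name, and ip1:2380 is the value of its --initial-advertise-peer-urls

initial-cluster-state: new when creating a new cluster; existing when joining a cluster that already exists

initial-cluster-token: the token used to bootstrap the cluster; keep it unique per cluster. That way, if you recreate a cluster, even with exactly the same configuration, new cluster and member UUIDs are generated. Otherwise several clusters can conflict with each other and cause unpredictable errors

advertise-client-urls: this member's client URL(s), advertised to the other members of the cluster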

Note: etcd's data directory is /var/lib/etcd. Create this directory before starting the service. Otherwise startup fails with "Failed at step CHDIR spawning /usr/bin/etcd: No such file or directory".
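The CHDIR error above and the stray-whitespace problem listed under troubleshooting below can both be caught before `systemctl start`. The preflight sketch below assumes a unit file laid out like the one in step 2. It relies on systemd treating a backslash as a line continuation only when it is the very last character on the line. `check_unit` is an illustrative helper, not a systemd tool; running the official `systemd-analyze verify` as well is worthwhile.

```shell
#!/usr/bin/env bash
# Sketch: preflight checks for an etcd unit file before starting the service.
#  1. the WorkingDirectory= it names must exist (otherwise "Failed at step CHDIR")
#  2. a continuation backslash must be the last character on its line
set -eo pipefail

# check_unit FILE -> prints one line per problem, returns 1 if any were found
check_unit() {
  local unit=$1 rc=0 workdir
  if [[ ! -r "$unit" ]]; then
    echo "cannot read $unit"
    return 1
  fi
  # Use the last WorkingDirectory= line if there are several.
  workdir=$(sed -n 's/^WorkingDirectory=//p' "$unit" | tail -n 1)
  if [[ -n "$workdir" && ! -d "$workdir" ]]; then
    echo "WorkingDirectory does not exist: $workdir"
    rc=1
  fi
  # Whitespace after a trailing backslash ends the continuation early;
  # grep -n prints each offending line as "lineno:text".
  if grep -nE '\\[[:space:]]+$' "$unit"; then
    rc=1
  fi
  return "$rc"
}
```

For example, run `check_unit /lib/systemd/system/etcd.service` on each node. It prints one line per problem and returns non-zero if it found any.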

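3. Environment file /etc/etcd/etcd.conf. Each of the 5 nodes gets its own version; the five variants are shown below, separated by lines of # characters. The https:// URLs require the TLS settings from steps 7 to 9; for a cluster without TLS, use http:// instead.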

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.10.102:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.10.102:2379"

ETCD_NAME="master"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.102:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.102:2379"

ETCD_INITIAL_CLUSTER="master=https://192.168.10.102:2380,node1=https://192.168.10.103:2380,node2=https://192.168.10.104:2380,node3=https://192.168.10.105:2380,node4=https://192.168.10.106:2380"

ETCD_INITIAL_CLUSTER_TOKEN="kubernetes"

ETCD_INITIAL_CLUSTER_STATE="new"

###########################################################################################################################

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.10.103:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.10.103:2379"

ETCD_NAME="node1"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.103:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.103:2379"

ETCD_INITIAL_CLUSTER="master=https://192.168.10.102:2380,node1=https://192.168.10.103:2380,node2=https://192.168.10.104:2380,node3=https://192.168.10.105:2380,node4=https://192.168.10.106:2380"

ETCD_INITIAL_CLUSTER_TOKEN="kubernetes"

ETCD_INITIAL_CLUSTER_STATE="new"

###########################################################################################################################

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.10.104:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.10.104:2379"

ETCD_NAME="node2"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.104:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.104:2379"

ETCD_INITIAL_CLUSTER="master=https://192.168.10.102:2380,node1=https://192.168.10.103:2380,node2=https://192.168.10.104:2380,node3=https://192.168.10.105:2380,node4=https://192.168.10.106:2380"

ETCD_INITIAL_CLUSTER_TOKEN="kubernetes"

ETCD_INITIAL_CLUSTER_STATE="new"

###########################################################################################################################

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.10.105:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.10.105:2379"

ETCD_NAME="node3"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.105:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.105:2379"

ETCD_INITIAL_CLUSTER="master=https://192.168.10.102:2380,node1=https://192.168.10.103:2380,node2=https://192.168.10.104:2380,node3=https://192.168.10.105:2380,node4=https://192.168.10.106:2380"

ETCD_INITIAL_CLUSTER_TOKEN="kubernetes"

ETCD_INITIAL_CLUSTER_STATE="new"

###########################################################################################################################

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.10.106:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.10.106:2379"

ETCD_NAME="node4"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.106:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.106:2379"

ETCD_INITIAL_CLUSTER="master=https://192.168.10.102:2380,node1=https://192.168.10.103:2380,node2=https://192.168.10.104:2380,node3=https://192.168.10.105:2380,node4=https://192.168.10.106:2380"

ETCD_INITIAL_CLUSTER_TOKEN="kubernetes"

ETCD_INITIAL_CLUSTER_STATE="new"

4. Restart the etcd service

systemctl daemon-reload

systemctl restart etcd

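systemctl status etcd # Repeat the steps above on every Kubernetes node until etcd is running on all of them.

On the first nodes, systemctl restart etcd may appear to hang until enough members are up to form a quorum.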
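The five etcd.conf variants in step 3 differ only in ETCD_NAME and the node IP. Instead of editing them by hand, they can be generated from a single name/IP table. The sketch below assumes the names and addresses from /etc/hosts in section IX and the https URLs used in step 3. `gen_etcd_confs` and `initial_cluster` are illustrative helpers; they write into a directory of your choice rather than /etc/etcd.

```shell
#!/usr/bin/env bash
# Sketch: generate one etcd.conf per node from a single name -> IP table.
set -eo pipefail

NAMES=(master node1 node2 node3 node4)
IPS=(192.168.10.102 192.168.10.103 192.168.10.104 192.168.10.105 192.168.10.106)

# ETCD_INITIAL_CLUSTER lists every member as name=peer-url, comma-separated.
initial_cluster() {
  local i parts=()
  for i in "${!NAMES[@]}"; do
    parts+=("${NAMES[$i]}=https://${IPS[$i]}:2380")
  done
  local IFS=,
  echo "${parts[*]}"
}

# gen_etcd_confs OUTDIR -> writes OUTDIR/<name>.etcd.conf for every node
gen_etcd_confs() {
  local out=$1 i cluster
  cluster=$(initial_cluster)
  mkdir -p "$out"
  for i in "${!NAMES[@]}"; do
    # Unquoted EOF on purpose: the node-specific values must be expanded here.
    cat > "$out/${NAMES[$i]}.etcd.conf" << EOF
#[Member]
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${IPS[$i]}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${IPS[$i]}:2379"
ETCD_NAME="${NAMES[$i]}"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${IPS[$i]}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${IPS[$i]}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="kubernetes"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
  done
}
```

For example, run `gen_etcd_confs /tmp/etcd-confs`, then copy `/tmp/etcd-confs/<name>.etcd.conf` to /etc/etcd/etcd.conf on the matching host. Unlike the yum repo files, this heredoc is deliberately unquoted, because these values are meant to be expanded by the shell.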

5. Verify the service

[root@master ~]# etcdctl member list

33e828919ccd6e00: name=etcd3 peerURLs=http://192.168.10.104:2380 clientURLs=http://127.0.0.1:2379,http://192.168.10.104:2379 isLeader=false

63f491cf242db4b3: name=etcd1 peerURLs=http://192.168.10.102:2380 clientURLs=http://127.0.0.1:2379,http://192.168.10.102:2379 isLeader=true

d8703b918bb9d544: name=etcd2 peerURLs=http://192.168.10.103:2380 clientURLs=http://127.0.0.1:2379,http://192.168.10.103:2379 isLeader=false

[root@master ~]# etcdctl cluster-health

member 33e828919ccd6e00 is healthy: got healthy result from http://127.0.0.1:2379

member 63f491cf242db4b3 is healthy: got healthy result from http://127.0.0.1:2379

member d8703b918bb9d544 is healthy: got healthy result from http://127.0.0.1:2379

cluster is healthy

6. Troubleshooting

(1) If verification prints output like this:

[root@master ~]# etcdctl member list

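8e9e05c52164694d: name=etcd1 peerURLs=http://localhost:2380 clientURLs=http://127.0.0.1:2379,http://192.168.10.102:2379 isLeader=true

The cause is leftover data. Single-instance etcd deployments were tested before the cluster was built; here, etcd2 and etcd3 had each been tested as single instances. Stop etcd and delete the old data: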

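rm -rf /var/lib/etcd/*

(2) For other problems, change User=etcd to User=root in etcd.service. Also make sure ExecStart contains no stray spaces; in particular, nothing may follow a line-continuation backslash.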

(3) A cluster has exactly one leader. If the output shows any other number of leaders, something is wrong.
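The one-leader rule above can be checked mechanically by counting `isLeader=true` in the `etcdctl member list` output (the etcdctl v2 format shown above). The minimal sketch below reads that output on stdin. `count_leaders` and `check_single_leader` are illustrative names.

```shell
#!/usr/bin/env bash
# Sketch: verify that `etcdctl member list` output contains exactly one leader.
set -eo pipefail

# count_leaders < member-list-output -> prints the number of members with isLeader=true
count_leaders() {
  # grep -c prints 0 but exits 1 when nothing matches; keep the exit status 0.
  grep -c 'isLeader=true' || true
}

# check_single_leader < member-list-output -> returns 0 only if exactly one leader
check_single_leader() {
  local n
  n=$(count_leaders)
  echo "leaders: $n"
  [[ "$n" -eq 1 ]]
}
```

For example, run `etcdctl member list | check_single_leader`. Once TLS is enabled, add the `--ca-file`, `--cert-file`, `--key-file` and `--endpoints` flags from step 10.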

The following steps build an etcd cluster with TLS authentication. They are optional:

7. Create the etcd key files

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

Create the etcd-ca-csr.json file:

cat >etcd-ca-csr.json<<EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "etcd",

"OU": "System"

}

],

"ca": {

"expiry": "87600h"

}

}

EOF

Create the etcd certificate signing request file etcd-csr.json:

cat > etcd-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.10.102",

"192.168.10.103",

"192.168.10.104",

"etcd1",

"etcd2",

"etcd3"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "etcd",

"OU": "System"

}

]

}

EOF

Generate the etcd CA, then the etcd certificate and key:

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare etcd-ca

cfssl gencert -ca=etcd-ca.pem -ca-key=etcd-ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

8. On all nodes, edit etcd.service and add the TLS flags at the end of ExecStart (--cert-file through --peer-trusted-ca-file):

[root@master ~]# vim /lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

EnvironmentFile=-/etc/etcd/etcd.conf

User=etcd

# set GOMAXPROCS to number of processors

# ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /usr/bin/etcd --name=\"${ETCD_NAME}\" --data-dir=\"${ETCD_DATA_DIR}\" --listen-client-urls=\"${ETCD_LISTEN_CLIENT_URLS}\""

ExecStart=/usr/bin/etcd \
  --name=${ETCD_NAME} \
  --data-dir=${ETCD_DATA_DIR} \
  --listen-client-urls=${ETCD_LISTEN_CLIENT_URLS} \
  --listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
  --advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
  --initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
  --initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
  --initial-cluster-state=${ETCD_INITIAL_CLUSTER_STATE} \
  --initial-cluster=${ETCD_INITIAL_CLUSTER} \
  --cert-file=${ETCD_CERT_FILE} \
  --key-file=${ETCD_KEY_FILE} \
  --peer-cert-file=${ETCD_PEER_CERT_FILE} \
  --peer-key-file=${ETCD_PEER_KEY_FILE} \
  --trusted-ca-file=${ETCD_TRUSTED_CA_FILE} \
  --peer-trusted-ca-file=${ETCD_PEER_TRUSTED_CA_FILE}

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

9. Edit the etcd configuration file etcd.conf and add the following:

#[Security]

ETCD_CERT_FILE="/etc/kubernetes/ssl/kubernetes.pem"

ETCD_KEY_FILE="/etc/kubernetes/ssl/kubernetes-key.pem"

ETCD_TRUSTED_CA_FILE="/etc/kubernetes/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/etc/kubernetes/ssl/kubernetes.pem"

ETCD_PEER_KEY_FILE="/etc/kubernetes/ssl/kubernetes-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/etc/kubernetes/ssl/ca.pem"

10. Verify the cluster

[root@master ~]# etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem --endpoints=https://192.168.10.102:2379 member list

5590395074cc9636: name=node2 peerURLs=https://192.168.10.104:2380 clientURLs=https://0.0.0.0:4001,https://127.0.0.1:2379,https://192.168.10.104:2379 isLeader=false

5c724b8641f4fa1c: name=master peerURLs=https://192.168.10.102:2380 clientURLs=https://0.0.0.0:4001,https://127.0.0.1:2379,https://192.168.10.102:2379 isLeader=false

748537d982cf5c99: name=node3 peerURLs=https://192.168.10.105:2380 clientURLs=https://0.0.0.0:4001,https://127.0.0.1:2379,https://192.168.10.105:2379 isLeader=false

c05394aa51d11fda: name=node4 peerURLs=https://192.168.10.106:2380 clientURLs=https://0.0.0.0:4001,https://127.0.0.1:2379,https://192.168.10.106:2379 isLeader=false

d3993eda148039ba: name=node1 peerURLs=https://192.168.10.103:2380 clientURLs=https://0.0.0.0:4001,https://127.0.0.1:2379,https://192.168.10.103:2379 isLeader=true

[root@master ~]# etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem --endpoints=https://192.168.10.102:2379 cluster-health

member 5590395074cc9636 is healthy: got healthy result from https://192.168.10.104:2379

member 5c724b8641f4fa1c is healthy: got healthy result from https://192.168.10.102:2379

member 748537d982cf5c99 is healthy: got healthy result from https://192.168.10.105:2379

member c05394aa51d11fda is healthy: got healthy result from https://192.168.10.106:2379

member d3993eda148039ba is healthy: got healthy result from https://192.168.10.103:2379

cluster is healthy

XII. Deploy the Kubernetes cluster

With the preparation above complete, we can now deploy Kubernetes itself. All of the following steps are done on the master node.

1. Edit the kube-apiserver.service unit file

[root@master ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

After=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/apiserver

User=kube

ExecStart=/usr/bin/kube-apiserver \
  $KUBE_LOGTOSTDERR \
  $KUBE_LOG_LEVEL \
  $KUBE_ETCD_SERVERS \
  $KUBE_API_ADDRESS \
  $KUBE_API_PORT \
  $KUBELET_PORT \
  $KUBE_ALLOW_PRIV \
  $KUBE_SERVICE_ADDRESSES \
  $KUBE_ADMISSION_CONTROL \
  $KUBE_API_ARGS

Restart=on-failure

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

2. Edit the kube-apiserver configuration (the shared /etc/kubernetes/config file)

[root@master ~]# vim /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://127.0.0.1:8080"

############################# Change the default settings above to the following #############################

KUBE_LOGTOSTDERR="--logtostderr=false"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=https://192.168.10.102:6443"

Note: this file is shared by kube-apiserver, kube-controller-manager, kube-scheduler, kubelet and kube-proxy.

3. Edit the apiserver file

[root@master ~]# vim /etc/kubernetes/apiserver

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

KUBE_API_ADDRESS="--insecure-bind-address=192.168.10.102"

# The port on the local server to listen on.

# KUBE_API_PORT="--port=8080"

# Port minions listen on

# KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=https://192.168.10.102:2379,https://192.168.10.103:2379,https://192.168.10.104:2379,https://192.168.10.105:2379,https://192.168.10.106:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

# Add your own!

KUBE_API_ARGS="--authorization-mode=AlwaysAllow --advertise-address=192.168.10.102 --bind-address=192.168.10.102 --secure-port=6443 --insecure-port=8080 --kubelet-https=true --service-node-port-range=1-65535 --storage-backend=etcd3 --etcd-servers=https://192.168.10.102:2379,https://192.168.10.103:2379,https://192.168.10.104:2379,https://192.168.10.105:2379,https://192.168.10.106:2379 --token-auth-file=/etc/kubernetes/token.csv --tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem --tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem --client-ca-file=/etc/kubernetes/ssl/ca.pem --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem --etcd-cafile=/etc/kubernetes/ssl/ca.pem --etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem --etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem --enable-swagger-ui=true --allow-privileged=true --apiserver-count=1 --audit-log-maxage=30 --audit-log-maxbackup=3 --audit-log-maxsize=100 --audit-log-path=/var/log/kubernetes/apiserver/audit.log --log-dir=/var/log/kubernetes --v=0 --event-ttl=1h"

Parameter notes:

# --admission-control: the cluster's admission control chain. The plugins take effect one after another in the order listed, and the list must include ServiceAccount. Available plugins:

AlwaysAdmit, AlwaysDeny, AlwaysPullImages, DefaultStorageClass, DefaultTolerationSeconds, DenyEscalatingExec, DenyExecOnPrivileged, EventRateLimit, ExtendedResourceToleration, ImagePolicyWebhook, Initializers, LimitPodHardAntiAffinityTopology, LimitRanger, MutatingAdmissionWebhook, NamespaceAutoProvision, NamespaceExists, NamespaceLifecycle, NodeRestriction, OwnerReferencesPermissionEnforcement, PersistentVolumeClaimResize, PersistentVolumeLabel, PodNodeSelector, PodPreset, PodSecurityPolicy, PodTolerationRestriction, Priority, ResourceQuota, SecurityContextDeny, ServiceAccount, StorageObjectInUseProtection, TaintNodesByCondition, ValidatingAdmissionWebhook

# --bind-address: must not be 127.0.0.1; the HTTPS service listens on port 6443 of this address; default 0.0.0.0;

# --insecure-port: port of the insecure HTTP service, enabled on 8080 by default; 0 disables it (this guide keeps 8080);

# --secure-port=6443: the HTTPS port, 6443 by default; 0 disables it;

# --authorization-mode: authorization mode for the secure port. This guide uses AlwaysAllow, which authorizes every request; with RBAC, requests that no role grants are rejected;

# --service-cluster-ip-range: the Service cluster IP range; addresses in it are not routable from outside the cluster;

# --service-node-port-range: the port range for NodePort Services;

# --storage-backend: the persistent storage type; etcd3 is the default since v1.6;

# --enable-swagger-ui: when true, serves the swagger-ui page at <apiserver address>/swagger-ui; default false;

# --allow-privileged: when true, Kubernetes allows pods to run privileged containers;

# --audit-log-*: audit log settings;

# --event-ttl: how long the apiserver keeps events, 1h by default; typically used for auditing and tracing;

# --logtostderr: true by default, which writes logs to stderr instead of log files;

# --log-dir: log directory;

# --v: log verbosity level

Create the log directory and the audit log file, and give the kube user ownership of them:

[root@master ~]# mkdir -p /var/log/kubernetes/apiserver/

[root@master ~]# touch /var/log/kubernetes/apiserver/audit.log

[root@master ~]# chown -R kube:kube /var/log/kubernetes

Restart the apiserver service:

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver

systemctl status kube-apiserver

4. Edit the kube-controller-manager.service unit file

[root@master ~]# vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/controller-manager

User=kube

ExecStart=/usr/bin/kube-controller-manager \
  $KUBE_LOGTOSTDERR \
  $KUBE_LOG_LEVEL \
  $KUBE_MASTER \
  $KUBE_CONTROLLER_MANAGER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

5. Edit the controller-manager configuration file

[root@master ~]# vim /etc/kubernetes/controller-manager

###

# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!

KUBE_CONTROLLER_MANAGER_ARGS="--address=127.0.0.1 --service-cluster-ip-range=10.254.0.0/16 --cluster-name=kubernetes --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem --root-ca-file=/etc/kubernetes/ssl/ca.pem --leader-elect=true"

Restart the kube-controller-manager service

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl restart kube-controller-manager

systemctl status kube-controller-manager

6. Edit the kube-scheduler.service unit file

[root@master ~]# vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler Plugin

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/scheduler

User=kube

ExecStart=/usr/bin/kube-scheduler \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_SCHEDULER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

7. Edit the scheduler configuration file

[root@master ~]# vim /etc/kubernetes/scheduler

###

# kubernetes scheduler config

# default config should be adequate

# Add your own!

KUBE_SCHEDULER_ARGS="--leader-elect=true --address=127.0.0.1"

Restart the scheduler service

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl restart kube-scheduler

systemctl status kube-scheduler

8. Edit the kubelet.service unit file

[root@master ~]# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/kubelet

ExecStart=/usr/bin/kubelet \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBELET_API_SERVER \

$KUBELET_ADDRESS \

$KUBELET_PORT \

$KUBELET_HOSTNAME \

$KUBE_ALLOW_PRIV \

$KUBELET_POD_INFRA_CONTAINER \

$KUBELET_ARGS

Restart=on-failure

[Install]

WantedBy=multi-user.target

9. Edit the kubelet configuration file

[root@master ~]# vim /etc/kubernetes/kubelet


###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=192.168.10.102"

# The port for the info server to serve on

# KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=192.168.10.102"

# location of the api-server

KUBELET_API_SERVER="--api-servers=https://192.168.10.102:6443"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS="--pod-infra-container-image=gcr.io/google_containers/pause-amd64:3.0 --kubeconfig=/etc/kubernetes/kubelet --cert-dir=/etc/kubernetes/ssl --cluster-dns=10.254.0.1 --cluster-domain=cluster.k8s. --allow-privileged=true --serialize-image-pulls=false --logtostderr=false --log-dir=/var/log/kubernetes/kubelet --v=0"

# KUBELET_ARGS="--pod-infra-container-image=gcr.io/google_containers/pause-amd64:3.0 --kubeconfig=/etc/kubernetes/kubelet --cluster-dns=10.254.0.1 --cluster-domain=cluster.k8s. --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem --root-ca-file=/etc/kubernetes/ssl/ca.pem --allow-privileged=true --serialize-image-pulls=false --logtostderr=false --log-dir=/var/log/kubernetes/kubelet --v=0"

Restart the kubelet service

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet

systemctl status kubelet

10. Verify the Master node

[root@master kubernetes]# kubectl get cs

NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}
etcd-4               Healthy   {"health":"true"}
etcd-3               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}

If you see the following error instead:

[root@master kubernetes]# kubectl get cs

NAME                 STATUS      MESSAGE             ERROR
scheduler            Unhealthy   Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: getsockopt: connection refused
controller-manager   Unhealthy   Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: getsockopt: connection refused
etcd-2               Healthy     {"health":"true"}
etcd-1               Healthy     {"health":"true"}
etcd-3               Healthy     {"health":"true"}
etcd-0               Healthy     {"health":"true"}
etcd-4               Healthy     {"health":"true"}

In that case, set --address to 127.0.0.1 in both the scheduler and the controller-manager configuration files, because the current kube-apiserver expects the scheduler and controller-manager to run on the same server.
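With five etcd members plus the control-plane components, it can help to print only the rows that are not Healthy. A minimal sketch, assuming the default kubectl get cs layout in which STATUS is the second whitespace-separated field; the function name unhealthy_components is hypothetical:

```shell
# Hypothetical filter: read `kubectl get cs` output on stdin and print the NAME of
# every component whose STATUS column is anything other than "Healthy".
# The header row (line 1) and blank lines are skipped.
unhealthy_components() {
  awk 'NR > 1 && NF >= 2 && $2 != "Healthy" { print $1 }'
}
```

Usage: kubectl get cs | unhealthy_components. For the failing output above it would list scheduler and controller-manager; each can then be probed directly, for example curl http://127.0.0.1:10251/healthz for the scheduler, using the address and port from the error message.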

kubectl get cs can also be used here. If it fails with "Unable to connect to the server: x509: certificate signed by unknown authority", verify with the method described earlier, or do the following:

Remove the embedded root certificate (the certificate-authority-data entry) from ~/.kube/config and run this config command:

kubectl config set-cluster ${KUBE_CONTEXT} --insecure-skip-tls-verify=true --server=https://192.168.10.102:6443

Note: ${KUBE_CONTEXT} can be any name you like; here it is kubernetes.
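Because --server expects the apiserver URL rather than the cluster name, a quick look at the server entries in the kubeconfig catches that mistake. A minimal sketch; check_kubeconfig_servers is a hypothetical name, and it simply scans for server: lines instead of parsing YAML properly, which is enough for files written by kubectl config:

```shell
# Hypothetical check: print every "server:" entry in a kubeconfig (default
# ~/.kube/config) and fail if any is not an https:// URL or none is found.
check_kubeconfig_servers() {
  awk '
    $1 == "server:" {
      n++
      if ($2 ~ /^https:\/\//) print "ok: " $2
      else { print "not an https URL: " $2 > "/dev/stderr"; bad = 1 }
    }
    END {
      if (n == 0) { print "no server entries found" > "/dev/stderr"; exit 1 }
      exit bad
    }' "${1:-$HOME/.kube/config}"
}
```

Run it with no argument for ~/.kube/config, or point it at another file such as /etc/kubernetes/kube-proxy.kubeconfig.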

XIII. Deploy Flannel on all nodes

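Every node needs the Flannel network plugin so that all Pods join the same network, so the following steps must be run on every node. Installing flanneld directly with yum is recommended unless you need a specific version; the version installed by default is flannel 0.7.1.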
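Since most steps in this section repeat on every node, they can be pushed out from the master with a loop, in the same spirit as the scp loop used during environment preparation. A minimal sketch, assuming passwordless root SSH from the master and the five node addresses used in this article (192.168.10.102 to 192.168.10.106); NODES, SSH and on_all_nodes are hypothetical names, and setting SSH=echo turns it into a dry run:

```shell
# Node list used throughout this article; override NODES to target other hosts.
NODES="${NODES:-192.168.10.102 192.168.10.103 192.168.10.104 192.168.10.105 192.168.10.106}"

# Run the given command on every node as root. Failures are reported and the
# loop continues; the return code is non-zero if any node failed.
# SSH=echo prints the commands instead of running them.
on_all_nodes() {
  local node rc=0
  for node in $NODES; do
    echo "== $node" >&2
    ${SSH:-ssh} "root@$node" "$@" || { echo "failed on $node" >&2; rc=1; }
  done
  return "$rc"
}
```

For example, on_all_nodes yum -y install flannel. Step 4 (writing the network config into etcd) only needs to run once, so it should not go through this loop.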

1. Install the flannel plugin with yum

[root@master ~]# yum -y install flannel

2. Edit the flanneld.service unit file as follows (two lines are added to the packaged default):

[root@master ~]# vim /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/etc/sysconfig/flanneld

EnvironmentFile=-/etc/sysconfig/docker-network

ExecStart=/usr/bin/flanneld-start -etcd-endpoints=${FLANNEL_ETCD_ENDPOINTS} -etcd-prefix=${FLANNEL_ETCD_PREFIX} $FLANNEL_OPTIONS

ExecStartPost=/usr/libexec/flannel/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker

Restart=on-failure

[Install]

WantedBy=multi-user.target

WantedBy=docker.service

3. Edit the /etc/sysconfig/flanneld configuration file

[root@master ~]# vim /etc/sysconfig/flanneld

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="https://192.168.10.102:2379,https://192.168.10.103:2379,https://192.168.10.104:2379,https://192.168.10.105:2379,https://192.168.10.106:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/atomic.io/network"

# FLANNEL_ETCD_PREFIX="/kube-centos/network"

# Any additional options that you want to pass

FLANNEL_OPTIONS="-etcd-cafile=/etc/kubernetes/ssl/ca.pem -etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem -etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem"

Note: on a host with multiple NICs (for example a Vagrant environment), add the NIC used for external traffic to FLANNEL_OPTIONS, e.g. -iface=eth0.
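To decide which NIC to pass as -iface, look up the interface that carries the node's own address. A minimal sketch that reads the one-line-per-address output of ip -o -4 addr show; iface_for_ip is a hypothetical name. In a typical Vagrant box eth0 is the NAT interface and the private-network address sits on another NIC, which is the situation the note above describes:

```shell
# Hypothetical helper: read `ip -o -4 addr show` output on stdin and print the
# interface name that owns the IPv4 address given as $1 (prints nothing if none does).
# Field 2 is the interface name, field 3 "inet", field 4 the address/prefix.
iface_for_ip() {
  awk -v ip="$1" '{ split($4, a, "/"); if ($3 == "inet" && a[1] == ip) { print $2; exit } }'
}
```

Usage: ip -o -4 addr show | iface_for_ip 192.168.10.102, then append -iface= followed by the printed name to FLANNEL_OPTIONS.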

4. Create the network configuration in etcd (this only needs to be done on the master node)

[root@master ~]# etcdctl --endpoints https://192.168.10.102:2379,https://192.168.10.103:2379,https://192.168.10.104:2379,https://192.168.10.105:2379,https://192.168.10.106:2379 \
--ca-file=/etc/kubernetes/ssl/ca.pem \
--cert-file=/etc/kubernetes/ssl/kubernetes.pem \
--key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
mkdir /atomic.io/network

[root@master ~]# etcdctl --endpoints https://192.168.10.102:2379,https://192.168.10.103:2379,https://192.168.10.104:2379,https://192.168.10.105:2379,https://192.168.10.106:2379 \
--ca-file=/etc/kubernetes/ssl/ca.pem \
--cert-file=/etc/kubernetes/ssl/kubernetes.pem \
--key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
mk /atomic.io/network/config '{"Network":"10.254.0.0/16"}'

Note: this config specifies no Backend, so flannel falls back to its default udp backend. To use vxlan (or host-gw) instead, add the backend type to the JSON, e.g. '{"Network":"10.254.0.0/16","Backend":{"Type":"vxlan"}}'.
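Writing this JSON by hand is easy to get wrong, so a small generator can produce the value for mk (or for etcdctl set when the key already exists). A minimal sketch; flannel_net_config is a hypothetical name, and it deliberately accepts only the udp, vxlan and host-gw backends mentioned here, although flannel supports others:

```shell
# Hypothetical helper: print the flannel network config JSON for etcd.
# $1 = Pod network CIDR, $2 = backend type (udp, vxlan or host-gw; defaults to udp,
# which is also flannel's own default when no Backend is given).
flannel_net_config() {
  local network="$1" backend="${2:-udp}"
  case "$backend" in
    udp|vxlan|host-gw) ;;
    *) echo "unsupported backend: $backend" >&2; return 1 ;;
  esac
  printf '{"Network":"%s","Backend":{"Type":"%s"}}\n' "$network" "$backend"
}
```

For example, the last argument of the etcdctl command above could be replaced with "$(flannel_net_config 10.254.0.0/16 vxlan)"; flanneld reads this key when it starts, so restart it on every node after changing it.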

5. Start flannel

systemctl daemon-reload

systemctl enable flanneld

systemctl start flanneld

systemctl status flanneld

6. Check the flannel status

[root@master ~]# systemctl status flanneld

If the status shows active (running), the configuration succeeded.
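Besides systemctl status, the lease flanneld obtained can be sanity-checked from the environment file it writes, /run/flannel/subnet.env (FLANNEL_NETWORK, FLANNEL_SUBNET, FLANNEL_MTU, FLANNEL_IPMASQ). The sketch below checks that the node's subnet lies inside the network configured in etcd; ip_to_int, cidr_within and check_flannel_subnet are hypothetical names, and only IPv4 is handled:

```shell
# Convert a dotted-quad IPv4 address to an integer using parameter expansion.
ip_to_int() {
  local a b c d rest="$1"
  a="${rest%%.*}"; rest="${rest#*.}"
  b="${rest%%.*}"; rest="${rest#*.}"
  c="${rest%%.*}"; d="${rest#*.}"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if CIDR $1 (e.g. 10.254.43.1/24) lies inside network CIDR $2 (e.g. 10.254.0.0/16):
# the prefix must be at least as long, and both addresses must match under the network mask.
cidr_within() {
  local sub_ip="${1%/*}" sub_len="${1#*/}" net_ip="${2%/*}" net_len="${2#*/}" mask
  [ "$sub_len" -ge "$net_len" ] || return 1
  mask=$(( (0xFFFFFFFF << (32 - net_len)) & 0xFFFFFFFF ))
  [ "$(( $(ip_to_int "$sub_ip") & mask ))" = "$(( $(ip_to_int "$net_ip") & mask ))" ]
}

# Source flannel's subnet file in a subshell, print its values and check the subnet.
check_flannel_subnet() {
  (
    . "${1:-/run/flannel/subnet.env}"
    echo "network=$FLANNEL_NETWORK subnet=$FLANNEL_SUBNET mtu=$FLANNEL_MTU"
    cidr_within "$FLANNEL_SUBNET" "$FLANNEL_NETWORK"
  )
}
```

Run check_flannel_subnet on each node after flanneld starts; a non-zero exit means the lease does not belong to the configured network.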

XIV. Deploy the node components

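At this point the Master services, the etcd cluster and the flannel network are all in place, so next we deploy the node services. First confirm that flannel, docker and etcd are running on each node, then check that the certificates and configuration files under /etc/kubernetes/ are present; the details are not repeated here.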
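The preflight checks just described can be scripted. A minimal sketch: check_units relies on systemctl is-active, and check_files only tests that each file exists and is non-empty; both function names are hypothetical, and the file list in the example comment is simply the certificates and kubeconfig this article references, so adjust it to your own layout:

```shell
# Report systemd units that are not active; returns non-zero if any are inactive.
check_units() {
  local unit rc=0
  for unit in "$@"; do
    systemctl is-active --quiet "$unit" || { echo "not active: $unit" >&2; rc=1; }
  done
  return "$rc"
}

# Report files under directory $1 that are missing or empty; returns non-zero if any are.
check_files() {
  local dir="$1" f rc=0
  shift
  for f in "$@"; do
    [ -s "$dir/$f" ] || { echo "missing or empty: $dir/$f" >&2; rc=1; }
  done
  return "$rc"
}

# Example (run on each node):
#   check_units flanneld docker etcd
#   check_files /etc/kubernetes ssl/ca.pem ssl/kubernetes.pem ssl/kubernetes-key.pem kube-proxy.kubeconfig
```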

1. Configure docker to use the flannel network

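Once flanneld is started with systemctl, flanneld writes /run/flannel/subnet.env and the ExecStartPost step (mk-docker-opts.sh) turns it into /run/flannel/docker, so the following two environment files appear under /run/flannel/: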

[root@master ~]# ll /run/flannel/

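total 8

-rw-r--r-- 1 root root 174 Jul 22 20:44 docker

-rw-r--r-- 1 root root 98 Jul 22 20:44 subnet.env

Docker takes its container network parameters from these files. Edit docker's unit file /usr/lib/systemd/system/docker.service and add one environment-file line (dockerd only uses the values if its ExecStart line references $DOCKER_NETWORK_OPTIONS):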

EnvironmentFile=-/run/flannel/docker

3. Install conntrack

[root@master ~]# yum -y install conntrack-tools

4. Edit the kube-proxy unit file /usr/lib/systemd/system/kube-proxy.service

[root@master ~]# vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/proxy

ExecStart=/usr/bin/kube-proxy \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_PROXY_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

5. Edit the kube-proxy configuration file /etc/kubernetes/proxy on every node. Only one node's file is shown here; on the other nodes just change the IP addresses.

[root@master ~]# vim /etc/kubernetes/proxy

KUBE_PROXY_ARGS="--bind-address=192.168.10.102 --hostname-override=192.168.10.102 --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig --cluster-cidr=10.254.0.0/16"

Restart the kube-proxy service

systemctl daemon-reload

systemctl enable kube-proxy

systemctl restart kube-proxy

systemctl status kube-proxy

Restart the docker service

systemctl daemon-reload

systemctl enable docker

systemctl restart docker

systemctl status docker

6. Test the cluster

[root@master ~]# kubectl --insecure-skip-tls-verify=true run nginx --replicas=2 --labels="run=load-balancer-example" --image=nginx --port=80

deployment "nginx" created

[root@master ~]# kubectl expose deployment nginx --type=NodePort --name=example-service

service "example-service" exposed

[root@master kubernetes]# kubectl describe svc example-service

Name:             example-service
Namespace:        default
Labels:           run=load-balancer-example
Selector:         run=load-balancer-example
Type:             NodePort
IP:               10.254.253.193
Port:             <unset> 80/TCP
NodePort:         <unset> 57593/TCP
Endpoints:        <none>
Session Affinity: None
No events.
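Endpoints: <none> means no ready Pod currently backs the Service, so requests to the NodePort have nowhere to go yet; wait until the nginx Pods are Running and describe the Service again. The sketch below pulls the NodePort out of kubectl describe svc output and warns while there are no endpoints; svc_nodeport is a hypothetical name and it assumes the "Field: value" layout shown above:

```shell
# Hypothetical helper: read `kubectl describe svc` output on stdin, print the
# NodePort number, and warn on stderr if the Service has no endpoints yet.
# Exits non-zero if no NodePort line is found.
svc_nodeport() {
  awk '
    /^NodePort:/ {
      for (i = 2; i <= NF; i++)
        if ($i ~ /^[0-9]+\/(TCP|UDP)$/) { split($i, p, "/"); port = p[1] }
    }
    /^Endpoints:/ {
      if ($2 == "<none>") print "warning: no endpoints (no ready Pod matches the selector)" > "/dev/stderr"
    }
    END { if (port != "") print port; else exit 1 }'
}
```

For example: port=$(kubectl describe svc example-service | svc_nodeport) && curl http://192.168.10.102:$port/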