1 Diagram of Kafka's storage structure in ZooKeeper

2 Analysis

2.1 Topic registration information

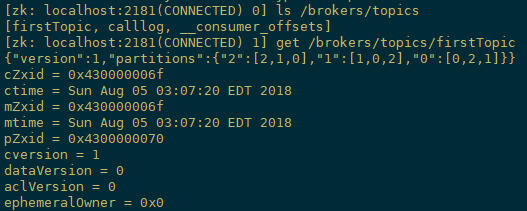

/brokers/topics/[topic] :

Stores the replica assignment of every partition of the topic.

[zk: localhost:2181(CONNECTED) 1] get /brokers/topics/firstTopic

Schema:

{ "version": "version number, currently fixed at 1", "partitions": {"partitionId": [broker ids of the replicas assigned to the partition], "partitionId": [broker ids of the replicas assigned to the partition], ...}}

Example:

{"version": 1,"partitions": {"2": [2, 1, 0],"1": [1, 0, 2],"0": [0, 2, 1]}}

2.2 Partition state information

/brokers/topics/[topic]/partitions/[0...N], where [0...N] is the partition index

/brokers/topics/[topic]/partitions/[partitionId]/state

Schema:

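{"controller_epoch": number of controller elections held in the Kafka cluster, "leader": broker id of the partition's elected leader, "version": version number (default 1),
"leader_epoch": number of leader elections held for this partition, "isr": [broker ids of the in-sync replicas]}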

Example:

{"controller_epoch":61,"leader":0,"version":1,"leader_epoch":0,"isr":[0,2,1]}

2.3 Broker registration information

/brokers/ids/[0...N]

Each broker must set a numeric id in its configuration file, and the id must be unique across the cluster. This node is an ephemeral znode (EPHEMERAL).

Schema:

{"jmx_port": JMX port, "timestamp": time at which the Kafka broker started, "host": host name or IP address, "version": version number (default 1),
"port": port the Kafka broker listens on, set by the port parameter in server.properties}

Example:

{"jmx_port":-1,"host":"192.168.100.21","timestamp":"1533452008040","port":9092,"version":4}

2.4 Controller epoch

/controller_epoch --> int (epoch)

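This value is an integer. It is 1 when the first broker in the Kafka cluster starts for the first time. Whenever the broker hosting the central controller changes or goes down, a new central controller is elected, and each controller change increments controller_epoch by 1.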
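The counting rule can be modelled in a few lines. The sketch below is a toy in-memory model, not Kafka code: the class `ControllerEpochModel` and its `elect` method are hypothetical names used only to show that the epoch starts at 1 and grows by one with every controller change.

```python
class ControllerEpochModel:
    """Toy in-memory model of /controller and /controller_epoch (not Kafka code)."""

    def __init__(self):
        self.controller = None  # broker id currently acting as controller
        self.epoch = 0          # nothing elected yet

    def elect(self, broker_id):
        """Record a controller election and increase the epoch by one."""
        self.controller = broker_id
        self.epoch += 1
        return self.epoch


model = ControllerEpochModel()
model.elect(0)  # first broker starts for the first time: epoch is 1
model.elect(2)  # broker 0 goes down and broker 2 takes over: epoch is 2
print(model.controller, model.epoch)
```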

2.5 Controller registration information

/controller -> int (broker id of the controller). Stores information about the Kafka broker that hosts the central controller.

Schema:

{"version": version number (default 1), "brokerid": unique id of the broker in the Kafka cluster, "timestamp": time at which the central controller changed}

Example:

{"version": 1,"brokerid": 0,"timestamp": "1533452008692"}

2.6 Consumer and Consumer group

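a. Each consumer client registers its own information in ZooKeeper when it is created.

b. The main purpose of this registration is load balancing.

c. Within one Consumer Group, Kafka delivers each message of a subscribed topic to exactly one consumer.

d. Each consumer in a Consumer Group reads one or more partitions of the topic, and it is the only consumer in the group reading those partitions.

e. All threads of all consumers in a Consumer Group consume the partitions of a topic in order. If the total number of consumer threads in the group exceeds the number of partitions, some threads are idle.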

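For example:

Suppose the Kafka cluster has a topic report-log with 4 partitions, indexed 0, 1, 2, 3, and there are currently three consumer nodes. Note that one consumer thread may consume one or more partitions.

If each consumer creates 1 consumer thread, node1 consumes partitions 0 and 1, node2 consumes partition 2, and node3 consumes partition 3.

If each consumer creates 2 consumer threads, the result depends on the order in which the consumer nodes started: node1 consumes partitions 0 and 1, node2 consumes partitions 2 and 3, and node3 is idle.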

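Summary:

The consumers in a Consumer Group take the partitions of a topic in the order in which they started. If the total number of consumer threads in the group exceeds the number of partitions, some consumer threads, or entire consumers, may be idle.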
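The report-log example can be reproduced with a short sketch. This is an illustration under stated assumptions, not Kafka's code: the function name `assign_partitions` is hypothetical, sorting thread ids by name stands in for the startup order of the nodes, and when the partitions do not divide evenly the earliest threads are assumed to take one extra partition each, which is what the example's numbers imply.

```python
def assign_partitions(partitions, consumers, threads_per_consumer):
    """Assign partitions to consumer threads in sorted order.

    Assumption: when partitions do not divide evenly among the threads,
    the earliest threads each take one extra partition.
    """
    threads = sorted(
        f"{consumer}-{i}"
        for consumer in consumers
        for i in range(threads_per_consumer)
    )
    parts = sorted(partitions)
    base, extra = divmod(len(parts), len(threads))
    assignment = {}
    start = 0
    for position, thread in enumerate(threads):
        count = base + (1 if position < extra else 0)
        assignment[thread] = parts[start:start + count]
        start += count
    return assignment


nodes = ["node1", "node2", "node3"]
one_thread = assign_partitions([0, 1, 2, 3], nodes, threads_per_consumer=1)
two_threads = assign_partitions([0, 1, 2, 3], nodes, threads_per_consumer=2)
print(one_thread)
print(two_threads)
```

With one thread per node, node1 ends up with two partitions and the other nodes with one each. With two threads per node, the four partitions are used up by the threads of node1 and node2, so both threads of node3 receive an empty list.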

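2.7 Consumer balancing algorithm

When a consumer joins or leaves a group, a partition rebalance is triggered. The goal of rebalancing is to improve the concurrent consumption capacity for the topic.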

1) Suppose topic1 has the partitions P0, P1, P2, P3.

2) The group contains the consumers C0, C1.

3) First sort the partitions by partition index: P0, P1, P2, P3.

4) Sort the consumers by (consumer.id + '-' + thread index): C0, C1.

5) Compute the multiple M = [P0,P1,P2,P3].size / [C0,C1].size, rounded up. In this example M = 2.

6) Then assign the partitions in order: C0 = [P0,P1], C1 = [P2,P3], that is, Ci = [P(i * M), ..., P((i + 1) * M - 1)].
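Steps 3 to 6 can be written down directly. The following is a minimal sketch of the steps as listed, not the consumer's actual implementation. The function name `range_assign` is hypothetical, and partitions are represented by their integer indexes.

```python
import math


def range_assign(partition_ids, consumer_thread_ids):
    """Apply steps 3 to 6: sort both lists, compute M, slice the partitions."""
    parts = sorted(partition_ids)              # step 3: sort by partition index
    consumers = sorted(consumer_thread_ids)    # step 4: sort by consumer thread id
    m = math.ceil(len(parts) / len(consumers)) # step 5: M, rounded up
    # step 6: consumer i takes P(i * M) .. P((i + 1) * M - 1)
    return {c: parts[i * m:(i + 1) * m] for i, c in enumerate(consumers)}


result = range_assign([0, 1, 2, 3], ["C0", "C1"])
print(result)
```

For the four partitions and two consumers above, M is 2, so C0 receives partitions 0 and 1 and C1 receives partitions 2 and 3.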

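2.8 Consumer registration information

Each consumer has a unique id (the consumerId can be set in the configuration file or generated by the system). This id identifies the consumer.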

/consumers/[groupId]/ids/[consumerIdString]

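This is an ephemeral znode. The node name follows the consumerIdString generation rule below, and the node value describes the topics and partitions this consumer currently consumes.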

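consumerId generation rule:

import java.net.InetAddress;
import java.util.UUID;

String consumerUuid;
if (config.consumerId != null && !config.consumerId.isEmpty()) {
    // Use the id set in the configuration file
    consumerUuid = config.consumerId;
} else {
    // Otherwise build one from the host name, the current time, and a UUID prefix
    UUID uuid = UUID.randomUUID();
    consumerUuid = String.format("%s-%d-%s",
        InetAddress.getLocalHost().getHostName(),
        System.currentTimeMillis(),
        Long.toHexString(uuid.getMostSignificantBits()).substring(0, 8));
}
String consumerIdString = config.groupId + "_" + consumerUuid;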
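Going the other way, a generated id can be split back into its parts, which helps when reading znode names by hand. The helper below is a hypothetical sketch (`split_consumer_id` is not part of Kafka). It assumes the id was generated by the rule above rather than set explicitly in the configuration, and it needs the group id as input because group ids and host names may themselves contain `_` or `-`.

```python
def split_consumer_id(consumer_id_string, group_id):
    """Split "<groupId>_<host>-<millis>-<uuid prefix>" into its parts."""
    prefix = group_id + "_"
    if not consumer_id_string.startswith(prefix):
        raise ValueError("id does not belong to group " + group_id)
    consumer_uuid = consumer_id_string[len(prefix):]
    # Split from the right so that hyphens inside the host name survive
    host, millis, uuid_prefix = consumer_uuid.rsplit("-", 2)
    return {
        "group": group_id,
        "host": host,
        "started_ms": int(millis),
        "uuid_prefix": uuid_prefix,
    }


parts = split_consumer_id(
    "console-consumer-2304_hadoop2-1525747915241-6b48ff32",
    "console-consumer-2304",
)
print(parts)
```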

[zk: localhost:2181(CONNECTED) 11] get /consumers/console-consumer-2304/ids/console-consumer-2304_hadoop2-1525747915241-6b48ff32

Schema:

{"version": version number (default 1),
"subscription": {"topic name": number of consumer threads for that topic},  // list of subscribed topics
"pattern": "static",
"timestamp": "time at which the consumer started"}

Example:

{"version": 1,"subscription": {"topic2": 1},"pattern": "white_list","timestamp": "1525747915336"}

2.9 Consumer owner

/consumers/[groupId]/owners/[topic]/[partitionId] -> consumerIdString + threadId index

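a) First, perform the "consumer id registration".

b) Then register a watch on the "consumer id registration" node to detect other consumers in the group leaving or joining. Any change to the child list under this znode path triggers load balancing among the consumers of this group (for example, when one consumer fails, the other consumers take over its partitions).

c) Register a watch on the "broker id registration" node to monitor broker liveness. If the broker list changes, the consumers in all groups rebalance.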
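The two watches in b) and c) can be pictured with a toy in-memory model. This is only a sketch: `ChildWatchModel` is a hypothetical stand-in for a ZooKeeper child watch and uses no ZooKeeper client. Real ZooKeeper watches fire once and must be registered again, which this model ignores.

```python
class ChildWatchModel:
    """Toy stand-in for a watch on a znode's child list (in-memory only)."""

    def __init__(self):
        self.children = set()
        self.listeners = []

    def watch(self, callback):
        self.listeners.append(callback)

    def set_children(self, children):
        """Notify listeners only when the child list actually changes."""
        new_children = set(children)
        if new_children != self.children:
            self.children = new_children
            for callback in self.listeners:
                callback(sorted(new_children))


rebalances = []
consumer_ids = ChildWatchModel()  # models /consumers/[groupId]/ids
broker_ids = ChildWatchModel()    # models /brokers/ids
consumer_ids.watch(lambda ids: rebalances.append(("consumers", ids)))
broker_ids.watch(lambda ids: rebalances.append(("brokers", ids)))

consumer_ids.set_children(["c1", "c2"])  # two consumers join
consumer_ids.set_children(["c1"])        # c2 fails: rebalance
consumer_ids.set_children(["c1"])        # nothing changed: no rebalance
broker_ids.set_children([0, 1, 2])       # broker list changes: rebalance
print(rebalances)
```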

2.10 Consumer offset

/consumers/[groupId]/offsets/[topic]/[partitionId] -> long (offset)

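Tracks the largest offset each consumer has consumed so far in the partition.

This znode is persistent. The path shows that the offset is tied to the group id, so when a consumer in the consumer group fails and a rebalance is triggered, the other consumers can continue consuming.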
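Because the path contains the group id but no consumer id, any member of the group can pick up where a failed member stopped. The sketch below models this with a plain dictionary in place of ZooKeeper. The helper names are hypothetical, and starting from offset 0 when nothing is stored is an assumption made only for this illustration.

```python
offsets = {}  # stands in for the persistent znodes under /consumers


def offset_path(group_id, topic, partition_id):
    """Build the offset znode path; note that it contains no consumer id."""
    return f"/consumers/{group_id}/offsets/{topic}/{partition_id}"


def commit(group_id, topic, partition_id, offset):
    offsets[offset_path(group_id, topic, partition_id)] = offset


def resume_from(group_id, topic, partition_id):
    # Assumption for this sketch: start at 0 when no offset is stored
    return offsets.get(offset_path(group_id, topic, partition_id), 0)


# Consumer A owns partition 1 of report-log and records its progress
commit("group1", "report-log", 1, 42)
# Consumer A fails; after the rebalance consumer B owns the partition
# and reads the same znode, so it continues from the stored offset
resumed = resume_from("group1", "report-log", 1)
print(offset_path("group1", "report-log", 1), resumed)
```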

2.11 Re-assign partitions

/admin/reassign_partitions

Schema:

{
  "fields": [
    {"name": "version", "type": "int", "doc": "version id"},
    {"name": "partitions",
     "type": {
       "type": "array",
       "items": {
         "fields": [
           {"name": "topic", "type": "string", "doc": "topic of the partition to be reassigned"},
           {"name": "partition", "type": "int", "doc": "the partition to be reassigned"},
           {"name": "replicas", "type": "array", "items": "int", "doc": "a list of replica ids"}
         ]
       },
       "doc": "an array of partitions to be reassigned to new replicas"
     }
    }
  ]
}

Example:

{"version": 1,"partitions": [{"topic": "Foo","partition": 1, "replicas": [0, 1, 3] }] }

2.12 Preferred replica election

/admin/preferred_replica_election

Schema:

{
  "fields": [
    {"name": "version", "type": "int", "doc": "version id"},
    {"name": "partitions",
     "type": {
       "type": "array",
       "items": {
         "fields": [
           {"name": "topic", "type": "string", "doc": "topic of the partition for which preferred replica election should be triggered"},
           {"name": "partition", "type": "int", "doc": "the partition for which preferred replica election should be triggered"}
         ]
       },
       "doc": "an array of partitions for which preferred replica election should be triggered"
     }
    }
  ]
}

Example:

{
  "version": 1,
  "partitions": [
    {"topic": "Foo", "partition": 1},
    {"topic": "Bar", "partition": 0}
  ]
}

2.13 Deleting topics

/admin/delete_topics

Schema:

{ "fields":

[ {"name": "version", "type": "int", "doc": "version id"},

{"name": "topics",

"type": { "type": "array", "items": "string", "doc": "an array of topics to be deleted"}

} ]

}

Example:

{ "version": 1,"topics": ["foo", "bar"]}

2.14 Topic configuration

/config/topics/[topic_name]