k8s monitoring in practice: deploying Prometheus

目錄

-

- 1 prometheus前言相關

-

-

- 1.1 Prometheus的特點

- 1.2 基本原理

-

-

-

-

- 1.2.1 原理說明

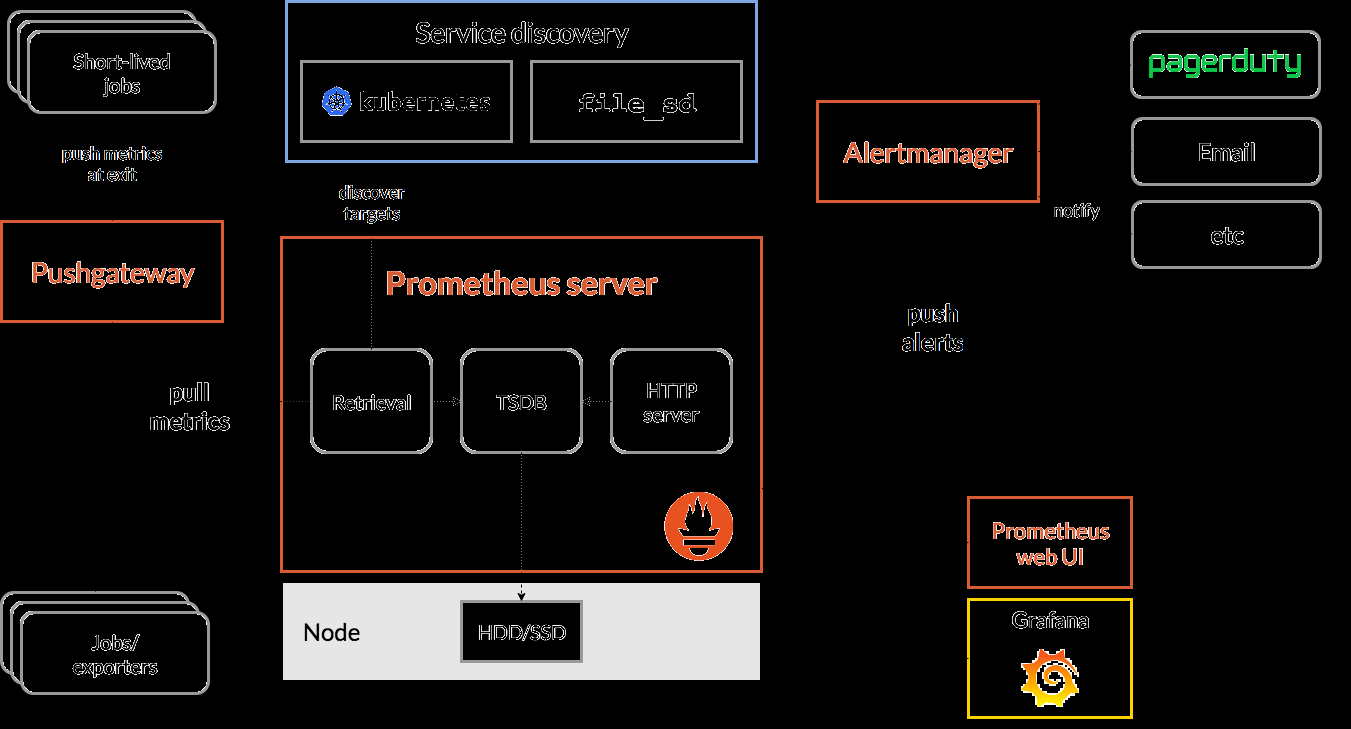

- 1.2.2 架構圖:

- 1.2.3 三大套件

- 1.2.4 架構服務過程

- 1.2.5 常用的exporter

-

-

-

- 2 部署4個exporter

-

-

- 2.1 部署kube-state-metrics

-

-

-

-

- 2.1.1 準備docker鏡像

- 2.1.2 準備rbac資源清單

- 2.1.3 準備Dp資源清單

- 2.1.4 應用資源配置清單

-

-

-

-

- 2.2 部署node-exporter

-

-

-

-

- 2.2.1 準備docker鏡像

- 2.2.2 準備ds資源清單

- 2.2.3 應用資源配置清單:

-

-

-

-

- 2.3 部署cadvisor

-

-

-

-

- 2.3.1 準備docker鏡像

- 2.3.2 準備ds資源清單

- 2.3.3 應用資源配置清單:

-

-

-

-

- 2.4 部署blackbox-exporter

-

-

-

-

- 2.4.1 準備docker鏡像

- 2.4.2 準備cm資源清單

- 2.4.3 準備dp資源清單

- 2.4.4 準備svc資源清單

- 2.4.5 準備ingress資源清單

- 2.4.6 添加域名解析

- 2.4.7 應用資源配置清單

- 2.4.8 通路域名測試

-

-

-

- 3 部署prometheus server

-

-

- 3.1 準備prometheus server環境

-

-

-

-

- 3.1.1 準備docker鏡像

- 3.1.2 準備rbac資源清單

- 3.1.3 準備dp資源清單

- 3.1.4 準備svc資源清單

- 3.1.5 準備ingress資源清單

- 3.1.6 添加域名解析

-

-

-

-

- 3.2 部署prometheus server

-

-

-

-

- 3.2.1 準備目錄和證書

- 3.2.2 建立prometheus配置檔案

- 3.2.3 應用資源配置清單

- 3.2.4 浏覽器驗證

-

-

-

- 4 使服務能被prometheus自動監控

-

-

- 4.1 讓traefik能被自動監控

-

-

-

-

- 4.1.1 修改traefik的yaml

- 4.1.2 應用配置檢視

-

-

-

-

- 4.2 用blackbox檢測TCP/HTTP服務狀态

-

-

-

-

- 4.2.1 被檢測服務準備

- 4.2.2 添加tcp的annotation

- 4.2.3 添加http的annotation

-

-

-

-

- 4.3 添加監控jvm資訊

-

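1 Prometheus background

Because of the particular nature of Docker containers, traditional Zabbix cannot effectively monitor the state of the containers inside a k8s cluster, so Prometheus is used instead.

Prometheus official site: https://prometheus.io

1.1 Features of Prometheus

- A multi-dimensional data model, stored in a time-series database (TSDB) rather than in MySQL.
- A flexible query language, PromQL.
- No dependence on distributed storage; each server node is autonomous.
- Time-series data is collected mainly by actively pulling it over HTTP.
- Data pushed to an intermediary gateway can also be collected through the Pushgateway.
- Targets are found through service discovery or static configuration.
- Many kinds of graphs and dashboards are supported, e.g. Grafana.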

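1.2 Basic principles

1.2.1 How it works

Prometheus periodically scrapes the state of the monitored components through the HTTP endpoints exposed by various exporters. Any component that provides such an HTTP endpoint can be brought under monitoring.

No SDK or other integration work is needed, which makes Prometheus well suited to monitoring virtualized environments such as VMs, Docker and Kubernetes.

Most components commonly used at internet companies already have ready-made exporters, for example Nginx, MySQL and Linux system information.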
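To make "an HTTP endpoint exposed by an exporter" concrete: the endpoint (usually `/metrics`) returns plain text in the Prometheus text exposition format, one sample per line, optionally preceded by `# HELP` and `# TYPE` comments. The sketch below is only an illustration of that format, not a real exporter: it prints a payload with hypothetical metrics named `demo_up` and `demo_requests_total`, and reads a sample value back with awk.

```bash
# Sketch of the Prometheus text exposition format.
# The metric names and labels below are hypothetical examples.

# Print a payload the way an exporter would return it from /metrics.
emit_demo_metrics() {
  printf '%s\n' \
    '# HELP demo_up Whether the demo service is up (1 = up, 0 = down).' \
    '# TYPE demo_up gauge' \
    'demo_up{service="demo"} 1' \
    '# HELP demo_requests_total Requests handled since start.' \
    '# TYPE demo_requests_total counter' \
    'demo_requests_total{service="demo"} 42'
}

# Print the value of the first sample whose metric name equals $1.
# Comment lines are skipped; the value is the last whitespace-separated field.
sample_value() {
  awk -v name="$1" '
    /^#/ { next }
    {
      metric = $1
      sub(/[{].*/, "", metric)   # drop the label set, keep the bare name
      if (metric == name) { print $NF; exit }
    }'
}
```

Usage: `emit_demo_metrics | sample_value demo_requests_total` prints `42`. A real exporter serves the same kind of text over HTTP, and Prometheus parses it on every scrape.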

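1.2.3 The three main components

- Server: collects and stores the data and provides the PromQL query language.
- Alertmanager: the alert manager, used to send alerts.
- Push Gateway: an intermediary gateway to which short-lived jobs can actively push metrics.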

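1.2.4 How the architecture works

- The Prometheus daemon periodically scrapes metrics from its targets. Each target must expose an HTTP endpoint for it to scrape. Targets can be specified through the configuration file, text files, Zookeeper, DNS SRV lookups and other methods.
- The Pushgateway lets clients actively push metrics to it, while Prometheus simply scrapes the gateway on its schedule. This suits one-off, short-lived services.
- Prometheus stores all scraped data in its TSDB, cleans up and aggregates the data according to rules, and stores the results in new time series.
- Prometheus visualizes the collected data through PromQL and other APIs. Graphs can be built with Grafana, Promdash and similar tools, and Prometheus also offers an HTTP query API for customizing the output.
- Alertmanager is an alerting component independent of Prometheus. Alert conditions are written as Prometheus queries, which makes alerting very flexible.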
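The Pushgateway path described above can be sketched as follows. A Pushgateway accepts text-format samples over HTTP on `/metrics/job/<job_name>` (default port 9091), and Prometheus then scrapes the gateway like any other target. This tutorial does not deploy a Pushgateway; the host `pushgateway.example.com:9091`, the job name and the metric name used below are hypothetical.

```bash
# Sketch: push one sample from a short-lived job to a Pushgateway.
# Host, job and metric names in the examples are hypothetical.

# Build the grouping URL for a job: http://<host:port>/metrics/job/<job>
push_url() {
  local gateway="$1" job="$2"
  printf 'http://%s/metrics/job/%s\n' "$gateway" "$job"
}

# Push "<metric> <value>" in the text exposition format.
# --data-binary keeps the trailing newline that the text format requires.
push_metric() {
  local gateway="$1" job="$2" metric="$3" value="$4"
  printf '%s %s\n' "$metric" "$value" |
    curl -fsS --data-binary @- "$(push_url "$gateway" "$job")"
}
```

Usage (needs a reachable Pushgateway): `push_metric pushgateway.example.com:9091 db_backup backup_last_success_timestamp_seconds "$(date +%s)"`.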

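1.2.5 Common exporters

Unlike Zabbix, Prometheus has no agent; it uses exporters tailored to different services.

Typically, four exporters are used to monitor a k8s cluster and its nodes and pods:

- kube-state-metrics: collects state information about the cluster's objects (Deployments, Pods, Nodes and so on) from the k8s API server
- node-exporter: collects information about the k8s nodes
- cadvisor: collects resource usage inside the Docker containers of the cluster
- blackbox-exporter: checks whether the services in the cluster's Docker containers are alive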

2 Deploying the 4 exporters

The usual routine: pull the Docker image, prepare the manifests, then apply them.

2.1 Deploying kube-state-metrics

2.1.1 Prepare the Docker image

```bash
docker pull quay.io/coreos/kube-state-metrics:v1.5.0
docker tag 91599517197a harbor.zq.com/public/kube-state-metrics:v1.5.0
docker push harbor.zq.com/public/kube-state-metrics:v1.5.0
```

2.1.2 Prepare the RBAC manifest

Prepare the directory, then write the manifest:

```bash
mkdir /data/k8s-yaml/kube-state-metrics
cd /data/k8s-yaml/kube-state-metrics
cat >rbac.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs:
  - list
  - watch
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - daemonsets
  - deployments
  - replicasets
  verbs:
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - statefulsets
  verbs:
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - cronjobs
  - jobs
  verbs:
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system
EOF
```

2.1.3 Prepare the Deployment manifest

```bash
cat >dp.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "2"
  labels:
    grafanak8sapp: "true"
    app: kube-state-metrics
  name: kube-state-metrics
  namespace: kube-system
spec:
  selector:
    matchLabels:
      grafanak8sapp: "true"
      app: kube-state-metrics
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        grafanak8sapp: "true"
        app: kube-state-metrics
    spec:
      containers:
      - name: kube-state-metrics
        image: harbor.zq.com/public/kube-state-metrics:v1.5.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          name: http-metrics
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 5
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
      serviceAccountName: kube-state-metrics
EOF
```

2.1.4 Apply the manifests

Run on any node:

```bash
kubectl apply -f http://k8s-yaml.zq.com/kube-state-metrics/rbac.yaml
kubectl apply -f http://k8s-yaml.zq.com/kube-state-metrics/dp.yaml
```

Verify:

```bash
kubectl get pod -n kube-system -o wide|grep kube-state-metrics
# Use the pod IP from the output above (172.7.21.4 in this environment)
curl http://172.7.21.4:8080/healthz
# ok
```

A response of `ok` means kube-state-metrics is running successfully.
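Right after `kubectl apply` the pod may not be ready yet, so a single `curl` can fail. A small retry loop that polls an endpoint until it answers `ok` is sketched below; the function names, the default of 12 tries and the 5-second interval are illustrative assumptions, and `fetch` is a separate function so it can be swapped out.

```bash
# Sketch: poll a health endpoint until it answers "ok" or retries run out.

# Fetch a URL body; -f makes HTTP errors (4xx/5xx) count as failures.
fetch() {
  curl -fsS --max-time 5 "$1"
}

# wait_for_ok URL [tries] [interval_seconds]
# Returns 0 as soon as the body is exactly "ok", 1 after all tries fail.
wait_for_ok() {
  local url="$1" tries="${2:-12}" interval="${3:-5}" i body
  for (( i = 1; i <= tries; i++ )); do
    body="$(fetch "$url" 2>/dev/null)"
    if [ "$body" = "ok" ]; then
      echo "healthy after $i attempt(s): $url"
      return 0
    fi
    # Do not sleep after the last attempt.
    (( i < tries )) && sleep "$interval"
  done
  echo "not healthy after $tries attempt(s): $url" >&2
  return 1
}
```

Usage: `wait_for_ok http://172.7.21.4:8080/healthz`, with the pod IP taken from `kubectl get pod -o wide`.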

2.2 Deploying node-exporter

Because node-exporter monitors nodes, one instance must run on every node, so a DaemonSet controller is used.

2.2.1 Prepare the Docker image

```bash
docker pull prom/node-exporter:v0.15.0
docker tag 12d51ffa2b22 harbor.zq.com/public/node-exporter:v0.15.0
docker push harbor.zq.com/public/node-exporter:v0.15.0
```

2.2.2 Prepare the DaemonSet manifest

```bash
mkdir /data/k8s-yaml/node-exporter
cd /data/k8s-yaml/node-exporter
cat >ds.yaml <<'EOF'
kind: DaemonSet
apiVersion: extensions/v1beta1
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    daemon: "node-exporter"
    grafanak8sapp: "true"
spec:
  selector:
    matchLabels:
      daemon: "node-exporter"
      grafanak8sapp: "true"
  template:
    metadata:
      name: node-exporter
      labels:
        daemon: "node-exporter"
        grafanak8sapp: "true"
    spec:
      volumes:
      - name: proc
        hostPath:
          path: /proc
          type: ""
      - name: sys
        hostPath:
          path: /sys
          type: ""
      containers:
      - name: node-exporter
        image: harbor.zq.com/public/node-exporter:v0.15.0
        imagePullPolicy: IfNotPresent
        args:
        - --path.procfs=/host_proc
        - --path.sysfs=/host_sys
        ports:
        - name: node-exporter
          hostPort: 9100
          containerPort: 9100
          protocol: TCP
        volumeMounts:
        - name: sys
          readOnly: true
          mountPath: /host_sys
        - name: proc
          readOnly: true
          mountPath: /host_proc
      hostNetwork: true
EOF
```

The main purpose of this manifest is to mount the host's `/proc` and `/sys` directories into the container, so that the container can read the node's host information.
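node-exporter does not hard-code `/proc` and `/sys`: the `--path.procfs` and `--path.sysfs` flags tell it where to read them, which is why the DaemonSet mounts the host directories at `/host_proc` and `/host_sys` and passes those paths. The same idea in miniature, as a hypothetical helper (not part of node-exporter) that reads the load average from a configurable procfs root:

```bash
# read_loadavg [PROCFS_ROOT]
# Print the 1-minute load average from <PROCFS_ROOT>/loadavg (default /proc).
# Inside the node-exporter pod the host's /proc is mounted at /host_proc,
# so `read_loadavg /host_proc` would read the host's file, like --path.procfs.
read_loadavg() {
  local procfs="${1:-/proc}"
  awk '{ print $1 }' "$procfs/loadavg"
}
```

Pointing the helper at a different root changes which file it reads, without touching the code.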

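2.2.3 Apply the manifest

Run on any node:

```bash
kubectl apply -f http://k8s-yaml.zq.com/node-exporter/ds.yaml
kubectl get pod -n kube-system -o wide|grep node-exporter
```

2.3 Deploying cadvisor

2.3.1 Prepare the Docker image

The tag must match the image referenced in the DaemonSet manifest (`v0.28.3`):

```bash
docker pull google/cadvisor:v0.28.3
docker tag 75f88e3ec333 harbor.zq.com/public/cadvisor:v0.28.3
docker push harbor.zq.com/public/cadvisor:v0.28.3
```

2.3.2 Prepare the DaemonSet manifest

```bash
mkdir /data/k8s-yaml/cadvisor
cd /data/k8s-yaml/cadvisor
```

cadvisor needs to collect the pod information on every node, so it also runs as a DaemonSet.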

```bash
cat >ds.yaml <<'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: cadvisor
  namespace: kube-system
  labels:
    app: cadvisor
spec:
  selector:
    matchLabels:
      name: cadvisor
  template:
    metadata:
      labels:
        name: cadvisor
    spec:
      hostNetwork: true
      #------ pod tolerations work with node taints to control scheduling ------
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      #-------------------------------------
      containers:
      - name: cadvisor
        image: harbor.zq.com/public/cadvisor:v0.28.3
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: rootfs
          mountPath: /rootfs
          readOnly: true
        - name: var-run
          mountPath: /var/run
        - name: sys
          mountPath: /sys
          readOnly: true
        - name: docker
          mountPath: /var/lib/docker
          readOnly: true
        ports:
        - name: http
          containerPort: 4194
          protocol: TCP
        readinessProbe:
          tcpSocket:
            port: 4194
          initialDelaySeconds: 5
          periodSeconds: 10
        args:
        - --housekeeping_interval=10s
        - --port=4194
      terminationGracePeriodSeconds: 30
      volumes:
      - name: rootfs
        hostPath:
          path: /
      - name: var-run
        hostPath:
          path: /var/run
      - name: sys
        hostPath:
          path: /sys
      - name: docker
        hostPath:
          # Docker's data directory on the hosts in this environment
          path: /data/docker
EOF
```

2.3.3 Apply the manifest

Before applying the manifest, create the following symlink on every node; otherwise the service may fail:

```bash
mount -o remount,rw /sys/fs/cgroup/
ln -s /sys/fs/cgroup/cpu,cpuacct /sys/fs/cgroup/cpuacct,cpu
```

Apply the manifest:

```bash
kubectl apply -f http://k8s-yaml.zq.com/cadvisor/ds.yaml
```

Check:

```bash
kubectl -n kube-system get pod -o wide|grep cadvisor
```

2.4 Deploying blackbox-exporter

2.4.1 Prepare the Docker image

```bash
docker pull prom/blackbox-exporter:v0.15.1
docker tag 81b70b6158be harbor.zq.com/public/blackbox-exporter:v0.15.1
docker push harbor.zq.com/public/blackbox-exporter:v0.15.1
```

2.4.2 Prepare the ConfigMap manifest

```bash
mkdir /data/k8s-yaml/blackbox-exporter
cd /data/k8s-yaml/blackbox-exporter
cat >cm.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: kube-system
data:
  blackbox.yml: |-
    modules:
      http_2xx:
        prober: http
        timeout: 2s
        http:
          valid_http_versions: ["HTTP/1.1", "HTTP/2"]
          valid_status_codes: [200,301,302]
          method: GET
          preferred_ip_protocol: "ip4"
      tcp_connect:
        prober: tcp
        timeout: 2s
EOF
```

2.4.3 Prepare the Deployment manifest

```bash
cat >dp.yaml <<'EOF'
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: blackbox-exporter
  namespace: kube-system
  labels:
    app: blackbox-exporter
  annotations:
    # annotation values must be strings, so the number is quoted
    deployment.kubernetes.io/revision: "1"
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blackbox-exporter
  template:
    metadata:
      labels:
        app: blackbox-exporter
    spec:
      volumes:
      - name: config
        configMap:
          name: blackbox-exporter
          defaultMode: 420
      containers:
      - name: blackbox-exporter
        image: harbor.zq.com/public/blackbox-exporter:v0.15.1
        imagePullPolicy: IfNotPresent
        args:
        - --config.file=/etc/blackbox_exporter/blackbox.yml
        - --log.level=info
        - --web.listen-address=:9115
        ports:
        - name: blackbox-port
          containerPort: 9115
          protocol: TCP
        resources:
          limits:
            cpu: 200m
            memory: 256Mi
          requests:
            cpu: 100m
            memory: 50Mi
        volumeMounts:
        - name: config
          mountPath: /etc/blackbox_exporter
        readinessProbe:
          tcpSocket:
            port: 9115
          initialDelaySeconds: 5
          timeoutSeconds: 5
          periodSeconds: 10
          successThreshold: 1
          failureThreshold: 3
EOF
```

2.4.4 Prepare the Service manifest

```bash
cat >svc.yaml <<'EOF'
kind: Service
apiVersion: v1
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  selector:
    app: blackbox-exporter
  ports:
  - name: blackbox-port
    protocol: TCP
    port: 9115
EOF
```

2.4.5 Prepare the Ingress manifest

```bash
cat >ingress.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  rules:
  - host: blackbox.zq.com
    http:
      paths:
      - path: /
        backend:
          serviceName: blackbox-exporter
          servicePort: blackbox-port
EOF
```

2.4.6 Add the DNS record

This uses a domain name, so add a DNS record for it:

```bash
vi /var/named/zq.com.zone
```

Add the record:

```
blackbox A 10.4.7.10
```

```bash
systemctl restart named
```

2.4.7 Apply the manifests

```bash
kubectl apply -f http://k8s-yaml.zq.com/blackbox-exporter/cm.yaml
kubectl apply -f http://k8s-yaml.zq.com/blackbox-exporter/dp.yaml
kubectl apply -f http://k8s-yaml.zq.com/blackbox-exporter/svc.yaml
kubectl apply -f http://k8s-yaml.zq.com/blackbox-exporter/ingress.yaml
```

2.4.8 Test access via the domain

Visit http://blackbox.zq.com. If the blackbox exporter web page appears, blackbox is running successfully.
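Besides the web page, blackbox can be exercised directly: Prometheus (or you) calls its `/probe` endpoint with a `module` defined in the ConfigMap (`http_2xx` or `tcp_connect`) and a `target`. The sketch below builds such a URL with hypothetical helper functions; percent-encoding the target matters for HTTP targets that carry their own query string. Only ASCII input is handled, and the target IP in the example is made up.

```bash
# urlencode STRING: percent-encode everything except unreserved characters.
# ASCII only: multi-byte characters are not encoded correctly.
urlencode() {
  local s="$1" out="" c i
  for (( i = 0; i < ${#s}; i++ )); do
    c="${s:i:1}"
    case "$c" in
      [[:alnum:].~_-]) out+="$c" ;;
      *) out+="$(printf '%%%02X' "'$c")" ;;   # e.g. ':' -> %3A
    esac
  done
  printf '%s\n' "$out"
}

# probe_url BLACKBOX_BASE_URL MODULE TARGET
# Build the /probe URL that asks blackbox to check TARGET with MODULE.
probe_url() {
  printf '%s/probe?module=%s&target=%s\n' \
    "${1%/}" "$(urlencode "$2")" "$(urlencode "$3")"
}
```

Usage: `curl -s "$(probe_url http://blackbox.zq.com tcp_connect 172.7.21.5:20880)"` returns the probe's metrics, where `probe_success 1` means the port answered.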

3 Deploying the Prometheus server

3.1 Preparing the Prometheus server environment

3.1.1 Prepare the Docker image

```bash
docker pull prom/prometheus:v2.14.0
docker tag 7317640d555e harbor.zq.com/infra/prometheus:v2.14.0
docker push harbor.zq.com/infra/prometheus:v2.14.0
```

3.1.2 Prepare the RBAC manifest

```bash
mkdir /data/k8s-yaml/prometheus-server
cd /data/k8s-yaml/prometheus-server
cat >rbac.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
  namespace: infra
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/metrics
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: infra
EOF
```

3.1.3 Prepare the Deployment manifest

Adding `--web.enable-lifecycle` enables hot reloading of the configuration file over HTTP, so Prometheus does not need to be restarted after the file changes. The reload call is:

```bash
curl -X POST http://localhost:9090/-/reload
```

`--storage.tsdb.min-block-duration=10m`: keep only 10 minutes of data in memory.

`--storage.tsdb.retention=72h`: keep 72 hours of data. (Since Prometheus 2.7 this flag is deprecated in favour of `--storage.tsdb.retention.time`, but v2.14 still accepts it.)
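With `--web.enable-lifecycle` set, configuration changes can be picked up without restarting the pod. A small wrapper that sends the reload request and reports failure is sketched below; the function names are illustrative, and the base URL is up to you (from outside the cluster, the Ingress host `http://prometheus.zq.com` set up in this section is a natural choice). Prometheus should answer with an HTTP error status when the lifecycle API is disabled or the new configuration fails to load, and `curl -f` turns that into a non-zero exit status.

```bash
# Sketch: ask Prometheus to reload its configuration via the lifecycle API.
# Function names are illustrative; requires --web.enable-lifecycle.

# POST with an empty body; -f turns HTTP error statuses into a non-zero exit.
http_post() {
  curl -fsS -X POST --max-time 10 "$1"
}

# reload_prometheus BASE_URL
reload_prometheus() {
  local base="${1%/}"   # strip one trailing slash to avoid "//-/reload"
  if http_post "$base/-/reload"; then
    echo "reload accepted: $base"
  else
    echo "reload failed for $base (lifecycle API disabled or invalid config?)" >&2
    return 1
  fi
}
```

Usage: after editing `/data/nfs-volume/prometheus/etc/prometheus.yml`, run `reload_prometheus http://prometheus.zq.com`, then check Status -> Configuration in the web UI.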

```bash
cat >dp.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "5"
  labels:
    name: prometheus
  name: prometheus
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: prometheus
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - name: prometheus
        image: harbor.zq.com/infra/prometheus:v2.14.0
        imagePullPolicy: IfNotPresent
        command:
        - /bin/prometheus
        args:
        - --config.file=/data/etc/prometheus.yml
        - --storage.tsdb.path=/data/prom-db
        - --storage.tsdb.min-block-duration=10m
        - --storage.tsdb.retention=72h
        - --web.enable-lifecycle
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: /data
          name: data
        resources:
          requests:
            cpu: "1000m"
            memory: "1.5Gi"
          limits:
            cpu: "2000m"
            memory: "3Gi"
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      serviceAccountName: prometheus
      volumes:
      - name: data
        nfs:
          server: hdss7-200
          path: /data/nfs-volume/prometheus
EOF
```

3.1.4 Prepare the Service manifest

```bash
cat >svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: infra
spec:
  ports:
  - port: 9090
    protocol: TCP
    targetPort: 9090
  selector:
    app: prometheus
EOF
```

3.1.5 Prepare the Ingress manifest

```bash
cat >ingress.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: traefik
  name: prometheus
  namespace: infra
spec:
  rules:
  - host: prometheus.zq.com
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus
          servicePort: 9090
EOF
```

3.1.6 Add the DNS record

This uses the domain `prometheus.zq.com`, so add a DNS record for it in the `zq.com` zone:

```bash
vi /var/named/zq.com.zone
```

Add the record:

```
prometheus A 10.4.7.10
```

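```bash
systemctl restart named
```

3.2 Deploying the Prometheus server

3.2.1 Prepare directories and certificates

```bash
mkdir -p /data/nfs-volume/prometheus/etc
mkdir -p /data/nfs-volume/prometheus/prom-db
cd /data/nfs-volume/prometheus/etc/
# Copy the certificates referenced in the configuration file:
cp /opt/certs/ca.pem ./
cp /opt/certs/client.pem ./
cp /opt/certs/client-key.pem ./
```

3.2.2 Create the Prometheus configuration file

About the configuration file: this is a general-purpose configuration. Apart from the first job, `etcd`, which is configured statically, the other 8 jobs all use automatic discovery. So once the `etcd` job is adapted to your environment, the file can be used directly in production.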

```bash
cat >/data/nfs-volume/prometheus/etc/prometheus.yml <<'EOF'
global:
  scrape_interval: 15s
  evaluation_interval: 15s
scrape_configs:
- job_name: 'etcd'
  tls_config:
    ca_file: /data/etc/ca.pem
    cert_file: /data/etc/client.pem
    key_file: /data/etc/client-key.pem
  scheme: https
  static_configs:
  - targets:
    - '10.4.7.12:2379'
    - '10.4.7.21:2379'
    - '10.4.7.22:2379'
- job_name: 'kubernetes-apiservers'
  kubernetes_sd_configs:
  - role: endpoints
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: default;kubernetes;https
- job_name: 'kubernetes-pods'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'kubernetes-kubelet'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:10255
- job_name: 'kubernetes-cadvisor'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:4194
- job_name: 'kubernetes-kube-state'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
  - source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]
    regex: .*true.*
    action: keep
  - source_labels: ['__meta_kubernetes_pod_label_daemon', '__meta_kubernetes_pod_node_name']
    regex: 'node-exporter;(.*)'
    action: replace
    target_label: nodename
- job_name: 'blackbox_http_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [http_2xx]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: http
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port, __meta_kubernetes_pod_annotation_blackbox_path]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+);(.+)
    replacement: $1:$2$3
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'blackbox_tcp_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [tcp_connect]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: tcp
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'traefik'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scheme]
    action: keep
    regex: traefik
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
EOF
```

3.2.3 Apply the manifests

```bash
kubectl apply -f http://k8s-yaml.zq.com/prometheus-server/rbac.yaml
kubectl apply -f http://k8s-yaml.zq.com/prometheus-server/dp.yaml
kubectl apply -f http://k8s-yaml.zq.com/prometheus-server/svc.yaml
kubectl apply -f http://k8s-yaml.zq.com/prometheus-server/ingress.yaml
```

3.2.4 Verify in the browser

Visit http://prometheus.zq.com. If the page loads, Prometheus has started successfully.

Click Status -> Configuration to see our configuration file.

Click Status -> Targets to see the job names configured in prometheus.yml. These targets basically cover our data-collection needs.

Five of the jobs (etcd, kubernetes-apiservers, kubernetes-kubelet, kubernetes-cadvisor and kubernetes-kube-state) have already discovered their targets and are collecting data.

Next, the services behind the remaining 4 job names need to be brought under monitoring.

This is done by adding annotations to the services whose data should be collected.
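The annotation names used from here on are not arbitrary. Kubernetes service discovery exposes every pod annotation to relabeling as a `__meta_kubernetes_pod_annotation_<name>` label, with characters that are not valid in label names (anything other than letters, digits and `_`) replaced by underscores. That is why a pod annotated `prometheus_io_scrape: "true"` is matched by the `__meta_kubernetes_pod_annotation_prometheus_io_scrape` rule of the `kubernetes-pods` job. The hypothetical helper below reproduces that mapping; it also shows that the common `prometheus.io/scrape` spelling would map to the same label.

```bash
# meta_label_for_annotation ANNOTATION_NAME
# Mimic how Prometheus' Kubernetes SD turns a pod annotation name into a meta
# label: add the prefix and replace every char outside [A-Za-z0-9_] with '_'.
meta_label_for_annotation() {
  local sanitized
  # printf '%s' (no newline) so tr does not turn a trailing newline into '_'
  sanitized="$(printf '%s' "$1" | tr -c 'A-Za-z0-9_' '_')"
  printf '__meta_kubernetes_pod_annotation_%s\n' "$sanitized"
}
```

Usage: `meta_label_for_annotation blackbox_port` prints `__meta_kubernetes_pod_annotation_blackbox_port`, the label the blackbox jobs read.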

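4 Letting Prometheus monitor services automatically

4.1 Monitoring traefik automatically

4.1.1 Modify the traefik YAML

Edit traefik's YAML file and add an `annotations` section at the same level as `labels`:

```bash
vim /data/k8s-yaml/traefik/ds.yaml
```

```yaml
........
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
      #-------- added --------
      annotations:
        prometheus_io_scheme: "traefik"
        prometheus_io_path: "/metrics"
        prometheus_io_port: "8080"
      #-------- end of addition --------
    spec:
      serviceAccountName: traefik-ingress-controller
........
```

4.1.2 Apply and verify

Re-apply the configuration on any node:

```bash
kubectl delete -f http://k8s-yaml.zq.com/traefik/ds.yaml
kubectl apply -f http://k8s-yaml.zq.com/traefik/ds.yaml
```

After the pods have restarted, check in Prometheus whether data is now being collected from traefik.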
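For traefik, the annotations feed the `__address__` rewrite in the `traefik` job (the `kubernetes-pods` job uses the same rule). Prometheus joins the `source_labels` values with `;`, matches the fully anchored regex `([^:]+)(?::\d+)?;(\d+)` and writes `$1:$2` into `__address__`: it keeps the pod IP and swaps in the port from `prometheus_io_port`. The sketch below reproduces this rule with `sed -E`; POSIX ERE has no `(?:...)` group, so the group numbers shift, and the pod IP in the examples is hypothetical.

```bash
# rewrite_address ADDRESS PORT_ANNOTATION
# Mirror of: source_labels [__address__, ..._prometheus_io_port]
#            regex ([^:]+)(?::\d+)?;(\d+)  replacement $1:$2
# In ERE the optional ":port" becomes group 2, the annotation port group 3.
rewrite_address() {
  local out
  out="$(printf '%s;%s\n' "$1" "$2" |
    sed -nE 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/p')"
  # No match: a 'replace' action leaves __address__ unchanged.
  printf '%s\n' "${out:-$1}"
}
```

Usage: `rewrite_address 172.7.21.5:80 8080` prints `172.7.21.5:8080`, so Prometheus scrapes the pod on port 8080 at the `/metrics` path taken from `prometheus_io_path`.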

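4.2 Checking TCP/HTTP service status with blackbox

Blackbox checks the liveness of the services inside containers, i.e. it health-checks their ports, using one of two methods: TCP or HTTP.

Use HTTP whenever possible; fall back to TCP only for services that do not provide an HTTP endpoint.

4.2.1 Prepare the services to check

The dubbo services in the test environment are used for this demonstration; other environments work the same way.

- In the dashboard, start apollo-portal and the apollo services in the test namespace
- dubbo-demo-service uses the TCP annotations
- dubbo-demo-consumer uses the HTTP annotations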
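Both blackbox jobs in prometheus.yml (`blackbox_tcp_pod_probe` and `blackbox_http_pod_probe`) build the probe target from these annotations. A pod is kept only if its `blackbox_scheme` matches the job; the pod IP from `__address__` is then combined with `blackbox_port` (and, for HTTP, `blackbox_path`) into `__param_target`, and `__address__` is pointed at `blackbox-exporter.kube-system:9115`, so Prometheus ends up calling blackbox's `/probe` endpoint with that target. The hypothetical helper below mirrors only the target-building step with `sed -E` (group numbers shift because ERE lacks `(?:...)`); the pod IPs in the examples are made up.

```bash
# probe_target ADDRESS SCHEME PORT [PATH]
# Mirrors the relabel rules of the two blackbox jobs:
#   tcp : ([^:]+)(?::\d+)?;(\d+)       -> $1:$2
#   http: ([^:]+)(?::\d+)?;(\d+);(.+)  -> $1:$2$3
# Prints the resulting __param_target, or nothing if the regex does not match.
probe_target() {
  local address="$1" scheme="$2" port="$3" path="${4:-}"
  case "$scheme" in
    tcp)
      printf '%s;%s\n' "$address" "$port" |
        sed -nE 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/p' ;;
    http)
      printf '%s;%s;%s\n' "$address" "$port" "$path" |
        sed -nE 's/^([^:]+)(:[0-9]+)?;([0-9]+);(.+)$/\1:\3\4/p' ;;
    *)
      # Any other scheme fails the 'keep' rule: the pod is not probed.
      return 1 ;;
  esac
}
```

Usage: `probe_target 172.7.21.6 http 8080 '/hello?name=health'` prints `172.7.21.6:8080/hello?name=health`, which also becomes the `instance` label of the probe.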

4.2.2 Add the TCP annotations

Once both services are up, first add the TCP annotations to the dubbo-demo-service resource:

```bash
vim /data/k8s-yaml/test/dubbo-demo-server/dp.yaml
```

```yaml
......
spec:
  ......
  template:
    metadata:
      labels:
        app: dubbo-demo-service
        name: dubbo-demo-service
      #-------- added --------
      annotations:
        blackbox_port: "20880"
        blackbox_scheme: "tcp"
      #-------- end of addition --------
    spec:
      containers:
      - image: harbor.zq.com/app/dubbo-demo-service:apollo_200512_0746
        ......
```

```bash
kubectl delete -f http://k8s-yaml.zq.com/test/dubbo-demo-server/dp.yaml
kubectl apply -f http://k8s-yaml.zq.com/test/dubbo-demo-server/dp.yaml
```

Check in the browser at http://blackbox.zq.com/ and http://prometheus.zq.com/targets: TCP port 20880 of the running dubbo-demo-service has been discovered and is being monitored.

4.2.3 Add the HTTP annotations

Next, add the HTTP annotations to the dubbo-demo-consumer resource:

```bash
vim /data/k8s-yaml/test/dubbo-demo-consumer/dp.yaml
```

```yaml
spec:
  ......
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
      #-------- added --------
      annotations:
        blackbox_path: "/hello?name=health"
        blackbox_port: "8080"
        blackbox_scheme: "http"
      #-------- end of addition --------
    spec:
      containers:
      - name: dubbo-demo-consumer
        ......
```

```bash
kubectl delete -f http://k8s-yaml.zq.com/test/dubbo-demo-consumer/dp.yaml
kubectl apply -f http://k8s-yaml.zq.com/test/dubbo-demo-consumer/dp.yaml
```

4.3 Monitoring JVM information

Add the following annotations to both dubbo-demo-service and dubbo-demo-consumer so that the JVM information in their pods is monitored:

```bash
vim /data/k8s-yaml/test/dubbo-demo-server/dp.yaml
vim /data/k8s-yaml/test/dubbo-demo-consumer/dp.yaml
```

```yaml
      annotations:
        #.... existing entries omitted ....
        prometheus_io_scrape: "true"
        prometheus_io_port: "12346"
        prometheus_io_path: "/"
```

Port 12346 is the port used by the jmx_javaagent in the dubbo pods' startup command, so it can be used to collect JVM information.

```bash
kubectl apply -f http://k8s-yaml.zq.com/test/dubbo-demo-server/dp.yaml
kubectl apply -f http://k8s-yaml.zq.com/test/dubbo-demo-consumer/dp.yaml
```

At this point, all 9 jobs are collecting data.