标簽

PostgreSQL , perf insight , 等待事件 , 采樣 , 發現問題 , Oracle 相容性

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#%E8%83%8C%E6%99%AF 背景

通常普通的監控會包括系統資源的監控:

cpu

io

記憶體

網絡

等,但是僅憑資源的監控,當問題發生時,如何快速的定位到問題在哪裡?需要更進階的監控:

更進階的監控方法通常是從資料庫本身的特性觸發,但是需要對資料庫具備非常深刻的了解,才能做出好的監控和診斷系統。屬于專家型或叫做經驗型的監控和診斷系統。

《[未完待續] PostgreSQL 一鍵診斷項 - 珍藏級》 《PostgreSQL 實時健康監控 大屏 - 低頻名額 - 珍藏級》 《PostgreSQL 實時健康監控 大屏 - 高頻名額(伺服器) - 珍藏級》 《PostgreSQL 實時健康監控 大屏 - 高頻名額 - 珍藏級》 《PostgreSQL pgmetrics - 多版本、健康監控名額采集、報告》 《PostgreSQL pg_top pgcenter - 實時top類工具》 《PostgreSQL、Greenplum 日常監控 和 維護任務 - 最佳實踐》 《PostgreSQL 如何查找TOP SQL (例如IO消耗最高的SQL) (包含SQL優化内容) - 珍藏級》 《PostgreSQL 鎖等待監控 珍藏級SQL - 誰堵塞了誰》然而資料庫在不斷的演進,經驗型的診斷系統好是好,但是不通用,有沒有更加通用,有效的發現系統問題的方法?

AWS與Oracle perf insight的思路非常不錯,實際上就是等待事件的統計追蹤,作為性能診斷的方法。

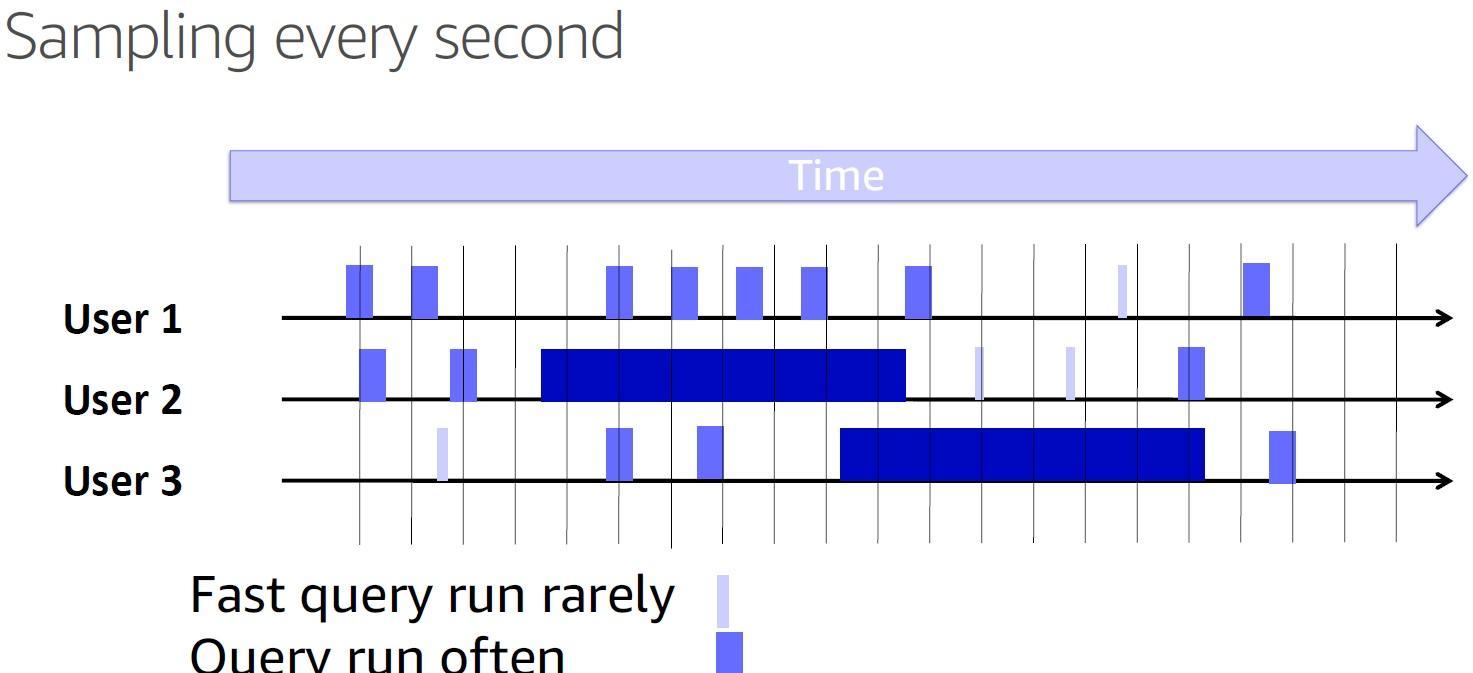

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/USER_PerfInsights.html 《AWS performance insight》簡單來說就是對系統不停的打點,例如每秒一個采樣,僅記錄這一秒資料庫活躍的會話(包括等待中的會話),等待事件,QUERY,時間,使用者,資料庫。這幾個名額。

活躍度會話,不管是在耗費CPU,還是在等待(鎖,IO)或者其他,實際上都是占用了資源的。可以算出平均的活躍會話(例如10秒的平均值,5秒的平均值)(avg active sessions)。

這個avg active sessions是一個值,這個值和資料庫執行個體的CPU個數進行比較,就可以衡量出系統是否存在瓶頸(當avg active sessions超過CPU個數時,說明存在瓶頸)。

當某個時間視窗存在瓶頸,瓶頸在哪裡,則可以通過這個時間視窗内的打點明細,進行統計。等待事件,QUERY,使用者,資料庫。

PostgreSQL打點的方法也很多:

1、(推薦)通過pg_stat_activity 記憶體中的動态視圖擷取,每秒取一次ACTIVE的内容(例如:會話ID,等待事件,QUERY,時間,使用者,資料庫)。

https://www.postgresql.org/docs/11/monitoring-stats.html#MONITORING-STATS-VIEWS2、(不推薦)開啟審計日志,在審計日志中擷取,這個在高并發系統中,不太好用。并且審計日志是在結束時列印,一個QUERY的中間執行過程并不完全是占用CPU或其他資源的,是以審計日志擷取的資訊對于perf insight并沒有什麼效果。

perf insight的入門門檻低,可以擺平很多問題,在出現問題時快速定位到問題SQL,問題的等待事件在哪裡。結合經驗型的監控,可以建構PG非常強大的監控、診斷、優化體系。

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#perf-insight-%E5%AE%9E%E7%8E%B0%E8%AE%B2%E8%A7%A3 perf insight 實作講解

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#%E4%B8%BE%E4%BE%8B1 舉例1

會話1

postgres=# begin;

BEGIN

postgres=# lock table abc in access exclusive mode ;

LOCK TABLE

會話2

postgres=# select * from abc;

從pg_stat_activity擷取狀态,可以看到會話2在等待,會話處于active狀态,這種消耗需要被記錄到avg active session中,用來評估資源消耗名額。

postgres=# select now(),state,datname,usename,wait_event_type,wait_event,query from pg_stat_activity where state in ('active', 'fastpath function call');

now | state | datname | usename | wait_event_type | wait_event | query

-------------------------------+--------+----------+----------+-----------------+------------+--------------------------------------------------------------------------------------------

2019-01-25 21:17:28.540264+08 | active | postgres | postgres | | | select datname,usename,query,state,wait_event_type,wait_event,now() from pg_stat_activity;

2019-01-25 21:17:28.540264+08 | active | postgres | postgres | Lock | relation | select * from abc;

(2 rows)

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#%E4%B8%BE%E4%BE%8B2 舉例2

使用pgbench壓測資料庫,每秒打點,後期進行可視化展示

pgbench -i -s 100

1、壓測隻讀

pgbench -M prepared -n -r -P 1 -c 64 -j 64 -T 300 -S

2、檢視壓測時的活躍會話狀态

postgres=#

select now()::timestamptz(0),state,

datname,usename,wait_event_type,wait_event,query

from pg_stat_activity

where state in

('active', 'fastpath function call')

and pid<>pg_backend_pid();

now | state | datname | usename | wait_event_type | wait_event | query

---------------------+--------+----------+----------+-----------------+------------+-------------------------------------------------------

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | Client | ClientRead | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-25 21:28:52 | active | postgres | postgres | | | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

(46 rows)

3、為了友善統計,可以在本地建表,用于收集pg_stat_activity的内容,在實際的生産中,可以把這個資訊讀走,存到其他地方(例如專用于監控的其他資料庫)。

postgres=# create unlogged table perf_insight as

select now()::timestamptz(0) as ts,

extract(epoch from backend_start)||'.'||pid as sessid,

state,datname,usename,

wait_event_type||'_'||wait_event as waiting ,

query from

pg_stat_activity

where state in

('active', 'fastpath function call')

and pid<>pg_backend_pid();

SELECT 48

4、試着寫入當時pg_stat_activity狀态

postgres=#

insert into perf_insight

select now()::timestamptz(0),

extract(epoch from backend_start)||'.'||pid,

state,datname,

usename,wait_event_type||'_'||wait_event,

query from pg_stat_activity

where state in ('active', 'fastpath function call')

and pid<>pg_backend_pid();

INSERT 0 42

5、使用psql watch,每秒打一個點

postgres=# \watch 1

6、隻讀壓測,壓測結果,130萬QPS

pgbench -M prepared -n -r -P 1 -c 64 -j 64 -T 300 -S

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 300 s

number of transactions actually processed: 390179555

latency average = 0.049 ms

latency stddev = 0.026 ms

tps = 1300555.237752 (including connections establishing)

tps = 1300584.885231 (excluding connections establishing)

statement latencies in milliseconds:

0.001 \set aid random(1, 100000 * :scale)

0.049 SELECT abalance FROM pgbench_accounts WHERE aid = :aid;

7、接下來,開啟一個讀寫壓測,9.4萬TPS(yue 47萬qps)

pgbench -M prepared -n -r -P 1 -c 64 -j 64 -T 300

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 300 s

number of transactions actually processed: 28371829

latency average = 0.677 ms

latency stddev = 0.413 ms

tps = 94569.412707 (including connections establishing)

tps = 94571.934011 (excluding connections establishing)

statement latencies in milliseconds:

0.002 \set aid random(1, 100000 * :scale)

0.001 \set bid random(1, 1 * :scale)

0.001 \set tid random(1, 10 * :scale)

0.001 \set delta random(-5000, 5000)

0.045 BEGIN;

0.108 UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

0.069 SELECT abalance FROM pgbench_accounts WHERE aid = :aid;

0.091 UPDATE pgbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

0.139 UPDATE pgbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

0.068 INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

0.153 END;

8、perf insight 可視化需要的素材

時間、狀态、會話ID、資料庫名、使用者名、等待事件、查詢

當然,我們可以再細化,例如增加會話ID字段,可以針對一個會話來進行展示和統計。

postgres=# \d perf_insight

Unlogged table "public.perf_insight"

Column | Type |

---------+--------------------------------+-

ts | timestamp(0) with time zone | 時間戳

sessid | text | 會話ID

state | text | 狀态

datname | name | 資料庫

usename | name | 使用者

waiting | text | 等待事件

query | text | SQL語句

9、檢視perf insight素材内容

postgres=# select * from perf_insight limit 10;

ts | sessid | state | datname | usename | waiting | query

---------------------+------------------------+--------+----------+----------+--------------------------+----------------------------------------------------------------------

2019-01-26 09:43:28 | 1548467007.4805.32968 | active | postgres | postgres | Lock_transactionid | UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2;

2019-01-26 09:43:28 | 1548467007.47991.32966 | active | postgres | postgres | Client_ClientRead | END;

2019-01-26 09:43:28 | 1548467007.48362.32979 | active | postgres | postgres | Lock_transactionid | UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2;

2019-01-26 09:43:28 | 1548467007.48388.32980 | active | postgres | postgres | Lock_tuple | UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2;

2019-01-26 09:43:28 | 1548467007.48329.32978 | active | postgres | postgres | Lock_transactionid | UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2;

2019-01-26 09:43:28 | 1548467007.48275.32976 | active | postgres | postgres | Lock_tuple | UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2;

2019-01-26 09:43:28 | 1548467007.48107.32970 | active | postgres | postgres | Lock_transactionid | UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2;

2019-01-26 09:43:28 | 1548467007.48243.32975 | active | postgres | postgres | Lock_transactionid | UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2;

2019-01-26 09:43:28 | 1548467007.48417.32981 | active | postgres | postgres | IPC_ProcArrayGroupUpdate | SELECT abalance FROM pgbench_accounts WHERE aid = $1;

2019-01-26 09:43:28 | 1548467007.48448.32982 | active | postgres | postgres | Lock_tuple | UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2;

(10 rows)

10、檢視在這段時間中,有多少種等待事件

postgres=# select distinct waiting from perf_insight ;

waiting

--------------------------

LWLock_wal_insert

LWLock_XidGenLock

Lock_extend

LWLock_ProcArrayLock

Lock_tuple

Lock_transactionid

LWLock_lock_manager

Client_ClientRead

IPC_ProcArrayGroupUpdate

LWLock_buffer_content

IPC_ClogGroupUpdate

LWLock_CLogControlLock

IO_DataFileExtend

(14 rows)

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#perf-insight-%E5%8F%AF%E8%A7%86%E5%8C%96%E7%BB%9F%E8%AE%A1 perf insight 可視化,統計

采集粒度為1秒,可以對n秒的打點求平均值(分不同次元),得到可視化圖形:

1、總avg active sessions ,用于告警。

2、其他次元,用于分析造成性能瓶頸問題的權重:

2.1、等待事件次元(NULL表示無等待,純CPU time) avg active sessions

2.2、query 次元 avg active sessions

2.3、資料庫次元 avg active sessions

2.4、使用者次元 avg active sessions

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#%E5%A6%82%E4%BD%95%E5%88%A4%E6%96%AD%E9%97%AE%E9%A2%98 如何判斷問題:

例如,對于一個64線程的系統:

avg active sessions 在64以下時,可以認為是沒有問題的。

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#1-%E6%80%BB-avg-active-sessions%E7%94%A8%E4%BA%8E%E5%91%8A%E8%AD%A6 1 總 avg active sessions,用于告警。

5秒統計間隔。

select

coalesce(t1.ts, t2.ts) ts,

coalesce(avg_active_sessions,0) avg_active_sessions

from

(

select

to_timestamp((extract(epoch from ts))::int8/5*5) ts,

count(*)/5::float8 avg_active_sessions

from perf_insight

group by 1

) t1

full outer join

(select

generate_series(

to_timestamp((extract(epoch from min(ts)))::int8/5*5),

to_timestamp((extract(epoch from max(ts)))::int8/5*5),

interval '5 s'

) ts

from perf_insight

) t2

on (t1.ts=t2.ts);

ts | avg_active_sessions

------------------------+---------------------

2019-01-26 05:39:20+08 | 14.2

2019-01-26 05:39:25+08 | 30.4

2019-01-26 05:39:30+08 | 35.8

2019-01-26 05:39:35+08 | 41.8

2019-01-26 05:39:40+08 | 38.6

2019-01-26 05:39:45+08 | 38.2

2019-01-26 05:39:50+08 | 34.6

2019-01-26 05:39:55+08 | 35.6

2019-01-26 05:40:00+08 | 42.4

2019-01-26 05:40:05+08 | 36.8

2019-01-26 05:40:10+08 | 36.2

2019-01-26 05:40:15+08 | 39.4

2019-01-26 05:40:20+08 | 40

2019-01-26 05:40:25+08 | 35.8

2019-01-26 05:40:30+08 | 37.2

2019-01-26 05:40:35+08 | 36.4

2019-01-26 05:40:40+08 | 40.6

2019-01-26 05:40:45+08 | 39.2

2019-01-26 05:40:50+08 | 36.6

2019-01-26 05:40:55+08 | 37.4

2019-01-26 05:41:00+08 | 38

2019-01-26 05:41:05+08 | 38.6

2019-01-26 05:41:10+08 | 38.4

2019-01-26 05:41:15+08 | 40.4

2019-01-26 05:41:20+08 | 35.8

2019-01-26 05:41:25+08 | 40.6

2019-01-26 05:41:30+08 | 39.4

2019-01-26 05:41:35+08 | 37.4

2019-01-26 05:41:40+08 | 36.6

2019-01-26 05:41:45+08 | 39.6

2019-01-26 05:41:50+08 | 36.2

2019-01-26 05:41:55+08 | 37.4

2019-01-26 05:42:00+08 | 37.8

2019-01-26 05:42:05+08 | 39

2019-01-26 05:42:10+08 | 36.2

2019-01-26 05:42:15+08 | 37

2019-01-26 05:42:20+08 | 36.4

2019-01-26 05:42:25+08 | 36

2019-01-26 05:42:30+08 | 37.6

2019-01-26 05:42:35+08 | 0

2019-01-26 05:42:40+08 | 0

2019-01-26 05:42:45+08 | 0

2019-01-26 05:42:50+08 | 8.4

2019-01-26 05:42:55+08 | 40.6

2019-01-26 05:43:00+08 | 42.4

2019-01-26 05:43:05+08 | 37.4

2019-01-26 05:43:10+08 | 44.8

2019-01-26 05:43:15+08 | 36.2

2019-01-26 05:43:20+08 | 39.6

2019-01-26 05:43:25+08 | 41.4

2019-01-26 05:43:30+08 | 34.2

2019-01-26 05:43:35+08 | 41.8

2019-01-26 05:43:40+08 | 37.4

2019-01-26 05:43:45+08 | 30.2

2019-01-26 05:43:50+08 | 36.6

2019-01-26 05:43:55+08 | 36

2019-01-26 05:44:00+08 | 33.8

2019-01-26 05:44:05+08 | 37.8

2019-01-26 05:44:10+08 | 39.2

2019-01-26 05:44:15+08 | 36.6

2019-01-26 05:44:20+08 | 39.8

2019-01-26 05:44:25+08 | 35.2

2019-01-26 05:44:30+08 | 35.8

2019-01-26 05:44:35+08 | 42.8

2019-01-26 05:44:40+08 | 40.8

2019-01-26 05:44:45+08 | 39.4

2019-01-26 05:44:50+08 | 40

2019-01-26 05:44:55+08 | 40.2

2019-01-26 05:45:00+08 | 41.2

2019-01-26 05:45:05+08 | 41.6

2019-01-26 05:45:10+08 | 40.6

2019-01-26 05:45:15+08 | 33.8

2019-01-26 05:45:20+08 | 35.8

2019-01-26 05:45:25+08 | 42.2

2019-01-26 05:45:30+08 | 37.8

2019-01-26 05:45:35+08 | 37.6

2019-01-26 05:45:40+08 | 40.2

2019-01-26 05:45:45+08 | 37.4

2019-01-26 05:45:50+08 | 38.2

2019-01-26 05:45:55+08 | 39.6

2019-01-26 05:46:00+08 | 41.6

2019-01-26 05:46:05+08 | 36

2019-01-26 05:46:10+08 | 34.6

2019-01-26 05:46:15+08 | 37.8

2019-01-26 05:46:20+08 | 40.8

2019-01-26 05:46:25+08 | 42

2019-01-26 05:46:30+08 | 36.4

2019-01-26 05:46:35+08 | 44.6

2019-01-26 05:46:40+08 | 38.8

2019-01-26 05:46:45+08 | 35

2019-01-26 05:46:50+08 | 36.2

2019-01-26 05:46:55+08 | 37.2

2019-01-26 05:47:00+08 | 36

2019-01-26 05:47:05+08 | 38.2

2019-01-26 05:47:10+08 | 37.2

2019-01-26 05:47:15+08 | 42.8

2019-01-26 05:47:20+08 | 32

2019-01-26 05:47:25+08 | 41

2019-01-26 05:47:30+08 | 44

2019-01-26 05:47:35+08 | 37.4

2019-01-26 05:47:40+08 | 36.2

2019-01-26 05:47:45+08 | 39

2019-01-26 05:47:50+08 | 27.8

(103 rows)

10秒統計間隔的SQL

select

coalesce(t1.ts,t2.ts) ts,

coalesce(avg_active_sessions,0) avg_active_sessions

from

(

select

to_timestamp((extract(epoch from ts))::int8/10*10) ts,

count(*)/10::float8 avg_active_sessions

from perf_insight

group by 1

) t1

full outer join

(

select

generate_series(

to_timestamp((extract(epoch from min(ts)))::int8/10*10),

to_timestamp((extract(epoch from max(ts)))::int8/10*10),

interval '10 s'

) ts

from perf_insight

) t2

on (t1.ts=t2.ts);

ts | avg_active_sessions

------------------------+---------------------

2019-01-26 05:39:20+08 | 22.3

2019-01-26 05:39:30+08 | 38.8

2019-01-26 05:39:40+08 | 38.4

2019-01-26 05:39:50+08 | 35.1

2019-01-26 05:40:00+08 | 39.6

2019-01-26 05:40:10+08 | 37.8

2019-01-26 05:40:20+08 | 37.9

2019-01-26 05:40:30+08 | 36.8

2019-01-26 05:40:40+08 | 39.9

2019-01-26 05:40:50+08 | 37

2019-01-26 05:41:00+08 | 38.3

2019-01-26 05:41:10+08 | 39.4

2019-01-26 05:41:20+08 | 38.2

2019-01-26 05:41:30+08 | 38.4

2019-01-26 05:41:40+08 | 38.1

2019-01-26 05:41:50+08 | 36.8

2019-01-26 05:42:00+08 | 38.4

2019-01-26 05:42:10+08 | 36.6

2019-01-26 05:42:20+08 | 36.2

2019-01-26 05:42:30+08 | 18.8

2019-01-26 05:42:40+08 | 0

2019-01-26 05:42:50+08 | 24.5

2019-01-26 05:43:00+08 | 39.9

2019-01-26 05:43:10+08 | 40.5

2019-01-26 05:43:20+08 | 40.5

2019-01-26 05:43:30+08 | 38

2019-01-26 05:43:40+08 | 33.8

2019-01-26 05:43:50+08 | 36.3

2019-01-26 05:44:00+08 | 35.8

2019-01-26 05:44:10+08 | 37.9

2019-01-26 05:44:20+08 | 37.5

2019-01-26 05:44:30+08 | 39.3

2019-01-26 05:44:40+08 | 40.1

2019-01-26 05:44:50+08 | 40.1

2019-01-26 05:45:00+08 | 41.4

2019-01-26 05:45:10+08 | 37.2

2019-01-26 05:45:20+08 | 39

2019-01-26 05:45:30+08 | 37.7

2019-01-26 05:45:40+08 | 38.8

2019-01-26 05:45:50+08 | 38.9

2019-01-26 05:46:00+08 | 38.8

2019-01-26 05:46:10+08 | 36.2

2019-01-26 05:46:20+08 | 41.4

2019-01-26 05:46:30+08 | 40.5

2019-01-26 05:46:40+08 | 36.9

2019-01-26 05:46:50+08 | 36.7

2019-01-26 05:47:00+08 | 37.1

2019-01-26 05:47:10+08 | 40

2019-01-26 05:47:20+08 | 36.5

2019-01-26 05:47:30+08 | 40.7

2019-01-26 05:47:40+08 | 37.6

2019-01-26 05:47:50+08 | 13.9

(52 rows)

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#2-%E5%85%B7%E4%BD%93%E5%88%B0%E4%B8%80%E4%B8%AA%E6%97%B6%E9%97%B4%E6%AE%B5%E5%86%85%E6%98%AF%E4%BB%80%E4%B9%88%E9%97%AE%E9%A2%98 2 具體到一個時間段内,是什麼問題

例如2019-01-26 05:45:20+08,這個時間區間,性能問題鑽取:

1、資料庫次元的資源消耗時間占用,判定哪個資料庫占用的資源最多

postgres=#

select

datname,

count(*)/10::float8 cnt

from perf_insight

where

to_timestamp((extract(epoch from ts))::int8/10*10) -- 以10秒統計粒度的圖形為例

='2019-01-26 05:45:20+08' -- 問題時間點

group by 1

order by cnt desc;

datname | cnt

----------+-----

postgres | 39

(1 row)

2、使用者次元的資源消耗時間占用,判定哪個使用者占用的資源最多

postgres=#

select

usename,

count(*)/10::float8 cnt

from perf_insight

where

to_timestamp((extract(epoch from ts))::int8/10*10) -- 以10秒統計粒度的圖形為例

='2019-01-26 05:45:20+08' -- 問題時間點

group by 1

order by cnt desc;

usename | cnt

----------+-----

postgres | 39

(1 row)

3、等待事件次元的資源消耗時間占用,判定問題集中在哪些等待事件上,可以針對性的優化、加資源。

postgres=#

select

coalesce(waiting, 'CPU_TIME') waiting,

count(*)/10::float8 cnt

from perf_insight

where

to_timestamp((extract(epoch from ts))::int8/10*10) -- 以10秒統計粒度的圖形為例

='2019-01-26 05:45:20+08' -- 問題時間點

group by 1

order by cnt desc;

waiting | cnt

--------------------------+------

CPU_TIME | 15.3

Client_ClientRead | 10.6

IPC_ProcArrayGroupUpdate | 6.1

Lock_transactionid | 5.4

Lock_tuple | 0.5

LWLock_wal_insert | 0.3

LWLock_ProcArrayLock | 0.2

LWLock_buffer_content | 0.2

IPC_ClogGroupUpdate | 0.2

LWLock_lock_manager | 0.1

LWLock_CLogControlLock | 0.1

(11 rows)

4、SQL次元的資源消耗時間占用,判定問題集中在哪些SQL上,可以針對性的優化。

postgres=#

select

query,

count(*)/10::float8 cnt

from perf_insight

where

to_timestamp((extract(epoch from ts))::int8/10*10) -- 以10秒統計粒度的圖形為例

='2019-01-26 05:45:20+08' -- 問題時間點

group by 1

order by cnt desc;

query | cnt

-------------------------------------------------------------------------------------------------------+------

END; | 11.5

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | 11.3

UPDATE pgbench_accounts SET abalance = abalance + $1 WHERE aid = $2; | 6.8

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | 4.5

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES ($1, $2, $3, $4, CURRENT_TIMESTAMP); | 2.3

SELECT abalance FROM pgbench_accounts WHERE aid = $1; | 2.1

BEGIN; | 0.5

(7 rows)

5、單條QUERY在不同等待事件上的資源消耗時間占用,判定問題SQL的突出等待事件,可以針對性的優化、加資源。

postgres=#

select

query,

coalesce(waiting, 'CPU_TIME') waiting,

count(*)/10::float8 cnt

from perf_insight

where

to_timestamp((extract(epoch from ts))::int8/10*10) -- 以10秒統計粒度的圖形為例

='2019-01-26 05:45:20+08' -- 問題時間點

group by 1,2

order by 1,cnt desc;

query | waiting | cnt

-------------------------------------------------------------------------------------------------------+--------------------------+-----

BEGIN; | Client_ClientRead | 0.3

BEGIN; | CPU_TIME | 0.2

END; | CPU_TIME | 4.6

END; | IPC_ProcArrayGroupUpdate | 3.7

END; | Client_ClientRead | 3.1

END; | IPC_ClogGroupUpdate | 0.1

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES ($1, $2, $3, $4, CURRENT_TIMESTAMP); | CPU_TIME | 1

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES ($1, $2, $3, $4, CURRENT_TIMESTAMP); | Client_ClientRead | 0.6

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES ($1, $2, $3, $4, CURRENT_TIMESTAMP); | IPC_ProcArrayGroupUpdate | 0.6

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES ($1, $2, $3, $4, CURRENT_TIMESTAMP); | IPC_ClogGroupUpdate | 0.1

SELECT abalance FROM pgbench_accounts WHERE aid = $1; | CPU_TIME | 1.2

SELECT abalance FROM pgbench_accounts WHERE aid = $1; | Client_ClientRead | 0.6

SELECT abalance FROM pgbench_accounts WHERE aid = $1; | Lock_transactionid | 0.3

UPDATE pgbench_accounts SET abalance = abalance + $1 WHERE aid = $2; | CPU_TIME | 3.8

UPDATE pgbench_accounts SET abalance = abalance + $1 WHERE aid = $2; | Client_ClientRead | 2.9

UPDATE pgbench_accounts SET abalance = abalance + $1 WHERE aid = $2; | LWLock_wal_insert | 0.1

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | Lock_transactionid | 4

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | CPU_TIME | 2.5

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | Client_ClientRead | 2.1

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | IPC_ProcArrayGroupUpdate | 1.7

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | Lock_tuple | 0.5

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | LWLock_buffer_content | 0.2

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | LWLock_ProcArrayLock | 0.2

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | LWLock_wal_insert | 0.1

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | CPU_TIME | 2

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | Lock_transactionid | 1.1

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | Client_ClientRead | 1

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | IPC_ProcArrayGroupUpdate | 0.1

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | LWLock_CLogControlLock | 0.1

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | LWLock_lock_manager | 0.1

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | LWLock_wal_insert | 0.1

(31 rows)

6、點中單條QUERY,在不同等待事件上的資源消耗時間占用,判定問題SQL的突出等待事件,可以針對性的優化、加資源。

通過4,發現占用最多的是END這條SQL,那麼這條SQL的等待時間分布如何?是什麼等待引起的?

postgres=#

select

coalesce(waiting, 'CPU_TIME') waiting,

count(*)/10::float8 cnt

from perf_insight

where

to_timestamp((extract(epoch from ts))::int8/10*10) -- 以10秒統計粒度的圖形為例

='2019-01-26 05:45:20+08' -- 問題時間點

and query='END;'

group by 1

order by cnt desc;

waiting | cnt

--------------------------+-----

CPU_TIME | 4.6

IPC_ProcArrayGroupUpdate | 3.7

Client_ClientRead | 3.1

IPC_ClogGroupUpdate | 0.1

(4 rows)

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#3-%E5%BC%80%E5%90%AF%E4%B8%80%E4%B8%AA%E5%8F%AF%E4%BB%A5%E9%80%A0%E6%88%90%E6%80%A7%E8%83%BD%E9%97%AE%E9%A2%98%E7%9A%84%E5%8E%8B%E6%B5%8B%E5%9C%BA%E6%99%AF%E9%80%9A%E8%BF%87perf-insight%E7%9B%B4%E6%8E%A5%E5%8F%91%E7%8E%B0%E9%97%AE%E9%A2%98 3 開啟一個可以造成性能問題的壓測場景,通過perf insight直接發現問題

1、開啟640個并發,讀寫壓測,由于資料量小,并發高,直接導緻了ROW LOCK沖突的問題,使用perf insight問題畢現。

pgbench -M prepared -n -r -P 1 -c 640 -j 640 -T 300

postgres=#

select

query,

coalesce(waiting, 'CPU_TIME') waiting,

count(*)/10::float8 cnt

from perf_insight

where

to_timestamp((extract(epoch from ts))::int8/10*10) -- 以10秒統計粒度的圖形為例

='2019-01-26 06:38:20+08' -- 問題時間點

group by 1,2

order by 1,cnt desc;

query | waiting | cnt

-------------------------------------------------------------------------------------------------------+--------------------------+-------

BEGIN; | Lock_transactionid | 0.3

BEGIN; | Lock_tuple | 0.3

BEGIN; | LWLock_lock_manager | 0.1

END; | IPC_ProcArrayGroupUpdate | 29.5

END; | CPU_TIME | 14.1

END; | Lock_transactionid | 13

END; | Client_ClientRead | 8.4

END; | Lock_tuple | 8.1

END; | LWLock_lock_manager | 3

END; | LWLock_ProcArrayLock | 0.4

END; | LWLock_buffer_content | 0.3

END; | IPC_ClogGroupUpdate | 0.1

END; | LWLock_wal_insert | 0.1

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES ($1, $2, $3, $4, CURRENT_TIMESTAMP); | IPC_ProcArrayGroupUpdate | 1.3

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES ($1, $2, $3, $4, CURRENT_TIMESTAMP); | CPU_TIME | 0.4

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES ($1, $2, $3, $4, CURRENT_TIMESTAMP); | Lock_transactionid | 0.3

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES ($1, $2, $3, $4, CURRENT_TIMESTAMP); | Lock_tuple | 0.2

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES ($1, $2, $3, $4, CURRENT_TIMESTAMP); | Client_ClientRead | 0.2

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES ($1, $2, $3, $4, CURRENT_TIMESTAMP); | LWLock_lock_manager | 0.1

SELECT abalance FROM pgbench_accounts WHERE aid = $1; | Lock_tuple | 0.9

SELECT abalance FROM pgbench_accounts WHERE aid = $1; | Lock_transactionid | 0.9

SELECT abalance FROM pgbench_accounts WHERE aid = $1; | IPC_ProcArrayGroupUpdate | 0.4

SELECT abalance FROM pgbench_accounts WHERE aid = $1; | Client_ClientRead | 0.3

SELECT abalance FROM pgbench_accounts WHERE aid = $1; | CPU_TIME | 0.1

UPDATE pgbench_accounts SET abalance = abalance + $1 WHERE aid = $2; | Lock_transactionid | 1.7

UPDATE pgbench_accounts SET abalance = abalance + $1 WHERE aid = $2; | IPC_ProcArrayGroupUpdate | 1.4

UPDATE pgbench_accounts SET abalance = abalance + $1 WHERE aid = $2; | Lock_tuple | 0.9

UPDATE pgbench_accounts SET abalance = abalance + $1 WHERE aid = $2; | LWLock_lock_manager | 0.1

UPDATE pgbench_accounts SET abalance = abalance + $1 WHERE aid = $2; | CPU_TIME | 0.1

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | Lock_transactionid | 161.5 # 突出問題在這裡

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | IPC_ProcArrayGroupUpdate | 27.2

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | Lock_tuple | 27.2

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | LWLock_lock_manager | 19.6

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | CPU_TIME | 12.3

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | Client_ClientRead | 4

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | LWLock_buffer_content | 3.3

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | LWLock_ProcArrayLock | 0.3

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | LWLock_wal_insert | 0.1

UPDATE pgbench_branches SET bbalance = bbalance + $1 WHERE bid = $2; | IPC_ClogGroupUpdate | 0.1

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | Lock_transactionid | 178.4 # 突出問題在這裡

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | Lock_tuple | 83.7 # 突出問題在這裡

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | CPU_TIME | 5.6

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | IPC_ProcArrayGroupUpdate | 5.3

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | LWLock_lock_manager | 3.8

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | Client_ClientRead | 2

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | LWLock_ProcArrayLock | 0.1

UPDATE pgbench_tellers SET tbalance = tbalance + $1 WHERE tid = $2; | LWLock_buffer_content | 0.1

(47 rows)

postgres=#

select

coalesce(waiting, 'CPU_TIME') waiting,

count(*)/10::float8 cnt

from perf_insight

where

to_timestamp((extract(epoch from ts))::int8/10*10) -- 以10秒統計粒度的圖形為例

='2019-01-26 06:38:20+08' -- 問題時間點

group by 1

order by cnt desc;

waiting | cnt

--------------------------+-------

Lock_transactionid | 356.1

Lock_tuple | 121.3

IPC_ProcArrayGroupUpdate | 65.1

CPU_TIME | 32.6

LWLock_lock_manager | 26.7

Client_ClientRead | 14.9

LWLock_buffer_content | 3.7

LWLock_ProcArrayLock | 0.8

LWLock_wal_insert | 0.2

IPC_ClogGroupUpdate | 0.2

(10 rows)

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#%E5%85%B6%E4%BB%96%E5%8E%8B%E6%B5%8B%E5%9C%BA%E6%99%AF%E4%BD%BF%E7%94%A8perf-insight%E5%8F%91%E7%8E%B0%E9%97%AE%E9%A2%98%E7%9A%84%E4%BE%8B%E5%AD%90 其他壓測場景使用perf insight發現問題的例子

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#1%E6%89%B9%E9%87%8F%E6%95%B0%E6%8D%AE%E5%86%99%E5%85%A5block-extend%E6%88%96wal-insert-lock%E7%93%B6%E9%A2%88%E6%88%96pglz%E5%8E%8B%E7%BC%A9%E7%93%B6%E9%A2%88 1、批量資料寫入,BLOCK extend或wal insert lock瓶頸,或pglz壓縮瓶頸。

create table test(id int, info text default repeat(md5(random()::text),1000));

vi test.sql

insert into test(id) select generate_series(1,10);

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 64 -j 64 -T 300

postgres=#

select

to_timestamp((extract(epoch from ts))::int8/10*10) ts,

coalesce(waiting, 'CPU_TIME') waiting,

count(*)/10::float8 cnt

from perf_insight

group by 1,2

order by 1,cnt desc;

ts | waiting | cnt

------------------------+--------------------------+------

2019-01-26 10:28:50+08 | IO_DataFileExtend | 0.1

2019-01-26 10:29:00+08 | CPU_TIME | 50

2019-01-26 10:29:00+08 | Lock_extend | 11.9 -- 擴充資料檔案

2019-01-26 10:29:00+08 | Client_ClientRead | 0.3

2019-01-26 10:29:00+08 | IO_DataFileExtend | 0.2

2019-01-26 10:29:00+08 | LWLock_lock_manager | 0.1

2019-01-26 10:29:10+08 | CPU_TIME | 47.1

2019-01-26 10:29:10+08 | Lock_extend | 13.5

2019-01-26 10:29:10+08 | Client_ClientRead | 0.7

2019-01-26 10:29:10+08 | IO_DataFileExtend | 0.3

2019-01-26 10:29:10+08 | LWLock_buffer_content | 0.2

2019-01-26 10:29:10+08 | LWLock_lock_manager | 0.1

2019-01-26 10:29:20+08 | CPU_TIME | 54.5

2019-01-26 10:29:20+08 | Lock_extend | 6.7

2019-01-26 10:29:20+08 | Client_ClientRead | 0.2

2019-01-26 10:29:20+08 | IO_DataFileExtend | 0.1

2019-01-26 10:29:30+08 | CPU_TIME | 61.9 -- CPU,通過perf top來看是 pglz接口的瓶頸(pglz_compress)

2019-01-26 10:29:30+08 | Client_ClientRead | 0.2

2019-01-26 10:29:40+08 | CPU_TIME | 30.9

2019-01-26 10:29:40+08 | LWLock_wal_insert | 0.2

2019-01-26 10:29:40+08 | Client_ClientRead | 0.1

(28 rows)

是以上面這個問題,如果改成不壓縮,那麼瓶頸就會變成其他的:

alter table test alter COLUMN info set storage external;

postgres=# \d+ test

Table "public.test"

Column | Type | Collation | Nullable | Default | Storage | Stats target | Description

--------+---------+-----------+----------+-------------------------------------+----------+--------------+-------------

id | integer | | | | plain | |

info | text | | | repeat(md5((random())::text), 1000) | external | |

瓶頸就會變成其他的:

2019-01-26 10:33:50+08 | Lock_extend | 43.2

2019-01-26 10:33:50+08 | LWLock_buffer_content | 14.8

2019-01-26 10:33:50+08 | CPU_TIME | 4.6

2019-01-26 10:33:50+08 | LWLock_lock_manager | 0.5

2019-01-26 10:33:50+08 | LWLock_wal_insert | 0.4

2019-01-26 10:33:50+08 | IO_DataFileExtend | 0.4

2019-01-26 10:33:50+08 | Client_ClientRead | 0.1

2019-01-26 10:34:00+08 | Lock_extend | 55.6

2019-01-26 10:34:00+08 | LWLock_buffer_content | 6.3

2019-01-26 10:34:00+08 | CPU_TIME | 1.2

2019-01-26 10:34:00+08 | IO_DataFileExtend | 0.8

2019-01-26 10:34:00+08 | LWLock_wal_insert | 0.1

2019-01-26 10:34:10+08 | Lock_extend | 6.3

2019-01-26 10:34:10+08 | LWLock_buffer_content | 5.8

2019-01-26 10:34:10+08 | CPU_TIME | 0.7

是以治本的方法是提供更好的壓縮接口,這也是PG 12的版本正在改進的:

《[未完待續] PostgreSQL 開放壓縮接口 與 lz4壓縮插件》 《[未完待續] PostgreSQL zstd 壓縮算法 插件》https://github.com/digoal/blog/blob/master/201901/20190125_02.md#2%E7%A7%92%E6%9D%80%E5%8D%95%E6%9D%A1update%E8%A1%8C%E9%94%81%E7%93%B6%E9%A2%88 2、秒殺,單條UPDATE。行鎖瓶頸。

create table t_hot (id int primary key, cnt int8);

insert into t_hot values (1,0);

vi test.sql

update t_hot set cnt=cnt+1 where id=1;

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 64 -j 64 -T 300

postgres=#

select

to_timestamp((extract(epoch from ts))::int8/10*10) ts,

coalesce(waiting, 'CPU_TIME') waiting,

count(*)/10::float8 cnt

from perf_insight

group by 1,2

order by 1,cnt desc;

2019-01-26 10:37:50+08 | Lock_tuple | 29.6 -- 瓶頸為行鎖沖突

2019-01-26 10:37:50+08 | LWLock_lock_manager | 11.4 -- 伴随熱點塊

2019-01-26 10:37:50+08 | LWLock_buffer_content | 8.4

2019-01-26 10:37:50+08 | Lock_transactionid | 7.6

2019-01-26 10:37:50+08 | CPU_TIME | 6.5

2019-01-26 10:37:50+08 | Client_ClientRead | 0.2

2019-01-26 10:38:00+08 | Lock_tuple | 29.2 -- 瓶頸為行鎖沖突

2019-01-26 10:38:00+08 | LWLock_buffer_content | 15.6 -- 伴随熱點塊

2019-01-26 10:38:00+08 | CPU_TIME | 7.9

2019-01-26 10:38:00+08 | LWLock_lock_manager | 7.2

2019-01-26 10:38:00+08 | Lock_transactionid | 3.7

秒殺的場景,優化方法

《PostgreSQL 秒殺4種方法 - 增加 批量流式加減庫存 方法》 《HTAP資料庫 PostgreSQL 場景與性能測試之 30 - (OLTP) 秒殺 - 高并發單點更新》 《聊一聊雙十一背後的技術 - 不一樣的秒殺技術, 裸秒》 《PostgreSQL 秒殺場景優化》https://github.com/digoal/blog/blob/master/201901/20190125_02.md#3%E6%9C%AA%E4%BC%98%E5%8C%96sql%E5%85%A8%E8%A1%A8%E6%89%AB%E6%8F%8Ffiltercpu-time%E7%93%B6%E9%A2%88 3、未優化SQL,全表掃描filter,CPU time瓶頸。

postgres=# create table t_bad (id int, info text);

CREATE TABLE

postgres=# insert into t_bad select generate_series(1,10000), md5(random()::Text);

INSERT 0 10000

vi test.sql

\set id random(1,10000)

select * from t_bad where id=:id;

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 64 -j 64 -T 300

瓶頸

postgres=#

select

to_timestamp((extract(epoch from ts))::int8/10*10) ts,

coalesce(waiting, 'CPU_TIME') waiting,

count(*)/10::float8 cnt

from perf_insight

group by 1,2

order by 1,cnt desc;

2019-01-26 10:41:40+08 | CPU_TIME | 61.3

2019-01-26 10:41:40+08 | Client_ClientRead | 0.9

2019-01-26 10:41:50+08 | CPU_TIME | 61.7

2019-01-26 10:41:50+08 | Client_ClientRead | 0.1

2019-01-26 10:42:00+08 | CPU_TIME | 60.7

2019-01-26 10:42:00+08 | Client_ClientRead | 0.5

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#perf-insight-%E7%9A%84%E5%9F%BA%E5%87%86%E7%BA%BF perf insight 的基準線

如果要設定一個基準線,用于報警。那麼:

1、基準線跟QPS沒什麼關系。

2、基準線跟avg active sessions有莫大關系。avg active sessions大于執行個體CPU核數時,說明有性能問題。

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#perf-insight-%E4%B8%8D%E6%98%AF%E4%B8%87%E8%83%BD%E7%9A%84 perf insight 不是萬能的

perf insight 發現當時的問題是非常迅速的。

神醫華佗說,不治已病治未病才是最高境界,perf insight實際上是發現已病,而未病是發現不了的。

未病還是需要對引擎的深刻了解和豐富的經驗積累。

例如:

1、年齡

2、FREEZE風暴

3、sequence耗盡

4、索引推薦

5、膨脹

6、安全風險

7、不合理索引

8、增長趨勢

9、碎片

10、分區建議

11、冷熱分離建議

12、TOP SQL診斷與優化

13、擴容(容量、計算資源、IO、記憶體...)建議

14、分片建議

15、架構優化建議

等。

除此之外,perf insight對于這類情況也是發現不了的:

1、long query (waiting (ddl, block one session)),當long query比較少,總體avg active session低于基準水位時,實際上long query的問題就無法暴露。

然而long query是有一些潛在問題的,例如可能導緻膨脹。

perf insight + 經驗型監控、診斷,可以使得你的資料庫監測系統更加強壯。

https://github.com/digoal/blog/blob/master/201901/20190125_02.md#%E5%85%B6%E4%BB%96%E7%9F%A5%E8%AF%86%E7%82%B9%E5%86%85%E6%A0%B8%E9%9C%80%E6%94%B9%E8%BF%9B%E7%82%B9 其他知識點、核心需改進點

1、會話ID,使用backend的啟動時間,backend pid兩者結合,就可以作為PG資料庫的唯一session id。

有了session id,就可以基于SESSION次元進行性能診斷和可視化展示。

select extract(epoch from backend_start)||'.'||pid as sessid

from pg_stat_activity ;

sessid

------------------------

1547978042.41326.13447

1547978042.41407.13450

2、對于未使用綁定變量的SQL,要做SQL層的統計透視,就會比較悲劇了,因為隻要輸入的變量不同在pg_stat_activity的query