Text CNN

1. 簡介

TextCNN 是利用卷積神經網絡對文本進行分類的算法,由 Yoon Kim 在 “Convolutional Neural Networks for Sentence Classification” 一文中提出. 是2014年的算法.

我們将實作一個類似于Kim Yoon的卷積神經網絡語句分類的模型。 本文提出的模型在一系列文本分類任務(如情感分析)中實作了良好的分類性能,并已成為新的文本分類架構的标準基準。

2.準備好需要的庫和資料集

-

tensorflow -

h5py -

hdf5 -

keras -

numpy -

itertools -

collections -

re -

sklearn 0.19.0

準備資料集:

連結: https://pan.baidu.com/s/1oO4pDHeu3xIgkDtkLgQEVA 密碼: 6wrv

3. 資料和預處理

我們使用的資料集是 Movie Review data from Rotten Tomatoes,也是原始文獻中使用的資料集之一。 資料集包含10,662個示例評論句子,正負向各占一半。 資料集的大小約為1M。 請注意,由于這個資料集很小,我們很可能會使用強大的模型。 此外,資料集不附帶拆分的訓練/測試集,是以我們隻需将20%的資料用作 test set。

資料預處理的函數包括以下幾點(data_helpers.py):

l load_data_and_labels()從原始資料檔案中加載正負向情感的句子。使用one_hot編碼為每個句子打上标簽;[0,1],[1,0]

l clean_str()正則化去除句子中的标點。

l pad_sentences()使每個句子都擁有最長句子的長度,不夠的地方補上<PAD/>。允許我們有效地批量我們的資料,因為批進行中的每個示例必須具有相同的長度。

l build_vocab()建立單詞的映射,去重,對單詞按照自然順序排序。然後給排好序的單詞标記标号。建構詞的彙索引,并将每個單詞映射到0到單詞個數之間的整數(詞庫大小)。 每個句子都成為一個整數向量。

l build_input_data()将處理好的句子轉換為numpy數組。

l load_data()将上述操作整合正在一個函數中。

import numpy as np

import re

import itertools

from collections import Counter

def clean_str(string):

"""

Tokenization/string cleaning for datasets.

Original taken from https://github.com/yoonkim/CNN_sentence/blob/master/process_data.py

"""

string = re.sub(r"[^A-Za-z0-9(),!?\'\`]", " ", string)

string = re.sub(r"\'s", " \'s", string)

string = re.sub(r"\'ve", " \'ve", string)

string = re.sub(r"n\'t", " n\'t", string)

string = re.sub(r"\'re", " \'re", string)

string = re.sub(r"\'d", " \'d", string)

string = re.sub(r"\'ll", " \'ll", string)

string = re.sub(r",", " , ", string)

string = re.sub(r"!", " ! ", string)

string = re.sub(r"\(", " \( ", string)

string = re.sub(r"\)", " \) ", string)

string = re.sub(r"\?", " \? ", string)

string = re.sub(r"\s{2,}", " ", string)

return string.strip().lower()

def load_data_and_labels():

"""

Loads polarity data from files, splits the data into words and generates labels.

Returns split sentences and labels.

"""

# Load data from files

positive_examples = list(open("./data/rt-polarity.pos", "r", encoding='latin-1').readlines())

positive_examples = [s.strip() for s in positive_examples]

negative_examples = list(open("./data/rt-polarity.neg", "r", encoding='latin-1').readlines())

negative_examples = [s.strip() for s in negative_examples]

# Split by words

x_text = positive_examples + negative_examples

x_text = [clean_str(sent) for sent in x_text]

x_text = [s.split(" ") for s in x_text]

# Generate labels

positive_labels = [[0, 1] for _ in positive_examples]

negative_labels = [[1, 0] for _ in negative_examples]

y = np.concatenate([positive_labels, negative_labels], 0)

return [x_text, y]

def pad_sentences(sentences, padding_word="<PAD/>"):

"""

Pads all sentences to the same length. The length is defined by the longest sentence.

Returns padded sentences.

"""

sequence_length = max(len(x) for x in sentences)

padded_sentences = []

for i in range(len(sentences)):

sentence = sentences[i]

num_padding = sequence_length - len(sentence)

new_sentence = sentence + [padding_word] * num_padding

padded_sentences.append(new_sentence)

return padded_sentences

def build_vocab(sentences):

"""

Builds a vocabulary mapping from word to index based on the sentences.

Returns vocabulary mapping and inverse vocabulary mapping.

"""

# Build vocabulary

word_counts = Counter(itertools.chain(*sentences))

# Mapping from index to word

vocabulary_inv = [x[0] for x in word_counts.most_common()]

vocabulary_inv = list(sorted(vocabulary_inv))

# Mapping from word to index

vocabulary = {x: i for i, x in enumerate(vocabulary_inv)}

return [vocabulary, vocabulary_inv]

def build_input_data(sentences, labels, vocabulary):

"""

Maps sentences and labels to vectors based on a vocabulary.

"""

x = np.array([[vocabulary[word] for word in sentence] for sentence in sentences])

y = np.array(labels)

return [x, y]

def load_data():

"""

Loads and preprocessed data for the dataset.

Returns input vectors, labels, vocabulary, and inverse vocabulary.

"""

# Load and preprocess data

sentences, labels = load_data_and_labels()

sentences_padded = pad_sentences(sentences)

vocabulary, vocabulary_inv = build_vocab(sentences_padded)

x, y = build_input_data(sentences_padded, labels, vocabulary)

return [x, y, vocabulary, vocabulary_inv] 4. 模型

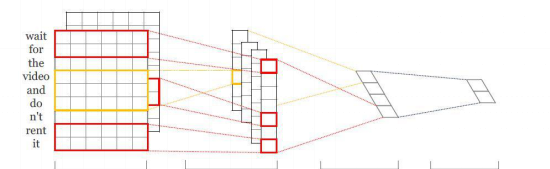

第一層将單詞嵌入到低維向量中。 下一層使用多個過濾器大小對嵌入的字矢量執行卷積。 例如,一次滑過3,4或5個字。池化層選擇使用最大池化。

之後将這三個卷積池化層結合起來。接下來,我們将卷積層的max_pooling結果,使用Flatten層将特征融合成一個長的特征向量,添加dropout正則,并使用softmax層對結果進行分類。

_______________________________________________________________________________

Layer (type) Output Shape Param # Connected to

===============================================================================

input_1 (InputLayer) (None, 56) 0

embedding_1 (Embedding) (None, 56, 256) 4803840 input_1[0][0]

reshape_1 (Reshape) (None, 56, 256, 1) 0 embedding_1[0][0]

conv2d_1 (Conv2D) (None, 54, 1, 512) 393728 reshape_1[0][0]

conv2d_2 (Conv2D) (None, 53, 1, 512) 524800 reshape_1[0][0]

conv2d_3 (Conv2D) (None, 52, 1, 512) 655872 reshape_1[0][0]

max_pooling2d_1 (MaxPooling2D) (None, 1, 1, 512) 0 conv2d_1[0][0]

max_pooling2d_2 (MaxPooling2D) (None, 1, 1, 512) 0 conv2d_2[0][0]

max_pooling2d_3 (MaxPooling2D) (None, 1, 1, 512) 0 conv2d_3[0][0]

concatenate_1 (Concatenate) (None, 3, 1, 512) 0 max_pooling2d_1[0][0]

max_pooling2d_2[0][0]

max_pooling2d_3[0][0]

flatten_1 (Flatten) (None, 1536) 0 concatenate_1[0][0]

dropout_1 (Dropout) (None, 1536) 0 flatten_1[0][0]

dense_1 (Dense) (None, 2) 3074 dropout_1[0][0]

Total params: 6,381,314

Trainable params: 6,381,314

Non-trainable params: 0

- 優化器選擇了:adam

- loss選擇了binary_crossentropy(二分類問題)

- 評價标準為分類問題的标準評價标準(是否分對)

from keras.layers import Input, Dense, Embedding, Conv2D, MaxPool2D

from keras.layers import Reshape, Flatten, Dropout, Concatenate

from keras.callbacks import ModelCheckpoint

from keras.optimizers import Adam

from keras.models import Model

from sklearn.model_selection import train_test_split

from data_helpers import load_data

print('Loading data')

x, y, vocabulary, vocabulary_inv = load_data()

# x.shape -> (10662, 56)

# y.shape -> (10662, 2)

# len(vocabulary) -> 18765

# len(vocabulary_inv) -> 18765

X_train, X_test, y_train, y_test = train_test_split( x, y, test_size=0.2, random_state=42)

# X_train.shape -> (8529, 56)

# y_train.shape -> (8529, 2)

# X_test.shape -> (2133, 56)

# y_test.shape -> (2133, 2)

sequence_length = x.shape[1] # 56

vocabulary_size = len(vocabulary_inv) # 18765

embedding_dim = 256

filter_sizes = [3,4,5]

num_filters = 512

drop = 0.5

epochs = 100

batch_size = 30

# this returns a tensor

print("Creating Model...")

inputs = Input(shape=(sequence_length,), dtype='int32')

embedding = Embedding(input_dim=vocabulary_size, output_dim=embedding_dim, input_length=sequence_length)(inputs)

reshape = Reshape((sequence_length,embedding_dim,1))(embedding)

conv_0 = Conv2D(num_filters, kernel_size=(filter_sizes[0], embedding_dim), padding='valid', kernel_initializer='normal', activation='relu')(reshape)

conv_1 = Conv2D(num_filters, kernel_size=(filter_sizes[1], embedding_dim), padding='valid', kernel_initializer='normal', activation='relu')(reshape)

conv_2 = Conv2D(num_filters, kernel_size=(filter_sizes[2], embedding_dim), padding='valid', kernel_initializer='normal', activation='relu')(reshape)

maxpool_0 = MaxPool2D(pool_size=(sequence_length - filter_sizes[0] + 1, 1), strides=(1,1), padding='valid')(conv_0)

maxpool_1 = MaxPool2D(pool_size=(sequence_length - filter_sizes[1] + 1, 1), strides=(1,1), padding='valid')(conv_1)

maxpool_2 = MaxPool2D(pool_size=(sequence_length - filter_sizes[2] + 1, 1), strides=(1,1), padding='valid')(conv_2)

concatenated_tensor = Concatenate(axis=1)([maxpool_0, maxpool_1, maxpool_2])

flatten = Flatten()(concatenated_tensor)

dropout = Dropout(drop)(flatten)

output = Dense(units=2, activation='softmax')(dropout)

# this creates a model that includes

model = Model(inputs=inputs, outputs=output)

checkpoint = ModelCheckpoint('weights.{epoch:03d}-{val_acc:.4f}.hdf5', monitor='val_acc', verbose=1, save_best_only=True, mode='auto')

adam = Adam(lr=1e-4, beta_1=0.9, beta_2=0.999, epsilon=1e-08, decay=0.0)

model.compile(optimizer=adam, loss='binary_crossentropy', metrics=['accuracy'])

print("Traning Model...")

model.fit(X_train, y_train, batch_size=batch_size, epochs=epochs, verbose=1, callbacks=[checkpoint], validation_data=(X_test, y_test)) # starts training