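Building a Highly Available OpenStack (Queens) Cluster: Deploying the Block Storage Service (Cinder) Controller Node Cluster

I. Introduction to Block Storage (Cinder)

1. Cinder overview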

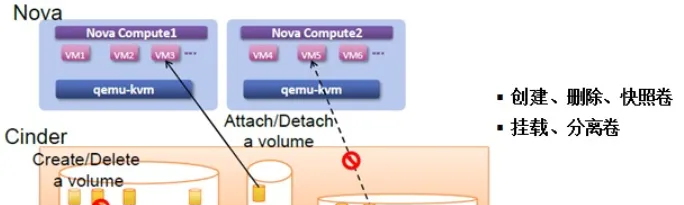

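Cinder is a resource management system that provides persistent block storage to virtual machines.

Cinder wraps different storage backends and exposes a single, unified API on top of them.

Cinder is not a newly developed block storage system. Instead, it uses a plugin model and relies on the drivers of the different storage backends to provide block storage services. Its core job is volume management: it handles volumes, volume types, and volume snapshots.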

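2. Cinder's internal architecture

1. Three main components

- cinder-api exposes the Cinder REST API

- cinder-scheduler allocates storage resources

- cinder-volume wraps the drivers; each driver controls a different storage backend

2. RPC between the components is carried over a message queue

3. Cinder development work

Development focuses mainly on the scheduler and the drivers, in order to provide more scheduling algorithms, more scheduling methods, more features, and support for more storage backends.

4. Volume metadata and state are stored in the database

3. Cinder's basic functions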

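| No. | Category | Operation |
| --- | --- | --- |
| 1 | Volume operations | Create a volume |
| 2 | Volume operations | Create a volume from an existing volume (clone) |
| 3 | Volume operations | Extend a volume |
| 4 | Volume operations | Delete a volume |
| 5 | Volume and VM operations | Attach a volume to a VM |
| 6 | Volume and VM operations | Detach a volume from a VM |
| 7 | Volume and snapshot operations | Create a snapshot of a volume |
| 8 | Volume and snapshot operations | Create a volume from an existing snapshot |
| 9 | Volume and snapshot operations | Delete a snapshot |
| 10 | Volume and image operations | Create a volume from an image |
| 11 | Volume and image operations | Create an image from a volume |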
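As a rough illustration, the operations in the table map onto openstack CLI commands roughly as sketched below. The names demo-vol, demo-clone, demo-snap, demo-vm, demo-image and the cirros image are hypothetical placeholders, not objects from this deployment, and the run helper only prints each command unless APPLY=1 is set, so the sketch can be read or tried without touching a cloud.

```shell
#!/bin/sh
# Dry-run helper: print the command unless APPLY=1 is set in the environment.
run() {
    if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

# One command per row of the table; all object names are placeholders.
volume_lifecycle() {
    run openstack volume create --size 1 demo-vol                        # 1 create
    run openstack volume create --source demo-vol --size 1 demo-clone    # 2 clone
    run openstack volume set --size 2 demo-vol                           # 3 extend
    run openstack volume delete demo-clone                               # 4 delete
    run openstack server add volume demo-vm demo-vol                     # 5 attach
    run openstack server remove volume demo-vm demo-vol                  # 6 detach
    run openstack volume snapshot create --volume demo-vol demo-snap     # 7 snapshot
    run openstack volume create --snapshot demo-snap --size 2 demo-from-snap  # 8
    run openstack volume snapshot delete demo-snap                       # 9
    run openstack volume create --image cirros --size 1 demo-from-image  # 10
    run openstack image create --volume demo-vol demo-image              # 11
}
```

Calling volume_lifecycle with no environment changes prints the eleven commands in table order; whether each one succeeds on a real cloud depends on the backend driver and on the state of the volumes involved.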

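4. Cinder plugins

II. Deploying the Block Storage Service (Cinder) Controller Node Cluster

When Ceph or another commercial or non-commercial storage backend is used, it is recommended to deploy the cinder-volume service on the controller nodes and run it in active/passive mode under Pacemaker.

1. Create the cinder database

Create the database on any one controller node; the data is replicated to the other nodes automatically in the background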
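The interactive session in this step can also be scripted. The sketch below is one possible way to do that, not the procedure the article itself follows: the function name cinder_db_sql is made up for illustration, and IF NOT EXISTS is an addition so that re-running the script does not fail on an existing database.

```shell
#!/bin/sh
# Print the SQL for the cinder database; $1 is the password for the cinder user.
# IF NOT EXISTS is an assumption added here for repeatability.
cinder_db_sql() {
    pass="$1"
    cat <<EOF
CREATE DATABASE IF NOT EXISTS cinder;
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY '${pass}';
FLUSH PRIVILEGES;
EOF
}
```

Piping the output of cinder_db_sql 123456 into mysql -u root -p would apply the statements in one pass; the password should not contain single quotes, since the function does no escaping.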

mysql -u root -p

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY '123456';

flush privileges;

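exit;

2. Create the cinder-api

Run on any one controller node

Calling the cinder service requires credentials; loading the environment variable script is enough

. admin-openrc

1. Create the cinder user

The service project was already created in the glance chapter;

The cinder user belongs to the "default" domain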

[root@controller01 ~]# openstack user create --domain default --password=cinder_pass cinder

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

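| id

2. Grant privileges to cinder

Give the cinder user the admin role (the command prints nothing)

openstack role add --project service --user cinder admin

3. Create the cinder service entities

- The cinder service entity types are "volumev2" and "volumev3";

- Create two service entities, one for v2 and one for v3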

[root@controller01 ~]# openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | 300afab521fc48828c74c1d44d2802dd |

| name | cinderv3 |

| type | volumev3 |

+-------------+----------------------------------+

[root@controller01 ~]# openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

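| id

4. Create the cinder-api endpoints

Note:

- The region must match the one created when the admin user was initialized;

- All API addresses use the VIP; if public/internal/admin use different VIPs, take care to keep them apart;

- The cinder-api service types are volumev2 and volumev3;

- Each cinder-api URL ends with the project ID, written here as the %\(project_id\)s placeholder; project IDs can be listed with "openstack project list"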
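Following from these notes, each endpoint is determined by just three things: the region, the API version, and the interface. A minimal sketch that generates the six commands from a loop is shown below. The function name create_cinder_endpoints and the run helper are illustrative; by default the commands are only printed, and APPLY=1 would execute them.

```shell
#!/bin/sh
# Dry-run helper: print the command unless APPLY=1 is set in the environment.
run() {
    if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

# $1 = region, $2 = base URL of the VIP (scheme, host and port).
create_cinder_endpoints() {
    region="$1"
    base="$2"
    for ver in v2 v3; do
        for iface in public internal admin; do
            # Inside double quotes the parentheses need no backslash escaping.
            run openstack endpoint create --region "$region" \
                "volume${ver}" "$iface" "${base}/${ver}/%(project_id)s"
        done
    done
}
```

For the values used in this article the call would be create_cinder_endpoints RegionTest http://controller:8776. If public, internal, and admin sit behind different VIPs, the base URL would have to vary per interface, which this sketch does not handle.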

# v2 public api

openstack endpoint create --region RegionTest volumev2 public http://controller:8776/v2/%\(project_id\)s

# v2 internal api

openstack endpoint create --region RegionTest volumev2 internal http://controller:8776/v2/%\(project_id\)s

# v2 admin api

openstack endpoint create --region RegionTest volumev2 admin http://controller:8776/v2/%\(project_id\)s

# v3 public api

openstack endpoint create --region RegionTest volumev3 public http://controller:8776/v3/%\(project_id\)s

# v3 internal api

openstack endpoint create --region RegionTest volumev3 internal http://controller:8776/v3/%\(project_id\)s

# v3 admin api

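openstack endpoint create --region RegionTest volumev3 admin http://controller:8776/v3/%\(project_id\)s

3. Install and configure cinder

Run on all controller nodes

yum install

When editing the configuration, note:

- The "my_ip" parameter must be set per node;

- Ownership of the cinder.conf file: root:cinder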
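Since my_ip is the only value that differs between controllers, one option is to distribute the same file to every node and patch that single line afterwards. The helper below is a sketch under that assumption; set_my_ip is a made-up name, and the address in the usage note is a placeholder. It writes the result back with cat rather than replacing the file, so the existing owner and mode (root:cinder) are left untouched.

```shell
#!/bin/sh
# Rewrite the "my_ip = ..." line of a cinder.conf-style file.
# $1 = path to the config file, $2 = this node's management IP.
set_my_ip() {
    conf="$1"
    ip="$2"
    tmp="$(mktemp)" || return 1
    # Only lines that start with my_ip are touched; "$my_ip" references stay as they are.
    sed "s|^my_ip[[:space:]]*=.*|my_ip = ${ip}|" "$conf" > "$tmp" &&
        cat "$tmp" > "$conf"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}
```

On a second controller this might be called as set_my_ip /etc/cinder/cinder.conf 10.20.9.190 (a hypothetical address), followed by chown root:cinder /etc/cinder/cinder.conf if the file was copied in with a different owner.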

cp -rp /etc/cinder/cinder.conf{,.bak}

egrep -v "^$|^#"

[DEFAULT]

state_path = /var/lib/cinder

my_ip = 10.20.9.189

glance_api_servers = http://controller:9292

auth_strategy = keystone

osapi_volume_listen = $my_ip

osapi_volume_listen_port = 8776

log_dir = /var/log/cinder

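# When haproxy sits in front of rabbitmq, services can hit connection timeouts and reconnects; this can be seen in the logs of each service and of rabbitmq;

# transport_url = rabbit://openstack:openstack@controller:5673

# rabbitmq has its own clustering mechanism, and the official documentation recommends connecting to the rabbitmq cluster directly; with this approach, however, services sometimes report errors at startup, for reasons unknown; if you do not see this behavior, connecting to the rabbitmq cluster directly rather than through the haproxy front end is strongly recommended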

transport_url=rabbit://openstack:openstack@controller01:5672,openstack:openstack@controller02:5672,openstack:openstack@controller03:5672

[backend]

[backend_defaults]

[barbican]

[brcd_fabric_example]

[cisco_fabric_example]

[coordination]

[cors]

[database]

connection = mysql+pymysql://cinder:123456@controller/cinder

[fc-zone-manager]

[healthcheck]

[key_manager]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller01:11211,controller02:11211,controller03:11211

auth_type = password

project_domain_id = default

user_domain_id = default

project_name = service

username = cinder

password = cinder_pass

[matchmaker_redis]

[nova]

[oslo_concurrency]

lock_path = $state_path/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[profiler]

[service_user]

[ssl]

[vault]

5. Configure nova.conf

Run on all controller nodes

The change only touches the "[cinder]" section of nova.conf; add the matching region

vim /etc/nova/nova.conf

[cinder]

os_region_name=RegionTest

6. Sync the cinder database

Run on any one controller node

Sync the cinder database; the "deprecation" messages can be ignored

[root@controller01 cinder]# su -s /bin/bash -c "cinder-manage db sync" cinder

Option "logdir" from group "DEFAULT" is deprecated. Use option "log-dir" from group "DEFAULT".

Verify

mysql -h controller -u cinder -p123456 -e "use cinder;show tables;"

7. Start the services and enable them at boot

Run on all controller nodes

1. The nova configuration file was changed, so restart the nova service first

systemctl restart openstack-nova-api.service

systemctl status openstack-nova-api.service

2. Start cinder and enable it at boot

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl restart openstack-cinder-api.service

systemctl restart openstack-cinder-scheduler.service

3. Check the service status

systemctl status openstack-cinder-api.service openstack-cinder-scheduler.service

8. Verify

. admin-openrc

List the cinder services (with either cinder service-list or openstack volume service list)

[root@controller01 cinder]# openstack volume service list

+------------------+--------------+------+---------+-------+----------------------------+

| Binary | Host | Zone | Status | State | Updated At |

+------------------+--------------+------+---------+-------+----------------------------+

| cinder-scheduler | controller01 | nova | enabled | up | 2018-09-10T07:32:50.000000 |

| cinder-scheduler | controller03 | nova | enabled | up | 2018-09-10T07:32:50.000000 |

| cinder-scheduler | controller02 | nova | enabled | up | 2018-09-10T07:32:50.000000

9. Set up pcs resources

Run on any one controller node

1. Add the cinder-api and cinder-scheduler resources

pcs resource create openstack-cinder-api systemd:openstack-cinder-api --clone interleave=true

pcs resource create openstack-cinder-scheduler systemd:openstack-cinder-scheduler --clone interleave=true

cinder-api and cinder-scheduler run in active/active mode;

openstack-cinder-volume runs in active/passive mode

2. View the resources
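The difference between the two modes comes down to whether the Pacemaker resource is cloned. The sketch below restates the two commands from this step as a loop and adds, purely as an assumption, what a non-cloned cinder-volume resource could look like; this section does not deploy cinder-volume, so that second function is illustrative only. Commands are printed unless APPLY=1 is set.

```shell
#!/bin/sh
# Dry-run helper: print the command unless APPLY=1 is set in the environment.
run() {
    if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

# Active/active: a cloned resource runs one copy on every controller.
add_cinder_pcs_resources() {
    for svc in openstack-cinder-api openstack-cinder-scheduler; do
        run pcs resource create "$svc" "systemd:$svc" --clone interleave=true
    done
}

# Active/passive (assumption, not taken from this article): without --clone,
# Pacemaker keeps a single instance and moves it to another node on failure.
add_cinder_volume_resource() {
    run pcs resource create openstack-cinder-volume systemd:openstack-cinder-volume
}
```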

[root@controller01 cinder]# pcs resource

vip (ocf::heartbeat:IPaddr2): Started controller01

Clone Set: lb-haproxy-clone [lb-haproxy]

Started: [ controller01 ]

Stopped: [ controller02 controller03 ]

Clone Set: openstack-keystone-clone [openstack-keystone]

Started: [ controller01 controller02 controller03 ]

Clone Set: openstack-glance-api-clone [openstack-glance-api]

Started: [ controller01 controller02 controller03 ]

Clone Set: openstack-glance-registry-clone [openstack-glance-registry]

Started: [ controller01 controller02 controller03 ]

Clone Set: openstack-nova-api-clone [openstack-nova-api]

Started: [ controller01 controller02 controller03 ]

Clone Set: openstack-nova-consoleauth-clone [openstack-nova-consoleauth]

Started: [ controller01 controller02 controller03 ]

Clone Set: openstack-nova-scheduler-clone [openstack-nova-scheduler]

Started: [ controller01 controller02 controller03 ]

Clone Set: openstack-nova-conductor-clone [openstack-nova-conductor]

Started: [ controller01 controller02 controller03 ]

Clone Set: openstack-nova-novncproxy-clone [openstack-nova-novncproxy]

Started: [ controller01 controller02 controller03 ]

Clone Set: neutron-server-clone [neutron-server]

Started: [ controller01 controller02 controller03 ]

Clone Set: neutron-linuxbridge-agent-clone [neutron-linuxbridge-agent]

Started: [ controller02 controller03 ]

Stopped: [ controller01 ]

Clone Set: neutron-l3-agent-clone [neutron-l3-agent]

Started: [ controller01 controller02 controller03 ]

Clone Set: neutron-dhcp-agent-clone [neutron-dhcp-agent]

Started: [ controller01 controller02 controller03 ]

Clone Set: neutron-metadata-agent-clone [neutron-metadata-agent]

Started: [ controller01 controller02 controller03 ]

Clone Set: openstack-cinder-api-clone [openstack-cinder-api]

Started: [ controller01 controller02 controller03 ]

Clone Set: openstack-cinder-scheduler-clone [openstack-cinder-scheduler]

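Started: [ controller01 controller02 controller03 ]

III. Checking backend services through haproxy

At this point the service components on the controller nodes are fully deployed; check the load and service status

http://10.20.9.47:1080/

Username: admin Password: admin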
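The same page can be checked from a script. HAProxy can export its statistics as CSV, and the sketch below filters that CSV for servers reported as DOWN. Two things here are assumptions rather than facts from this article: that the CSV export is reachable by appending ;csv to the stats URL above, and the function name haproxy_down_servers. The status column is located by its header name rather than by a fixed position.

```shell
#!/bin/sh
# Read HAProxy stats CSV on stdin and print "proxy/server status"
# for every row whose status starts with DOWN.
haproxy_down_servers() {
    awk -F, '
        NR == 1 {
            sub(/^# /, "", $1)              # header line starts with "# pxname"
            for (i = 1; i <= NF; i++) if ($i == "status") col = i
            next
        }
        col && $col ~ /^DOWN/ { print $1 "/" $2 " " $col }
    '
}
```

A possible invocation would be curl -s -u admin:admin 'http://10.20.9.47:1080/;csv' | haproxy_down_servers; empty output would then mean no backend server is marked DOWN.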