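If you administer an ACK (Alibaba Cloud Container Service for Kubernetes) cluster, you often need to create separate RAM sub-accounts for ordinary developers and grant permissions to each of them. When several developers need the same permissions on an ACK cluster, creating and authorizing a sub-account for every one of them becomes repetitive and tedious.

This article uses the ack-ram-authenticator project to show how to configure an ACK cluster to authenticate users through a RAM Role.

0. Overview of steps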

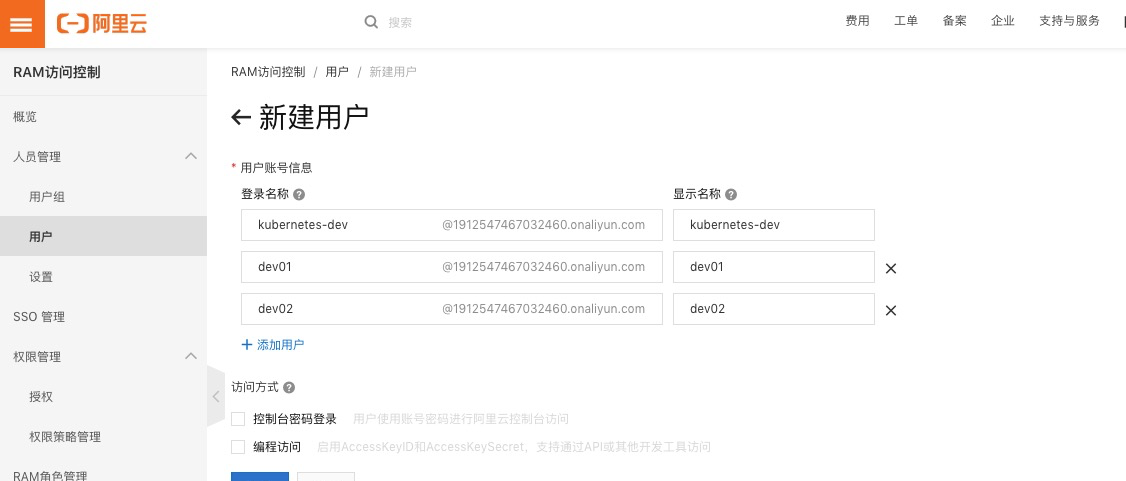

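(1) In the RAM console, create the sub-accounts kubernetes-dev, dev01, dev02, ..., devN and the RAM Role KubernetesDev, then grant permissions to the sub-account kubernetes-dev

(2) Deploy and run the ack-ram-authenticator server in the ACK cluster

(3) Configure the ACK cluster's API server to use the ack-ram-authenticator server

(4) Configure kubectl to use the authentication token provided by ack-ram-authenticator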

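1. Create the sub-accounts and the RAM Role in the RAM console

1.1 Create the sub-accounts kubernetes-dev, dev01, dev02, ..., devN

Grant the AliyunSTSAssumeRoleAccess policy to each of dev01, dev02, ..., devN.

1.2 Grant developer permissions on the ACK cluster to the sub-account kubernetes-dev

Follow the prompts in the console to complete the authorization.

1.3 Create the RAM Role KubernetesDev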
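The role's trust policy decides who is allowed to assume KubernetesDev. The article does not show the policy it used, so the following is only a sketch. It assumes the role trusts RAM users of the same main account (the `acs:ram::<uid>:root` principal) and that dev01, ..., devN already hold AliyunSTSAssumeRoleAccess from step 1.1. The function name and the sample account ID are hypothetical.

```python
import json


def build_trust_policy(main_account_uid: str) -> dict:
    """Return a RAM trust policy that lets RAM users of one account assume the role.

    Assumption: the role trusts the whole main account. The original
    article does not show the policy it actually used.
    """
    return {
        "Version": "1",
        "Statement": [
            {
                "Action": "sts:AssumeRole",
                "Effect": "Allow",
                "Principal": {"RAM": [f"acs:ram::{main_account_uid}:root"]},
            }
        ],
    }


if __name__ == "__main__":
    # "1234567890123456" is a made-up account ID used only for illustration.
    print(json.dumps(build_trust_policy("1234567890123456"), indent=2))
```

The printed JSON can be pasted into the trust policy editor of the role in the RAM console after replacing the account ID with your own.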

2. Deploy and run the ack-ram-authenticator server

    $ git clone https://github.com/haoshuwei/ack-ram-authenticator

Following example.yaml in the repository, the ConfigMap looks like this:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: kube-system
      name: ack-ram-authenticator
      labels:
        k8s-app: ack-ram-authenticator
    data:
      config.yaml: |
        # a unique-per-cluster identifier to prevent replay attacks
        # (good choices are a random token or a domain name that will be unique to your cluster)
        clusterID: <your cluster id>
        server:
          # each mapRoles entry maps an RAM role to a username and set of groups
          # Each username and group can optionally contain template parameters:
          #  1) "{{AccountID}}" is the 16 digit RAM ID.
          #  2) "{{SessionName}}" is the role session name.
          mapRoles:
          # statically map the KubernetesDev role to the kubernetes-dev sub-account
          - roleARN: acs:ram::<your main account uid>:role/KubernetesDev
            username: 2377xxxx # <your subaccount kubernetes-dev uid>

Save it as ack-ram-authenticator-cm.yaml and apply it:

    $ kubectl apply -f ack-ram-authenticator-cm.yaml

Deploy the DaemonSet:

    # extensions/v1beta1 was removed in Kubernetes 1.16; apps/v1 is available from 1.9 onwards
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      namespace: kube-system
      name: ack-ram-authenticator
      labels:
        k8s-app: ack-ram-authenticator
    spec:
      # apps/v1 requires an explicit selector that matches the pod template labels
      selector:
        matchLabels:
          k8s-app: ack-ram-authenticator
      updateStrategy:
        type: RollingUpdate
      template:
        metadata:
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ""
          labels:
            k8s-app: ack-ram-authenticator
        spec:
          # run on the host network (don't depend on CNI)
          hostNetwork: true
          # run on each master node
          nodeSelector:
            node-role.kubernetes.io/master: ""
          tolerations:
          - effect: NoSchedule
            key: node-role.kubernetes.io/master
          - key: CriticalAddonsOnly
            operator: Exists
          containers:
          - name: ack-ram-authenticator
            image: registry.cn-hangzhou.aliyuncs.com/acs/ack-ram-authenticator:v1.0.1
            imagePullPolicy: Always
            args:
            - server
            - --config=/etc/ack-ram-authenticator/config.yaml
            - --state-dir=/var/ack-ram-authenticator
            - --generate-kubeconfig=/etc/kubernetes/ack-ram-authenticator/kubeconfig.yaml
            resources:
              requests:
                memory: 20Mi
                cpu: 10m
              limits:
                memory: 20Mi
                cpu: 100m
            volumeMounts:
            - name: config
              mountPath: /etc/ack-ram-authenticator/
            - name: state
              mountPath: /var/ack-ram-authenticator/
            - name: output
              mountPath: /etc/kubernetes/ack-ram-authenticator/
          volumes:
          - name: config
            configMap:
              name: ack-ram-authenticator
          - name: output
            hostPath:
              path: /etc/kubernetes/ack-ram-authenticator/
          - name: state
            hostPath:
              path: /var/ack-ram-authenticator/

Save it as ack-ram-authenticator-ds.yaml and apply it:

    $ kubectl apply -f ack-ram-authenticator-ds.yaml

Check that ack-ram-authenticator is running normally on the three master nodes of the ACK cluster:

    $ kubectl -n kube-system get po | grep ram
    ack-ram-authenticator-7m92f   1/1   Running   0   42s
    ack-ram-authenticator-fqhn8   1/1   Running   0   42s
    ack-ram-authenticator-xrxbs   1/1   Running   0   42s

3. Configure the ACK cluster's API server to use the ack-ram-authenticator server

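The Kubernetes API server integrates with ACK RAM Authenticator through a token authentication webhook. When the ACK RAM Authenticator server starts, it generates a webhook configuration file and saves it on the host file system (/etc/kubernetes/ack-ram-authenticator/kubeconfig.yaml, the path passed to --generate-kubeconfig in the DaemonSet), so the API server must be configured to use that file.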
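To make the webhook flow concrete: the API server wraps the bearer token in a TokenReview object, posts it to the webhook, and reads the resulting identity from the reply. The sketch below only illustrates the shape of that reply and the role-to-user mapping expressed by mapRoles. It is not the ack-ram-authenticator implementation. The function name, the `verified_role_arn` parameter (standing in for whatever the server learns when it validates the token against RAM), the exact-match comparison, and the sample values are all assumptions.

```python
import json
from typing import Optional


def review_token(map_roles: list, verified_role_arn: Optional[str]) -> dict:
    """Build a TokenReview response for an already-verified role ARN.

    map_roles mirrors the mapRoles list in config.yaml: each entry has a
    "roleARN" and the "username" it maps to. verified_role_arn is None
    when the token could not be validated.
    """
    response = {
        "apiVersion": "authentication.k8s.io/v1beta1",
        "kind": "TokenReview",
        "status": {"authenticated": False},
    }
    for entry in map_roles:
        # Assumption: a plain string comparison; the real server may normalize ARNs.
        if verified_role_arn is not None and entry["roleARN"] == verified_role_arn:
            response["status"] = {
                "authenticated": True,
                "user": {
                    "username": entry["username"],
                    "groups": entry.get("groups", []),
                },
            }
            break
    return response


if __name__ == "__main__":
    # Made-up account ID; the username placeholder is the one used in the ConfigMap.
    roles = [{"roleARN": "acs:ram::1234567890123456:role/KubernetesDev",
              "username": "2377xxxx"}]
    print(json.dumps(review_token(roles, roles[0]["roleARN"]), indent=2))
```

Every developer who assumes KubernetesDev is therefore seen by the API server as the same user, which is why RBAC only has to be set up once for kubernetes-dev.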

On each of the three master nodes, edit the API server manifest /etc/kubernetes/manifests/kube-apiserver.yaml and add the following fields.

spec.containers.command:

    - --authentication-token-webhook-config-file=/etc/kubernetes/ack-ram-authenticator/kubeconfig.yaml

spec.containers.volumeMounts:

    - mountPath: /etc/kubernetes/ack-ram-authenticator/kubeconfig.yaml
      name: ack-ram-authenticator
      readOnly: true

spec.volumes:

    - hostPath:
        path: /etc/kubernetes/ack-ram-authenticator/kubeconfig.yaml
        type: FileOrCreate
      name: ack-ram-authenticator

Restart kubelet for the change to take effect:

    $ systemctl restart kubelet.service

4. Configure kubectl to use the authentication token provided by ack-ram-authenticator

Prepare a kubeconfig file for the developers. Start from the kubeconfig of the kubernetes-dev sub-account (downloaded from the console) and modify it as follows:

    apiVersion: v1
    clusters:
    - cluster:
        server: https://xxx:6443
        certificate-authority-data: xxx
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        user: "kubernetes-dev"   # must match the name under users below
      name: 2377xxx-xxx
    current-context: 2377xxx-xxx
    kind: Config
    preferences: {}
    # the users section below is the modified part
    users:
    - name: "kubernetes-dev"
      user:
        exec:
          apiVersion: client.authentication.k8s.io/v1alpha1
          command: ack-ram-authenticator
          args:
            - "token"
            - "-i"
            - "<your cluster id>"
            - "-r"
            - "acs:ram::xxxxxx:role/kubernetesdev"

The kubeconfig file can now be shared with the developers for download.
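As background on the `exec` stanza: kubectl runs the configured command and expects it to print an ExecCredential JSON object on stdout, whose `status.token` is then sent as the bearer token. A minimal sketch of that contract follows. The sample output is hand-written for illustration (tokens produced by ack-ram-authenticator will look different), and the function name is hypothetical.

```python
import json


def extract_token(plugin_stdout: str) -> str:
    """Return the bearer token from an exec credential plugin's stdout."""
    cred = json.loads(plugin_stdout)
    if cred.get("kind") != "ExecCredential":
        raise ValueError(f"unexpected kind: {cred.get('kind')!r}")
    token = cred.get("status", {}).get("token")
    if not token:
        raise ValueError("ExecCredential has no status.token")
    return token


# Hand-written sample; "example-token" is a placeholder, not a real token.
SAMPLE_OUTPUT = json.dumps({
    "kind": "ExecCredential",
    "apiVersion": "client.authentication.k8s.io/v1alpha1",
    "spec": {},
    "status": {"token": "example-token"},
})

if __name__ == "__main__":
    print(extract_token(SAMPLE_OUTPUT))
```

Because the token is produced on the developer's machine each time, the shared kubeconfig itself contains no secret; what differs per developer is only the AccessKey the plugin reads locally.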

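Before using the shared kubeconfig file, each developer needs to install the ack-ram-authenticator client binary in their own environment.

Download and install the ack-ram-authenticator client binary:

    $ go get -u -v github.com/AliyunContainerService/ack-ram-authenticator/cmd/ack-ram-authenticator

Each of the sub-accounts dev01, dev02, ..., devN then puts their own AccessKey (AK) in the file ~/.acs/credentials:

    {
      "AcsAccessKeyId": "xxxxxx",
      "AcsAccessKeySecret": "xxxxxx"
    }

From this point on, whenever dev01, dev02, ..., devN access cluster resources with the shared kubeconfig, they are all mapped to the permissions of kubernetes-dev.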
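Because the ~/.acs/credentials file described above holds a long-lived AccessKey secret, it is worth creating it with owner-only permissions. The helper below is a sketch under that assumption. Its name is hypothetical, and only the two JSON keys come from the file format shown in this article. It takes the target path as a parameter so it can be tried against a scratch directory first.

```python
import json
import os
from pathlib import Path


def write_credentials(path: Path, access_key_id: str, access_key_secret: str) -> None:
    """Write the credentials JSON to path, readable and writable by the owner only."""
    path.parent.mkdir(parents=True, exist_ok=True)
    payload = {
        "AcsAccessKeyId": access_key_id,
        "AcsAccessKeySecret": access_key_secret,
    }
    # Creating the file with mode 0o600 avoids a window where it is world-readable.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as f:
        json.dump(payload, f, indent=2)
    # Tighten permissions in case the file already existed with a wider mode.
    os.chmod(path, 0o600)
```

A developer would call it as `write_credentials(Path.home() / ".acs" / "credentials", "<your AK id>", "<your AK secret>")`.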

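For the access permissions of the different roles in ACK, refer to the ACK documentation.

Verification:

    $ kubectl get po
    NAME                      READY   STATUS    RESTARTS   AGE
    busybox-c5bd49fb9-n26zj   1/1     Running   0          3d3h
    nginx-5966f7d8c5-rtzb6    1/1     Running   0          3d2h

    $ kubectl get no
    Error from server (Forbidden): nodes is forbidden: User "237753164652952730" cannot list resource "nodes" in API group "" at the cluster scope

Listing pods succeeds, while listing nodes is rejected because the developer permissions do not cover cluster-scoped node resources. The user named in the error is the UID of kubernetes-dev, which shows that the request was mapped to that sub-account.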