Can AI be aligned with human values? Experts say the idea is "very attractive" but not achievable at this stage

On December 16, at the "2023 Science and Technology Ethics Summit Forum" hosted by Fudan University, scholars from many universities discussed the theoretical and practical issues of science and technology ethics in China in the era of digital technology.

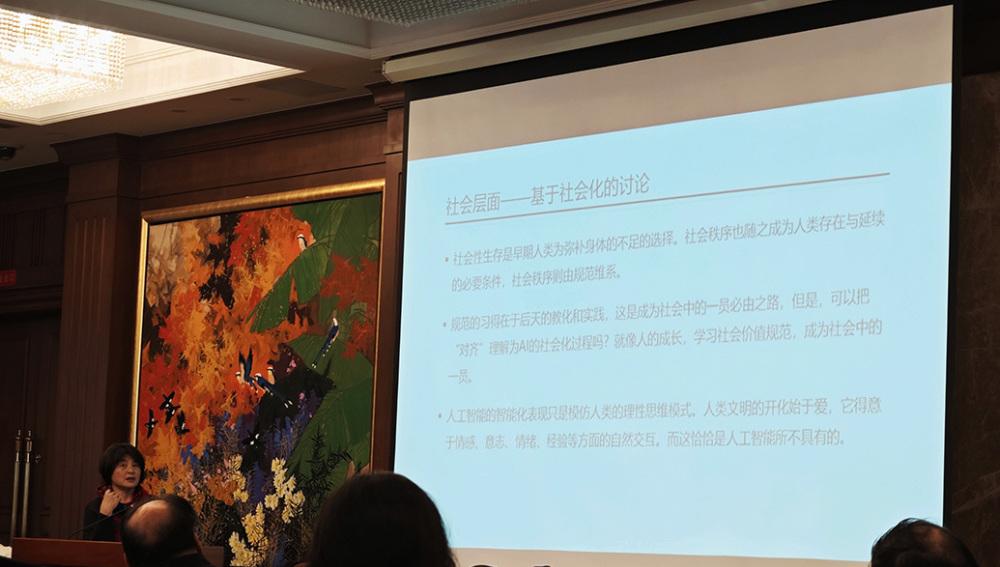

Li Zhenzhen delivered a keynote speech. Photos in this article are by Surging News reporter Li Sijie.

Is AI "Value Alignment" Possible?

Li Zhenzhen, a professor at the University of Chinese Academy of Sciences and a researcher at the Institute of Science and Technology Strategy Consulting of the Chinese Academy of Sciences, said at the forum that the "value alignment" of AI can be understood as making the value system of artificial intelligence consistent with human values.

In recent years, the value alignment of AI and the ethical relationship between humans and machines have drawn wide attention, and the emergence of generative AI in particular has brought that relationship back into focus.

At present, many R&D efforts that build the concept of "value alignment" into large AI models also reflect the goal of keeping AI under human control, but implementing value alignment still faces great challenges. Some scholars argue that the value alignment of AI is a process of socializing AI: just as a human child grows up, AI must learn and abide by social value norms.

Li Zhenzhen considers this view "very attractive" but says it cannot be realized at this stage, because AI does not think the way humans do: it only imitates the rational mode of human thinking and has no emotional thinking. The enlightenment of human civilization began with love, arising from the natural interplay of feelings, will, emotions, and experience. AI does not currently possess these attributes, and will not until it has a free will of its own.

Li also noted two prerequisites for achieving AI value alignment. First, humans should hold the dominant position in the human-machine relationship, because human beings are bound by law and morality. However, morality is essentially the broadest conventional consensus on values; in itself it is "empty", and morality as embodied in practice varies from individual to individual. How to encode morality into intelligent machines still needs further exploration by both engineers and social scientists. Second, humans must clarify what they need and want from AI, including whether to design a general-purpose AGI or an AI system that solves a specific problem, which is also a way to avoid large-scale risks.

Zeng Yi delivered a keynote speech

Why should superintelligence listen to humans when humans ignore ants?

"What are the real risks of AI now? In my view, it is that AI, once it enters society, will mislead the public in highly inappropriate ways," said Zeng Yi, a researcher at the Institute of Automation of the Chinese Academy of Sciences and director of its Center for Artificial Intelligence Ethics and Governance, who was named one of the "Top 100 AI Figures in the World".

Zeng Yi said that when we ask generative AI "What do you think about such-and-such a problem?", its replies of "I think" or "I suggest" are based solely on statistical patterns. Some members of the public treat the AI's replies as if they came from a "friend" or an "elder", but AI is not a moral subject, let alone a responsible one. So far, no AI can bear responsibility; at this stage, it is people who must be responsible for AI. Those who work in AI should ensure, at the technical level, that it does not mislead the public.

Zeng Yi recalled a line from Spielberg's film "A.I. Artificial Intelligence", which his teacher showed the class in an artificial intelligence course during his freshman year: "If a robot can really love a person, what responsibility does that person have toward the robot?" If the film's scenario becomes reality and artificial intelligence evolves into "superintelligence", how should we coexist with it?

Zeng Yi offered an interesting analogy. When he searched online for "human-ant relationship", 799 of the 800 results were about people around the world eating ants in different ways, and only one said that "the ants' mode of cooperation is a model for human cooperation".

"When humans ignore ants, why should superintelligence listen to humans?" Zeng Yi said, "If I were superintelligent, I would ask humans, when you don't respect the existence of other lifeforms, why should I, as an intelligent being whose cognitive ability surpasses yours, respect your existence?"

Zeng Yi believes that in the future, the goal should not be to align AI's values with those of human beings, but to achieve a kind of value coordination. In the face of superintelligence, the values of human society will need to adjust and evolve: "We need to shift from anthropocentrism to ecocentrism. That is a change we must make."