Jia Jiaya's team and MIT release an ultra-long text extension technique: two lines of code to lift the length limits of large-model dialogue

Text: Guo Xiaojing, Tencent Technology

Recently, Jia Jiaya's team at The Chinese University of Hong Kong and MIT released a technique called LongLoRA: with only two lines of code and a single machine with eight A100 GPUs, the context length of a 7B model can be extended to 100k tokens, and that of a 70B model to 32k tokens. The team also released LongAlpaca, the first 70B-parameter large language model for long-text dialogue.

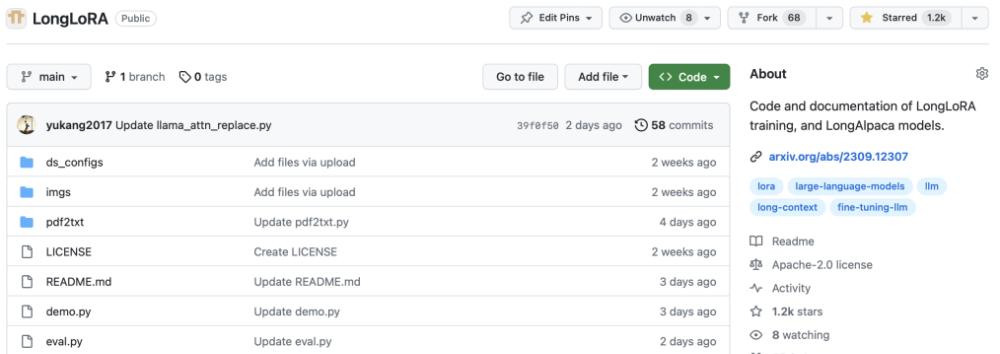

As soon as the new technique and model were released, they climbed the trending lists of major open-source sites: first on Hugging Face, first on Papers with Code, and fifth among all Python projects on GitHub. The repository passed 1,000 GitHub stars within a week, and related technical posts on Twitter drew nearly 180,000 views.

GitHub stars have reached 1.3K

Related tech posts on Twitter received nearly 180,000 views

Getting lost halfway, the model slacking off, the model getting dumber the longer the context grows... Anyone who has used large language model products will have run into the limit on input length to some extent. When you want to discuss somewhat longer content with a large model, you have to split the input, and the model soon forgets the key points of the earlier parts.

This is a typical dialogue defect of large language models, much like a child with an attention deficit who struggles to focus on a new book. At its root, the model lacks the ability to process long text. The results released by Jia Jiaya's team have drawn so much attention on major open-source sites precisely because they break through this limit on long-text processing.

First, long-text processing is no longer a blind spot for large language models

LongLoRA addresses, for the first time, this dialogue defect shared by large language models worldwide: papers dozens of pages long, reports running to hundreds of pages, and even entire books are no longer a blind spot for large models.

Some practitioners enthused that LongLoRA is "a lamp of hope in the maze of large language models": it reflects the industry's renewed attention to long-text large language models, effectively expands the context window, lets models consider and process long text sequences, and is an innovative contribution to the field.

Beyond the technique itself, another difficulty in getting large language models to handle long text is the lack of publicly available long-text dialogue data.

To this end, the research team collected 9K long-text question-answer pairs, covering questions about famous books, papers, in-depth reports, and even financial statements.

Since answering long questions alone is not enough, the team mixed 3K short question-answer pairs with the 9K long ones for training, so that the long-text model also keeps its short-text dialogue ability. The complete dataset, called LongAlpaca-12k, is now open source.
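As a rough illustration of the mixing step, here is a minimal sketch that merges a long-text and a short-text Q&A list into one shuffled training set. The record fields (`instruction`, `output`, `kind`) and the helper `mix_datasets` are hypothetical placeholders; the actual LongAlpaca-12k file format and sampling scheme may differ.

```python
import random


def mix_datasets(long_qa, short_qa, seed=0):
    """Combine long- and short-text Q&A records and shuffle them together.

    Shuffling interleaves the two sources so that training batches see both
    long and short dialogue instead of one block after the other.
    """
    mixed = list(long_qa) + list(short_qa)
    random.Random(seed).shuffle(mixed)  # seeded for reproducibility
    return mixed


# Hypothetical placeholder records standing in for the real corpora:
# 9,000 long-text pairs and 3,000 short-text pairs.
long_qa = [{"instruction": f"long question {i}", "output": "placeholder answer", "kind": "long"}
           for i in range(9000)]
short_qa = [{"instruction": f"short question {i}", "output": "placeholder answer", "kind": "short"}
            for i in range(3000)]

dataset = mix_datasets(long_qa, short_qa)
print(len(dataset))                               # 12000
print(sum(r["kind"] == "long" for r in dataset))  # 9000
```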

Using the LongAlpaca-12k dataset, the research team trained and evaluated models at three parameter scales (7B, 13B, and 70B) and open-sourced them as LongAlpaca-7B, LongAlpaca-13B, and LongAlpaca-70B.

Second, blind-pick demos: testing LongAlpaca, the large model built by applying LongLoRA to the 12K Q&A corpus

(1) Have the system read a new paper and suggest revisions according to the ICLR review guidelines, to improve the paper's chances of acceptance.

LongAlpaca suggests that the paper's chances of acceptance would improve by articulating its novelty more precisely, providing more rigorous and comparative experimental results (including specific datasets and metrics), discussing broader applications and future directions, and focusing on its key contributions and impact.

Next, have the system read two different new papers and ask LongAlpaca to summarize the stylistic differences between ICLR and CVPR papers. LongAlpaca concludes that CVPR papers tend to be more structured and experimental, emphasizing practicality and technical detail, whereas ICLR papers are more flexible in style, focusing on key theoretical analyses and mathematical derivations rather than a standard format.

The trained LongAlpaca model readily takes in new, long academic papers and answers related academic questions quite accurately.

(2) Test LongAlpaca's ability to interpret material in economics, a field with a relatively high bar for reading comprehension.

Given a collection of the IMF's global economic outlook overviews from 2012 to 2023, the model summarizes the economic situation year by year and forecasts future trends. According to LongAlpaca, the global economic outlook for 2023 is uncertain, with growth expected to slow compared with 2022. In the coming years, global expansion will be moderate and weaker than expected, while major challenges such as geopolitical tensions and financial-market volatility persist and need to be handled carefully through multilateral cooperation and structural reform.

(3) Test LongAlpaca's ability to read novels, asking it to analyze the content after reading.

Below, we compare the 13B-parameter versions of LongAlpaca and Llama2.

In the classic novel "Journey to the West", why is Sun Wukong so powerful when he wreaks havoc in the Heavenly Palace, yet repeatedly thwarted on the journey to fetch the scriptures?

LongAlpaca gave five reasons, which can be roughly summarized as: "The Monkey King's immaturity, strong opponents, limited strength, enemy deception, and an initial lack of companions led to his failures on the journey. But over time, he gained wisdom, experience, and comrades, which enabled him to defeat his most formidable enemies."

Llama2's answer is simpler: "While he does cause havoc in the Heavenly Palace, it is not necessarily accurate to say that he represents an undefeated force. In fact, he was eventually defeated by the powerful Tang Sanzang in Journey to the West." Llama2 did not give the right answer and also said it needed more information about the content.

Next, have the system read "The Three-Body Problem" and ask: why did Ye Wenjie contact the aliens in the first book, and why did she later regret it?

LongAlpaca answers that Ye Wenjie's initial contact with the aliens was driven by idealism and revenge, but over time her view of humanity changed: she realized the danger she might bring to all of humanity, and her remorse grew day by day. The answer was very clear.

Llama2's answer was: "Through interacting with aliens, she hopes to gain a deeper understanding of the nature of the universe and her place in it. Ye Wenjie gradually realized that aliens and their technology could not solve her problem." The answer was generic, and the model then went on to comment on the novel as a whole, drifting away from the question.

These answers suggest that although some models, such as Llama2 [2], may have seen the novels during pre-training, their answers are far from ideal when they are asked a short question based only on the novel's title. LongAlpaca performs somewhat better on long-text tasks such as reading academic papers, commenting on global economic trends, and analyzing novels.

Third, how LongLoRA breaks through the limits on long-text processing

When a large language model processes long text, most of the computational cost lies in the self-attention mechanism, whose overhead grows quadratically with the length of the text.
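To make "grows quadratically" concrete, here is a back-of-the-envelope sketch that counts query-key score entries for a single attention head in a single layer. It deliberately ignores the head dimension, the number of heads and layers, and memory-efficient attention kernels, so it shows only the scaling trend, not real FLOP or memory figures.

```python
def attention_score_count(seq_len: int) -> int:
    """Query-key scores in one full self-attention head.

    Every token attends to every token, so there are seq_len * seq_len entries.
    """
    return seq_len * seq_len


for n in (4_096, 8_192, 32_768, 100_000):
    print(f"{n:>7} tokens -> {attention_score_count(n):>15,} scores per head per layer")
```

Doubling the length from 4,096 to 8,192 tokens quadruples the count, and at 100k tokens a single head in a single layer already has 10^10 scores to compute.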

To address this, the research team proposed LongLoRA, which approximates global self-attention through grouping and shifting.

Simply put, the tokens of a long text are split into groups, self-attention is computed only within each group, and the grouping is offset (shifted) for different attention heads. This greatly reduces the amount of computation while still letting information flow across the global receptive field.
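A toy sketch of the grouping pattern, assuming a 16-token sequence, a group size of 4, and a shift of half a group for the second half of the attention heads (the LongLoRA paper calls this shifted sparse attention, S²-Attn). The function names are illustrative and not taken from the official code.

```python
def group_positions(seq_len, group_size, shift=0):
    """Token positions in each attention group after rotating the sequence
    left by `shift`. Self-attention is computed only within a group."""
    assert seq_len % group_size == 0
    order = [(i + shift) % seq_len for i in range(seq_len)]  # rotated positions
    return [order[s:s + group_size] for s in range(0, seq_len, group_size)]


def score_count(seq_len, group_size):
    """Query-key scores per head: seq_len // group_size groups of group_size**2."""
    return (seq_len // group_size) * group_size ** 2


N, G = 16, 4
plain = group_positions(N, G)            # first half of the heads
shifted = group_positions(N, G, G // 2)  # second half: shifted by half a group
print(plain)    # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]
print(shifted)  # [[2, 3, 4, 5], [6, 7, 8, 9], [10, 11, 12, 13], [14, 15, 0, 1]]
print(score_count(N, N), score_count(N, G))  # 256 64
```

In the plain grouping, tokens 3 and 4 never see each other; in the shifted grouping they share a group, which is how the two halves of the heads together pass information across group boundaries. The per-head score count drops from N² to N·G.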

The implementation is also very concise: it takes only two lines of code.
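The exact two lines are given in the paper and repository linked at the end of this article. As a hedged illustration of what they do, here is a pure-Python toy version (no PyTorch) that shifts half of the attention heads by half a group, runs ordinary attention inside each group, and shifts the output back. Assumptions: a single sequence with no batch dimension, a `[heads][tokens][dim]` layout, no causal mask, and illustrative function names that are not part of LongLoRA's API.

```python
import math
import random


def attend(q, k, v):
    """Softmax attention for one head over a list of tokens (no causal mask).

    q, k, v: lists of float vectors, one vector per token.
    """
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w * vj[d] for w, vj in zip(weights, v)) / total
                    for d in range(len(v[0]))])
    return out


def rotate_left(seq, n):
    """Rotate a list left by n positions (negative n rotates right)."""
    n %= len(seq)
    return seq[n:] + seq[:n]


def shifted_group_attention(q, k, v, group_size):
    """Grouped attention where the second half of the heads is shifted.

    q, k, v: nested lists shaped [num_heads][seq_len][head_dim].
    """
    num_heads, seq_len = len(q), len(q[0])
    assert seq_len % group_size == 0 and num_heads % 2 == 0
    out = []
    for h in range(num_heads):
        shift = group_size // 2 if h >= num_heads // 2 else 0
        qh, kh, vh = (rotate_left(t[h], shift) for t in (q, k, v))
        head_out = []
        for start in range(0, seq_len, group_size):
            window = slice(start, start + group_size)
            head_out += attend(qh[window], kh[window], vh[window])
        out.append(rotate_left(head_out, -shift))  # undo the shift
    return out


# Usage on random data: 4 heads, 8 tokens, head dimension 3.
rng = random.Random(0)
H, N, D = 4, 8, 3


def random_tensor():
    return [[[rng.gauss(0, 1) for _ in range(D)] for _ in range(N)] for _ in range(H)]


q, k, v = random_tensor(), random_tensor(), random_tensor()
grouped = shifted_group_attention(q, k, v, group_size=4)

# Sanity check: one group spanning the whole sequence equals full attention.
full = [attend(q[h], k[h], v[h]) for h in range(H)]
single_group = shifted_group_attention(q, k, v, group_size=N)
max_diff = max(abs(a - b)
               for h in range(H)
               for row_f, row_s in zip(full[h], single_group[h])
               for a, b in zip(row_f, row_s))
print(max_diff)  # tiny: floating-point rounding only
```

Setting `group_size` equal to the sequence length recovers ordinary full attention, which makes a quick sanity check; smaller groups trade exact global attention for a cost proportional to N·G instead of N².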

LongLoRA also explores low-rank training. Existing low-rank methods such as LoRA [5] do not work well for extending context length. Building on low-rank training, LongLoRA additionally makes the embedding layer and the normalization layers trainable during fine-tuning, bringing its results close to those of full fine-tuning.
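For readers new to LoRA, a minimal pure-Python sketch of a LoRA-adapted linear layer, following the standard formulation in [5]: a frozen weight W plus a trainable low-rank update BA scaled by alpha/r, with B initialized to zero. The rank, alpha, and initialization scale are illustrative, and the parameter-name filter at the end only mimics the idea of also unfreezing embedding and normalization layers; the names it matches are hypothetical.

```python
import random


def matvec(m, x):
    """Matrix-vector product for nested lists."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x); W is frozen, only A and B are trained."""

    def __init__(self, weight, rank, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen pretrained weight, d_out x d_in
        self.A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]  # r x d_in
        self.B = [[0.0] * rank for _ in range(d_out)]  # d_out x r, zero at start
        self.scale = alpha / rank

    def __call__(self, x):
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]


def lora_param_count(d_out, d_in, rank):
    """Trainable parameters LoRA adds to one d_out x d_in projection."""
    return rank * d_in + d_out * rank


def is_trainable(param_name):
    """Hypothetical naming scheme: LoRA factors plus embedding and norm layers."""
    return any(tag in param_name for tag in ("lora_", "embed", "norm"))


W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
layer = LoRALinear(W, rank=1, alpha=2.0)
print(layer([1.0, -1.0]))  # [-1.0, -1.0, -1.0]: B is zero, so output equals W x
print(lora_param_count(4096, 4096, 8), 4096 * 4096)  # 65536 16777216
```

For a 4096 × 4096 projection with rank 8, LoRA trains 65,536 parameters instead of 16,777,216, about 0.4%. Because B starts at zero, fine-tuning begins exactly from the pretrained model's behavior.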

For context extension and training at different lengths, LongLoRA, LoRA, and full-parameter fine-tuning can be compared along three dimensions:

(1) Perplexity: the perplexity of the original LoRA method deteriorates as the text length grows, while LongLoRA and full-parameter fine-tuning maintain good results at every text length.

(2) Memory consumption: both LongLoRA and the original LoRA save substantial memory compared with full-parameter fine-tuning. For example, when training at a length of 8k, LongLoRA cuts memory consumption from 46.3GB to 25.6GB relative to full-parameter fine-tuning.

(3) Training time: when training at a length of 64k, LongLoRA cuts training time from about 90 to 100 hours with conventional LoRA to 52.4 hours, while full-parameter fine-tuning takes more than 1,000 hours.
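A quick arithmetic check that turns the reported figures into relative savings. The inputs are only the numbers quoted in the three points above; the outputs are derived ratios, not additional measurements.

```python
# Figures as reported in the article.
full_ft_mem_gb, longlora_mem_gb = 46.3, 25.6  # 8k-length training
lora_hours_range = (90.0, 100.0)              # 64k-length training, conventional LoRA
longlora_hours = 52.4                         # 64k-length training, LongLoRA
full_ft_hours_min = 1000.0                    # full fine-tuning "exceeds 1000 hours"

mem_saving = 1 - longlora_mem_gb / full_ft_mem_gb
speedup_vs_lora = [h / longlora_hours for h in lora_hours_range]
speedup_vs_full = full_ft_hours_min / longlora_hours  # a lower bound

print(f"memory saved vs full fine-tuning: {mem_saving:.1%}")  # 44.7%
print(f"speedup vs LoRA: {speedup_vs_lora[0]:.2f}x to {speedup_vs_lora[1]:.2f}x")  # 1.72x to 1.91x
print(f"speedup vs full fine-tuning: more than {speedup_vs_full:.1f}x")  # 19.1x
```

In other words, roughly a 45% memory saving at 8k, and about a 1.7x to 1.9x training speedup over LoRA at 64k.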

With its minimalist training method, low compute and time requirements, and strong accuracy, LongLoRA is practical to adopt at scale. The technique and models are now fully open source, and interested users can deploy them and try them out for themselves.

Code and demo: https://github.com/dvlab-research/LongLoRA

Paper: https://arxiv.org/pdf/2309.12307.pdf

References

[1] LLaMA team. LLaMA: Open and efficient foundation language models. arXiv:2302.13971, 2023.

[2] Llama 2 team. Llama 2: Open foundation and fine-tuned chat models. arXiv:2307.09288, 2023.

[3] Shouyuan Chen, Sherman Wong, Liangjian Chen, and Yuandong Tian. Extending context window of large language models via positional interpolation. arXiv:2306.15595, 2023.

[4] Szymon Tworkowski, Konrad Staniszewski, Mikolaj Pacek, Yuhuai Wu, Henryk Michalewski, and Piotr Milos. Focused Transformer: Contrastive training for context scaling. arXiv:2307.03170, 2023.

[5] Edward J. Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. LoRA: Low-rank adaptation of large language models. In ICLR, 2022.