Recurrent neural networks are able to learn the temporal dependence across multiple time steps in sequence prediction problems. Modern recurrent neural networks such as the Long Short-Term Memory (LSTM) network are trained with a variation of the backpropagation algorithm called Backpropagation Through Time. This algorithm has been further modified to be more efficient on sequence prediction problems with very long sequences, giving Truncated Backpropagation Through Time (TBPTT).

An important configuration parameter when training a recurrent neural network such as an LSTM with truncated backpropagation through time is the number of time steps to use as input, that is, exactly how to split very long input sequences into subsequences to get the best performance.

Below are 6 different ways you can split up very long input sequences to effectively train recurrent neural networks with truncated backpropagation through time in Python using Keras.

Truncated Backpropagation Through Time

Backpropagation is a training algorithm that updates the weights of a neural network to minimize the error between the expected output and the predicted output for a given input.

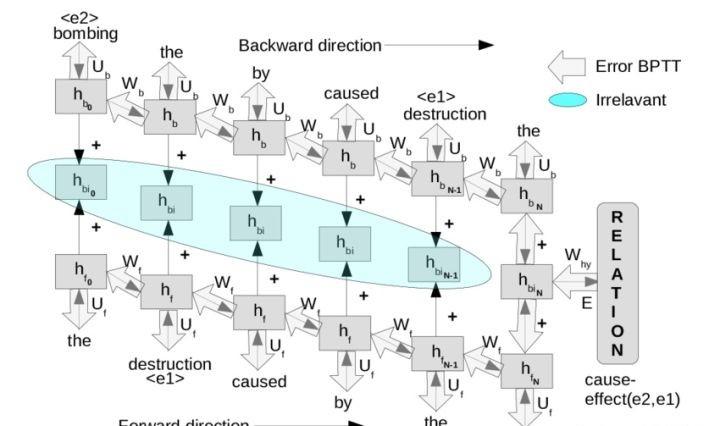

For sequence prediction problems where there is an order dependency between observations, recurrent neural networks are used instead of classical feed-forward neural networks. Recurrent neural networks are trained using a variant of a backpropagation algorithm called Backpropagation Through Time, or BPTT for short.

In effect, BPTT unfolds the recurrent neural network and propagates errors backwards over the entire input sequence, one time step at a time. The weights are then updated with the accumulated gradient.

BPTT can be slow to train recurrent neural networks on problems with long input sequences. In addition to speed, accumulating gradients over so many time steps can cause the values to shrink toward zero (vanishing gradients) or to grow until they overflow (exploding gradients).

A common modification of BPTT is to limit the number of time steps used on the backward pass, in effect estimating the gradient used to update the weights rather than calculating it fully. This variant is called Truncated Backpropagation Through Time, or TBPTT.

The TBPTT training algorithm has two parameters:

- k1: Defines the number of time steps shown to the network on the forward pass.

- k2: Defines the number of time steps to look at when estimating the gradient on the backward pass.

As such, we can use the notation TBPTT(k1, k2) when considering how to configure the training algorithm. Classical, non-truncated BPTT corresponds to k1 = k2 = n, where n is the input sequence length.
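To make the roles of k1 and k2 concrete, here is a small pure-Python sketch that lists, for a sequence of n time steps, when a weight update happens and which time steps the backward pass covers. It assumes the common reading in which an update is made after every k1 forward steps and the gradient is propagated back over the most recent k2 steps; the function name `tbptt_schedule` is hypothetical.

```python
def tbptt_schedule(n, k1, k2):
    """Hypothetical helper: (update_step, first_step, last_step) for each TBPTT(k1, k2) update.

    Time steps are numbered 1..n. An update happens after every k1 forward steps,
    and the backward pass covers at most the last k2 steps (never before step 1).
    """
    schedule = []
    for t in range(k1, n + 1, k1):
        schedule.append((t, max(1, t - k2 + 1), t))
    return schedule


print(tbptt_schedule(10, 10, 10))  # [(10, 1, 10)]  -> classical BPTT, k1 = k2 = n
print(tbptt_schedule(6, 2, 4))     # [(2, 1, 2), (4, 1, 4), (6, 3, 6)]
```

With k1 = k2 = n the schedule collapses to a single update over the whole sequence, which is classical BPTT; smaller values trade gradient accuracy for more frequent, cheaper updates.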

Impact of TBPTT configuration on the RNN sequence model

Modern recurrent neural networks such as LSTMs can use their internal state to remember over very long input sequences, such as thousands of time steps.

This means that the TBPTT configuration, that is, the choice of the number of time steps, does not necessarily define the memory of the network you are optimizing. You can choose when to reset the internal state of the network separately from the regime used to update the network weights.

Instead, the choice of TBPTT parameters influences how the network estimates the error gradient used to update the weights. More generally, the configuration defines the number of time steps from which the network may be considered to model your sequence problem.

We can state this formally as:

$$\hat{y}(t) = f\big(X(t), X(t-1), X(t-2), \ldots, X(t-n)\big)$$

where $\hat{y}(t)$ is the output at a specific time step, $f(\ldots)$ is the relationship being approximated by the recurrent neural network, and $X(t)$ is the observation at a specific time step.

It is conceptually similar to (but quite different in practice from) the window size of a multilayer perceptron trained on a time series problem, or the p and q parameters of a linear time series model such as ARIMA. TBPTT defines the scope of the input sequence the model sees during training.
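The window analogy can be made concrete by framing a univariate series as overlapping windows, the way one would for a multilayer perceptron: each sample holds the n + 1 observations X(t-n), ..., X(t) and is paired with the target at time t. This NumPy sketch assumes one target value per time step; `make_windows` is a hypothetical helper name, not part of Keras.

```python
import numpy as np


def make_windows(x, y, n):
    """Hypothetical helper: pair each window X(t-n), ..., X(t) with the target y(t), for t = n .. len(x) - 1."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    windows = np.stack([x[t - n : t + 1] for t in range(n, len(x))])
    # Add a trailing feature axis: [samples, timesteps, features] with timesteps = n + 1
    return windows[:, :, np.newaxis], y[n:]


X, targets = make_windows(np.arange(6), np.arange(6) * 10, n=2)
print(X.shape)            # (4, 3, 1)
print(X[0, :, 0].tolist())  # [0.0, 1.0, 2.0]
print(targets.tolist())   # [20.0, 30.0, 40.0, 50.0]
```

Here the window length n + 1 plays the role that the number of time steps plays for an LSTM trained with TBPTT, except that the LSTM can also carry information beyond the window in its internal state.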

Keras implementation of TBPTT

The Keras deep learning library provides an implementation of TBPTT for training recurrent neural networks. It is more restricted than the general form described above: k1 and k2 are equal to each other and fixed.

- TBPTT(k1, k2), where k1 = k2

This is realized through the fixed-size three-dimensional input required to train recurrent neural networks such as the Long Short-Term Memory network, or LSTM.

The LSTM expects input data to have the dimensions: samples, time steps, and features.

It is the second dimension of this input format, the time steps, that defines the number of time steps used in the forward and backward passes on your sequence prediction problem.

Therefore, you must choose the number of time steps carefully when preparing input data for sequence prediction problems in Keras.

The choice of time steps will influence both:

- The internal state accumulated during the forward pass.

- The gradient estimate used to update the weights on the backward pass.

Note that by default, the internal state of the network is reset after each batch. More explicit control over when the internal state is reset can be achieved by using a so-called stateful LSTM (stateful=True in Keras) and calling the reset operation manually.

Prepare sequence data for TBPTT in Keras

The way you break up your sequence data defines the number of time steps used in the forward and backward passes of BPTT.

Therefore, you must think carefully about how you will prepare your training data. Below are 6 techniques you may consider.

1. Use the data as is

If the number of time steps in each sequence is modest, such as tens or hundreds of time steps, you can use the input sequences as-is.

A practical limit of roughly 200 to 400 time steps has been suggested for TBPTT. If your sequence data is within this range or shorter, you can reshape the sequence observations directly into the time steps of the input data.

For example, if you have a collection of 100 univariate sequences of 25 time steps each, you can reshape it into 100 samples, 25 time steps, and 1 feature, or [100, 25, 1].
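As a minimal NumPy sketch of this reshape, assuming the sequences are already held in a 2D array of shape [sequences, time steps] (random placeholder values here, not real data):

```python
import numpy as np

# Placeholder data: 100 univariate sequences, each with 25 time steps
sequences = np.random.default_rng(0).normal(size=(100, 25))

# Keras recurrent layers expect [samples, timesteps, features]; a univariate series has 1 feature
x = sequences.reshape(100, 25, 1)
print(x.shape)  # (100, 25, 1)
```

Each sample then has an input shape of (25, 1), which is what you would declare as the per-sample input shape of the LSTM layer.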

2. Naïve data splitting

If you have long input sequences, such as thousands of time steps, you may need to decompose long input sequences into multiple consecutive subsequences.

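This requires a stateful LSTM in Keras, so that the internal state is preserved across the subsequences and only reset at the end of each true, complete input sequence.

For example, if you have 100 input sequences of 50,000 time steps each, each input sequence could be divided into 100 subsequences of 500 time steps. One input sequence then becomes 100 samples, so the 100 original samples become 10,000. The input dimensions for Keras would be 10,000 samples, 500 time steps, and 1 feature, or [10000, 500, 1]. Care is needed to preserve the state across the 100 subsequences of each original sequence and to reset it only after those 100 samples. One way is to train with a batch size of 1 and reset the state explicitly after every 100 samples. With a batch size of 100 instead, the samples must be ordered so that each batch holds the same subsequence position from all 100 sequences, because a stateful LSTM carries the state of the i-th sample in one batch over to the i-th sample of the next batch, not from one sample to the next within a batch.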
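The following NumPy sketch shows the naive split for the 100 × 50,000 example, plus the two sample orderings mentioned above. The helper names `naive_split`, `reset_points`, and `interleave` are hypothetical, and the data is a placeholder (each value is simply its position in the flattened array) so the ordering is easy to check.

```python
import numpy as np


def naive_split(sequences, subseq_len):
    """Hypothetical helper: [n_seq, n_steps] -> [n_seq * k, subseq_len, 1], with k = n_steps // subseq_len."""
    sequences = np.asarray(sequences)
    n_seq, n_steps = sequences.shape
    if n_steps % subseq_len != 0:
        raise ValueError("subseq_len must be a factor of the sequence length")
    k = n_steps // subseq_len
    # Row-major reshape keeps the k subsequences of each original sequence adjacent and in order
    return sequences.reshape(n_seq * k, subseq_len, 1), k


def reset_points(n_samples, per_sequence):
    """Hypothetical helper: sample indices after which to reset a stateful LSTM trained with batch size 1."""
    return list(range(per_sequence - 1, n_samples, per_sequence))


def interleave(x, n_seq, k):
    """Hypothetical helper: reorder so batch j (of size n_seq) holds subsequence j of every sequence."""
    subseq_len = x.shape[1]
    return x.reshape(n_seq, k, subseq_len, 1).transpose(1, 0, 2, 3).reshape(n_seq * k, subseq_len, 1)


# Placeholder data: 100 sequences of 50,000 time steps
data = np.arange(100 * 50_000, dtype=np.int32).reshape(100, 50_000)

x, k = naive_split(data, 500)
print(x.shape, k)  # (10000, 500, 1) 100
resets = reset_points(len(x), k)  # batch size 1: reset after samples 99, 199, ..., 9999
xi = interleave(x, 100, k)        # batch size 100: reset once after all k batches
```

The design choice is only about ordering: both layouts contain the same 10,000 samples, but only the ordering that matches the batch size lets the stateful LSTM pass state between consecutive subsequences of the same original sequence.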

A split that divides the whole sequence neatly into fixed-size subsequences is preferred. The choice of factor of the full sequence length (the subsequence length) is arbitrary, hence the name "naive data split".

Splitting a sequence into subsequences this way does not take into account domain information about a suitable number of time steps for estimating the error gradient used to update the weights.

3. Domain-specific data splitting

It is difficult to know the correct number of time steps required to provide a useful error gradient estimate.

We can use the naive method to get a model quickly, but the model may be far from optimal. Alternatively, we can use domain-specific information to estimate the number of time steps that will be relevant to the model while it learns the problem.

For example, if the sequence problem is a regression time series, perhaps a review of the autocorrelation and partial autocorrelation plots can inform the choice of time steps.
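As a hedged sketch of this idea, the sample autocorrelation can be computed directly with NumPy and compared against the approximate 95% bound for white noise, 1.96 / sqrt(n), to see which lags carry signal. The helper names and the sine-wave placeholder series are assumptions for illustration; in practice you would usually look at the plots themselves (for example with statsmodels' `plot_acf` and `plot_pacf`) and apply judgment rather than a fixed rule.

```python
import numpy as np


def autocorrelation(series, max_lag):
    """Hypothetical helper: sample autocorrelation r_0 .. r_max_lag of a 1D series."""
    x = np.asarray(series, dtype=float)
    x = x - x.mean()
    denom = np.dot(x, x)
    return np.array([np.dot(x[: len(x) - k], x[k:]) / denom for k in range(max_lag + 1)])


def significant_lags(series, max_lag):
    """Hypothetical helper: lags whose |r_k| exceeds the approximate 95% white-noise bound."""
    acf = autocorrelation(series, max_lag)
    bound = 1.96 / np.sqrt(len(series))
    return [k for k in range(1, max_lag + 1) if abs(acf[k]) > bound]


# Placeholder series: a sine wave with a period of 12 steps
series = np.sin(2 * np.pi * np.arange(240) / 12)
print(significant_lags(series, 24))
```

For a series like this, the strong correlation at lag 12 would suggest trying subsequences that span at least one full period.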

If the sequence problem is a natural language processing problem, perhaps the input sequence can be split by sentence and then padded to a fixed length, or split according to the average sentence length in the domain.
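Both options can be sketched in plain Python. This assumes very naive sentence splitting (on `.`, `!`, or `?` followed by whitespace) and whitespace tokenization; a real pipeline would use a proper tokenizer. The helper names and the `<pad>` token are hypothetical.

```python
import re


def split_sentences(text):
    """Hypothetical helper: naive sentence split on ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def sentences_to_padded_tokens(text, length, pad="<pad>"):
    """Hypothetical helper: one row per sentence, truncated or padded to `length` tokens."""
    rows = []
    for sentence in split_sentences(text):
        tokens = sentence.split()[:length]                     # truncate long sentences
        rows.append(tokens + [pad] * (length - len(tokens)))  # pad short ones
    return rows


def mean_sentence_length(text):
    """Average number of whitespace tokens per sentence, one candidate subsequence length."""
    sentences = split_sentences(text)
    return sum(len(s.split()) for s in sentences) / len(sentences)


text = "The cat sat. The dog barked loudly at night! Why?"
for row in sentences_to_padded_tokens(text, 4):
    print(row)
print(mean_sentence_length(text))
```

The padded rows would then be mapped to integer or embedding features before being fed to the network.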

Think broadly and consider what domain-specific knowledge you can use to break the sequence into meaningful chunks.

4. Systematic data splitting (e.g. grid search)

Rather than guessing a suitable number of time steps, you can systematically evaluate a suite of different subsequence lengths for your sequence prediction problem.

You can perform a grid search over subsequence lengths and adopt the configuration that results in the model with the best average performance.
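A minimal pure-Python sketch of such a grid search: candidate lengths are the factors of the full sequence length, and each candidate is scored by its mean over several runs. The `evaluate` callback is a hypothetical stand-in for "train a model with this subsequence length and return its validation error"; here a toy lambda is used so the sketch runs on its own.

```python
from statistics import mean


def factors(n):
    """Subsequence lengths that divide a sequence of length n evenly."""
    return [d for d in range(1, n + 1) if n % d == 0]


def grid_search(lengths, evaluate, repeats=30):
    """Hypothetical helper: average evaluate(length, run) over `repeats` runs; lower is better."""
    scores = {length: mean(evaluate(length, run) for run in range(repeats)) for length in lengths}
    best = min(scores, key=scores.get)
    return best, scores


# Toy stand-in for training and scoring a model (a real one would use `run` to vary the seed)
best, scores = grid_search(factors(12), lambda length, run: abs(length - 4), repeats=3)
print(best)  # 4
```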

Some notes of caution if you are considering this approach:

- Start with subsequence lengths that are factors of the full sequence length.
- Use padding, and perhaps masking, if you explore subsequence lengths that are not factors of the full sequence length.
- Consider using a slightly over-provisioned network (more memory units and more training epochs) than is needed to solve the problem, to help rule out network capacity as a limitation of the experiment.
- Take the average performance over multiple runs (for example, 30) of each different configuration.
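For lengths that do not divide the sequence evenly, one option is to post-pad the sequence and keep a mask of the real time steps. This NumPy sketch assumes a 1D univariate sequence and a pad value that never occurs in the real data; `split_with_padding` is a hypothetical helper. In Keras, a `Masking` layer with a matching `mask_value` can then tell downstream recurrent layers to skip the padded steps.

```python
import numpy as np


def split_with_padding(sequence, subseq_len, pad_value=0.0):
    """Hypothetical helper: post-pad to a multiple of subseq_len, split, and return a mask of real steps."""
    seq = np.asarray(sequence, dtype=float)
    n_sub = -(-len(seq) // subseq_len)  # ceiling division
    padded = np.full(n_sub * subseq_len, pad_value)
    padded[: len(seq)] = seq
    mask = np.arange(n_sub * subseq_len) < len(seq)  # True for real observations
    return padded.reshape(n_sub, subseq_len, 1), mask.reshape(n_sub, subseq_len)


x, mask = split_with_padding(np.arange(1, 11), 4)
print(x.shape)               # (3, 4, 1)
print(x[2, :, 0].tolist())   # [9.0, 10.0, 0.0, 0.0]
print(mask[2].tolist())      # [True, True, False, False]
```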

If compute resources are not a limitation, a systematic investigation of different numbers of time steps is recommended.

5. Lean heavily on internal state with TBPTT(1, 1)

You can reformulate your sequence prediction problem as having one input and one output at each time step.

For example, if you have 100 sequences of 50 time steps, each time step becomes a new sample, so the 100 samples become 5,000. The 3D input becomes 5,000 samples, 1 time step, and 1 feature, or [5000, 1, 1].

Again, this requires preserving the internal state across each time step of a sequence and resetting it at the end of each actual sequence (every 50 samples).
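A minimal NumPy sketch of this reformulation, assuming the sequences are stored as a [sequences, time steps] array (placeholder values). `one_step_samples` is a hypothetical helper that also returns the sample indices after which a stateful LSTM trained with a batch size of 1 would need its state reset.

```python
import numpy as np


def one_step_samples(sequences):
    """Hypothetical helper: [n_seq, n_steps] -> [n_seq * n_steps, 1, 1] plus the index ending each sequence."""
    sequences = np.asarray(sequences)
    n_seq, n_steps = sequences.shape
    x = sequences.reshape(n_seq * n_steps, 1, 1)
    # With a stateful LSTM and batch size 1, reset the state after each of these samples
    resets = list(range(n_steps - 1, n_seq * n_steps, n_steps))
    return x, resets


sequences = np.arange(100 * 50).reshape(100, 50)  # placeholder: 100 sequences of 50 steps
x, resets = one_step_samples(sequences)
print(x.shape)                   # (5000, 1, 1)
print(resets[:3], len(resets))   # [49, 99, 149] 100
```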

This places the burden of learning the sequence prediction problem on the internal state of the recurrent neural network. Depending on the type of problem, this may be more than the network can handle, and the prediction problem may not be learnable.

Personal experience suggests that this formulation may work well for prediction problems that require memory across the sequence, but performs poorly when the outcome is a complex function of past observations.

6. Decouple forward and backward sequence length

The Keras deep learning library used to support a decoupled number of time steps for the forward and backward passes of truncated backpropagation through time.

In essence, the k1 parameter could be specified by the number of time steps in the input sequences, and the k2 parameter by the truncate_gradient argument of the LSTM layer. This argument is not available in current versions of Keras.
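To see what decoupling k1 and k2 means without relying on that old argument, TBPTT(k1, k2) can be implemented by hand on a toy model. This NumPy sketch uses a scalar linear RNN, h_t = w * h_{t-1} + x_t, with a squared-error loss; the model, the function names, and the plain gradient-descent update are illustrative assumptions, not how Keras implemented truncate_gradient. The exact derivative is dh_t/dw = h_{t-1} + w * h_{t-2} + w^2 * h_{t-3} + ..., and the only thing k2 changes is how many of those terms are kept.

```python
import numpy as np


def forward(w, xs):
    """Hidden states of the toy RNN h_t = w * h_{t-1} + x_t, with h[0] = 0 and h[t] for t = 1..n."""
    h = np.zeros(len(xs) + 1)
    for t in range(1, len(xs) + 1):
        h[t] = w * h[t - 1] + xs[t - 1]
    return h


def truncated_grad(w, h, ys, t_end, k1, k2):
    """Hypothetical helper: d/dw of sum_{t=t_end-k1+1}^{t_end} 0.5 * (h_t - y_t)^2,
    backpropagating each loss term through at most k2 time steps."""
    grad = 0.0
    for t in range(t_end - k1 + 1, t_end + 1):
        # Exact dh_t/dw = sum_{j=0}^{t-1} w^j * h_{t-1-j}; truncation keeps only the first k2 terms
        dh_dw = sum(w ** j * h[t - 1 - j] for j in range(min(k2, t)))
        grad += (h[t] - ys[t - 1]) * dh_dw
    return grad


def train_tbptt(xs, ys, k1, k2, lr=0.01, w=0.0):
    """Hypothetical helper: after every k1 forward steps, update w with a k2-step truncated gradient.

    Hidden states computed with earlier weights are kept, as in online TBPTT.
    """
    h = np.zeros(len(xs) + 1)
    for t in range(1, len(xs) + 1):
        h[t] = w * h[t - 1] + xs[t - 1]  # forward step with the current weight
        if t % k1 == 0:
            w -= lr * truncated_grad(w, h, ys, t, k1, k2)
    return w


# Placeholder task: predict the next input value
xs = np.sin(0.3 * np.arange(200))
ys = np.roll(xs, -1)
w = train_tbptt(xs, ys, k1=10, k2=5)
```

Setting k2 to the full sequence length recovers the exact gradient over each k1-step window, while k2 = 1 keeps only the immediate dependence on h_{t-1}.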
