Xiao Cha, reporting from Oufei Temple

QbitAI | WeChat official account: QbitAI

Using a loudspeaker to recognize handwritten digits?

It sounds like mysticism, but it is actually a serious Nature paper.

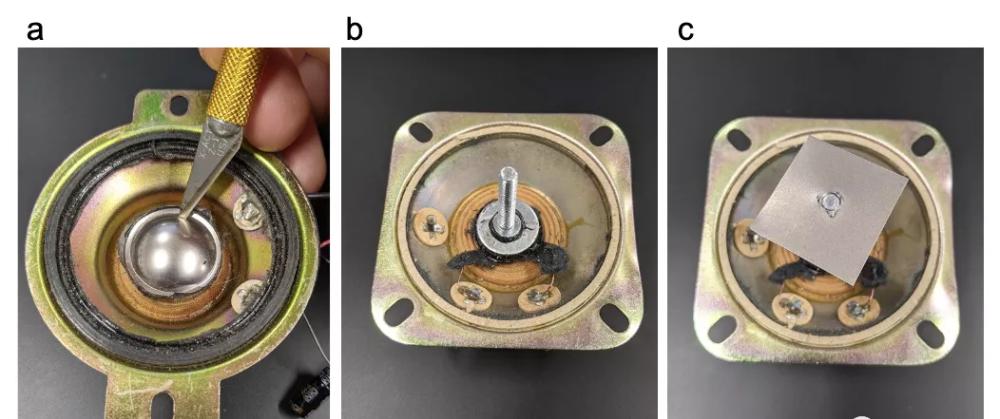

What looks on the surface like a modified loudspeaker is actually used to recognize handwritten digits, with an accuracy of nearly 90%.

This is a new trick from physicists at Cornell University.

They used loudspeakers, electronics, and lasers to create acoustic, electrical, and optical versions of physical neural networks (PNNs).

Moreover, these neural networks can also be trained with the backpropagation algorithm.

Physicists developed PNNs because, with Moore's Law dead, they hope to use physical systems to rescue machine learning.

According to the article, compared to software-implemented neural networks, PNNs have the potential to improve the energy efficiency and speed of machine learning by several orders of magnitude.

How to do backpropagation with physics

Scientists can build neural networks out of physical equipment because physics experiments and machine learning are, in essence, the same thing: parameter tuning and optimization.

Physics offers many nonlinear systems (acoustic, electronic, optical) that can be used to approximate arbitrary functions, just as artificial neural networks do.
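
To make the role of nonlinearity concrete, here is a minimal Python sketch. It is not taken from the paper: the `tanh` responses, the random parameters `w` and `b`, and the least-squares readout are all illustrative assumptions. It only shows that a weighted sum of fixed nonlinear responses can fit a curve that a purely linear system cannot.

```python
import numpy as np

rng = np.random.default_rng(0)

x = np.linspace(-1.0, 1.0, 200)
target = np.sin(3.0 * x)  # the function we want to approximate

# 50 fixed, randomly chosen nonlinear responses tanh(w * x + b)
# (hypothetical stand-ins for the responses of a nonlinear physical system)
w = rng.normal(0.0, 3.0, size=50)
b = rng.normal(0.0, 1.0, size=50)
nonlinear_features = np.tanh(np.outer(x, w) + b)

# A purely linear system for comparison: the features are just x and a constant
linear_features = np.column_stack([x, np.ones_like(x)])

def fit_rms_error(features, target):
    """Least-squares fit of a weighted sum of features; returns the RMS residual."""
    coeffs, *_ = np.linalg.lstsq(features, target, rcond=None)
    return float(np.sqrt(np.mean((features @ coeffs - target) ** 2)))

nonlinear_err = fit_rms_error(nonlinear_features, target)
linear_err = fit_rms_error(linear_features, target)
print(f"nonlinear RMS error: {nonlinear_err:.4f}, linear RMS error: {linear_err:.4f}")
```

Note that this sketch only fits the output weights, whereas the PNNs described in this article adjust the parameters of the physical system itself.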

Take the acoustic neural network as an example.

The two postdocs who ran the experiment removed the diaphragm from a loudspeaker and attached a square titanium plate to the speaker's moving coil.

The control signal from the computer and the input signal produced by the vibrating metal plate are then fed back to the speaker, forming a closed feedback loop.

As for how backpropagation works, the authors propose an algorithm that blends the physical world with the computer, called "physics-aware training" (PAT). It is a general framework that uses backpropagation to directly train any physical system to act as a deep neural network.

In the acoustic neural network, the oscillating plate receives an audio input sample (red) encoded from an MNIST image. After the plate is driven, a microphone records the signal (gray), which is then converted into a time-domain output signal (blue).

The whole physical system works as follows: the digital signal is first converted into an analog signal and fed into the physical system; the output is then compared with the ground truth, and the physical system's parameters are adjusted via backpropagation.
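
The article does not spell out how an image becomes a sound sample or how a digit is read from the recorded signal, so the following Python sketch rests entirely on assumptions: the hypothetical helper `image_to_waveform` simply flattens the pixels into a drive signal, and the hypothetical `readout_digit` splits the recording into ten time bins and picks the most energetic one.

```python
import numpy as np

def image_to_waveform(image):
    """Flatten a 28x28 grayscale image (values 0..255) into a drive signal in [-1, 1].

    Assumed encoding, for illustration only.
    """
    pixels = np.asarray(image, dtype=float).reshape(-1)
    return pixels / 255.0 * 2.0 - 1.0

def readout_digit(output_signal, n_classes=10):
    """Split the recorded signal into n_classes time bins and return the index
    of the bin with the highest mean energy (assumed readout rule)."""
    bins = np.array_split(np.asarray(output_signal, dtype=float), n_classes)
    energy = np.array([np.mean(b ** 2) for b in bins])
    return int(np.argmax(energy))

# A dummy "image": a bright square on a dark background
image = np.zeros((28, 28))
image[10:18, 10:18] = 255
wave = image_to_waveform(image)

# A dummy "recording" whose energy sits in the fourth time bin
recording = np.zeros(100)
recording[30:40] = 1.0
digit = readout_digit(recording)
```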
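
The loop described above can be sketched in Python as follows. This is a toy under stated assumptions, not the authors' code (see the Physics-Aware-Training repository in the reference links for that): `physical_system` is a simulated stand-in for real hardware, `model_grad_theta` is a hypothetical differentiable digital model of it, and there is only one trainable parameter, `theta`. The forward pass runs the "hardware", while the gradient for the backward pass is taken from the digital model.

```python
import numpy as np

rng = np.random.default_rng(0)

def physical_system(x, theta):
    """Stand-in for the real hardware: a nonlinear response plus measurement noise."""
    return np.tanh(theta * x) + 0.01 * rng.standard_normal(x.shape)

def model_grad_theta(x, theta):
    """Derivative of the digital model tanh(theta * x) with respect to theta."""
    return x * (1.0 - np.tanh(theta * x) ** 2)

def train(x, target, theta=0.1, lr=0.5, steps=200):
    losses = []
    for _ in range(steps):
        y = physical_system(x, theta)   # forward pass: run the "hardware"
        err = y - target                # compare the output with the true result
        losses.append(float(np.mean(err ** 2)))
        # Backward pass: the error comes from the hardware output,
        # the derivative comes from the differentiable digital model.
        grad = np.mean(2.0 * err * model_grad_theta(x, theta))
        theta -= lr * grad              # adjust the physical parameter
    return theta, losses

x = np.linspace(-1.0, 1.0, 64)
target = np.tanh(1.5 * x)               # behaviour we want the system to learn
theta, losses = train(x, target)
print(f"learned theta: {theta:.3f}, first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

In this sketch the digital model matches the simulated hardware up to noise; with real equipment the model would only be an approximation, which is why the forward pass is run on the physical system itself.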

Through repeated tuning of the speaker parameters, they achieved an 87% accuracy rate on the MNIST dataset.

You may ask: if a computer is still needed during training, what is the advantage?

It is true that PNNs may not have the edge in training, but a PNN runs on the laws of physics. Once the network is trained, no computer intervention is needed, which gives it an advantage in inference latency and power consumption.

PNNs are also structurally much simpler than software neural networks.

There are also electrical and optical versions

In addition to the acoustic version, the researchers also built the electrical and optical versions of the neural network.

The electrical version uses four kinds of electronic components: resistors, capacitors, inductors, and transistors. The circuit is extremely simple, much like a middle school physics experiment.

This analog circuit PNN is capable of performing MNIST image classification tasks with 93% test accuracy.

The optical version is the most complex: a near-infrared laser is converted into blue light by a frequency-doubling crystal. It is also the most accurate system, reaching 97%.

In addition, the optical system can perform simple speech classification.

The PAT training algorithm used above can be applied to any physical system, so you could even use it to build fluidic or steampunk-style mechanical versions of neural networks.

Reference Links:

[1] https://www.nature.com/articles/s41586-021-04223-6

[2] https://github.com/mcmahon-lab/Physics-Aware-Training

[3] https://news.cornell.edu/stories/2022/01/physical-systems-perform-machine-learning-computations