Reports from the Heart of the Machine

Author: Du Wei, Demon King

When it comes to optimizers, most people think of Adam. Since its launch in 2015, Adam has been the "king" of the field. But recently, an assistant professor at Boston University made a hypothesis that Adam may not be the best optimizer, but that the training of neural networks makes it optimal.

The Adam optimizer is one of the most popular optimizers in deep learning. It is suitable for many kinds of problems, including models with sparse or noisy gradients. Its easy-to-fine-tune feature allows it to get good results quickly, and in fact, the default parameter configuration usually achieves good results. The Adam optimizer combines the benefits of AdaGrad and RMSProp. Adam uses the same learning rate for each parameter and adapts independently as learning progresses. In addition, Adam is a momentum-based algorithm that utilizes historical information about gradients. Based on these characteristics, Adam is often "a no-brainer" when choosing an optimization algorithm.

But recently, Francesco Orabona, an assistant professor at Boston University, has proposed a hypothesis that "it is not Adam who is best, but the neural network that is trained to make it optimal." He elaborated on his hypothesis in an article that reads as follows:

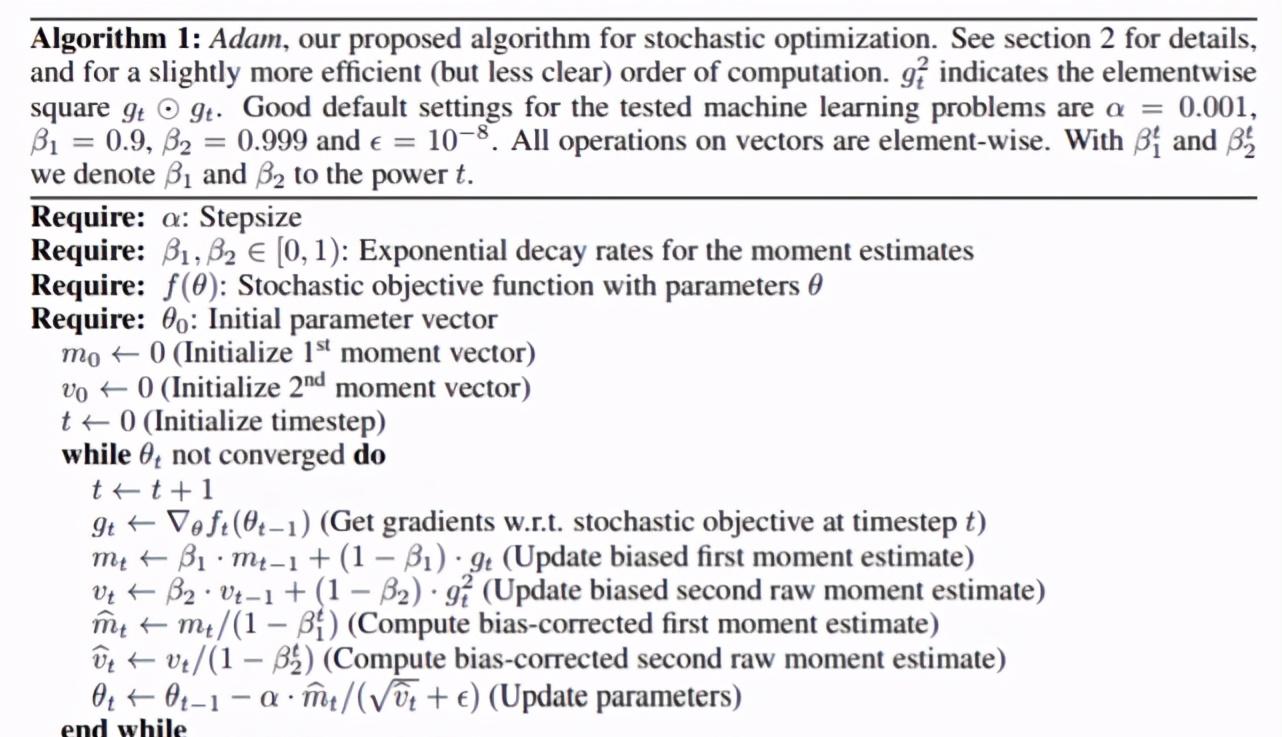

I've been working on online and randomized optimization for a while now. When Adam was proposed in 2015, I was already in this field. Adam was presented by Diederik P. Kingma, a senior research scientist at Google, and Jimmy Ba, an assistant professor at the University of Toronto, in the paper ADAM: A METHOD FOR STOCHASTIC OPTIMIZATION.

Adam algorithm

The paper is good, but it's not a breakthrough, especially by current standards. First, the theory is fragile: a regret guarantee is given to an algorithm that should handle random optimization of non-convex functions. Second, experiments are fragile: recently the exact same experiments have been rejected outright. It was later discovered that there was an error in the proof, and that the Adam algorithm was not yet able to achieve convergence on some one-dimensional random convex functions. Despite these problems, Adam is still considered the "king" of optimization algorithms.

So to be clear: we all know that Adam doesn't always get you the best performance, but most of the time, people think that you can use Adam's default parameters to achieve at least suboptimal performance when dealing with a deep learning problem. In other words, Adam is considered the default optimizer for deep learning today. So, what is the secret to Adam's success?

In recent years, a large number of papers have been published trying to explain Adam and his performance. From "adaptive learning rate" (adaptive what?) No one really understands) When it comes to momentum and scale invariance, Adam has read everything accordingly. However, all these analyses do not give a final answer about its performance.

Obviously, most of these factors, such as adaptive learning rates, are beneficial to the optimization process of any function, but it's still unclear why these factors make Adam the best algorithm when combined in this way. The balance between the individual elements is so subtle that the small changes required to solve the non-convergence problem are also thought to result in slightly worse performance than Adam.

But how likely is it all? I mean, is Adam really the best optimization algorithm? In such a "young" field, how likely are the best deep learning optimizations to be achieved years ago? Is there another explanation for Adam's amazing performance?

So, I came up with a hypothesis, but before we explain it, it's worth talking a little bit about the applied deep learning community.

Google Machine Learning researcher Olivier Bousquet once described the deep learning community as a giant genetic algorithm in a talk: community researchers are exploring variations of all algorithms and architectures in a semi-random way. Algorithms that continue to work in large experiments are preserved, and those that don't work are discarded. It's important to note that this process seems to have nothing to do with rejection or disapproval: the community is too big and active, and good ideas can be retained even if rejected and translated into best practices after a few months, such as Loshchilov and Hutter's study Decoupled Weight Decay Regularization. Similarly, ideas in published papers are tried by hundreds of people to reproduce, and those that cannot be reproduced are cruelly abandoned. This process creates many heuristics that consistently produce excellent results in experiments, but the pressure is also "always". Indeed, despite being based on a non-convex formula, the performance of deep learning methods is very reliable. (Note that the deep learning community has a strong tendency towards "celebrities", and not all ideas get equal attention...) )

So what is the connection between this giant genetic algorithm and Adam? After taking a closer look at the idea creation process in the deep learning community, I found a pattern: new architectures created by people tend to have fixed optimization algorithms, and most of the time, the optimization algorithm is Adam. This is because Adam is the default optimizer.

My hypothesis came: Adam was a good optimization algorithm for neural network architectures that existed many years ago, so people kept creating new architectures that worked for Adam. We may not see Adam's invalid architecture, because such ideas have long been abandoned! This type of idea requires designing both a new architecture and a new optimizer, which is a very difficult task. That is, in most cases, community researchers only need to improve one set of parameters (architecture, initialization strategy, hyperparameter search algorithm, etc.) while keeping the optimizer Adam.

I'm sure a lot of people won't believe this hypothesis, and they'll list all the specific problems that Adam isn't optimal optimization algorithm, such as momentum gradient descent being an optimal optimization algorithm. However, I would like to point out two points:

I'm not describing a law of nature, but just stating the community tendency that may have influenced the co-evolution of some architectures and optimizers;

I have evidence to support this hypothesis.

If my assertion is true, we expect Adam to work well on deep neural networks, but bad on other models. And that's what happened! For example, Adam performs poorly on simple convex and nonconvex problems, such as the experiments in Painless Stochastic Gradient: Interpolation, Line-Search, and Convergence Rates by Vaswani et al.:

It now seems that the time has come to discard the specific settings of the deep neural network (initialization, weights, loss functions, etc.), Adam has lost its adaptability, and its magic-like default learning rate must be adjusted again. Note that you can write a linear predictor as a layered neural network, but Adam doesn't do well in this situation. Therefore, the evolution of all the specific choices of deep learning architectures may have been to make Adam better and better, and the simple questions described above do not have such characteristics, so Adam loses its luster in it.

In summary, Adam is probably the best optimizer, as the deep learning community is only exploring a small area of the architecture/optimizer co-search space. If that's the case, it's ironic for a community that abandons the convex approach because it focuses on a narrow area of machine learning algorithms. As Yann LeCun, Facebook's chief AI scientist, puts it, "The key drops in the dark, but we have to look for it in the visible light."

The "novelty" hypothesis has attracted heated discussion among netizens

The assistant professor's hypothesis has sparked heated discussion on reddit, but it has only given ambiguous views, and no one can prove whether the hypothesis is true.

One user thought that this hypothesis may not be entirely correct but interesting, and made a further point: How does Adam perform on simple MLPs? Compared to the loss surface of a general-purpose optimization problem, perhaps it is only the loss surface of the neural network that makes them adapt naturally to Adam. If Adam performs worse on MLP, the evidence is more abundant.

Another netizen also believes that there is such a possibility. Most of the papers that Adam published have used it, and some of the other efficient architectures that have been found rely on it, especially for architectures that use NAS or similar approaches. But in practice, many architectures also work well with other optimizers. And many new papers are now using other optimizers like Ranger. In addition, another theory about Adam is that if it is really adaptive, then we don't need a finder and a scheduler.

reddit link: https://www.reddit.com/r/MachineLearning/comments/k7yn1k/d_neural_networks_maybe_evolved_to_make_adam_the/