import tensorflow as tf

from numpy.random import RandomState

import math

learning_rate = 0.01

MAX_STEPS = 1001

rdm = RandomState(1)

dataset_size = 256

validation_rate = 0.2

validation_size = math.floor(dataset_size * validation_rate)

X = rdm.rand(dataset_size, 2)

Y = [[int(x1 + x2 < 1)] for (x1, x2) in X]

train_X = X[validation_size:]

train_Y = Y[validation_size:]

validation_X = X[: validation_size]

validation_Y = Y[: validation_size]

x = tf.placeholder(tf.float32, shape=(None, 2), name="x_input")

y_ = tf.placeholder(tf.float32, shape=(None, 1), name="y_input")

input_weight = tf.Variable(tf.random_normal((2, 3)), name="input_weight")

input_bias = tf.Variable(tf.constant(0.1, shape=[3]), name="input_bias")

hidden_weight = tf.Variable(tf.random_normal((3, 3)), name="hidden_weight")

hidden_bias = tf.Variable(tf.constant(0.1, shape=[3,]), name="hidden_bias")

output_weight = tf.Variable(tf.random_normal((3, 1)), name="output_weight")

output_bias = tf.Variable(tf.constant(0.1, shape=[1]), name="output_bias")

#input layer

z = tf.matmul(x, input_weight) + input_bias

y = tf.sigmoid(z)

#hidden layer

z = tf.matmul(y, hidden_weight) + hidden_bias

y = tf.sigmoid(z)

#output layer

z = tf.matmul(y, output_weight) + output_bias

y = tf.sigmoid(z)

cross_entropy = -tf.reduce_mean(
    y_ * tf.log(tf.clip_by_value(y, 1e-3, 1.0))
    + (1 - y_) * tf.log(tf.clip_by_value(1 - y, 1e-3, 1.0)))

# Summary names may not contain spaces; TensorFlow would rewrite "cross entropy" with a warning.
tf.summary.scalar("cross_entropy", cross_entropy)

optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cross_entropy)

merged = tf.summary.merge_all()

with tf.Session() as sess:
    # Separate log directories so TensorBoard shows train and validation as two curves.
    summary_writer = tf.summary.FileWriter("./train", sess.graph)
    summary_writer1 = tf.summary.FileWriter("./test", sess.graph)

    tf.global_variables_initializer().run()

    for step in range(MAX_STEPS):
        # One optimization step on the training split, logging the training loss.
        summary_train, _ = sess.run([merged, optimizer], feed_dict={x: train_X, y_: train_Y})
        summary_writer.add_summary(summary_train, step)

        # Evaluate the loss on the validation split without updating the weights.
        summary_test, _ = sess.run([merged, cross_entropy], feed_dict={x: validation_X, y_: validation_Y})
        summary_writer1.add_summary(summary_test, step)

    summary_writer.close()
    summary_writer1.close()

Design: implement a three-layer neural network whose output loss function is cross entropy.
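The forward pass and the clipped cross entropy described above can be sketched in plain NumPy, which makes the shapes (2 -> 3 -> 3 -> 1) and the effect of the `1e-3` clipping easier to inspect. This is only an illustration, not the TensorFlow graph itself: the helper names `forward` and `clipped_cross_entropy`, the seed `0`, and the batch of 5 points are assumptions made for the example.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(x, params):
    """Apply three sigmoid layers in sequence (hypothetical helper)."""
    a = x
    for w, b in params:
        a = sigmoid(a @ w + b)
    return a


def clipped_cross_entropy(y_true, y_pred, eps=1e-3):
    """Binary cross entropy with probabilities clipped to [eps, 1] before the log."""
    p = np.clip(y_pred, eps, 1.0)
    q = np.clip(1.0 - y_pred, eps, 1.0)
    return -np.mean(y_true * np.log(p) + (1.0 - y_true) * np.log(q))


rng = np.random.RandomState(0)  # seed chosen for illustration only
# Same shapes and bias initial value (0.1) as the variables in the script.
params = [
    (rng.randn(2, 3), np.full(3, 0.1)),
    (rng.randn(3, 3), np.full(3, 0.1)),
    (rng.randn(3, 1), np.full(1, 0.1)),
]

x = rng.rand(5, 2)
y_true = (x.sum(axis=1, keepdims=True) < 1).astype(np.float64)
y_pred = forward(x, params)
loss = clipped_cross_entropy(y_true, y_pred)
print(y_pred.shape, loss)
```

Because of the clipping, the loss for a single example can never exceed `-log(1e-3)`, roughly 6.9, even when the prediction is completely wrong.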

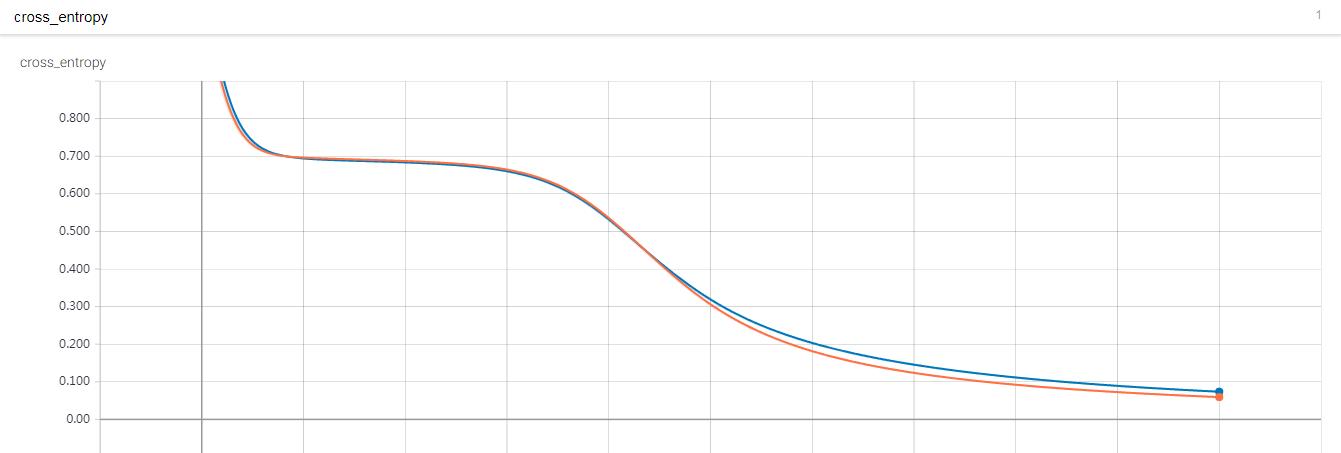

Experimental results:

Results after 1001 training steps:

The blue line is the validation set.

The red line is the training set.

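Results after 10001 training steps: no overfitting appeared. My understanding is that this may be because the neural network learned the property of the rand-generated data, which is why it fits so well.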
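One possible reading of this explanation, offered as an assumption rather than a verified cause, is that the labels are a noise-free function of the inputs, so the training and validation splits follow exactly the same rule. The sketch below rebuilds the dataset with the same seed and split as the script and checks how often the rule `x1 + x2 < 1` agrees with the stored labels in each split. The helper `rule` is a name introduced only for this example.

```python
import math

import numpy as np

rdm = np.random.RandomState(1)
dataset_size = 256
validation_size = math.floor(dataset_size * 0.2)

X = rdm.rand(dataset_size, 2)
Y = np.array([[int(x1 + x2 < 1)] for (x1, x2) in X])

train_X, train_Y = X[validation_size:], Y[validation_size:]
validation_X, validation_Y = X[:validation_size], Y[:validation_size]


def rule(points):
    """Label a point 1 when its two coordinates sum to less than 1 (hypothetical helper)."""
    return (points.sum(axis=1, keepdims=True) < 1).astype(int)


# Fraction of labels in each split that the rule reproduces.
train_agreement = np.mean(rule(train_X) == train_Y)
validation_agreement = np.mean(rule(validation_X) == validation_Y)

print(len(train_X), len(validation_X))
print(train_agreement, validation_agreement)
```

If both agreement values are 1.0, there is no label noise for the network to memorize, which would be consistent with the training and validation curves staying close together.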