For a walkthrough of setting up a MongoDB replica set, see the previous post: "MongoDB replica set setup demo".

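Table of contents

- I. Background on MongoDB sharding
- II. MongoDB sharding setup demo
  - 1. Setting up the config server cluster
    - a. conf settings for node 37017
    - b. Start nodes 37017 and 37018
  - 2. Setting up the router (mongos) node
    - a. Configuration of router node 27017
    - b. Start 27017, the router node
  - 3. Setting up the shard cluster
    - a. 47017 and 47018 form one shard replica set; configure this shard cluster
    - b. Start 47017 and 47018
    - c. Configure the replica set membership for shard nodes 47017 and 47018
    - d. Add the 47017/47018 shard replica set to router node 27017
    - e. Add another shard to the sharded cluster
  - 4. Shard key data operations demo
    - a. Enable sharding for the database
    - b. Enable sharding for a specific collection
    - c. Write data to the sharded cluster and observe the sharding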

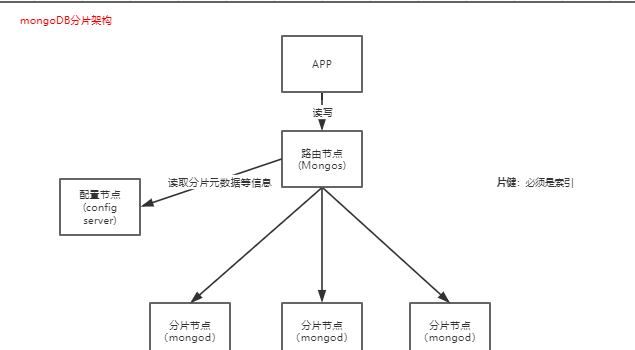

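I. Background on MongoDB sharding

- Why shard

As data grows, a single instance runs into obvious bottlenecks. Replication can absorb some of the load, but a replica set has a hard limit on its member count (12 in old releases; 50 members, of which at most 7 can vote, since MongoDB 3.0), and every member stores a full copy of the data. Sharding is therefore needed to scale out horizontally. It also reduces the disk space each node needs, because each shard holds only part of the data.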

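- Advantages of sharding

a. Transparency

MongoDB ships with the mongos routing process. mongos routes each client request to the server or group of servers in the cluster that should handle it, then merges the responses and returns them to the client.

b. High availability

By combining replica sets with sharding, MongoDB partitions the data and also keeps replicas of each shard's data, so when a primary goes down, one of its secondaries can be elected to take over and keep serving.

c. Easy scaling

When the system needs more space or resources, MongoDB lets us expand capacity on demand.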

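- Sharded cluster components

| Component | Description |
|---|---|
| Mongos | Entry point for applications; all operations are issued through mongos, and a deployment usually has several mongos nodes. Data migration and automatic balancing happen behind it (since MongoDB 3.4 the balancer runs on the config server primary). |
| Config Server | Stores the metadata for all nodes in the cluster and the routing information for sharded data. Three config server nodes are expected in production (mongos warns when there are fewer). The router uses this metadata to decide which shard receives each request. |
| Mongod | Stores the application data. There are usually several mongod nodes so that the data can be partitioned across them. Data is stored in chunks of 64 MB by default; when a chunk fills up it is split into new chunks. |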

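In practice, every component in a production deployment is itself run as a replica set.

(Note: to keep the demo simple, the config server cluster below uses 2 nodes, the first shard uses 2 nodes, and there is 1 router node. The numbers 27017 through 57017 are the corresponding ports. The demo is a pseudo-distributed sharded cluster running on a single CentOS 7 server on Alibaba Cloud.)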

II. MongoDB sharding setup demo

1. Setting up the config server cluster

a. conf settings for node 37017:

# data directory

dbpath=/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5/data/config-37017

# port

port=37017

# log file

logpath=/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5/data/config-37017/37017.log

# run in the background

fork=true

# replica set name

replSet=configCluster

# run as a config server

configsvr=true

conf settings for node 37018:

dbpath=/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5/data/config-37018

port=37018

logpath=/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5/data/config-37018/37018.log

fork=true

replSet=configCluster

configsvr=true
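The two config server files differ only in the port number, so they can be produced from one template. The sketch below is one possible way to do that in plain JavaScript (run with Node.js). The function name `renderMongodConf` and its parameters are illustrative assumptions, not part of MongoDB; it only emits the options already used in the files above.

```javascript
// Hypothetical helper: renders an INI-style mongod config for one node.
// It only emits the options shown in this article
// (dbpath, port, logpath, fork, replSet, configsvr/shardsvr).
function renderMongodConf({ baseDir, prefix, port, replSet, role }) {
  const dataDir = `${baseDir}/data/${prefix}-${port}`;
  const lines = [
    `dbpath=${dataDir}`,
    `port=${port}`,
    `logpath=${dataDir}/${port}.log`,
    'fork=true',
  ];
  if (replSet) lines.push(`replSet=${replSet}`);
  if (role) lines.push(`${role}=true`); // 'configsvr' or 'shardsvr'
  return lines.join('\n');
}

// Base directory taken from the config files in this article.
const baseDir = '/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5';

const confs = [37017, 37018].map((port) =>
  renderMongodConf({
    baseDir,
    prefix: 'config',
    port,
    replSet: 'configCluster',
    role: 'configsvr',
  })
);

console.log(confs.join('\n\n'));
```

Calling the same helper with `prefix: 'shard'` and `role: 'shardsvr'` would give files shaped like the shard configs used later in this post.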

b. Start nodes 37017 and 37018:

[[email protected] mongodb-linux-x86_64-4.0.5]# ./bin/mongod -f conf/conf-37017.conf

about to fork child process, waiting until server is ready for connections.

forked process: 32692

child process started successfully, parent exiting

[[email protected] mongodb-linux-x86_64-4.0.5]# ./bin/mongod -f conf/conf-37018.conf

about to fork child process, waiting until server is ready for connections.

forked process: 32726

child process started successfully, parent exiting

[[email protected] mongodb-linux-x86_64-4.0.5]# ps -ef|grep mongo

root 32692 1 5 14:46 ? 00:00:00 ./bin/mongod -f conf/conf-37017.conf

root 32726 1 9 14:46 ? 00:00:00 ./bin/mongod -f conf/conf-37018.conf

root 32761 32621 0 14:46 pts/3 00:00:00 grep --color=auto mongo

Connect to node 37017 with the mongo client and configure the config server replica set:

[[email protected] mongodb-linux-x86_64-4.0.5]# ./bin/mongo --port 37017

MongoDB shell version v4.0.5

connecting to: mongodb://127.0.0.1:37017/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("434beeea-5179-4a2d-ad8d-01bd094d96a0") }

MongoDB server version: 4.0.5

Server has startup warnings:

2019-11-15T14:46:04.066+0800 I STORAGE [initandlisten]

2019-11-15T14:46:04.066+0800 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine

2019-11-15T14:46:04.066+0800 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten]

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten]

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** WARNING: This server is bound to localhost.

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** Remote systems will be unable to connect to this server.

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** Start the server with --bind_ip <address> to specify which IP

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** addresses it should serve responses from, or with --bind_ip_all to

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** bind to all interfaces. If this behavior is desired, start the

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** server with --bind_ip 127.0.0.1 to disable this warning.

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten]

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten]

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten]

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten]

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten] ** WARNING: soft rlimits too low. rlimits set to 7230 processes, 65535 files. Number of processes should be at least 32767.5 : 0.5 times number of files.

2019-11-15T14:46:04.782+0800 I CONTROL [initandlisten]

> rs.status()

{

"operationTime" : Timestamp(0, 0),

"ok" : 0,

"errmsg" : "no replset config has been received",

"code" : 94,

"codeName" : "NotYetInitialized",

"$gleStats" : {

"lastOpTime" : Timestamp(0, 0),

"electionId" : ObjectId("000000000000000000000000")

},

"lastCommittedOpTime" : Timestamp(0, 0),

"$clusterTime" : {

"clusterTime" : Timestamp(0, 0),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

> var cfg = {

... "_id":"configCluster",

... "protocolVersion":1,

... "members":[

... {

... "_id":0,"host":"127.0.0.1:37017"

... },{

... "_id":1,"host":"127.0.0.1:37018"

... }

... ]

... }

> rs.initiate(cfg)

{

"ok" : 1,

"operationTime" : Timestamp(1573800528, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(1573800528, 1),

"electionId" : ObjectId("000000000000000000000000")

},

"lastCommittedOpTime" : Timestamp(0, 0),

"$clusterTime" : {

"clusterTime" : Timestamp(1573800528, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

configCluster:SECONDARY> rs.status()

{

"set" : "configCluster",

"date" : ISODate("2019-11-15T06:49:09.813Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"configsvr" : true,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1573800544, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1573800544, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1573800544, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1573800544, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1573800540, 1),

"members" : [

{

"_id" : 0,

"name" : "127.0.0.1:37017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 185,

"optime" : {

"ts" : Timestamp(1573800544, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-11-15T06:49:04Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1573800539, 1),

"electionDate" : ISODate("2019-11-15T06:48:59Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "127.0.0.1:37018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 21,

"optime" : {

"ts" : Timestamp(1573800544, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1573800544, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-11-15T06:49:04Z"),

"optimeDurableDate" : ISODate("2019-11-15T06:49:04Z"),

"lastHeartbeat" : ISODate("2019-11-15T06:49:09.586Z"),

"lastHeartbeatRecv" : ISODate("2019-11-15T06:49:08.074Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "127.0.0.1:37017",

"syncSourceHost" : "127.0.0.1:37017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1573800544, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(1573800528, 1),

"electionId" : ObjectId("7fffffff0000000000000001")

},

"lastCommittedOpTime" : Timestamp(1573800544, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1573800544, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

configCluster:PRIMARY>
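The `cfg` object passed to `rs.initiate()` above follows a regular shape: the replica set name as `_id`, plus one member entry per host with a sequential `_id`. As a rough sketch, it could be built from a host list like this. The helper name `buildReplSetConfig` is made up for illustration; the code is plain JavaScript that runs in Node.js and only constructs the object.

```javascript
// Hypothetical helper: builds the document that rs.initiate() expects,
// mirroring the cfg object typed by hand in the session above.
function buildReplSetConfig(name, hosts) {
  return {
    _id: name,
    protocolVersion: 1,
    // Member _id values are assigned in list order, starting at 0.
    members: hosts.map((host, index) => ({ _id: index, host })),
  };
}

const cfg = buildReplSetConfig('configCluster', [
  '127.0.0.1:37017',
  '127.0.0.1:37018',
]);

console.log(JSON.stringify(cfg, null, 2));
// In the mongo shell, the same object would then be passed as: rs.initiate(cfg)
```

Keep in mind that a two-member set, as used in this demo, cannot elect a primary if either member is down, which is why an odd number of voting members is the usual choice outside of a demo.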

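2. Setting up the router (mongos) node

a. Configuration of router node 27017

# port

port=27017

# log file

logpath=/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5/data/mongos-27017/27017.log

# run in the background

fork=true

# configCluster is the name of the config server replica set configured above; it must match, otherwise mongos will not start

configdb=configCluster/127.0.0.1:37017,127.0.0.1:37018

b. Start 27017, the router node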

[[email protected] mongodb-linux-x86_64-4.0.5]# ./bin/mongos -f conf/mongos-27017.conf

2019-11-15T15:52:52.052+0800 W SHARDING [main] Running a sharded cluster with fewer than 3 config servers should only be done for testing purposes and is not recommended for production.

about to fork child process, waiting until server is ready for connections.

forked process: 2191

child process started successfully, parent exiting

[[email protected] mongodb-linux-x86_64-4.0.5]# ps -ef|grep mongo

root 2191 1 0 15:52 ? 00:00:00 ./bin/mongos -f conf/mongos-27017.conf

root 2297 1471 0 15:56 pts/4 00:00:00 grep --color=auto mongo

root 32692 1 0 14:46 ? 00:00:18 ./bin/mongod -f conf/conf-37017.conf

root 32726 1 0 14:46 ? 00:00:18 ./bin/mongod -f conf/conf-37018.conf

[[email protected] mongodb-linux-x86_64-4.0.5]#

The router node is now running, but it cannot accept writes yet, because no shard has been started and added to the cluster:

[[email protected] mongodb-linux-x86_64-4.0.5]# ./bin/mongo

MongoDB shell version v4.0.5

connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("f1734e03-cc66-44a5-b7cb-e880de610002") }

MongoDB server version: 4.0.5

Server has startup warnings:

2019-11-15T15:52:52.057+0800 I CONTROL [main]

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** WARNING: Access control is not enabled for the database.

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** Read and write access to data and configuration is unrestricted.

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** WARNING: You are running this process as the root user, which is not recommended.

2019-11-15T15:52:52.058+0800 I CONTROL [main]

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** WARNING: This server is bound to localhost.

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** Remote systems will be unable to connect to this server.

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** Start the server with --bind_ip <address> to specify which IP

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** addresses it should serve responses from, or with --bind_ip_all to

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** bind to all interfaces. If this behavior is desired, start the

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** server with --bind_ip 127.0.0.1 to disable this warning.

2019-11-15T15:52:52.058+0800 I CONTROL [main]

mongos> show dbs;

admin 0.000GB

config 0.000GB

mongos> use hugo;

switched to db hugo

mongos> db.emp.insert({"name":"張三"})

WriteCommandError({

"ok" : 0,

"errmsg" : "unable to initialize targeter for write op for collection hugo.emp :: caused by :: Database hugo not found :: caused by :: No shards found",

"code" : 70,

"codeName" : "ShardNotFound",

"operationTime" : Timestamp(1573804857, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1573804857, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

})

3. Setting up the shard cluster

a. 47017 and 47018 form one shard replica set; configure this shard cluster

Configuration for 47017:

# data directory

dbpath=/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5/data/shard-47017

# port

port=47017

# log file

logpath=/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5/data/shard-47017/47017.log

# run in the background

fork=true

# replica set name

replSet=shardCluster

# run as a shard server

shardsvr=true

Configuration for 47018:

dbpath=/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5/data/shard-47018

port=47018

logpath=/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5/data/shard-47018/47018.log

fork=true

replSet=shardCluster

shardsvr=true

b. Start 47017 and 47018:

[[email protected] mongodb-linux-x86_64-4.0.5]# ./bin/mongod -f conf/shard-47017.conf

about to fork child process, waiting until server is ready for connections.

forked process: 2827

child process started successfully, parent exiting

[[email protected] mongodb-linux-x86_64-4.0.5]# ./bin/mongod -f conf/shard-47018.conf

about to fork child process, waiting until server is ready for connections.

forked process: 2909

child process started successfully, parent exiting

[[email protected] mongodb-linux-x86_64-4.0.5]# ps -ef|grep mongo

root 2191 1 0 15:52 ? 00:00:01 ./bin/mongos -f conf/mongos-27017.conf

root 2827 1 0 16:14 ? 00:00:00 ./bin/mongod -f conf/shard-47017.conf

root 2909 1 3 16:15 ? 00:00:00 ./bin/mongod -f conf/shard-47018.conf

root 2952 1471 0 16:16 pts/4 00:00:00 grep --color=auto mongo

root 32692 1 0 14:46 ? 00:00:23 ./bin/mongod -f conf/conf-37017.conf

root 32726 1 0 14:46 ? 00:00:24 ./bin/mongod -f conf/conf-37018.conf

[[email protected] mongodb-linux-x86_64-4.0.5]#

c. Configure the replica set membership for shard nodes 47017 and 47018:

[[email protected] mongodb-linux-x86_64-4.0.5]# ./bin/mongo --port 47017

MongoDB shell version v4.0.5

connecting to: mongodb://127.0.0.1:47017/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("7be3f20d-c3cf-4ee9-bb24-ae9f1695db6c") }

MongoDB server version: 4.0.5

Server has startup warnings:

2019-11-15T16:14:42.422+0800 I STORAGE [initandlisten]

2019-11-15T16:14:42.422+0800 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine

2019-11-15T16:14:42.422+0800 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten]

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten]

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten] ** WARNING: This server is bound to localhost.

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten] ** Remote systems will be unable to connect to this server.

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten] ** Start the server with --bind_ip <address> to specify which IP

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten] ** addresses it should serve responses from, or with --bind_ip_all to

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten] ** bind to all interfaces. If this behavior is desired, start the

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten] ** server with --bind_ip 127.0.0.1 to disable this warning.

2019-11-15T16:14:43.147+0800 I CONTROL [initandlisten]

2019-11-15T16:14:43.148+0800 I CONTROL [initandlisten]

2019-11-15T16:14:43.148+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2019-11-15T16:14:43.148+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2019-11-15T16:14:43.148+0800 I CONTROL [initandlisten]

2019-11-15T16:14:43.148+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2019-11-15T16:14:43.148+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2019-11-15T16:14:43.148+0800 I CONTROL [initandlisten]

2019-11-15T16:14:43.148+0800 I CONTROL [initandlisten] ** WARNING: soft rlimits too low. rlimits set to 7230 processes, 65535 files. Number of processes should be at least 32767.5 : 0.5 times number of files.

2019-11-15T16:14:43.148+0800 I CONTROL [initandlisten]

> rs.status()

{

"operationTime" : Timestamp(0, 0),

"ok" : 0,

"errmsg" : "no replset config has been received",

"code" : 94,

"codeName" : "NotYetInitialized",

"$clusterTime" : {

"clusterTime" : Timestamp(0, 0),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

> var cfg = {

... "_id":"shardCluster",

... "protocolVersion":1,

... "members":[

... {

... "_id":0,"host":"127.0.0.1:47017"

... },{

... "_id":1,"host":"127.0.0.1:47018"

... }

... ]

... }

> rs.initiate(cfg)

{

"ok" : 1,

"operationTime" : Timestamp(1573806134, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1573806134, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

shardCluster:SECONDARY> rs.status()

{

"set" : "shardCluster",

"date" : ISODate("2019-11-15T08:23:17.712Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1573806188, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1573806188, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1573806188, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1573806188, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1573806148, 1),

"members" : [

{

"_id" : 0,

"name" : "127.0.0.1:47017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 515,

"optime" : {

"ts" : Timestamp(1573806188, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-11-15T08:23:08Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1573806146, 1),

"electionDate" : ISODate("2019-11-15T08:22:26Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "127.0.0.1:47018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 62,

"optime" : {

"ts" : Timestamp(1573806188, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1573806188, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-11-15T08:23:08Z"),

"optimeDurableDate" : ISODate("2019-11-15T08:23:08Z"),

"lastHeartbeat" : ISODate("2019-11-15T08:23:16.294Z"),

"lastHeartbeatRecv" : ISODate("2019-11-15T08:23:17.074Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "127.0.0.1:47017",

"syncSourceHost" : "127.0.0.1:47017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1573806188, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1573806188, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

shardCluster:PRIMARY>

The primary (47017) is up and running. Now check the secondary (47018) as well:

[[email protected] mongodb-linux-x86_64-4.0.5]# ./bin/mongo --port 47018

MongoDB shell version v4.0.5

connecting to: mongodb://127.0.0.1:47018/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("b0c34434-cb24-49df-958b-c1fd3e819900") }

MongoDB server version: 4.0.5

Server has startup warnings:

2019-11-15T16:15:46.416+0800 I STORAGE [initandlisten]

2019-11-15T16:15:46.416+0800 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine

2019-11-15T16:15:46.416+0800 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten]

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten]

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** WARNING: This server is bound to localhost.

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** Remote systems will be unable to connect to this server.

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** Start the server with --bind_ip <address> to specify which IP

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** addresses it should serve responses from, or with --bind_ip_all to

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** bind to all interfaces. If this behavior is desired, start the

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** server with --bind_ip 127.0.0.1 to disable this warning.

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten]

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten]

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten]

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten]

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten] ** WARNING: soft rlimits too low. rlimits set to 7230 processes, 65535 files. Number of processes should be at least 32767.5 : 0.5 times number of files.

2019-11-15T16:15:47.135+0800 I CONTROL [initandlisten]

shardCluster:SECONDARY> show dbs;

2019-11-15T16:25:34.407+0800 E QUERY [js] Error: listDatabases failed:{

"operationTime" : Timestamp(1573806328, 1),

"ok" : 0,

"errmsg" : "not master and slaveOk=false",

"code" : 13435,

"codeName" : "NotMasterNoSlaveOk",

"$clusterTime" : {

"clusterTime" : Timestamp(1573806328, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

} :

_getErrorWithCode@src/mongo/shell/utils.js:25:13

Mongo.prototype.getDBs@src/mongo/shell/mongo.js:124:1

shellHelper.show@src/mongo/shell/utils.js:876:19

shellHelper@src/mongo/shell/utils.js:766:15

@(shellhelp2):1:1

shardCluster:SECONDARY> rs.slaveOk()

shardCluster:SECONDARY> show dbs;

admin 0.000GB

config 0.000GB

local 0.000GB

shardCluster:SECONDARY>

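d. Add the 47017/47018 shard replica set to router node 27017

At this point the router node, the config server cluster, and one shard replica set are all running.

However, connecting to router node 27017 shows that no shard has been added to the cluster yet: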

[[email protected] mongodb-linux-x86_64-4.0.5]# ./bin/mongo

MongoDB shell version v4.0.5

connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("4cc305cf-5800-427f-9865-da500d12f281") }

MongoDB server version: 4.0.5

Server has startup warnings:

2019-11-15T15:52:52.057+0800 I CONTROL [main]

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** WARNING: Access control is not enabled for the database.

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** Read and write access to data and configuration is unrestricted.

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** WARNING: You are running this process as the root user, which is not recommended.

2019-11-15T15:52:52.058+0800 I CONTROL [main]

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** WARNING: This server is bound to localhost.

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** Remote systems will be unable to connect to this server.

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** Start the server with --bind_ip <address> to specify which IP

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** addresses it should serve responses from, or with --bind_ip_all to

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** bind to all interfaces. If this behavior is desired, start the

2019-11-15T15:52:52.058+0800 I CONTROL [main] ** server with --bind_ip 127.0.0.1 to disable this warning.

2019-11-15T15:52:52.058+0800 I CONTROL [main]

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5dce4a5cfd68eca59be538e4")

}

shards:

active mongoses:

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

mongos>

So, add the 47017/47018 shard nodes to the cluster (add a shard):

(Note: in sh.addShard("shardCluster/127.0.0.1:47017,127.0.0.1:47018"), shardCluster is the name of the shard replica set configured above for 47017 and 47018.)

mongos> sh.addShard("shardCluster/127.0.0.1:47017,127.0.0.1:47018")

{

"shardAdded" : "shardCluster",

"ok" : 1,

"operationTime" : Timestamp(1573807105, 8),

"$clusterTime" : {

"clusterTime" : Timestamp(1573807105, 8),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5dce4a5cfd68eca59be538e4")

}

shards:

{ "_id" : "shardCluster", "host" : "shardCluster/127.0.0.1:47017,127.0.0.1:47018", "state" : 1 }

active mongoses:

"4.0.5" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

mongos>

As shown, the shard now appears under shards.
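Both the `configdb` setting of mongos and the argument to `sh.addShard()` use the same text format: the replica set name, a slash, then a comma-separated host list. The following is a small sketch of building and splitting such strings in plain JavaScript (Node.js). The function names `formatSeedList` and `parseSeedList` are invented for this example and are not MongoDB APIs.

```javascript
// Hypothetical helpers for the "<replSetName>/<host1>,<host2>,..." format
// used by sh.addShard() and by the mongos configdb option in this article.
function formatSeedList(replSet, hosts) {
  if (!replSet || hosts.length === 0) {
    throw new Error('a replica set name and at least one host are required');
  }
  return `${replSet}/${hosts.join(',')}`;
}

function parseSeedList(text) {
  const slash = text.indexOf('/');
  if (slash < 0) {
    // No set name: a plain host list, such as a single standalone host.
    return { replSet: null, hosts: text.split(',') };
  }
  return {
    replSet: text.slice(0, slash),
    hosts: text.slice(slash + 1).split(','),
  };
}

const shardString = formatSeedList('shardCluster', [
  '127.0.0.1:47017',
  '127.0.0.1:47018',
]);
const parsed = parseSeedList(shardString);

console.log(shardString);
console.log(parsed);
```

The replica set name in the string has to be the one the nodes were started with (`replSet=shardCluster` here), which is the point the note above makes.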

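e. Add another shard to the sharded cluster

So far we have added one shard replica set (47017, 47018) to the cluster. Now add one more shard (57017; to keep the demo simple, it is a standalone node rather than a replica set).

conf settings for 57017: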

dbpath=/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5/data/shard-57017

port=57017

logpath=/root/Hugo/tools/mongodb/mongodb-linux-x86_64-4.0.5/data/shard-57017/57017.log

fork=true

shardsvr=true

Start node 57017:

[[email protected] mongodb-linux-x86_64-4.0.5]# ./bin/mongod -f conf/shard-57017.conf

about to fork child process, waiting until server is ready for connections.

forked process: 4111

child process started successfully, parent exiting

[[email protected] mongodb-linux-x86_64-4.0.5]# ps -ef|grep mongo

root 2191 1 0 15:52 ? 00:00:03 ./bin/mongos -f conf/mongos-27017.conf

root 2827 1 0 16:14 ? 00:00:08 ./bin/mongod -f conf/shard-47017.conf

root 2909 1 0 16:15 ? 00:00:08 ./bin/mongod -f conf/shard-47018.conf

root 3387 1471 0 16:29 pts/4 00:00:00 ./bin/mongo

root 4111 1 6 16:50 ? 00:00:00 ./bin/mongod -f conf/shard-57017.conf

root 4138 32621 0 16:50 pts/3 00:00:00 grep --color=auto mongo

root 32692 1 0 14:46 ? 00:00:32 ./bin/mongod -f conf/conf-37017.conf

root 32726 1 0 14:46 ? 00:00:32 ./bin/mongod -f conf/conf-37018.conf

[[email protected] mongodb-linux-x86_64-4.0.5]#

Add 57017 to the sharded cluster:

mongos> sh.addShard("127.0.0.1:57017")

{

"shardAdded" : "shard0001",

"ok" : 1,

"operationTime" : Timestamp(1573808031, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1573808031, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5dce4a5cfd68eca59be538e4")

}

shards:

{ "_id" : "shard0001", "host" : "127.0.0.1:57017", "state" : 1 }

{ "_id" : "shardCluster", "host" : "shardCluster/127.0.0.1:47017,127.0.0.1:47018", "state" : 1 }

active mongoses:

"4.0.5" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shardCluster 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shardCluster Timestamp(1, 0)

mongos>

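As shown, the cluster now has 2 shards, so the setup is complete.

Next comes a demo of data operations.

4. Shard key data operations demo

a. Enable sharding for the database

Here we use a database named hugo with a collection named emp.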

mongos> sh.enableSharding("hugo")

{

"ok" : 1,

"operationTime" : Timestamp(1573808573, 5),

"$clusterTime" : {

"clusterTime" : Timestamp(1573808573, 5),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5dce4a5cfd68eca59be538e4")

}

shards:

{ "_id" : "shard0001", "host" : "127.0.0.1:57017", "state" : 1 }

{ "_id" : "shardCluster", "host" : "shardCluster/127.0.0.1:47017,127.0.0.1:47018", "state" : 1 }

active mongoses:

"4.0.5" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shardCluster 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shardCluster Timestamp(1, 0)

{ "_id" : "hugo", "primary" : "shard0001", "partitioned" : true, "version" : { "uuid" : UUID("8175e28f-8335-4d35-bcf9-c40a937dc344"), "lastMod" : 1 } }

mongos>

Under databases, the hugo database is now listed.

b. Enable sharding for a specific collection

Here we enable sharding for the collection 'emp' and use '_id' as the shard key.

mongos> sh.shardCollection("hugo.emp",{"_id":1})

{

"collectionsharded" : "hugo.emp",

"collectionUUID" : UUID("6027c2a3-e8d1-49c4-bbe9-b57eacaf3201"),

"ok" : 1,

"operationTime" : Timestamp(1573808814, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1573808814, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5dce4a5cfd68eca59be538e4")

}

shards:

{ "_id" : "shard0001", "host" : "127.0.0.1:57017", "state" : 1 }

{ "_id" : "shardCluster", "host" : "shardCluster/127.0.0.1:47017,127.0.0.1:47018", "state" : 1 }

active mongoses:

"4.0.5" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shardCluster 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shardCluster Timestamp(1, 0)

{ "_id" : "hugo", "primary" : "shard0001", "partitioned" : true, "version" : { "uuid" : UUID("8175e28f-8335-4d35-bcf9-c40a937dc344"), "lastMod" : 1 } }

hugo.emp

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard0001 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(1, 0)

mongos> show dbs;

admin 0.000GB

config 0.001GB

hugo 0.000GB

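c. Write data to the sharded cluster and observe the sharding

The default chunk size in a sharded cluster is 64 MB. To make the effect visible in this demo, change the chunk size to 1 MB: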

mongos> use config;

switched to db config

mongos> db.settings.find()

mongos> db.settings.save({_id:"chunksize",value:1})

WriteResult({ "nMatched" : 0, "nUpserted" : 1, "nModified" : 0, "_id" : "chunksize" })

mongos> use config;

switched to db config

mongos> show tables;

changelog

chunks

collections

databases

lockpings

locks

migrations

mongos

settings

shards

tags

transactions

version

mongos> db.settings.find()

{ "_id" : "chunksize", "value" : 1 }

mongos>

As shown, the emp collection currently contains 0 documents:

mongos> use hugo;

switched to db hugo

mongos> db.emp.count()

0

To demonstrate sharding, write 100000 documents into the emp collection:

mongos> use hugo;

switched to db hugo

mongos> for(var i=1;i<=100000;i++){

... db.emp.insert({"_id":i,"name":"copy"+i});

... }
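The loop above sends one insert per document. A possible alternative is to group the same documents into batches and send each batch in a single call. The sketch below only builds the batches in plain JavaScript (Node.js); `makeBatches` and the batch size of 1000 are assumptions chosen for illustration.

```javascript
// Hypothetical helper: builds the same documents as the loop above
// ({_id: i, name: "copy" + i}), grouped into arrays of at most batchSize.
function makeBatches(total, batchSize) {
  const batches = [];
  for (let start = 1; start <= total; start += batchSize) {
    const end = Math.min(start + batchSize - 1, total);
    const batch = [];
    for (let i = start; i <= end; i++) {
      batch.push({ _id: i, name: 'copy' + i });
    }
    batches.push(batch);
  }
  return batches;
}

const batches = makeBatches(100000, 1000);

console.log(batches.length);
console.log(batches[0][0]);
// In the mongo shell, each batch could then be written with:
//   db.emp.insertMany(batch)
```

`db.collection.insertMany()` is available in the mongo shell from MongoDB 3.2 onward; whether batching is noticeably faster in this single-machine demo was not measured here.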

Check the current state of the sharded cluster while the inserts are in progress:

mongos> db.emp.count()

0

mongos> db.emp.count()

4453

mongos> db.emp.count()

6693

mongos> db.emp.count()

8587

mongos> db.emp.count()

10460

mongos> db.emp.count()

22249

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5dce4a5cfd68eca59be538e4")

}

shards:

{ "_id" : "shard0001", "host" : "127.0.0.1:57017", "state" : 1 }

{ "_id" : "shardCluster", "host" : "shardCluster/127.0.0.1:47017,127.0.0.1:47018", "state" : 1 }

active mongoses:

"4.0.5" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

1 : Success

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shardCluster 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shardCluster Timestamp(1, 0)

{ "_id" : "hugo", "primary" : "shard0001", "partitioned" : true, "version" : { "uuid" : UUID("8175e28f-8335-4d35-bcf9-c40a937dc344"), "lastMod" : 1 } }

hugo.emp

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard0001 2

shardCluster 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : 2 } on : shardCluster Timestamp(2, 0)

{ "_id" : 2 } -->> { "_id" : 28340 } on : shard0001 Timestamp(2, 1)

{ "_id" : 28340 } -->> { "_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(1, 3)

mongos>

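As shown, hugo.emp now has three chunks spread across the two shards (two on shard0001, one on shardCluster).

The data is still being written and the 100000 documents have not all been inserted yet. After waiting a while, here is the result: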

mongos> db.emp.count()

89701

mongos> db.emp.count()

89701

mongos> db.emp.count()

89701

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5dce4a5cfd68eca59be538e4")

}

shards:

{ "_id" : "shard0001", "host" : "127.0.0.1:57017", "state" : 1 }

{ "_id" : "shardCluster", "host" : "shardCluster/127.0.0.1:47017,127.0.0.1:47018", "state" : 1 }

active mongoses:

"4.0.5" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Collections with active migrations:

hugo.emp started at Fri Nov 15 2019 17:28:21 GMT+0800 (CST)

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

2 : Success

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shardCluster 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shardCluster Timestamp(1, 0)

{ "_id" : "hugo", "primary" : "shard0001", "partitioned" : true, "version" : { "uuid" : UUID("8175e28f-8335-4d35-bcf9-c40a937dc344"), "lastMod" : 1 } }

hugo.emp

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard0001 3

shardCluster 4

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : 2 } on : shardCluster Timestamp(2, 0)

{ "_id" : 2 } -->> { "_id" : 28340 } on : shard0001 Timestamp(3, 1)

{ "_id" : 28340 } -->> { "_id" : 42509 } on : shard0001 Timestamp(2, 2)

{ "_id" : 42509 } -->> { "_id" : 59897 } on : shard0001 Timestamp(2, 3)

{ "_id" : 59897 } -->> { "_id" : 74066 } on : shardCluster Timestamp(3, 2)

{ "_id" : 74066 } -->> { "_id" : 89699 } on : shardCluster Timestamp(3, 3)

{ "_id" : 89699 } -->> { "_id" : { "$maxKey" : 1 } } on : shardCluster Timestamp(3, 4)

mongos>

As shown, the chunks are distributed fairly evenly across the two shards (three on shard0001, four on shardCluster).
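To make the chunk table in the last sh.status() output more concrete, here is a small sketch of how a router could use it: each chunk covers the range from its lower bound (inclusive) to its upper bound (exclusive), and a document goes to the shard that owns the chunk containing its `_id`. The chunk boundaries are copied from the output above; the function names and the use of `-Infinity`/`Infinity` in place of `$minKey`/`$maxKey` are simplifications for illustration, not how mongos is implemented.

```javascript
// Chunk table for hugo.emp, copied from the final sh.status() output.
// Each chunk covers [min, max); -Infinity/Infinity stand in for $minKey/$maxKey.
const chunks = [
  { min: -Infinity, max: 2, shard: 'shardCluster' },
  { min: 2, max: 28340, shard: 'shard0001' },
  { min: 28340, max: 42509, shard: 'shard0001' },
  { min: 42509, max: 59897, shard: 'shard0001' },
  { min: 59897, max: 74066, shard: 'shardCluster' },
  { min: 74066, max: 89699, shard: 'shardCluster' },
  { min: 89699, max: Infinity, shard: 'shardCluster' },
];

// Returns the shard that owns the chunk containing the given _id.
function shardFor(id) {
  const chunk = chunks.find((c) => id >= c.min && id < c.max);
  return chunk.shard;
}

// Counts chunks per shard, like the "chunks:" summary in sh.status().
function chunksPerShard() {
  const counts = {};
  for (const c of chunks) {
    counts[c.shard] = (counts[c.shard] || 0) + 1;
  }
  return counts;
}

console.log(shardFor(1), shardFor(2), shardFor(60000));
console.log(chunksPerShard());
```

With this table, `_id` 1 lands on shardCluster, `_id` 2 on shard0001, and `_id` 60000 on shardCluster, and the per-shard counts match the summary printed by sh.status() (3 on shard0001, 4 on shardCluster).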

To be continued. Off to eat...