spark on yarn模式下配置spark-sql通路hive中繼資料

目的:在spark on yarn模式下,執行spark-sql通路hive的中繼資料。并對比一下spark-sql 和hive的效率。

軟體環境:

- hadoop2.7.3

- apache-hive-2.1.1-bin

- spark-2.1.0-bin-hadoop2.7

- jd1.8

hadoop是僞分布式安裝的,1個節點,2core,4G記憶體。

hive是遠端模式。

-

spark的下載下傳位址:

http://spark.apache.org/downloads.html

解壓安裝spark

tar -zxvf spark-2.1.0-bin-hadoop2.7.tgz.tar

cd spark-2.1.0-bin-hadoop2.7/conf

cp spark-env.sh.template spark-env.sh

cp slaves.template slaves

cp log4j.properties.template log4j.properties

cp spark-defaults.conf.template spark-defaults.conf

-

修改spark的配置檔案

cd $SPARK_HOME/conf

vi spark-env.sh

vi spark-defaults.confexport JAVA_HOME=/usr/local/jdk export HADOOP_HOME=/home/fuxin.zhao/soft/hadoop-2.7.3 export HDFS_CONF_DIR=${HADOOP_HOME}/etc/hadoop export YARN_CONF_DIR=${HADOOP_HOME}/etc/hadoop

vi slavesspark.master spark://ubuntuServer01:7077 spark.eventLog.enabled true spark.eventLog.dir hdfs://ubuntuServer01:9000/tmp/spark spark.serializer org.apache.spark.serializer.KryoSerializer spark.driver.memory 512m spark.executor.extraJavaOptions -XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three" #spark.yarn.jars hdfs://ubuntuServer01:9000/tmp/spark/lib_jars/*.jarubuntuServer01 - ** 配置spark-sql讀取hive的中繼資料**

##将hive-site.xml 軟連接配接到spark的conf配置目錄中: cd $SPARK_HOME/conf ln -s /home/fuxin.zhao/soft/apache-hive-2.1.1-bin/conf/hive-site.xml hive-site.xml ##将連接配接 mysql-connector-java-5.1.35-bin.jar拷貝到spark的jars目錄下 cp $HIVE_HOME/lib/mysql-connector-java-5.1.35-bin.jar $SPARK_HOME/jars -

測試spark-sql:

先使用hive建立幾個資料庫和資料表,測試spark-sql是否可以通路

我向 temp.s4_order表導入了6萬行,9M大小的資料。

#先使用hive建立一下資料庫和資料表,測試spark-sql是否可以通路 hive -e " create database temp; create database test; use temp; CREATE EXTERNAL TABLE t_source( `sid` string, `uid` string ); load data local inpath '/home/fuxin.zhao/t_data' into table t_source; CREATE EXTERNAL TABLE s4_order( `orderid` int , `retailercode` string , `orderstatus` int, `paystatus` int, `payid` string, `paytime` timestamp, `payendtime` timestamp, `salesamount` int, `description` string, `usertoken` string, `username` string, `mobile` string, `createtime` timestamp, `refundstatus` int, `subordercount` int, `subordersuccesscount` int, `subordercreatesuccesscount` int, `businesstype` int, `deductedamount` int, `refundorderstatus` int, `platform` string, `subplatform` string, `refundnumber` string, `refundpaytime` timestamp, `refundordertime` timestamp, `primarysubordercount` int, `primarysubordersuccesscount` int, `suborderprocesscount` int, `isshoworder` int, `updateshowordertime` timestamp, `devicetoken` string, `lastmodifytime` timestamp, `refundreasontype` int ) PARTITIONED BY ( `dt` string); load data local inpath '/home/fuxin.zhao/20170214003514' OVERWRITE into table s4_order partition(dt='2017-02-13'); load data local inpath '/home/fuxin.zhao/20170215000514' OVERWRITE into table s4_order partition(dt='2017-02-14'); "

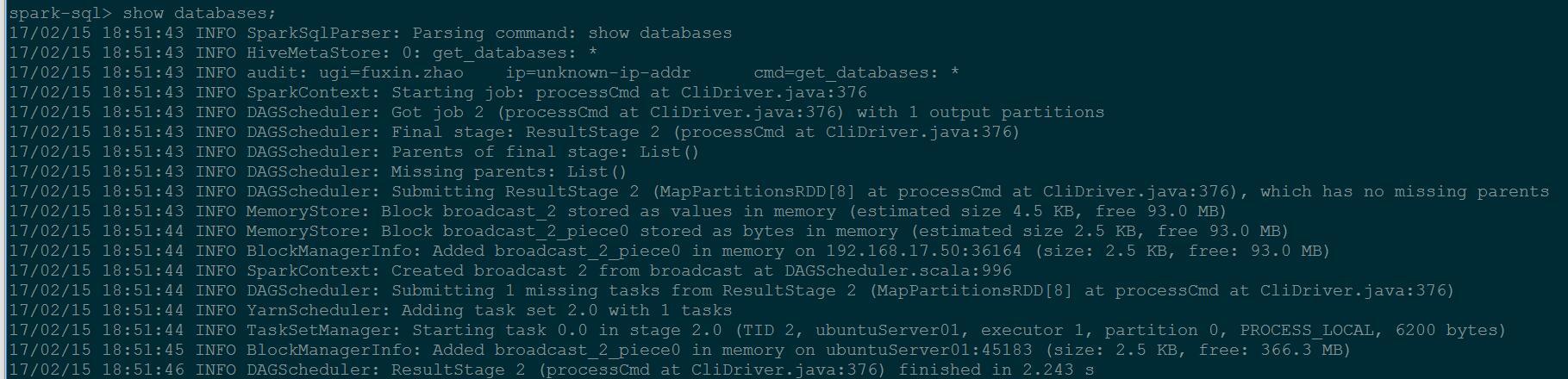

輸入spark-sql指令,在終端中執行如下一些sql指令:

啟動spark-sql用戶端:

spark-sql --master yarn

在啟動的指令行中執行如下sql:

show database;

use temp;

show tables;

select * from s4_order limit 100;

select count(*) ,dt from s4_order group dt;

select count(*) from s4_order ;

insert overwrite table t_source select orderid,createtime from s4_order;

select count() ,dt from s4_order group dt; // spark-sql耗時 11s; hive執行耗時30秒

select count() from s4_order ; // spark-sql耗時2s;hive執行耗時25秒。

直覺的感受是spark-sql 的效率大概是hive的 3到10倍,由于我的測試是本地的虛拟機單機環境,hadoop也是僞分布式環境,資源較匮乏,在生産環境中随着叢集規模,資料量,執行邏輯的變化,執行效率應該不是這個比例。

作者:

丹江湖畔養蜂子的趙大爹

出處:http://www.cnblogs.com/honeybee/

關于作者:丹江湖畔養蜂子的趙大爹

本文版權歸作者和部落格園共有,歡迎轉載,但未經作者同意必須保留此段聲明,且在文章頁面明顯位置給出原文連結