每個 request queue 會維護一個 struct queue_limits 結構來描述對應的塊裝置的硬體參數,這些參數描述了硬體存儲單元的組織方式,會影響 block layer 的很多行為,其中部分參數在 /sys/block/<dev>/queue/

struct request_queue {

struct queue_limits limits;

...

} struct queue_limits {

unsigned long bounce_pfn;

unsigned long seg_boundary_mask;

unsigned long virt_boundary_mask;

unsigned int max_hw_sectors;

unsigned int max_dev_sectors;

unsigned int chunk_sectors;

unsigned int max_sectors;

unsigned int max_segment_size;

unsigned int physical_block_size;

unsigned int alignment_offset;

unsigned int io_min;

unsigned int io_opt;

unsigned int max_discard_sectors;

unsigned int max_hw_discard_sectors;

unsigned int max_write_same_sectors;

unsigned int max_write_zeroes_sectors;

unsigned int discard_granularity;

unsigned int discard_alignment;

unsigned short logical_block_size;

unsigned short max_segments;

unsigned short max_integrity_segments;

unsigned short max_discard_segments;

unsigned char misaligned;

unsigned char discard_misaligned;

unsigned char cluster;

unsigned char raid_partial_stripes_expensive;

enum blk_zoned_model zoned;

}; base

logical_block_size

This is the smallest unit the storage device can address. It is typically 512 bytes.

logical_block_size 描述硬體進行位址尋址的最小單元,其預設值為 512 bytes,對應

/sys/block/<dev>/queue/logical_block_size

對于 HDD 裝置來說,裝置能夠尋址的最小存儲單元是扇區 (sector),每個扇區的大小是 512 bytes,因而 HDD 裝置的 logical_block_size 屬性就是 512 bytes

為了使用 block buffer 特性,檔案系統的 block size 必須為塊裝置的 logical_block_size 的整數倍

同時向 device controller 下發的 IO 也必須是按照 logical_block_size 對齊

physical_block_size

This is the smallest unit a physical storage device can write atomically. It is usually the same as the logical block size but may be bigger.

physical_block_size 描述硬體執行寫操作的最小單元,其預設值為 512 bytes,對應

/sys/block/<dev>/queue/physical_block_size

physical_block_size 必須是 logical_block_size 的整數倍

io_min

Storage devices may report a granularity or preferred minimum I/O size which is the smallest request the device can perform without incurring a performance penalty. For disk drives this is often the physical block size. For RAID arrays it is often the stripe chunk size. A properly aligned multiple of minimum_io_size is the preferred request size for workloads where a high number of I/O operations is desired.

描述執行 IO 操作的最小機關,預設值為 512 bytes,對應

/sys/block/<dev>/queue/minimum_io_size

io_opt

Storage devices may report an optimal I/O size, which is the device's preferred unit for sustained I/O. This is rarely reported for disk drives. For RAID arrays it is usually the stripe width or the internal track size. A properly aligned multiple of optimal_io_size is the preferred request size for workloads where sustained throughput is desired. If no optimal I/O size is reported this file contains 0.

描述執行 IO 操作的最佳大小,預設值為 0,對應

/sys/block/<dev>/queue/optimal_io_size

NVMe as example

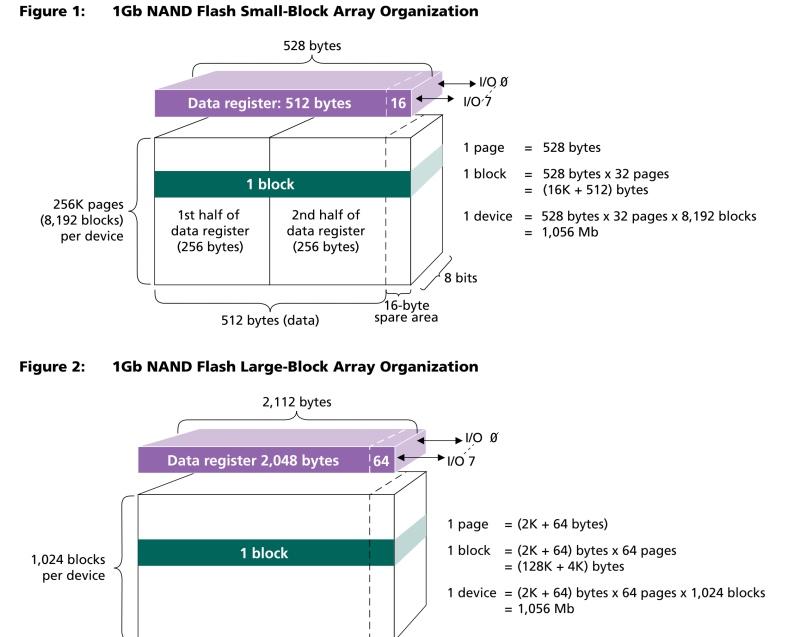

NAND flash unit

NAND flash 執行 read/write 操作的機關為 page,随着 NAND flash 規格的不同,page 的大小可以為 512, 2K, 4K, .. 位元組

但是由于 NAND flash 存儲媒體的特性,隻能對狀态為 0 的存儲單元執行 write 操作,對于狀态為 1 的存儲單元,必須先對其執行 erase 操作使其狀态恢複為 0,之後再對其執行 write 操作;而 erase 操作的機關為 block,一個 block 通常包含多個 page

NVMe

NVMe 協定其實并沒有直接暴露 NAND flash 存儲的 page、block 這些屬性,NVMe 協定隻暴露有 logical_block_size 屬性,即最小尋址單元

由于 HDD 的 logical_block_size 是 512 位元組,上層的軟體棧大多是為 512 位元組的 logical_block_size 設計的,因而為了相容上層的軟體棧設計,NVMe 協定也支援 512 位元組的 logical_block_size,即使此時底層的 NAND flash 存儲媒體的 page 很可能是 4K 大小,此時由 SSD 内部的 FTL 負責 NAND flash 存儲媒體的 page/block 屬性,到 NVMe 協定暴露的 logical_block_size 屬性之間的模拟

- supported LBA Format

NVMe 協定内部使用 LBA Format 描述 logical_block_size 參數,NVMe 協定會通過 namespace identity command 指令向使用者通告,裝置支援的所有 LBA Format

NVMe 裝置初始化階段,驅動會向裝置發送 namespace identity 指令,在傳回的 identity data 中,Number of LBA Formats (NLBAF) 字段描述了裝置支援的所有 LBA Format 的數量

Number of LBA Formats (NLBAF): This field defines the number of supported LBA data size and metadata size combinations supported by the namespace. LBA formats shall be allocated in order (starting with 0) and packed sequentially. This is a 0’s based value. The maximum number of LBA formats that may be indicated as supported is 16.

同時傳回的 identity data 的 LBA Format 0 Support (LBAF0) ~ LBA Format 16 Support (LBAF16) 字段就分别描述符了裝置支援的所有 LBA Format,其中的每個字段都是 LBA Format Data Structure 資料類型,描述裝置支援的一種 LBA Format 類型

其中 LBA Data Size (LBADS) 字段就描述了該 LBA Format 中,logical_block_size 的大小

例如對某個 NVMe 裝置執行 namespace identity 指令,其傳回的裝置支援的所有 LBA Format 格式為

LBA Format 0 : Metadata Size: 0 bytes - Data Size: 512 bytes - Relative Performance: 0x2 Good (in use)

LBA Format 1 : Metadata Size: 8 bytes - Data Size: 512 bytes - Relative Performance: 0x2 Good

LBA Format 2 : Metadata Size: 16 bytes - Data Size: 512 bytes - Relative Performance: 0x2 Good

LBA Format 3 : Metadata Size: 0 bytes - Data Size: 4096 bytes - Relative Performance: 0 Best

LBA Format 4 : Metadata Size: 8 bytes - Data Size: 4096 bytes - Relative Performance: 0 Best

LBA Format 5 : Metadata Size: 64 bytes - Data Size: 4096 bytes - Relative Performance: 0 Best

LBA Format 6 : Metadata Size: 128 bytes - Data Size: 4096 bytes - Relative Performance: 0 Best - format LBA Format

裝置通過 namespace identity 指令向使用者通告裝置支援的所有 LBA Format,在裝置格式化階段,使用者就可以通過 Format NVM 指令的 LBA Format (LBAF) 字段指定裝置使用哪一種 LBA Format 格式,這個字段的值實際上是使用的 LBA Format 在以上介紹的 namespace identity 指令傳回的 all supported LBA Format table 中的 index

LBA Format (LBAF): This field specifies the LBA format to apply to the NVM media. This 03:00 corresponds to the LBA formats indicated in the Identify command. Only supported LBA formats shall be selected.

- show selected LBA Format

namespace identity 指令傳回的 identity data 中,Formatted LBA Size (FLBAS) 字段就顯示了裝置目前使用的 LBA Format 格式,該字段的值實際上也是目前使用的 LBA Format 在 all supported LBA Format table 中的 index

Formatted LBA Size (FLBAS):Bits 3:0 indicates one of the 16 supported LBA Formats indicated in this data structure

對于 NVMe 裝置來說,namespace identity 指令傳回的 identity data 中,Formatted LBA Size (FLBAS) 字段就顯示了裝置目前使用的 LBA Format 格式,該 LBA Format 格式對應的 LBA Format Data Structure 結構體中,LBA Data Size (LBADS) 字段就描述了該 LBA Format 中 logical_block_size 的大小

NVMe features

NVMe 協定隻向外暴露 logical_block_size 參數,此時 logical_block_size 參數一般都小于内部的 NAND flash 存儲媒體實際的 page/block 參數,此時 FTL 就負責這中間的模拟和轉換,但是此時裝置的 read/write 性能往往不是最優的

理論上,當上層 read 操作的機關是 NAND flash 存儲媒體的 page 參數,write 操作的機關是 NAND flash 存儲媒體的 block 參數時,裝置的 read/write 性能才能達到最優

因而 NVMe 協定在之後引入了多個特性,這些特性雖然沒有直接暴露底層 NAND flash 存儲媒體的 page/block 參數,但是都與這些參數相關

- Namespace Preferred Write

NVMe 協定在 1.4 版本引入 Namespace Preferred Write 特性,來暴露内部 NAND flash 存儲媒體 block 參數的更多資訊

在 namespace identity 指令傳回的 identity data 中,

- Namespace Preferred Write Alignment (NPWA) 字段描述 preffered write 操作的 LBA 位址必須按照 NPWA 對齊,可以了解為 NAND flash 存儲媒體的 block alignment

- Namespace Preferred Write Granularity (NPWG) 字段描述 preffered write 操作的機關,是 NPWA 的複數倍,可以了解為是以 NAND flash 存儲媒體下的 block 為機關執行 write 操作

Namespace Preferred Write Granularity (NPWG): This field indicates the smallest recommended write granularity in logical blocks for this namespace. This is a 0’s based value.

The size indicated should be less than or equal to Maximum Data Transfer Size (MDTS) that is specified in units of minimum memory page size. The size should be a multiple of Namespace Preferred Write Alignment (NPWA).

Namespace Preferred Write Alignment (NPWA): This field indicates the recommended write alignment in logical blocks for this namespace. This is a 0’s based value.

- Namespace Optimal Write

NVMe 協定在 1.4 版本引入 Namespace Optimal Write 特性,其本質上也是暴露内部 NAND flash 存儲媒體的 block 參數

在 namespace identity 指令傳回的 identity data 中,Namespace Optimal Write Size (NOWS) 字段描述 optimal write 操作的機關,與之前 Namespace Preferred Write 的差别是,NOWS 必須是 NPWG 的複數倍(NPWG 又必須是 NPWA 的複數倍)

Namespace Optimal Write Size (NOWS): This field indicates the size in logical blocks for optimal write performance for this namespace. This is a 0’s based value.

The size indicated should be less than or equal to Maximum Data Transfer Size (MDTS) that is specified in units of minimum memory page size. The value of this field may change if the namespace is reformatted. The value of this field should be a multiple of Namespace Preferred Write Granularity (NPWG).

- Atomic Write

identify controller 指令傳回的資料中

- Atomic Write Unit Normal (AWUN) 描述了 atomic write 的最大機關,小于 AWUN 的 write 操作都是 atomic 的

- Atomic Write Unit Power Fail (AWUPF) 描述了 powerfail 情況下的 atomic write 的最大機關

Atomic Write Unit Normal (AWUN): This field indicates the size of the write operation guaranteed to be written atomically to the NVM across all namespaces with any supported namespace format during normal operation. This field is specified in logical blocks and is a 0’s based value.

If a specific namespace guarantees a larger size than is reported in this field, then this namespace specific size is reported in the NAWUN field in the Identify Namespace data structure.

If a write command is submitted with size less than or equal to the AWUN value, the host is guaranteed that the write command is atomic to the NVM with respect to other read or write commands. If a write command is submitted with size greater than the AWUN value, then there is no guarantee of command atomicity.

Atomic Write Unit Power Fail (AWUPF): This field indicates the size of the write operation guaranteed to be written atomically to the NVM across all namespaces with any supported namespace format during a power fail or error condition.

If a specific namespace guarantees a larger size than is reported in this field, then this namespace specific size is reported in the NAWUPF field in the Identify Namespace data structure.

This field is specified in logical blocks and is a 0’s based value. The AWUPF value shall be less than or equal to the AWUN value.

If a write command is submitted with size less than or equal to the AWUPF value, the host is guaranteed that the write is atomic to the NVM with respect to other read or write commands. If a write command is submitted that is greater than this size, there is no guarantee of command atomicity. If the write size is less than or equal to the AWUPF value and the write command fails, then subsequent read commands for the associated logical blocks shall return data from the previous successful write command. If a write command is submitted with size greater than the AWUPF value, then there is no guarantee of data returned on subsequent reads of the associated logical blocks.

以上兩個是整個 controller 範圍的屬性,适用于所有 namespace,而以下兩個則是 per-namespace 的屬性,在 identify namespace 指令傳回的資料中

Namespace Atomic Write Unit Normal (NAWUN): This field indicates the namespace specific size of the write operation guaranteed to be written atomically to the NVM during normal operation.

Namespace Atomic Write Unit Power Fail (NAWUPF): This field indicates the namespace specific size of the write operation guaranteed to be written atomically to the NVM during a power fail or error condition.

- Namespace Optimal IO Boundary

因而 NVMe 協定在 1.3 版本引入 Namespace Optimal IO Boundary 特性

- Stream

NVMe 協定在 1.3 版本引入 Stream 特性,該特性用于将同一生命的資料存儲在一起,不同生命周期的資料分開存儲,以減小寫放大,進而提升裝置壽命以及運作時性能

NVMe 協定通過 directive 機制支援 Stream 特性,directive 機制用于實作裝置與上層軟體之間的資訊溝通,Stream 隻是 directive 的一個子集,其控制流程主要為

- identify controller 指令傳回的 identify data 中,OACS (Optional Admin Command Support) 字段的 bit 5 描述該裝置是否支援 directive 特性

- 向裝置發送 Directive Receive (Return Parameters) 指令,傳回的 Return Parameters data 的 NVM Subsystem Streams Available (NSSA) 字段就描述了裝置支援的 stream 數量

- 向裝置發送 Directive Receive (Allocate Resources) 指令,以申請獨占使用某個 stream

- 之後在 Write 指令的 Directive Type 字段設定為 Streams (01h),同時 Directive Specific 字段設定為對應的 stream id,即可以将該 Write 指令寫入的資料與該 stream id 相綁定

在 Stream 特性中,Directive Receive (Return Parameters) 指令傳回的資料中

- Stream Write Size (SWS) 字段描述 stream write 的最小機關

Stream Write Size (SWS): This field indicates the alignment and size of the optimal stream write as a number of logical blocks for the specified namespace. The size indicated should be less than or equal to Maximum Data Transfer Size (MDTS) that is specified in units of minimum memory page size.

SWS should be a multiple of the Namespace Preferred Write Granularity (NPWG).

- Stream Granularity Size (SGS) 描述 stream 配置設定存儲空間的機關,裝置一開始會為每個 stream 預先配置設定 SGS 大小的存儲塊,之後該 stream 的資料都會存儲到這一預配置設定的 SGS 大小的存儲塊中;當這一存儲塊的空間用盡時,裝置則再次配置設定一個 SGS 大小的存儲塊;所有這些 SGS 大小的存儲塊就構成了一個 stream

Stream Granularity Size (SGS): This field indicates the stream granularity size for the specified namespace in Stream Write Size (SWS) units.

The Stream Granularity Size indicates the size of the media that is prepared as a unit for future allocation for write commands and is a multiple of the Stream Write Size. The controller may allocate and group together a stream in Stream Granularity Size (SGS) units.

NVMe 裝置的 physical_block_size 參數計算過程為

- 首先計算 @phys_bs 與 @atomic_bs 兩個變量的值

- @phys_bs

- 當支援 Namespace Preferred Write 特性時,來源于 Namespace Preferred Write Granularity (NPWG)

- 否則當支援 Stream 特性時,來源于 Stream Write Size (SWS)

- 否則來源于 logical_block_size

- @atomic_bs

- 優先來源于 atomic write unit power fail,即 Atomic Write Unit Power Fail (AWUPF) 或 Namespace Atomic Write Unit Power Fail (NAWUPF)

- @phys_bs

- @phys_bs 與 @atomic_bs 取最小值,就是 physical_block_size

NVMe 裝置的 io_opt 參數計算過程為

- 當支援 Namespace Optimal Write 特性時,來源于 Namespace Optimal Write Size (NOWS)

- 否則當支援 Stream 特性時,來源于 Stream Granularity Size (SGS)

NVMe 裝置的 io_min 參數實際上就是之前計算的 @phys_bs 變量的值,即

sector limit

max_dev_sectors

Some devices report a maximum block count for READ/WRITE requests.

有一些 IO controller 能夠處理的單個 READ/WRITE request 的大小存在上限,max_dev_sectors 就描述了這一上限值;對于不存在這一限制的裝置,該參數的預設值為 0

目前隻有 SCSI 裝置存在這一上限

max_hw_sectors

This is the maximum number of kilobytes supported in a single data transfer.

有一些 IO controller 能夠執行的單次 DMA SG 操作的大小存在上限,max_hw_sectors 參數就描述了這一限制,預設值為 BLK_DEF_MAX_SECTORS 即 255 個 sector,對應

/sys/block/<dev>/queue/max_hw_sectors_kb

max_sectors

This is the maximum number of kilobytes that the block layer will allow

for a filesystem request. Must be smaller than or equal to the maximum

size allowed by the hardware.

描述 block layer 下發的 request 的最大大小,預設值為 BLK_DEF_MAX_SECTORS 即 255 個 sector

max_sectors 與 max_hw_sectors 的差別是,後者是 DMA controller 硬體裝置的屬性,無法修改,而後者是軟體設定的屬性,使用者可以通過

/sys/block/<dev>/queue/max_sectors_kb

修改該參數的值,但是不能超過 max_hw_sectors 的值

在 block routine 中,如果一個 bio 包含的資料量超過了 max_sectors,那麼在 blk_queue_split() 中就需要對該 bio 執行 split 操作;同時 bio 與 request 的 merge 過程中,合并後的 request 包含的資料量也不能超過 max_sectors,否則該 bio 就不能與這個 request 合并

chunk_sectors

chunk_sectors has different meaning depending on the type of the disk. For a RAID device (dm-raid), chunk_sectors indicates the size in 512B sectors of the RAID volume stripe segment. For a zoned block device, either host-aware or host-managed, chunk_sectors indicates the size of 512B sectors of the zones of the device, with the eventual exception of the last zone of the device which may be smaller.

chunk_sectors 參數描述任何一個下發的 IO,其描述的 sector range 不能 cross(跨越)該參數描述的界限,以 sector 為機關

例如如果 chunk_sectors 參數的值為 128*2 sector 即 128KB,那麼任何一個下發的 IO 都不能橫跨 128KB 這個界限

chunk_sectors 的來源各有不同,對于 NVMe 裝置來說,由于 flash 自身存儲顆粒的限制,單個 request 最好不要超過 NOIOB 界限,此時 IO 性能可以達到最佳,因而此時 chunk_sectors 的值就來源于 NOIOB 參數

Namespace Optimal I/O Boundary (NOIOB): This field indicates the optimal I/O boundary for this namespace. This field is specified in logical blocks. The host should construct Read and Write commands that do not cross the I/O boundary to achieve optimal performance. A value of 0h indicates that no optimal I/O boundary is reported.

該參數的預設值為 0,即預設不存在 chunk size 的限制

chunk_sectors 參數要求按照 chunk size 對 bio 進行 split 處理

__blk_queue_split

blk_bio_segment_split

get_max_io_size

blk_max_size_offset 同時 IO merge 過程中,合并後的 IO 也不能跨越 chunk size

blk_mq_sched_bio_merge

__blk_mq_sched_bio_merge

blk_mq_attempt_merge

blk_mq_bio_list_merge

bio_attempt_back_merge

ll_back_merge_fn

blk_rq_get_max_sectors

blk_max_size_offset 因而對于 dm-stripe 這類裝置來說,chunk_sectors 參數實際上就來自 chunk size

segment limit

由于 DMA controller 的實體參數可能存在限制,physical segment 在一些參數上可能存在限制,例如一個 request 可以包含的 physical segment 的數量、單個 physical segment 的大小等,request queue 的 @limits 描述了這些限制

max_segments

Maximum number of segments of the device.

由于 DMA controller 自身的限制,單個 request 可以包含的 physical segment 數量可能存在上限,@limits.max_segments 描述了這一限制,預設值為 128,對應

/sys/block/<dev>/queue/max_segments

struct queue_limits {

unsigned short max_segments;

...

} 在 bio 與 request 合并,或者兩個 request 合并過程中,需要判斷合并後的 request 包含的 physical segment 的數量不能超過這一上限

以 virtio-blk 為例,virtio block config 配置空間的 @seg_max 字段就描述了一個 request 請求可以包含的 physical segment 的數量上限

struct virtio_blk_config {

/* The maximum number of segments (if VIRTIO_BLK_F_SEG_MAX) */

__u32 seg_max;

...

} virtio spec 中對 @seg_max 字段的解釋為

seg_max is the maximum number of segments that can be in a command.

virtio-blk 裝置初始化過程中,就會從 virtio block config 配置空間讀取 @seg_max 的值,并儲存到 @limits.max_segments 中

int virtblk_probe(struct virtio_device *vdev)

{

/* We can handle whatever the host told us to handle. */

blk_queue_max_segments(q, sg_elems);

...

} 此外值得注意的是,virtio-blk 中每個 request 後面會有預配置設定好的 scatterlist 數組(驅動在向底層的實體裝置下發 IO 的時候,每個 physical segment 需要初始化一個對應的 scatterlist),數組的大小就是 @limits.max_segments,也就是 physical segment 的數量上限

struct request struct virtblk_req sg[]

+-----------------------+-----------------------+-----------+

| | | |

+-----------------------+-----------------------+-----------+ struct virtblk_req {

...

struct scatterlist sg[];

}; max_segment_size

Maximum segment size of the device.

由于 IO controller 自身的限制,一個 physical segment 的大小可能存在上限,@limits.max_segment_size 描述了這一限制,以位元組為機關,預設值為 BLK_MAX_SEGMENT_SIZE 即 65536 位元組即 64 KB,對應

/sys/block/<dev>/queue/max_segment_size

struct queue_limits {

unsigned int max_segment_size;

...

} 在計算一個 bio 或 request 包含的 physical segment 數量的過程中,雖然兩個 bio_vec 描述的記憶體區間在實體位址上連續,因而可以合并為一個 physical segment,但是前提是合并後的 physical segment 的大小不能超過 @max_segment_size 限制

seg_boundary_mask

由于 DMA controller 自身的限制,DMA controller 能夠尋址的記憶體實體位址空間是有限的,例如一般的 DMA controller 隻能尋址 32 bit 即 4GB 内的記憶體實體位址空間,如果某一段 physical segment 的實體位址超過 4GB,DMA controller 會通過某種位址映射的方式解決這一問題,此時唯一的限制是同一段 physical segment 不能跨越 4GB 這個界限,即整段 physical segment 的實體位址必須都在 4GB 之下,或者都在 4GB 之上

@limits.seg_boundary_mask 就描述了這一限制,其預設值為 BLK_SEG_BOUNDARY_MASK 即 0xFFFFFFFFUL,也就是預設 DMA controller 隻能尋址 4GB 記憶體實體位址空間

struct queue_limits {

unsigned long seg_boundary_mask;

...

} 在判斷兩個 bio_vec 能否合并為一個 physical segment 的過程中,除了要求兩個 bio_vec 描述的記憶體區間在實體位址上連續之外,還要求合并後的 physical segment 不能橫跨 @seg_boundary_mask 界限,即

addr1 = start address of physical segment

addr2 = end address of physical segment

此時必須要求

((addr1) | (seg_boundary_mask)) == ((addr2) | (seg_boundary_mask)) virt_boundary_mask

virt_boundary_mask 的概念最初來源于 nvme,例如 nvme 中要求一個 request 包含的所有 physical segment 中

- 除了第一個 physical segment,其餘 physical segment 的起始位址必須按照 PAGE_SIZE 對齊

- 同時除了最後一個 physical segment,其餘 physical segment 的末位址也必須按照 PAGE_SIZE 對齊

Another restriction inherited for NVMe - those devices don't support SG lists that have "gaps" in them. Gaps refers to cases where the previous SG entry doesn't end on a page boundary. For NVMe, all SG entries must start at offset 0 (except the first) and end on a page boundary (except the last).

@limits.virt_boundary_mask 就描述了這一限制,nvme 的 @virt_boundary_mask 參數即為 (PAGE_SIZE - 1);該參數的預設值為 0,即預設不存在這一限制

struct queue_limits {

unsigned long virt_boundary_mask;

...

} 在 bio 與 request 合并、或者兩個 request 合并過程中,必須符合 @virt_boundary_mask 限制,否則不能合并

在 bio 下發的過程中也要考慮該限制,否則 blk_queue_split() 中就需要對該 bio 執行 split 操作

discard

discard_granularity

This shows the size of internal allocation of the device in bytes, if

reported by the device. A value of '0' means device does not support

the discard functionality.

Devices that support discard functionality may internally allocate space using units that are bigger than the logical block size. The discard_granularity parameter indicates the size of the internal allocation unit in bytes if reported by the device. Otherwise the discard_granularity will be set to match the device's physical block size. A discard_granularity of 0 means that the device does not support discard functionality.

該參數描述硬體裝置内部配置設定存儲單元的大小,同時也是 DISCARD 操作的機關,以位元組為機關,若該參數為 0 說明裝置不支援 DISCARD 操作,對應

/sys/block/<dev>/queue/discard_granularity

預設值為 0,nvme/virtio-blk 這類支援 DISCARD 操作的驅動會設定該參數的值為 512 即一個 sector

struct queue_limits {

unsigned int discard_granularity;

...

}; discard_alignment

Devices that support discard functionality may internally allocate space in units that are bigger than the exported logical block size. The discard_alignment parameter indicates how many bytes the beginning of the device is offset from the internal allocation unit's natural alignment.

DISCARD request 必須按照 discard_alignment 對齊,對應

/sys/block/<dev>/discard_alignment

其預設值為 0,nvme/virtio-blk 這類支援 DISCARD 操作的驅動會設定該參數的值為 512 即一個 sector

struct queue_limits {

unsigned int discard_alignment;

...

}; max_hw_discard_sectors

Devices that support discard functionality may have internal limits on

the number of bytes that can be trimmed or unmapped in a single operation. The discard_max_bytes parameter is set by the device driver to the maximum number of bytes that can be discarded in a single operation. Discard requests issued to the device must not exceed this limit. A discard_max_bytes value of 0 means that the device does not support discard functionality.

有些 IO controller 規定單個 DISCARD request 的大小存在上限,max_hw_discard_sectors 參數就描述了這一限制,以位元組為機關,對應

/sys/block/<dev>/queue/discard_max_hw_bytes

其預設值為 0,nvme/virtio-blk 這類支援 DISCARD 操作的驅動會設定該參數的值

struct queue_limits {

unsigned int max_hw_discard_sectors;

...

}; max_discard_sectors

While discard_max_hw_bytes is the hardware limit for the device, this

setting is the software limit. Some devices exhibit large latencies when

large discards are issued, setting this value lower will make Linux issue

smaller discards and potentially help reduce latencies induced by large

discard operations.

該參數描述了 block layer 下發的單個 DISCARD request 的大小上限,對應

/sys/block/<dev>/queue/discard_max_bytes

預設值為 0,nvme/virtio-blk 這類支援 DISCARD 操作的驅動會設定該參數的值

max_hw_discard_sectors 參數描述的是硬體裝置的限制,但是 IO controller 實際在處理大的 discard request 時,其延時可能會很大,因而使用者可以通過 max_discard_sectors 設定 block layer 層面的軟體限制

max_discard_segments

There are two cases when handle DISCARD merge.

If max_discard_segments == 1, the bios/requests need to be contiguous

to merge. If max_discard_segments > 1, it takes every bio as a range

and different range needn't to be contiguous.

Two cases of handling DISCARD merge:

If max_discard_segments > 1, the driver takes every bio

as a range and send them to controller together. The ranges

needn't to be contiguous.

Otherwise, the bios/requests will be handled as same as

others which should be contiguous.

有些 IO controller 支援在單個 DISCARD request 中同時對多個非連續的 sector range 執行 discard 操作,該參數就描述了這一限制,即單個 DISCARD request 可以包含的 sector range 數量的上限,預設值為 1,對應

/sys/block/<dev>/queue/max_discard_segments