Preface

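First calibrate the stereo camera to obtain its parameters, then perform ranging. The stereo ranging in this article is based on the BM (block matching) algorithm. Note: the quality of the stereo calibration directly affects ranging accuracy, so calibrate carefully and keep the calibration error as small as possible.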

1. Stereo ranging: input images

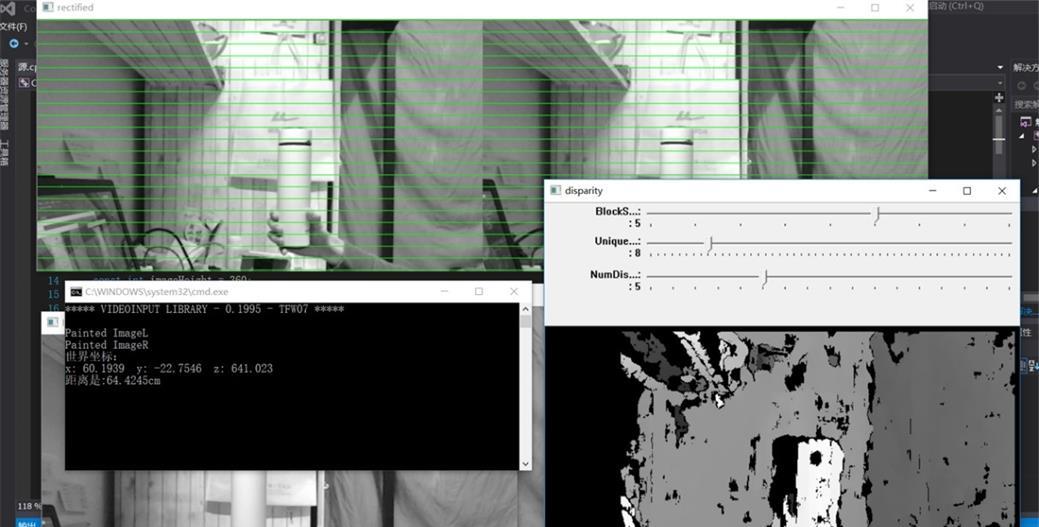

Result 1:

Result 2:

In my tests, the error was 1 cm.
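How large the error is depends strongly on distance. Starting from the rectified-stereo relation Z = fB/d (f: focal length in pixels, B: baseline, d: disparity in pixels), a disparity error Δd becomes a depth error that grows with the square of the distance. The numbers below are a worked illustration using assumed values close to this article's calibration (f ≈ 420 px, B ≈ 121 mm), not measurements:

$$
Z = \frac{fB}{d}
\quad\Rightarrow\quad
\left|\frac{\partial Z}{\partial d}\right| = \frac{fB}{d^{2}} = \frac{Z^{2}}{fB}
\quad\Rightarrow\quad
\Delta Z \approx \frac{Z^{2}}{fB}\,\Delta d
$$

$$
Z = 1000\ \text{mm}:\quad \Delta Z \approx \frac{1000^{2}}{420 \cdot 121}\,\Delta d \approx 19.7\ \text{mm per pixel of disparity error}
$$

With StereoBM's 1/16-pixel output resolution, the quantization step alone is then about 1.2 mm at 1 m and about 4.9 mm at 2 m; real matching errors are usually larger than one quantization step.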

The parameters BlockSize, UniquenessRatio and NumDisparities should be tuned to the actual scene.

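I chose C++ because it runs efficiently and the BM algorithm can be customized flexibly. I also tried a Python version of BM stereo ranging, and its results were not as good as the C++ version.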

Source code:

```cpp
/* Stereo ranging */
#include <opencv2/opencv.hpp>
#include <iostream>
#include <cmath>

using namespace std;
using namespace cv;

const int imageWidth = 640;   // camera resolution (per view)
const int imageHeight = 360;
Vec3f point3;
float d;
Size imageSize = Size(imageWidth, imageHeight);

Mat rgbImageL, grayImageL;
Mat rgbImageR, grayImageR;
Mat rectifyImageL, rectifyImageR;

Rect validROIL;  // rectification crops the image; validROI is the region left after cropping
Rect validROIR;

Mat mapLx, mapLy, mapRx, mapRy;  // remap lookup tables
Mat Rl, Rr, Pl, Pr, Q;           // rectification rotations R, projection matrices P, reprojection matrix Q
Mat xyz;                         // 3D coordinates

Point origin;                    // point where the mouse button was pressed
Rect selection;                  // rectangular selection
bool selectObject = false;       // whether a selection is in progress

int blockSize = 0, uniquenessRatio = 0, numDisparities = 0;
Ptr<StereoBM> bm = StereoBM::create(16, 9);

/* Pre-calibrated intrinsic matrix of the left camera (OpenCV layout, s = skew):
   fx  s   cx
   0   fy  cy
   0   0   1   */
Mat cameraMatrixL = (Mat_<double>(3, 3) << 418.523322187048, -1.26842201390676, 343.908870120890,
                                           0, 421.222568242056, 235.466208987968,
                                           0, 0, 1);
/* Left intrinsics in MATLAB layout (transposed relative to OpenCV):
   418.523322187048   0                 0
   -1.26842201390676  421.222568242056  0
   344.758267538961   243.318992284899  1  */

// Left distortion coefficients, OpenCV order k1, k2, p1, p2, k3
// MATLAB: radial [0.006636837611004, 0.050240447649195], tangential [0.006681263320267, 0.003130367429418]
Mat distCoeffL = (Mat_<double>(5, 1) << 0.006636837611004, 0.050240447649195, 0.006681263320267, 0.003130367429418, 0);

/* Pre-calibrated intrinsic matrix of the right camera (same layout as above) */
Mat cameraMatrixR = (Mat_<double>(3, 3) << 417.417985082506, 0.498638151824367, 309.903372309072,
                                           0, 419.795432389420, 230.6,
                                           0, 0, 1);
/* Right intrinsics in MATLAB layout:
   417.417985082506   0                 0
   0.498638151824367  419.795432389420  0
   309.903372309072   236.256106972796  1  */

// Right distortion coefficients, OpenCV order k1, k2, p1, p2, k3
// MATLAB: radial [-0.038407383078874, 0.236392800301615], tangential [0.004121779274885, 0.002296129959664]
Mat distCoeffR = (Mat_<double>(5, 1) << -0.038407383078874, 0.236392800301615, 0.004121779274885, 0.002296129959664, 0);

// Translation vector T between the cameras (mm), corresponding to the T obtained in MATLAB
Mat T = (Mat_<double>(3, 1) << -1.210187345641146e+02, 0.519235426836325, -0.425535566316217);

// Rotation between the cameras as a 3x3 rotation matrix (the transpose of the MATLAB matrix below).
// Earlier alternative for my camera, as a rotation vector (MATLAB om):
// Mat rec = (Mat_<double>(3, 1) << -0.00306, -0.03207, 0.00206);
Mat rec = (Mat_<double>(3, 3) << 0.999341122700880, -0.00206388651740061, 0.0362361815232777,
                                 0.000660748031451783, 0.999250989651683, 0.0386913826603732,
                                 -0.0362888948713456, -0.0386419468010579, 0.998593969567432);
/* MATLAB layout:
   0.999341122700880     0.000660748031451783  -0.0362888948713456
   -0.00206388651740061  0.999250989651683     -0.0386419468010579
   0.0362361815232777    0.0386913826603732    0.998593969567432  */

// Parameters of another camera (Zhuohua's), kept for reference:
// Mat T = (Mat_<double>(3, 1) << -48.4, 0.241, -0.0344);
// Mat rec = (Mat_<double>(3, 1) << -0.039, -0.04658, 0.00106);

Mat R;  // rotation passed to stereoRectify (filled by Rodrigues in main)

/***** Stereo matching *****/
void stereo_match(int, void*)
{
    bm->setBlockSize(2 * blockSize + 5);              // SAD window size; must be odd, 5-21 works well
    bm->setROI1(validROIL);
    bm->setROI2(validROIR);
    bm->setPreFilterCap(31);
    bm->setMinDisparity(0);                           // minimum disparity, default 0; may be negative (int)
    bm->setNumDisparities(numDisparities * 16 + 16);  // search range (max disparity - min disparity); must be a positive multiple of 16
    bm->setTextureThreshold(10);
    bm->setUniquenessRatio(uniquenessRatio);          // mainly suppresses false matches
    bm->setSpeckleWindowSize(100);
    bm->setSpeckleRange(32);
    bm->setDisp12MaxDiff(-1);
    Mat disp, disp8;
    bm->compute(rectifyImageL, rectifyImageR, disp);  // inputs must be 8-bit grayscale
    // disp is CV_16S fixed point (16 * true disparity); scale it to 0..255 for display
    disp.convertTo(disp8, CV_8U, 255 / ((numDisparities * 16 + 16) * 16.));
    // Because disp holds 16 * disparity, the X/W, Y/W, Z/W from reprojectImageTo3D are 1/16 of the
    // true values; multiplying by 16 restores correct 3D coordinates (in the units of T, i.e. mm).
    // Pixels without a valid disparity get a very large Z (10000 before the x16 scaling).
    reprojectImageTo3D(disp, xyz, Q, true);
    xyz = xyz * 16;
    imshow("disparity", disp8);
}

/***** Mouse callback: print the 3D coordinates and distance of the clicked point *****/
static void onMouse(int event, int x, int y, int, void*)
{
    if (selectObject)
    {
        selection.x = MIN(x, origin.x);
        selection.y = MIN(y, origin.y);
        selection.width = std::abs(x - origin.x);
        selection.height = std::abs(y - origin.y);
    }
    switch (event)
    {
    case EVENT_LBUTTONDOWN:  // left mouse button pressed
        origin = Point(x, y);
        selection = Rect(x, y, 0, 0);
        selectObject = true;
        point3 = xyz.at<Vec3f>(origin);
        cout << "World coordinates:" << endl;
        cout << "x: " << point3[0] << " y: " << point3[1] << " z: " << point3[2] << endl;
        d = point3[0] * point3[0] + point3[1] * point3[1] + point3[2] * point3[2];
        d = sqrt(d);    // mm
        d = d / 10.0;   // cm
        cout << "Distance: " << d << " cm" << endl;
        // For metres: d / 100.0 (d is already in cm here)
        break;
    case EVENT_LBUTTONUP:    // left mouse button released
        selectObject = false;
        break;
    }
}

/***** Main function *****/
int main()
{
    /* Stereo rectification */
    Rodrigues(rec, R);  // converts the 3x3 matrix rec into a 3x1 rotation vector; stereoRectify accepts either form
    stereoRectify(cameraMatrixL, distCoeffL, cameraMatrixR, distCoeffR, imageSize, R, T, Rl, Rr, Pl, Pr, Q,
                  CALIB_ZERO_DISPARITY, 0, imageSize, &validROIL, &validROIR);
    initUndistortRectifyMap(cameraMatrixL, distCoeffL, Rl, Pl, imageSize, CV_32FC1, mapLx, mapLy);  // left camera uses Pl
    initUndistortRectifyMap(cameraMatrixR, distCoeffR, Rr, Pr, imageSize, CV_32FC1, mapRx, mapRy);

    /* Load the images */
    rgbImageL = imread("image_left_1.jpg", IMREAD_COLOR);
    rgbImageR = imread("image_right_1.jpg", IMREAD_COLOR);
    if (rgbImageL.empty() || rgbImageR.empty())
    {
        cout << "Failed to load the input images!" << endl;
        return -1;
    }
    cvtColor(rgbImageL, grayImageL, COLOR_BGR2GRAY);
    cvtColor(rgbImageR, grayImageR, COLOR_BGR2GRAY);
    imshow("ImageL Before Rectify", grayImageL);
    imshow("ImageR Before Rectify", grayImageR);

    /* After remap, the left and right images are coplanar and row-aligned */
    remap(grayImageL, rectifyImageL, mapLx, mapLy, INTER_LINEAR);
    remap(grayImageR, rectifyImageR, mapRx, mapRy, INTER_LINEAR);

    /* Display the rectification result */
    Mat rgbRectifyImageL, rgbRectifyImageR;
    cvtColor(rectifyImageL, rgbRectifyImageL, COLOR_GRAY2BGR);  // 3-channel copy so colored lines can be drawn
    cvtColor(rectifyImageR, rgbRectifyImageR, COLOR_GRAY2BGR);

    // Show each view separately
    //rectangle(rgbRectifyImageL, validROIL, Scalar(0, 0, 255), 3, 8);
    //rectangle(rgbRectifyImageR, validROIR, Scalar(0, 0, 255), 3, 8);
    imshow("ImageL After Rectify", rgbRectifyImageL);
    imshow("ImageR After Rectify", rgbRectifyImageR);

    // Show both views on one canvas
    Mat canvas;
    double sf = 600. / MAX(imageSize.width, imageSize.height);
    int w = cvRound(imageSize.width * sf);
    int h = cvRound(imageSize.height * sf);
    canvas.create(h, w * 2, CV_8UC3);  // note: 3 channels

    // Draw the left image onto the canvas
    Mat canvasPart = canvas(Rect(w * 0, 0, w, h));  // left half of the canvas
    resize(rgbRectifyImageL, canvasPart, canvasPart.size(), 0, 0, INTER_AREA);  // scale the image to canvasPart's size
    Rect vroiL(cvRound(validROIL.x * sf), cvRound(validROIL.y * sf),  // valid (cropped) region, scaled
               cvRound(validROIL.width * sf), cvRound(validROIL.height * sf));
    //rectangle(canvasPart, vroiL, Scalar(0, 0, 255), 3, 8);  // outline the valid region
    cout << "Painted ImageL" << endl;

    // Draw the right image onto the canvas
    canvasPart = canvas(Rect(w, 0, w, h));  // right half of the canvas
    resize(rgbRectifyImageR, canvasPart, canvasPart.size(), 0, 0, INTER_LINEAR);
    Rect vroiR(cvRound(validROIR.x * sf), cvRound(validROIR.y * sf),
               cvRound(validROIR.width * sf), cvRound(validROIR.height * sf));
    //rectangle(canvasPart, vroiR, Scalar(0, 0, 255), 3, 8);
    cout << "Painted ImageR" << endl;

    // Draw horizontal lines to check the row alignment
    for (int i = 0; i < canvas.rows; i += 16)
        line(canvas, Point(0, i), Point(canvas.cols, i), Scalar(0, 255, 0), 1, 8);
    imshow("rectified", canvas);

    /* Stereo matching */
    namedWindow("disparity", WINDOW_AUTOSIZE);
    // Trackbar for the SAD window size
    createTrackbar("BlockSize:\n", "disparity", &blockSize, 8, stereo_match);
    // Trackbar for the disparity uniqueness percentage
    createTrackbar("UniquenessRatio:\n", "disparity", &uniquenessRatio, 50, stereo_match);
    // Trackbar for the disparity search range
    createTrackbar("NumDisparities:\n", "disparity", &numDisparities, 16, stereo_match);
    // setMouseCallback(window name, callback, user data passed to the callback, usually 0)
    setMouseCallback("disparity", onMouse, 0);
    stereo_match(0, 0);
    waitKey(0);
    return 0;
}
```

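Process notes:

First capture images with the left and right cameras, then change the image file names in the code to yours, and you can perform ranging.

The code contains the stereo camera parameters; you need to calibrate and rectify your own camera and fill in your own values.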

For stereo calibration, see my blog post:

https://guo-pu.blog.csdn.net/article/details/86602452

For converting the stereo calibration data, see my blog post:

https://guo-pu.blog.csdn.net/article/details/86710737

The camera parameters in detail:

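1) `Mat cameraMatrixL`: intrinsic matrix of the left camera.

2) `Mat distCoeffL = (Mat_<double>(5, 1) .......`: distortion coefficients of the left camera, i.e. k1, k2, p1, p2, k3.

3) `Mat cameraMatrixR`: intrinsic matrix of the right camera.

4) `Mat distCoeffR = (Mat_<double>(5, 1) .......`: distortion coefficients of the right camera, i.e. k1, k2, p1, p2, k3.

5) `Mat T = (Mat_<double>(3, 1) << -1.210187345641146e+02, 0.519235426836325, -0.425535566316217);`: translation vector between the cameras.

6) `Mat rec = (Mat_<double>(3, 3) << 0.99934112270088...................`: rotation between the cameras, given as a 3×3 rotation matrix (`Rodrigues` converts it to a rotation vector).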

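There are six camera parameters in total: 1 and 2 belong to the left camera, 3 and 4 to the right camera, and 5 and 6 describe the relative pose of the two cameras.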

2. Real-time stereo ranging from live camera capture

The result is shown in the figure below:

```cpp
/******************************/
/* Stereo matching and ranging */
/******************************/
#include <opencv2/opencv.hpp>
#include <iostream>
#include <cmath>

using namespace std;
using namespace cv;

const int imageWidth = 640;   // camera resolution (per view)
const int imageHeight = 360;
Vec3f point3;
float d;
Size imageSize = Size(imageWidth, imageHeight);

Mat rgbImageL, grayImageL;
Mat rgbImageR, grayImageR;
Mat rectifyImageL, rectifyImageR;

Rect validROIL;  // rectification crops the image; validROI is the region left after cropping
Rect validROIR;

Mat mapLx, mapLy, mapRx, mapRy;  // remap lookup tables
Mat Rl, Rr, Pl, Pr, Q;           // rectification rotations R, projection matrices P, reprojection matrix Q
Mat xyz;                         // 3D coordinates

Point origin;                    // point where the mouse button was pressed
Rect selection;                  // rectangular selection
bool selectObject = false;       // whether a selection is in progress

int blockSize = 0, uniquenessRatio = 0, numDisparities = 0;
Ptr<StereoBM> bm = StereoBM::create(16, 9);

/* Pre-calibrated intrinsic matrix of the left camera (OpenCV layout, s = skew):
   fx  s   cx
   0   fy  cy
   0   0   1   */
Mat cameraMatrixL = (Mat_<double>(3, 3) << 418.523322187048, -1.26842201390676, 343.908870120890,
                                           0, 421.222568242056, 235.466208987968,
                                           0, 0, 1);
/* Left intrinsics in MATLAB layout (transposed relative to OpenCV):
   418.523322187048   0                 0
   -1.26842201390676  421.222568242056  0
   344.758267538961   243.318992284899  1  */

// Left distortion coefficients, OpenCV order k1, k2, p1, p2, k3
// MATLAB: radial [0.006636837611004, 0.050240447649195], tangential [0.006681263320267, 0.003130367429418]
Mat distCoeffL = (Mat_<double>(5, 1) << 0.006636837611004, 0.050240447649195, 0.006681263320267, 0.003130367429418, 0);

/* Pre-calibrated intrinsic matrix of the right camera (same layout as above) */
Mat cameraMatrixR = (Mat_<double>(3, 3) << 417.417985082506, 0.498638151824367, 309.903372309072,
                                           0, 419.795432389420, 230.6,
                                           0, 0, 1);
/* Right intrinsics in MATLAB layout:
   417.417985082506   0                 0
   0.498638151824367  419.795432389420  0
   309.903372309072   236.256106972796  1  */

// Right distortion coefficients, OpenCV order k1, k2, p1, p2, k3
// MATLAB: radial [-0.038407383078874, 0.236392800301615], tangential [0.004121779274885, 0.002296129959664]
Mat distCoeffR = (Mat_<double>(5, 1) << -0.038407383078874, 0.236392800301615, 0.004121779274885, 0.002296129959664, 0);

// Translation vector T between the cameras (mm), corresponding to the T obtained in MATLAB
Mat T = (Mat_<double>(3, 1) << -1.210187345641146e+02, 0.519235426836325, -0.425535566316217);

// Rotation between the cameras as a 3x3 rotation matrix (the transpose of the MATLAB matrix below).
// Earlier alternative for my camera, as a rotation vector (MATLAB om):
// Mat rec = (Mat_<double>(3, 1) << -0.00306, -0.03207, 0.00206);
Mat rec = (Mat_<double>(3, 3) << 0.999341122700880, -0.00206388651740061, 0.0362361815232777,
                                 0.000660748031451783, 0.999250989651683, 0.0386913826603732,
                                 -0.0362888948713456, -0.0386419468010579, 0.998593969567432);
/* MATLAB layout:
   0.999341122700880     0.000660748031451783  -0.0362888948713456
   -0.00206388651740061  0.999250989651683     -0.0386419468010579
   0.0362361815232777    0.0386913826603732    0.998593969567432  */

// Parameters of another camera (Zhuohua's), kept for reference:
// Mat T = (Mat_<double>(3, 1) << -48.4, 0.241, -0.0344);
// Mat rec = (Mat_<double>(3, 1) << -0.039, -0.04658, 0.00106);

Mat R;  // rotation passed to stereoRectify (filled by Rodrigues in main)

/***** Stereo matching *****/
void stereo_match(int, void*)
{
    bm->setBlockSize(2 * blockSize + 5);              // SAD window size; must be odd, 5-21 works well
    bm->setROI1(validROIL);
    bm->setROI2(validROIR);
    bm->setPreFilterCap(31);
    bm->setMinDisparity(0);                           // minimum disparity, default 0; may be negative (int)
    bm->setNumDisparities(numDisparities * 16 + 16);  // search range (max disparity - min disparity); must be a positive multiple of 16
    bm->setTextureThreshold(10);
    bm->setUniquenessRatio(uniquenessRatio);          // mainly suppresses false matches
    bm->setSpeckleWindowSize(100);
    bm->setSpeckleRange(32);
    bm->setDisp12MaxDiff(-1);
    Mat disp, disp8;
    bm->compute(rectifyImageL, rectifyImageR, disp);  // inputs must be 8-bit grayscale
    // disp is CV_16S fixed point (16 * true disparity); scale it to 0..255 for display
    disp.convertTo(disp8, CV_8U, 255 / ((numDisparities * 16 + 16) * 16.));
    // Because disp holds 16 * disparity, the X/W, Y/W, Z/W from reprojectImageTo3D are 1/16 of the
    // true values; multiplying by 16 restores correct 3D coordinates (in the units of T, i.e. mm).
    reprojectImageTo3D(disp, xyz, Q, true);
    xyz = xyz * 16;
    imshow("disparity", disp8);
}

/***** Mouse callback: print the 3D coordinates and distance of the clicked point *****/
static void onMouse(int event, int x, int y, int, void*)
{
    if (selectObject)
    {
        selection.x = MIN(x, origin.x);
        selection.y = MIN(y, origin.y);
        selection.width = std::abs(x - origin.x);
        selection.height = std::abs(y - origin.y);
    }
    switch (event)
    {
    case EVENT_LBUTTONDOWN:  // left mouse button pressed
        origin = Point(x, y);
        selection = Rect(x, y, 0, 0);
        selectObject = true;
        point3 = xyz.at<Vec3f>(origin);
        cout << "World coordinates:" << endl;
        cout << "x: " << point3[0] << " y: " << point3[1] << " z: " << point3[2] << endl;
        d = point3[0] * point3[0] + point3[1] * point3[1] + point3[2] * point3[2];
        d = sqrt(d);    // mm
        d = d / 10.0;   // cm
        cout << "Distance: " << d << " cm" << endl;
        // For metres: d / 100.0 (d is already in cm here)
        break;
    case EVENT_LBUTTONUP:    // left mouse button released
        selectObject = false;
        break;
    }
}

/***** Main function *****/
int main()
{
    /* Stereo rectification */
    Rodrigues(rec, R);  // converts the 3x3 matrix rec into a 3x1 rotation vector; stereoRectify accepts either form
    stereoRectify(cameraMatrixL, distCoeffL, cameraMatrixR, distCoeffR, imageSize, R, T, Rl, Rr, Pl, Pr, Q,
                  CALIB_ZERO_DISPARITY, 0, imageSize, &validROIL, &validROIR);
    initUndistortRectifyMap(cameraMatrixL, distCoeffL, Rl, Pl, imageSize, CV_32FC1, mapLx, mapLy);
    initUndistortRectifyMap(cameraMatrixR, distCoeffR, Rr, Pr, imageSize, CV_32FC1, mapRx, mapRy);

    /* Open the camera */
    VideoCapture cap;
    cap.open(1);  // a built-in webcam is usually index 0 and an external one index 1; check Device Manager
    cap.set(CAP_PROP_FRAME_WIDTH, 2560);  // capture width (both views side by side)
    cap.set(CAP_PROP_FRAME_HEIGHT, 720);  // capture height
    if (!cap.isOpened())  // check that the camera opened
    {
        cout << "Failed to open the camera!" << endl;
        return -1;
    }

    /* Create the disparity window, trackbars and mouse callback once, before the loop */
    namedWindow("disparity", WINDOW_AUTOSIZE);
    // Trackbar for the SAD window size
    createTrackbar("BlockSize:\n", "disparity", &blockSize, 8, stereo_match);
    // Trackbar for the disparity uniqueness percentage
    createTrackbar("UniquenessRatio:\n", "disparity", &uniquenessRatio, 50, stereo_match);
    // Trackbar for the disparity search range
    createTrackbar("NumDisparities:\n", "disparity", &numDisparities, 16, stereo_match);
    // setMouseCallback(window name, callback, user data passed to the callback, usually 0)
    setMouseCallback("disparity", onMouse, 0);

    Mat frame, frame_L, frame_R;
    const double fScale = 0.5;  // scale factor: 2560x720 is too large for small screens, so show it at half size
    while (true) {
        cap >> frame;  // grab one frame
        if (frame.empty())
            break;
        Mat imagedst;
        resize(frame, imagedst, Size(), fScale, fScale);  // 2560x720 -> 1280x360
        if (imagedst.cols != 2 * imageWidth || imagedst.rows != imageHeight) {
            cout << "Unexpected frame size: " << frame.cols << "x" << frame.rows << endl;
            break;
        }
        frame_L = imagedst(Rect(0, 0, imageWidth, imageHeight));           // left camera image after scaling
        frame_R = imagedst(Rect(imageWidth, 0, imageWidth, imageHeight));  // right camera image after scaling
        // imshow("Video_L", frame_L);
        // imshow("Video_R", frame_R);

        /* Convert to grayscale (instead of reading image files as in Part 1) */
        cvtColor(frame_L, grayImageL, COLOR_BGR2GRAY);
        cvtColor(frame_R, grayImageR, COLOR_BGR2GRAY);
        // imshow("ImageL Before Rectify", grayImageL);
        // imshow("ImageR Before Rectify", grayImageR);

        /* After remap, the left and right images are coplanar and row-aligned */
        remap(grayImageL, rectifyImageL, mapLx, mapLy, INTER_LINEAR);
        remap(grayImageR, rectifyImageR, mapRx, mapRy, INTER_LINEAR);

        /* Display the rectification result */
        Mat rgbRectifyImageL, rgbRectifyImageR;
        cvtColor(rectifyImageL, rgbRectifyImageL, COLOR_GRAY2BGR);  // 3-channel copy so colored lines can be drawn
        cvtColor(rectifyImageR, rgbRectifyImageR, COLOR_GRAY2BGR);
        // imshow("ImageL After Rectify", rgbRectifyImageL);
        // imshow("ImageR After Rectify", rgbRectifyImageR);

        // Show both views on one canvas
        Mat canvas;
        double sf = 600. / MAX(imageSize.width, imageSize.height);
        int w = cvRound(imageSize.width * sf);
        int h = cvRound(imageSize.height * sf);
        canvas.create(h, w * 2, CV_8UC3);  // note: 3 channels

        // Draw the left image onto the canvas
        Mat canvasPart = canvas(Rect(w * 0, 0, w, h));  // left half of the canvas
        resize(rgbRectifyImageL, canvasPart, canvasPart.size(), 0, 0, INTER_AREA);  // scale the image to canvasPart's size
        Rect vroiL(cvRound(validROIL.x * sf), cvRound(validROIL.y * sf),  // valid (cropped) region, scaled
                   cvRound(validROIL.width * sf), cvRound(validROIL.height * sf));
        //rectangle(canvasPart, vroiL, Scalar(0, 0, 255), 3, 8);  // outline the valid region

        // Draw the right image onto the canvas
        canvasPart = canvas(Rect(w, 0, w, h));  // right half of the canvas
        resize(rgbRectifyImageR, canvasPart, canvasPart.size(), 0, 0, INTER_LINEAR);
        Rect vroiR(cvRound(validROIR.x * sf), cvRound(validROIR.y * sf),
                   cvRound(validROIR.width * sf), cvRound(validROIR.height * sf));
        //rectangle(canvasPart, vroiR, Scalar(0, 0, 255), 3, 8);

        // Draw horizontal lines to check the row alignment
        for (int i = 0; i < canvas.rows; i += 16)
            line(canvas, Point(0, i), Point(canvas.cols, i), Scalar(0, 255, 0), 1, 8);
        imshow("rectified", canvas);

        /* Stereo matching with the current trackbar settings */
        stereo_match(0, 0);
        if (waitKey(10) == 27)  // press Esc to quit
            break;
    }
    return 0;
}
```

Hope this helps.

If you find anything that could be improved, feedback is welcome.

Supplementary notes:

1. How are the world coordinates obtained?

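1) x, y and z are read from `xyz`, the output of `reprojectImageTo3D` (already multiplied by 16), at the clicked pixel `origin`, and are in the units of `T`, i.e. millimetres:

```cpp
Vec3f point3;
point3 = xyz.at<Vec3f>(origin);
cout << "x: " << point3[0] << " y: " << point3[1] << " z: " << point3[2] << endl;
```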

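2) Taking the square root of the sum of the squares of x, y and z is the two-point distance formula: it gives the distance between the point (0, 0, 0) and the point (x, y, z). Here (0, 0, 0) is the optical center of the rectified left camera, which is the origin of the coordinates produced by `reprojectImageTo3D`, rather than the midpoint between the two cameras.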