I. Environment

1. Operating system

CentOS Linux release 7.2.1511 (Core)

2. Services

keepalived + nginx dual-master high-availability load-balancing cluster with a LAMP application

keepalived-1.2.13-7.el7.x86_64

nginx-1.10.2-1.el7.x86_64

httpd-2.4.6-45.el7.centos.x86_64

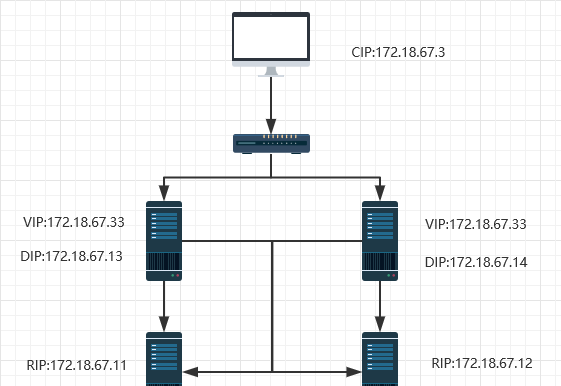

II. Principles and topology

1. The VRRP protocol

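In real networks, two hosts that need to communicate usually have no direct physical connection. How, then, is the route between them chosen, and how does a host pick the next hop toward the destination host? There are two common approaches:

Run a dynamic routing protocol (RIP, OSPF, etc.) on the host

Configure static routes on the host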

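Running dynamic routing on hosts is clearly impractical because of the management and maintenance cost and because not every host supports it. Static routes are therefore the common choice, but the router (that is, the default gateway) then becomes a single point of failure. VRRP exists to remove that single point of failure: it uses an election protocol to dynamically hand the routing role of a virtual router to one of the VRRP routers on the LAN.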
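The election idea can be sketched in a few lines of bash. This is only an illustration, not keepalived's implementation: `elect_master` is a hypothetical helper that takes `name:priority` pairs and prints the name with the highest priority, using the priorities 100 and 95 that appear in the configuration later in this article.

```shell
#!/bin/bash
# Hypothetical helper: print the router that would win an election.
# Arguments are "name:priority" pairs; the highest priority wins.
# On a tie this sketch keeps the first entry (real VRRP compares
# the routers' IP addresses instead).
elect_master() {
    local best_name="" best_prio=-1 entry name prio
    for entry in "$@"; do
        name=${entry%%:*}     # text before the first colon
        prio=${entry##*:}     # text after the last colon
        if (( prio > best_prio )); then
            best_prio=$prio
            best_name=$name
        fi
    done
    echo "$best_name"
}

elect_master node1:100 node2:95   # both routers alive: prints node1
elect_master node2:95             # node1 has failed: prints node2
```

When the higher-priority router disappears, the remaining router wins the next election, which is the behavior the failover test in section IV exercises.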

2. nginx reverse proxy

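nginx performs load balancing as a reverse proxy. In reverse proxying, a proxy server accepts connection requests from the Internet, forwards them to servers on the internal network, and returns the results from those servers to the requesting clients; from the outside, the proxy looks like a single server. (For contrast, a forward proxy sits between a client and an origin server: to fetch content, the client sends the proxy a request that names the target origin server, and the proxy forwards the request and returns the content it obtains to the client.)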

3. Topology diagram

III. Configuration

1. Backend real server (RS) configuration

[root@inode4 ~]# yum install httpd -y

[root@inode5 ~]# yum install httpd -y

2. nginx reverse proxy configuration

MASTER:

    upstream websrvs {
        server 172.18.67.11:80;
        server 172.18.67.12:80;
        server 127.0.0.1:80 backup;
    }

    server {
        listen 80;
        location / {
            proxy_pass http://websrvs;
        }
    }

BACKUP:

    upstream websrvs {
        server 172.18.67.11:80;
        server 172.18.67.12:80;
        server 127.0.0.1:80 backup;
    }

    server {
        listen 80;
        location / {
            proxy_pass http://websrvs;
        }
    }
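To make the effect of the `upstream` block more concrete, here is a simplified bash model of round-robin selection with a backup server. It is a sketch of the idea only, not how nginx is implemented; `pick_backend` and its argument order are invented for this example, and it assumes the caller passes only the primaries that are currently reachable.

```shell
#!/bin/bash
# Simplified model of round-robin with a backup server.
# Usage: pick_backend REQUEST_NUMBER BACKUP [PRIMARY...]
# Prints the primary chosen for this 0-based request number,
# or BACKUP when no primary is available.
pick_backend() {
    local n=$1 backup=$2
    shift 2
    if (( $# == 0 )); then
        echo "$backup"
        return
    fi
    local primaries=("$@")
    echo "${primaries[n % $#]}"
}

# Four requests alternate between the two real servers.
for n in 0 1 2 3; do
    pick_backend "$n" 127.0.0.1:80 172.18.67.11:80 172.18.67.12:80
done
```

With both primaries listed the output alternates between 172.18.67.11:80 and 172.18.67.12:80; calling `pick_backend 0 127.0.0.1:80` with no primaries prints the backup address.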

3. keepalived high-availability configuration

MASTER:

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id node1
        vrrp_mcast_group4 224.0.67.67
    }

    vrrp_script chk_down {
        script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"
        interval 1
        weight -5
    }

    vrrp_script chk_nginx {
        script "killall -0 nginx && exit 0 || exit 1"
        interval 1
        weight -5
        fall 2
        rise 1
    }

    vrrp_instance myr {
        state MASTER
        interface eno16777736
        virtual_router_id 167
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 571f97b2
        }
        virtual_ipaddress {
            172.18.67.33/16 dev eno16777736
        }
        track_script {
            chk_down
            chk_nginx
        }
        notify_master "/etc/keepalived/notify.sh master"
        notify_backup "/etc/keepalived/notify.sh backup"
        notify_fault "/etc/keepalived/notify.sh fault"
    }

BACKUP:

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id node1
        vrrp_mcast_group4 224.0.67.67
    }

    vrrp_script chk_down {
        script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"
        interval 1
        weight -5
    }

    vrrp_script chk_nginx {
        script "killall -0 nginx && exit 0 || exit 1"
        interval 1
        weight -5
        fall 2
        rise 1
    }

    vrrp_instance myr {
        state BACKUP
        interface eno16777736
        virtual_router_id 167
        priority 95
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 571f97b2
        }
        virtual_ipaddress {
            172.18.67.33/16 dev eno16777736
        }
        track_script {
            chk_down
            chk_nginx
        }
        notify_master "/etc/keepalived/notify.sh master"
        notify_backup "/etc/keepalived/notify.sh backup"
        notify_fault "/etc/keepalived/notify.sh fault"
    }
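The `chk_down` script and its negative `weight` can be tried outside keepalived with a small bash sketch. The two functions below are illustrative stand-ins, not part of keepalived: `chk_down` repeats the flag-file test from the configuration (with the path made a parameter so it can be run anywhere), and `effective_priority` shows the arithmetic of applying the weight when the check fails.

```shell
#!/bin/bash
# Same test as the chk_down vrrp_script: fail (1) when the flag file
# exists, succeed (0) otherwise. The default path matches the config.
chk_down() {
    local flag=${1:-/etc/keepalived/down}
    [[ -f $flag ]] && return 1 || return 0
}

# Illustrative arithmetic only: base priority plus the (negative)
# weight when the check fails, base priority unchanged when it passes.
effective_priority() {
    local base=$1 weight=$2 flag=$3
    if chk_down "$flag"; then
        echo "$base"
    else
        echo $(( base + weight ))
    fi
}

# Example: effective_priority 100 -5 /etc/keepalived/down
```

With the values in this article, a failed check would lower the MASTER from 100 to 95, which equals the BACKUP's priority rather than dropping below it. Whether that is enough to move the VIP depends on how ties are resolved, so a larger penalty may be worth testing.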

4. Example notification script

[root@inode2 nginx]# vim notify.sh

    #!/bin/bash
    #
    contact='root@localhost'

    # Mail a one-line notice about the new VRRP state to $contact.
    notify() {
        mailsubject="$(hostname) to be $1, vip floating"
        mailbody="$(date +'%F %T'): vrrp transition, $(hostname) changed to be $1"
        echo "$mailbody" | mail -s "$mailsubject" "$contact"
    }

    case $1 in
    master)
        notify master
        ;;
    backup)
        notify backup
        ;;
    fault)
        notify fault
        ;;
    *)
        echo "Usage: $(basename "$0") {master|backup|fault}"
        exit 1
        ;;
    esac

Configure node 2 the same way.

IV. Start the services and test

1. Start the backend web servers

[root@inode4 ~]# systemctl start httpd

[root@inode5 ~]# systemctl start httpd

To make the test results easy to tell apart, give each server its own index page:

[root@inode4 ~]# echo "RS1:172.18.67.11" > /var/www/html/index.html

[root@inode5 ~]# echo "RS2:172.18.67.12" > /var/www/html/index.html

2. Test

[root@inode2 ~]# systemctl start keepalived

[root@inode2 ~]# systemctl status -l keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since Mon 2017-05-15 15:45:20 CST; 3s ago

Process: 20971 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 20972 (keepalived)

CGroup: /system.slice/keepalived.service

├─20972 /usr/sbin/keepalived -D

├─20973 /usr/sbin/keepalived -D

└─20974 /usr/sbin/keepalived -D

May 15 15:45:20 inode2 Keepalived_healthcheckers[20973]: Opening file '/etc/keepalived/keepalived.conf'.

May 15 15:45:20 inode2 Keepalived_healthcheckers[20973]: Configuration is using : 7521 Bytes

May 15 15:45:20 inode2 Keepalived_healthcheckers[20973]: Using LinkWatch kernel netlink reflector...

May 15 15:45:20 inode2 Keepalived_vrrp[20974]: VRRP_Script(chk_nginx) succeeded

May 15 15:45:21 inode2 Keepalived_vrrp[20974]: VRRP_Instance(myr) Transition to MASTER STATE

May 15 15:45:22 inode2 Keepalived_vrrp[20974]: VRRP_Instance(myr) Entering MASTER STATE

May 15 15:45:22 inode2 Keepalived_vrrp[20974]: VRRP_Instance(myr) setting protocol VIPs.

May 15 15:45:22 inode2 Keepalived_vrrp[20974]: VRRP_Instance(myr) Sending gratuitous ARPs on eno16777736 for 172.18.67.33

May 15 15:45:22 inode2 Keepalived_vrrp[20974]: Opening script file /etc/keepalived/notify.sh

May 15 15:45:22 inode2 Keepalived_healthcheckers[20973]: Netlink reflector reports IP 172.18.67.33 added

[root@inode2 ~]# ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:8b:08:6f brd ff:ff:ff:ff:ff:ff

inet 172.18.67.13/16 brd 172.18.255.255 scope global eno16777736

valid_lft forever preferred_lft forever

inet 172.18.67.33/16 scope global secondary eno16777736

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe8b:86f/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

The primary node has started and logged Entering MASTER STATE. Now test access from a client:

[root@inode1 ~]# for i in {1..4};do curl http://172.18.67.33;done

RS1:172.18.67.11

RS2:172.18.67.12

RS1:172.18.67.11

RS2:172.18.67.12
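For longer runs it can be easier to count responses than to read them. The following is a minimal sketch; `tally_backends` is a hypothetical helper that reads one response body per line on standard input and prints a count for each distinct body.

```shell
#!/bin/bash
# Hypothetical helper: count how many responses came from each backend.
# Reads one response body per line on stdin; prints "COUNT BODY" lines
# sorted by body.
tally_backends() {
    awk '{ count[$0]++ } END { for (k in count) print count[k], k }' | sort -k2
}

# Example against the VIP used in this article (needs the cluster running):
# for i in {1..4}; do curl -s http://172.18.67.33; done | tally_backends
```

For the four responses shown above, this would report two hits for each real server.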

Access works. Next, start keepalived on the backup node:

[root@inode3 keepalived]# systemctl start keepalived

[root@inode3 keepalived]# systemctl status -l keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since Mon 2017-05-15 15:46:51 CST; 3s ago

Process: 24329 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 24330 (keepalived)

CGroup: /system.slice/keepalived.service

├─24330 /usr/sbin/keepalived -D

├─24331 /usr/sbin/keepalived -D

└─24332 /usr/sbin/keepalived -D

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: Registering Kernel netlink command channel

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: Registering gratuitous ARP shared channel

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: Opening file '/etc/keepalived/keepalived.conf'.

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: Configuration is using : 66427 Bytes

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: Using LinkWatch kernel netlink reflector...

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: VRRP_Instance(myr) Entering BACKUP STATE

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: Opening script file /etc/keepalived/notify.sh

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: VRRP_Script(chk_down) succeeded

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: VRRP_Script(chk_nginx) succeeded

[root@inode3 keepalived]# ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:78:24:c3 brd ff:ff:ff:ff:ff:ff

inet 172.18.67.14/16 brd 172.18.255.255 scope global eno16777736

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe78:24c3/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

The backup node has started and entered the BACKUP state (Entering BACKUP STATE). Next, test whether the service stays available when the primary node goes down:

[root@inode2 ~]# systemctl stop keepalived

With the primary node down, check the state of the backup node:

[root@inode3 keepalived]# systemctl status -l keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since Mon 2017-05-15 15:46:51 CST; 2min 19s ago

Process: 24329 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 24330 (keepalived)

CGroup: /system.slice/keepalived.service

├─24330 /usr/sbin/keepalived -D

├─24331 /usr/sbin/keepalived -D

└─24332 /usr/sbin/keepalived -D

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: VRRP_Script(chk_down) succeeded

May 15 15:46:51 inode3 Keepalived_vrrp[24332]: VRRP_Script(chk_nginx) succeeded

May 15 15:48:35 inode3 Keepalived_vrrp[24332]: VRRP_Instance(myr) Transition to MASTER STATE

May 15 15:48:36 inode3 Keepalived_vrrp[24332]: VRRP_Instance(myr) Entering MASTER STATE

May 15 15:48:36 inode3 Keepalived_vrrp[24332]: VRRP_Instance(myr) setting protocol VIPs.

May 15 15:48:36 inode3 Keepalived_vrrp[24332]: VRRP_Instance(myr) Sending gratuitous ARPs on eno16777736 for 172.18.67.33

May 15 15:48:36 inode3 Keepalived_vrrp[24332]: Opening script file /etc/keepalived/notify.sh

May 15 15:48:36 inode3 Keepalived_healthcheckers[24331]: Netlink reflector reports IP 172.18.67.33 added

May 15 15:48:41 inode3 Keepalived_vrrp[24332]: VRRP_Instance(myr) Sending gratuitous ARPs on eno16777736 for 172.18.67.33

[root@inode3 keepalived]# ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:78:24:c3 brd ff:ff:ff:ff:ff:ff

inet 172.18.67.14/16 brd 172.18.255.255 scope global eno16777736

valid_lft forever preferred_lft forever

inet 172.18.67.33/16 scope global secondary eno16777736

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe78:24c3/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever
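The same check can be scripted instead of read by eye. In this sketch, `holds_vip` is a hypothetical helper that scans `ip` address output on standard input for the given IPv4 address; it assumes the `inet ADDRESS/PREFIX` format shown in the listing above.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the given IPv4 address appears as an
# "inet ADDRESS/PREFIX" entry in ip address output read from stdin.
holds_vip() {
    local vip=$1
    grep -qF "inet $vip/"
}

# Example on a node (interface name taken from this article):
# ip -4 addr show dev eno16777736 | holds_vip 172.18.67.33 && echo "VIP is here"
```

Running the example on both nodes before and after stopping keepalived on the primary should show the VIP on exactly one node at a time.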

The backup node has moved from the BACKUP state to the MASTER state, and the VIP is now bound to it. Run the access test again:

[root@inode1 ~]# for i in {1..4};do curl http://172.18.67.33;done

RS1:172.18.67.11

RS2:172.18.67.12

RS1:172.18.67.11

RS2:172.18.67.12

Test with one web server down:

[root@inode4 ~]# systemctl stop httpd

[root@inode1 ~]# for i in {1..4};do curl http://172.18.67.33;done

RS2:172.18.67.12

RS2:172.18.67.12

RS2:172.18.67.12

RS2:172.18.67.12

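In a real production environment the two backend web servers would serve identical content. Here the client still reaches a server successfully in every case, so this architecture demonstrates high-availability load balancing.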