TensorFlow version used in this article: 1.4

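Installing TensorFlow: pip install tensorflow==1.4.0 (the version is pinned because the code below uses the 1.x API; a plain pip install tensorflow installs the current release)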

1. A first look at the results

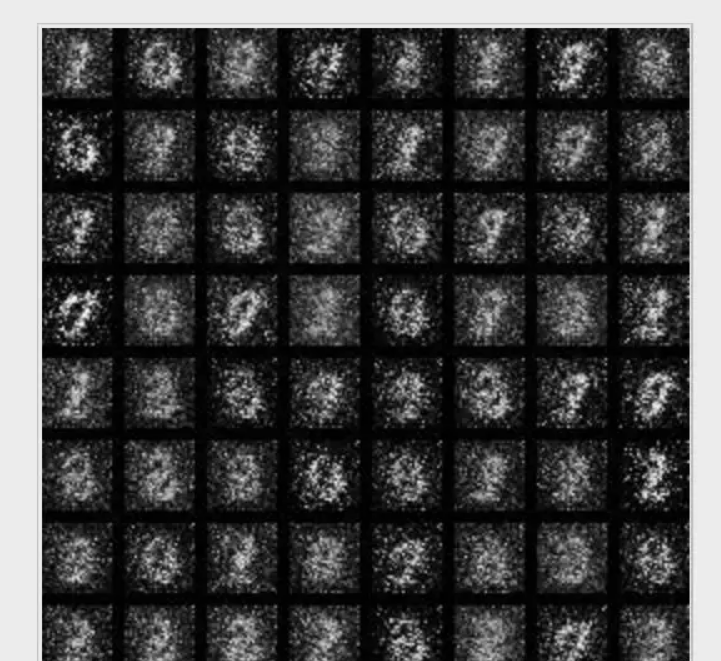

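This article explains how to use a GAN to generate handwritten digits. Let's first look at what it produces:

After 10 epochs:

At epoch 10 the Generator's images are very blurry, and the digits can hardly be told apart by eye. By epoch 50 the shapes are starting to emerge: some digits, such as 1, 4 and 5, are clearly recognizable, although the result is still far from perfect. By epoch 100, digits such as 8 and 9 are also generated fairly accurately, and by epoch 200 all but a few digits are drawn correctly. For lack of time, training was stopped there. If you are interested, train for more epochs and see how much better the results get.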

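2. Walkthrough

Setting the parameters

The settings are as follows. Images are 28*28, the standard size of MNIST images, followed by a few settings for saving the model. Training runs for 500 epochs in total. Both the Generator and the Discriminator are simple fully connected networks with two hidden layers. The Generator takes an input of shape [batch_size, z_size]; its first hidden layer has 150 neurons, its second has 300, and its output has the image size 28*28. The Discriminator takes an input of shape [batch_size * 2, img_size], because real images and Generator-produced images are fed in together. Its first hidden layer has 300 neurons, its second has 150, and its output layer has a single value: the probability that the image is real. The network structure is covered in detail below.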
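To make the layer sizes above concrete, here is a minimal sketch in plain Python that counts the weights and biases implied by those sizes. The helper name `dense_param_count` is hypothetical and only serves this illustration; the sizes themselves are the article's.

```python
# Layer sizes taken from the article's settings.
img_size = 28 * 28          # 784 pixels per flattened MNIST image
z_size, h1_size, h2_size = 100, 150, 300
batch_size = 256


def dense_param_count(layer_sizes):
    """Count weights plus biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


generator_layers = [z_size, h1_size, h2_size, img_size]
discriminator_layers = [img_size, h2_size, h1_size, 1]

generator_params = dense_param_count(generator_layers)
discriminator_params = dense_param_count(discriminator_layers)

print("Generator:     %s -> %d parameters" % (generator_layers, generator_params))
print("Discriminator: %s -> %d parameters" % (discriminator_layers, discriminator_params))
print("Discriminator input rows per step:", 2 * batch_size)
```

The two networks mirror each other: the Generator widens from 100 to 784 values, while the Discriminator narrows from 784 values to a single output.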

img_height = 28
img_width = 28
img_size = img_height * img_width

to_train = True
to_restore = False
output_path = "output"

# total number of training epochs: 500
max_epoch = 500

h1_size = 150
h2_size = 300
z_size = 100
batch_size = 256

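Building the Generator

As mentioned above, the Generator takes an input of shape [batch_size, z_size]; its first hidden layer has 150 neurons, its second has 300, and its output has the image size 28*28. In short, the Generator maps [batch_size, z_size] to [batch_size, img_size].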

# generate (model 1)
def build_generator(z_prior):
    w1 = tf.Variable(tf.truncated_normal([z_size, h1_size], stddev=0.1), name="g_w1", dtype=tf.float32)
    b1 = tf.Variable(tf.zeros([h1_size]), name="g_b1", dtype=tf.float32)
    h1 = tf.nn.relu(tf.matmul(z_prior, w1) + b1)
    w2 = tf.Variable(tf.truncated_normal([h1_size, h2_size], stddev=0.1), name="g_w2", dtype=tf.float32)
    b2 = tf.Variable(tf.zeros([h2_size]), name="g_b2", dtype=tf.float32)
    h2 = tf.nn.relu(tf.matmul(h1, w2) + b2)
    w3 = tf.Variable(tf.truncated_normal([h2_size, img_size], stddev=0.1), name="g_w3", dtype=tf.float32)
    b3 = tf.Variable(tf.zeros([img_size]), name="g_b3", dtype=tf.float32)
    h3 = tf.matmul(h2, w3) + b3
    x_generate = tf.nn.tanh(h3)
    g_params = [w1, b1, w2, b2, w3, b3]
    return x_generate, g_params
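The same forward pass can be traced outside TensorFlow to check the shapes. The following is a rough NumPy sketch, not the article's code: the helpers `init_layer` and `generator_forward` are hypothetical names, and the weights are drawn from a plain normal distribution rather than a truncated one.

```python
import numpy as np

z_size, h1_size, h2_size, img_size = 100, 150, 300, 28 * 28
rng = np.random.RandomState(0)


def init_layer(n_in, n_out):
    """Weights ~ N(0, 0.1^2) and zero biases (plain normal, not truncated)."""
    return rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out)


def generator_forward(z, params):
    """Two ReLU hidden layers followed by a tanh output layer."""
    (w1, b1), (w2, b2), (w3, b3) = params
    h1 = np.maximum(z.dot(w1) + b1, 0.0)
    h2 = np.maximum(h1.dot(w2) + b2, 0.0)
    return np.tanh(h2.dot(w3) + b3)


params = [init_layer(z_size, h1_size),
          init_layer(h1_size, h2_size),
          init_layer(h2_size, img_size)]
z = rng.normal(0.0, 1.0, size=(4, z_size))   # a tiny batch of 4 noise vectors
fake_images = generator_forward(z, params)
print(fake_images.shape)
```

Because the last activation is tanh, every generated pixel lies in [-1, 1], which is why the real images are rescaled to the same range before training.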

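Building the Discriminator

The Discriminator takes an input of shape [batch_size * 2, img_size], because real images and Generator-produced images are fed in together. Its first hidden layer has 300 neurons, its second has 150, and its output layer has a single value: the probability that the image is real. Note that inputs and outputs correspond row by row, so in the output layer's result h3 the first batch_size rows are the scores for the real images and the last batch_size rows are the scores for the generated images. The code uses tf.slice() to separate them. I had not used this function before, so here are some notes on it: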

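1. Function prototype: tf.slice(input_, begin, size, name=None)

2. Purpose: extract part of input_

input_: the tensor to slice (a list or array is converted to a tensor)

begin: a list with one entry per dimension; begin[i] is the zero-based offset at which extraction starts along dimension i

size: a list with one entry per dimension; size[i] is the number of elements to extract along dimension i, and -1 means all remaining elements of that dimension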

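So in the end y_data holds the probabilities assigned to the real images, which should be as close to 1 as possible, and y_generated holds the probabilities assigned to the Generator's images, which should be as close to 0 as possible.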
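The begin/size semantics are easy to try out without a TensorFlow session. Below is a small NumPy stand-in; `slice_like_tf` is a hypothetical helper written for this illustration, not a TensorFlow or NumPy function, and it only mimics the behaviour described in the notes above.

```python
import numpy as np


def slice_like_tf(arr, begin, size):
    """NumPy stand-in for tf.slice: size[i] == -1 means 'to the end of axis i'."""
    index = tuple(
        slice(b, None if s == -1 else b + s)
        for b, s in zip(begin, size)
    )
    return arr[index]


batch_size = 3
# Pretend Discriminator outputs: rows 0-2 belong to real images, rows 3-5 to generated ones.
h3 = np.arange(2 * batch_size, dtype=np.float32).reshape(2 * batch_size, 1)

real_part = slice_like_tf(h3, [0, 0], [batch_size, -1])
fake_part = slice_like_tf(h3, [batch_size, 0], [-1, -1])
print(real_part.ravel())
print(fake_part.ravel())
```

The first call takes batch_size rows starting at row 0, and the second takes everything from row batch_size onward, which is the same split the Discriminator applies to h3.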

def build_discriminator(x_data, x_generated, keep_prob):
    # tf.concat
    x_in = tf.concat([x_data, x_generated], 0)
    w1 = tf.Variable(tf.truncated_normal([img_size, h2_size], stddev=0.1), name="d_w1", dtype=tf.float32)
    b1 = tf.Variable(tf.zeros([h2_size]), name="d_b1", dtype=tf.float32)
    h1 = tf.nn.dropout(tf.nn.relu(tf.matmul(x_in, w1) + b1), keep_prob)
    w2 = tf.Variable(tf.truncated_normal([h2_size, h1_size], stddev=0.1), name="d_w2", dtype=tf.float32)
    b2 = tf.Variable(tf.zeros([h1_size]), name="d_b2", dtype=tf.float32)
    h2 = tf.nn.dropout(tf.nn.relu(tf.matmul(h1, w2) + b2), keep_prob)
    w3 = tf.Variable(tf.truncated_normal([h1_size, 1], stddev=0.1), name="d_w3", dtype=tf.float32)
    b3 = tf.Variable(tf.zeros([1]), name="d_b3", dtype=tf.float32)
    h3 = tf.matmul(h2, w3) + b3
    y_data = tf.nn.sigmoid(tf.slice(h3, [0, 0], [batch_size, -1], name=None))
    y_generated = tf.nn.sigmoid(tf.slice(h3, [batch_size, 0], [-1, -1], name=None))
    d_params = [w1, b1, w2, b2, w3, b3]
    return y_data, y_generated, d_params

Saving images

The image-saving code is not the focus of this article, so it is not explained here. Feel free to study it if you are interested:

def show_result(batch_res, fname, grid_size=(8, 8), grid_pad=5):
    # map Generator outputs from [-1, 1] back to [0, 1] and restore the 2-D image shape
    batch_res = 0.5 * batch_res.reshape((batch_res.shape[0], img_height, img_width)) + 0.5
    img_h, img_w = batch_res.shape[1], batch_res.shape[2]
    grid_h = img_h * grid_size[0] + grid_pad * (grid_size[0] - 1)
    grid_w = img_w * grid_size[1] + grid_pad * (grid_size[1] - 1)
    img_grid = np.zeros((grid_h, grid_w), dtype=np.uint8)
    for i, res in enumerate(batch_res):
        if i >= grid_size[0] * grid_size[1]:
            break
        img = (res) * 255
        img = img.astype(np.uint8)
        # divide by the column count so that non-square grids are also filled row by row
        row = (i // grid_size[1]) * (img_h + grid_pad)
        col = (i % grid_size[1]) * (img_w + grid_pad)
        img_grid[row:row + img_h, col:col + img_w] = img
    imsave(fname, img_grid)
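For readers who do want to follow the grid arithmetic, here is a short plain-Python sketch of the same layout calculation with the default arguments. The helper `tile_origin` is a hypothetical name used only here; it obtains the row index by dividing by the number of columns.

```python
img_h, img_w = 28, 28
grid_size = (8, 8)      # (rows, columns)
grid_pad = 5            # pixels between neighbouring tiles

# Total canvas size: all tiles plus the padding strips between them.
grid_h = img_h * grid_size[0] + grid_pad * (grid_size[0] - 1)
grid_w = img_w * grid_size[1] + grid_pad * (grid_size[1] - 1)


def tile_origin(i):
    """Top-left pixel of the i-th tile, filling the grid row by row."""
    row = (i // grid_size[1]) * (img_h + grid_pad)
    col = (i % grid_size[1]) * (img_w + grid_pad)
    return row, col


print(grid_h, grid_w)
print(tile_origin(0), tile_origin(9), tile_origin(63))
```

With the defaults, only the first 64 images of each 256-image batch end up in the saved grid.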

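Setting the training objective

The Discriminator wants its binary classification to be as accurate as possible, that is, to tell real images from Generator-produced ones as well as it can, so we use a loss similar to the one in logistic regression. The Generator wants the Discriminator to be unable to recognize its images, so it wants as many of them as possible to be classified as real. The training objectives are therefore set as follows: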

# loss functions
d_loss = - (tf.log(y_data) + tf.log(1 - y_generated))
g_loss = - tf.log(y_generated)

optimizer = tf.train.AdamOptimizer(0.0001)

# training ops for the two models; each one updates only its own parameters
d_trainer = optimizer.minimize(d_loss, var_list=d_params)
g_trainer = optimizer.minimize(g_loss, var_list=g_params)
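To get a feel for how these two losses behave, the sketch below evaluates the same formulas on a few hand-picked probabilities using only the standard library. The function names and the example probabilities are chosen for illustration and are not taken from a training run.

```python
import math


def d_loss(y_data, y_generated):
    """Discriminator loss for one real/generated pair of probabilities."""
    return -(math.log(y_data) + math.log(1.0 - y_generated))


def g_loss(y_generated):
    """Generator loss: small when the Discriminator is fooled."""
    return -math.log(y_generated)


# A Discriminator that separates the two well: real -> 0.9, generated -> 0.1.
confident_d = d_loss(0.9, 0.1)
# A Discriminator that cannot tell them apart: both -> 0.5.
confused_d = d_loss(0.5, 0.5)

print("d_loss confident: %.4f, confused: %.4f" % (confident_d, confused_d))
print("g_loss when D says 0.1: %.4f, when D says 0.9: %.4f" % (g_loss(0.1), g_loss(0.9)))
```

The Discriminator's loss falls as it separates the two kinds of images, while the Generator's loss falls as the Discriminator's output for generated images rises, so the two objectives pull in opposite directions.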

Training

Training is simple: for each batch of batch_size samples, first train the Discriminator once, then train the Generator.

z_sample_val = np.random.normal(0, 1, size=(batch_size, z_size)).astype(np.float32)

steps = 60000 // batch_size  # whole batches per epoch
for i in range(sess.run(global_step), max_epoch):
    for j in range(steps):
        print("epoch:%s, iter:%s" % (i, j))
        # each iteration loads 256 training samples and runs one training step
        x_value, _ = mnist.train.next_batch(batch_size)
        # rescale pixels from [0, 1] to [-1, 1] to match the Generator's tanh output
        x_value = 2 * x_value.astype(np.float32) - 1
        z_value = np.random.normal(0, 1, size=(batch_size, z_size)).astype(np.float32)
        # update the Discriminator
        sess.run(d_trainer,
                 feed_dict={x_data: x_value, z_prior: z_value, keep_prob: 0.7})
        # update the Generator (j % 1 is always 0; raise the modulus to update it less often)
        if j % 1 == 0:
            sess.run(g_trainer,
                     feed_dict={x_data: x_value, z_prior: z_value, keep_prob: 0.7})
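Two small details of the loop are worth checking by hand: how many batches fit into one epoch, and how pixel values are rescaled before they reach the Discriminator. The NumPy sketch below illustrates both; the helper names `to_tanh_range` and `to_unit_range` are hypothetical, and the constant 60000 is simply the figure used in the training code.

```python
import numpy as np

batch_size = 256
steps_per_epoch = 60000 // batch_size   # the article's constant; whole batches only


def to_tanh_range(x):
    """Map pixels from [0, 1] to [-1, 1], as done before feeding x_data."""
    return 2.0 * x.astype(np.float32) - 1.0


def to_unit_range(x):
    """Map Generator outputs from [-1, 1] back to [0, 1] for saving."""
    return 0.5 * x + 0.5


pixels = np.array([0.0, 0.25, 1.0], dtype=np.float32)
scaled = to_tanh_range(pixels)
restored = to_unit_range(scaled)
print(steps_per_epoch, scaled, restored)
```

Rescaling the real images this way puts them in the same range as the Generator's tanh output, so the Discriminator cannot separate the two classes by value range alone.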

3. Complete code

The complete code for this article is as follows:

import os
import shutil

import numpy as np
import tensorflow as tf
from skimage.io import imsave
from tensorflow.examples.tutorials.mnist import input_data

img_height = 28
img_width = 28
img_size = img_height * img_width

to_train = True
to_restore = False
output_path = "output"

# total number of training epochs: 500
max_epoch = 500

h1_size = 150
h2_size = 300
z_size = 100
batch_size = 256


# generate (model 1)
def build_generator(z_prior):
    w1 = tf.Variable(tf.truncated_normal([z_size, h1_size], stddev=0.1), name="g_w1", dtype=tf.float32)
    b1 = tf.Variable(tf.zeros([h1_size]), name="g_b1", dtype=tf.float32)
    h1 = tf.nn.relu(tf.matmul(z_prior, w1) + b1)
    w2 = tf.Variable(tf.truncated_normal([h1_size, h2_size], stddev=0.1), name="g_w2", dtype=tf.float32)
    b2 = tf.Variable(tf.zeros([h2_size]), name="g_b2", dtype=tf.float32)
    h2 = tf.nn.relu(tf.matmul(h1, w2) + b2)
    w3 = tf.Variable(tf.truncated_normal([h2_size, img_size], stddev=0.1), name="g_w3", dtype=tf.float32)
    b3 = tf.Variable(tf.zeros([img_size]), name="g_b3", dtype=tf.float32)
    h3 = tf.matmul(h2, w3) + b3
    x_generate = tf.nn.tanh(h3)
    g_params = [w1, b1, w2, b2, w3, b3]
    return x_generate, g_params


# discriminator (model 2)
def build_discriminator(x_data, x_generated, keep_prob):
    # tf.concat
    x_in = tf.concat([x_data, x_generated], 0)
    w1 = tf.Variable(tf.truncated_normal([img_size, h2_size], stddev=0.1), name="d_w1", dtype=tf.float32)
    b1 = tf.Variable(tf.zeros([h2_size]), name="d_b1", dtype=tf.float32)
    h1 = tf.nn.dropout(tf.nn.relu(tf.matmul(x_in, w1) + b1), keep_prob)
    w2 = tf.Variable(tf.truncated_normal([h2_size, h1_size], stddev=0.1), name="d_w2", dtype=tf.float32)
    b2 = tf.Variable(tf.zeros([h1_size]), name="d_b2", dtype=tf.float32)
    h2 = tf.nn.dropout(tf.nn.relu(tf.matmul(h1, w2) + b2), keep_prob)
    w3 = tf.Variable(tf.truncated_normal([h1_size, 1], stddev=0.1), name="d_w3", dtype=tf.float32)
    b3 = tf.Variable(tf.zeros([1]), name="d_b3", dtype=tf.float32)
    h3 = tf.matmul(h2, w3) + b3
    y_data = tf.nn.sigmoid(tf.slice(h3, [0, 0], [batch_size, -1], name=None))
    y_generated = tf.nn.sigmoid(tf.slice(h3, [batch_size, 0], [-1, -1], name=None))
    d_params = [w1, b1, w2, b2, w3, b3]
    return y_data, y_generated, d_params


def show_result(batch_res, fname, grid_size=(8, 8), grid_pad=5):
    # map Generator outputs from [-1, 1] back to [0, 1] and restore the 2-D image shape
    batch_res = 0.5 * batch_res.reshape((batch_res.shape[0], img_height, img_width)) + 0.5
    img_h, img_w = batch_res.shape[1], batch_res.shape[2]
    grid_h = img_h * grid_size[0] + grid_pad * (grid_size[0] - 1)
    grid_w = img_w * grid_size[1] + grid_pad * (grid_size[1] - 1)
    img_grid = np.zeros((grid_h, grid_w), dtype=np.uint8)
    for i, res in enumerate(batch_res):
        if i >= grid_size[0] * grid_size[1]:
            break
        img = (res) * 255
        img = img.astype(np.uint8)
        # divide by the column count so that non-square grids are also filled row by row
        row = (i // grid_size[1]) * (img_h + grid_pad)
        col = (i % grid_size[1]) * (img_w + grid_pad)
        img_grid[row:row + img_h, col:col + img_w] = img
    imsave(fname, img_grid)


def train():
    # load data (the MNIST handwritten digit dataset)
    mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

    x_data = tf.placeholder(tf.float32, [batch_size, img_size], name="x_data")
    z_prior = tf.placeholder(tf.float32, [batch_size, z_size], name="z_prior")
    keep_prob = tf.placeholder(tf.float32, name="keep_prob")
    global_step = tf.Variable(0, name="global_step", trainable=False)

    # build the generative model
    x_generated, g_params = build_generator(z_prior)
    # build the discriminative model
    y_data, y_generated, d_params = build_discriminator(x_data, x_generated, keep_prob)

    # loss functions
    d_loss = - (tf.log(y_data) + tf.log(1 - y_generated))
    g_loss = - tf.log(y_generated)

    optimizer = tf.train.AdamOptimizer(0.0001)

    # training ops for the two models; each one updates only its own parameters
    d_trainer = optimizer.minimize(d_loss, var_list=d_params)
    g_trainer = optimizer.minimize(g_loss, var_list=g_params)

    saver = tf.train.Saver()
    init = tf.global_variables_initializer()
    # start a session on the default graph
    sess = tf.Session()
    # initialize the variables
    sess.run(init)

    if to_restore:
        chkpt_fname = tf.train.latest_checkpoint(output_path)
        saver.restore(sess, chkpt_fname)
    else:
        if os.path.exists(output_path):
            shutil.rmtree(output_path)
        os.mkdir(output_path)

    # fixed noise, reused every epoch so the saved samples are comparable
    z_sample_val = np.random.normal(0, 1, size=(batch_size, z_size)).astype(np.float32)

    steps = 60000 // batch_size  # whole batches per epoch
    for i in range(sess.run(global_step), max_epoch):
        for j in range(steps):
            print("epoch:%s, iter:%s" % (i, j))
            # each iteration loads 256 training samples and runs one training step
            x_value, _ = mnist.train.next_batch(batch_size)
            # rescale pixels from [0, 1] to [-1, 1] to match the Generator's tanh output
            x_value = 2 * x_value.astype(np.float32) - 1
            z_value = np.random.normal(0, 1, size=(batch_size, z_size)).astype(np.float32)
            # update the Discriminator
            sess.run(d_trainer,
                     feed_dict={x_data: x_value, z_prior: z_value, keep_prob: 0.7})
            # update the Generator (j % 1 is always 0; raise the modulus to update it less often)
            if j % 1 == 0:
                sess.run(g_trainer,
                         feed_dict={x_data: x_value, z_prior: z_value, keep_prob: 0.7})

        # after each epoch, save samples from the fixed noise and from fresh noise
        x_gen_val = sess.run(x_generated, feed_dict={z_prior: z_sample_val})
        show_result(x_gen_val, "output/sample{0}.jpg".format(i))
        z_random_sample_val = np.random.normal(0, 1, size=(batch_size, z_size)).astype(np.float32)
        x_gen_val = sess.run(x_generated, feed_dict={z_prior: z_random_sample_val})
        show_result(x_gen_val, "output/random_sample{0}.jpg".format(i))
        sess.run(tf.assign(global_step, i + 1))
        saver.save(sess, os.path.join(output_path, "model"), global_step=global_step)


if __name__ == '__main__':
    train()

Original publication date: 2018-08-23

Author: 文文

This article comes from "Python愛好者社群" (Python Enthusiasts Community), a partner of the Yunqi Community.