A Comprehensive Analysis of the Linux Block Layer, v0.1

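1 Preface

Articles describing the block layer are scattered across many sites, and some classic books have not kept up with the latest code, for example block-layer multi-queue. So it is time for a dedicated article on the Linux block layer. This article is based on kernel 4.17.2.

Many topics covered here could each fill an article of their own, so the amount of information is fairly large. If you find you cannot read it straight through, read selectively or in stages, digesting one section at a time; after all, the article was not written in one sitting either. The reference links given at the end are excellent material. If you read English, skim them without going into every detail, because most of their content has already been folded into this article.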

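2 Overall logic

An operating system kernel is a complicated thing, and diving straight into code would probably put readers off. So we first lay out the overall architecture and start from the logic, going from abstract to concrete. Let's go.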

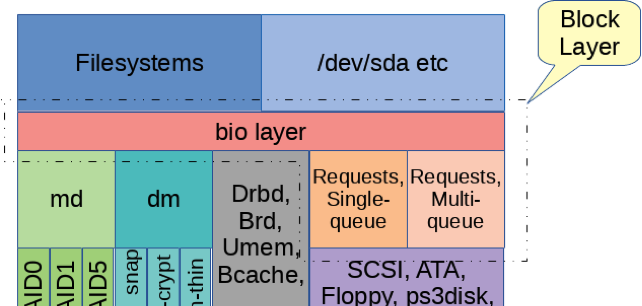

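The block layer is the interface through which file systems access storage devices; it is the bridge between the file system and the drivers.

In terms of code the block layer can be further divided into two layers, the bio layer and the request layer, as shown in the figure.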

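2.1 bio layer

The file system eventually builds its I/O request into a bio structure and calls generic_make_request to hand it to the bio layer. The function does not wait for the I/O to finish; once the request has been processed, the function specified in bio->bi_end_io is called asynchronously. The bio layer is essentially this generic_make_request function, so it is very thin.

Below the bio layer is the request layer, which, as the figure shows, comes in single-queue and multi-queue variants.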

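2.2 Request layer

The request layer comes in single-queue and multi-queue variants. Multi-queue is the result of kernel evolution, and in the future probably only multi-queue will remain. For a single-queue device generic_make_request calls blk_queue_bio; for a multi-queue device it calls blk_mq_make_request.

2.2.1 Single queue

The single queue was designed mainly with traditional mechanical hard disks in mind: the head arm can be in only one place at a time, so one queue is enough. Put another way, a single queue is exactly what a rotating disk needs. To make the most of a mechanical disk, the single queue has three key tasks:

- Collect multiple contiguous operations into one request so that the hardware is used efficiently. The code checks the queue to see whether an existing request can accept the new bio. If it can, and the scheduler agrees, the bio is merged; otherwise merging with other requests is considered later. Requests can thus become large and contiguous.

- Order requests to reduce seek time without delaying important requests. We cannot know, however, how important each request is or how much time will be lost to seeking. This is the job of the queue scheduler, for example deadline, cfq, or noop.

- Get requests to the underlying driver: requests must be pushed down when they are ready, and there must be a way to signal their completion. The driver registers a request_fn() function through blk_init_queue_node, and it is called whenever new requests appear on the queue. The driver calls blk_peek_request to collect requests and process them. When a request completes, the driver should go on fetching requests itself rather than wait for request_fn() to be called again. blk_finish_request() is called as each request completes.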
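The first task above, growing a request by attaching contiguous bios, can be sketched in user-space C. This is only a toy model under stated assumptions: struct toy_extent, toy_try_merge, toy_merge and the max_sectors parameter are hypothetical names invented for illustration and are not kernel APIs; the real checks in the kernel consider many more conditions.

```c
#include <assert.h>
#include <stdint.h>

/* Toy stand-in for a request or a bio: a run of sectors on disk. */
struct toy_extent {
    uint64_t sector;     /* first sector */
    uint32_t nr_sectors; /* length in sectors */
};

enum toy_merge { TOY_NO_MERGE, TOY_FRONT_MERGE, TOY_BACK_MERGE };

/* Decide whether bio can be glued onto rq without leaving a gap. */
static enum toy_merge toy_try_merge(const struct toy_extent *rq,
                                    const struct toy_extent *bio,
                                    uint32_t max_sectors)
{
    assert(rq->nr_sectors > 0 && bio->nr_sectors > 0);
    if ((uint64_t)rq->nr_sectors + bio->nr_sectors > max_sectors)
        return TOY_NO_MERGE;        /* merged request would be too large */
    if (rq->sector + rq->nr_sectors == bio->sector)
        return TOY_BACK_MERGE;      /* bio starts exactly where rq ends */
    if (bio->sector + bio->nr_sectors == rq->sector)
        return TOY_FRONT_MERGE;     /* bio ends exactly where rq starts */
    return TOY_NO_MERGE;
}

/* Apply the decision, growing rq in place. Returns 1 if rq changed. */
static int toy_merge(struct toy_extent *rq, const struct toy_extent *bio,
                     uint32_t max_sectors)
{
    switch (toy_try_merge(rq, bio, max_sectors)) {
    case TOY_BACK_MERGE:
        rq->nr_sectors += bio->nr_sectors;
        return 1;
    case TOY_FRONT_MERGE:
        rq->sector = bio->sector;
        rq->nr_sectors += bio->nr_sectors;
        return 1;
    default:
        return 0;
    }
}
```

A bio that is not adjacent to the request, or that would push it past the size limit, is left alone; in the kernel that is the point where a new request would be allocated.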

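Some devices can accept several requests at once, taking a new request before the previous one completes. Requests are tagged so that, when one completes, the matching request can be identified and processing can continue. As devices advanced and became able to do more scheduling internally, the demand for multi-queue grew.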

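2.2.2 Multi-queue

Another motivation for multi-queue is that systems have more and more cores, and funnelling all their requests into a single queue creates a direct performance bottleneck.

If a queue is allocated per NUMA node or per CPU, contention when placing requests on a queue drops sharply. But if the hardware accepts only one submission at a time, the multiple queues must eventually be merged back together.

The cfq scheduler also keeps multiple queues internally, but the two differ: cfq associates requests with priorities, whereas multi-queue binds queues to the hardware.

The multi-queue request layer has two kinds of queues, both sized according to the hardware: software staging queues (also called submission queues) and hardware dispatch queues.

A software staging queue is represented by struct blk_mq_ctx. These queues are allocated according to the number of CPUs, one per NUMA node or per CPU, and requests are added to them. They are managed by separate multi-queue schedulers, commonly bfq, kyber, and mq-deadline. Software queues belonging to different CPUs are not aggregated across CPUs.

Hardware dispatch queues are allocated according to the target hardware; there may be just one, or as many as 2048. They face the low-level driver. The request layer allocates a struct blk_mq_hw_ctx (hardware context) for each hardware queue, and requests are finally passed to the low-level driver together with the hardware context. This queue is responsible for controlling the rate of submission to the device driver so that the device is not overloaded. Ideally a request is dispatched to the hardware queue on the same CPU that ran its software queue, which improves CPU cache locality.

Another difference from single queue is that multi-queue request structures are preallocated. Each request structure carries a numeric tag that identifies the request to the device.

Instead of providing a request_fn() function, a multi-queue driver supplies an operations structure, blk_mq_ops, whose most important member is queue_rq(). Other members cover timeouts, polling, request initialization, and so on. When the scheduler decides that a request is ready and no longer needs to stay on the queue, it calls queue_rq() to push the request out of the request layer; in single queue, by contrast, the driver pulls requests from the queue itself. queue_rq() typically places the request on an internal FIFO and processes it directly. It may also refuse a request by returning BLK_STS_RESOURCE, which leaves the request on the staging queue. Apart from BLK_STS_RESOURCE and BLK_STS_OK, every other return value indicates an error.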
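The push model and the three kinds of return value described above can be illustrated with a small user-space C model. It is a sketch only: toy_queue_rq, toy_dispatch, struct toy_driver and the TOY_STS_* values are hypothetical stand-ins for queue_rq() and the BLK_STS_* codes, and the real dispatch loop in the kernel is considerably more involved.

```c
#include <assert.h>
#include <stddef.h>

enum toy_status { TOY_STS_OK, TOY_STS_RESOURCE, TOY_STS_IOERR };

struct toy_driver {
    int free_slots; /* how many more requests the "hardware" accepts */
    int accepted;   /* requests taken by the driver so far */
};

/* Toy queue_rq(): accept while slots remain, otherwise ask to be retried. */
static enum toy_status toy_queue_rq(struct toy_driver *drv, int rq_id)
{
    if (rq_id < 0)
        return TOY_STS_IOERR;       /* malformed request: an error */
    if (drv->free_slots == 0)
        return TOY_STS_RESOURCE;    /* busy: request must stay staged */
    drv->free_slots--;
    drv->accepted++;
    return TOY_STS_OK;
}

/*
 * Push up to n staged requests to the driver. Returns how many requests
 * remain staged; requests that failed with an error are counted in *errors.
 */
static size_t toy_dispatch(struct toy_driver *drv, const int *staged,
                           size_t n, size_t *errors)
{
    size_t i;

    *errors = 0;
    for (i = 0; i < n; i++) {
        enum toy_status st = toy_queue_rq(drv, staged[i]);

        if (st == TOY_STS_RESOURCE)
            break;                  /* leave this and later requests staged */
        if (st != TOY_STS_OK)
            (*errors)++;            /* any other status is an error */
    }
    return n - i;
}
```

The key point is that the layer above decides when to call the driver, and a "busy" answer is not a failure: the request simply waits on the staging side for a later run.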

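2.2.2.1 Multi-queue scheduling

A multi-queue driver does not need a scheduler configured; without one it behaves like the single-queue noop scheduler. Requests are passed to the driver when blk_mq_run_hw_queue() or blk_mq_delay_run_hw_queue() is called. The multi-queue scheduling functions are defined in the elevator_mq_ops function set; the main ones are insert_requests() and dispatch_request(). insert_requests() inserts requests into the staging queue, and dispatch_request() selects a request to pass to the given hardware queue. The kernel is flexible here: if insert_requests() is not provided, requests are simply appended at the end; if dispatch_request() is not provided, requests are taken from any staging queue and fed to the hardware queue, which hurts performance (unless the device has only one hardware queue, in which case it does not matter).

As mentioned above, three schedulers are commonly used with multi-queue: mq-deadline, bfq, and kyber.

mq-deadline also has an insert_request function. It ignores the staging queues and inserts requests directly into two global, time-ordered queues, one for reads and one for writes. Its dispatch_request function returns a request chosen by age, size, and starvation. Note that these function names differ from the ones in elevator_mq_ops: they lack the trailing s.

The bfq scheduler is an updated take on cfq; the name stands for Budget Fair Queueing. It is more like mq-deadline, however, in that it does not use the per-CPU staging queues. It keeps multiple queues internally, and all CPUs access them under a single spinlock.

The Kyber I/O scheduler uses the per-CPU or per-node staging queues. It does not provide an insert_requests function and relies on the default behaviour. Its dispatch_request function maintains internal queues per hardware context. This scheduler only began to be discussed in early 2017, so many details may still change; we skip over it for now.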

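2.2.2.2 Multi-queue topology

Finally, let us look at a diagram that makes the layout very clear:

In the diagram there are more software staging queues than hardware dispatch queues.

There are in fact three cases:

- More software staging queues than hardware dispatch queues: two or more software staging queues are assigned to one hardware queue, and dispatch pulls requests from all of the associated software queues.

- Fewer software staging queues than hardware dispatch queues: the software queues are mapped to hardware queues sequentially.

- As many software staging queues as hardware dispatch queues: the mapping is 1:1.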
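The three cases can be reproduced with a deliberately simplified mapping rule. The sketch below assumes one software queue per CPU and a plain modulo mapping; toy_map_queue and toy_feeders are hypothetical helper names, and the kernel's real mapping (stored in mq_map) may also take CPU topology into account.

```c
#include <assert.h>

/* Map a software (per-CPU) queue index to a hardware queue index. */
static unsigned int toy_map_queue(unsigned int cpu, unsigned int nr_hw_queues)
{
    assert(nr_hw_queues > 0);
    return cpu % nr_hw_queues;
}

/* Count how many of the nr_cpus software queues feed hardware queue hwq. */
static unsigned int toy_feeders(unsigned int nr_cpus,
                                unsigned int nr_hw_queues,
                                unsigned int hwq)
{
    unsigned int cpu, n = 0;

    for (cpu = 0; cpu < nr_cpus; cpu++)
        if (toy_map_queue(cpu, nr_hw_queues) == hwq)
            n++;
    return n;
}
```

With 8 software queues and 2 hardware queues, each hardware queue is fed by 4 software queues; with 4 and 4 the mapping is 1:1; with 2 software queues and 4 hardware queues the software queues land on hardware queues 0 and 1 in order, and the remaining hardware queues stay unused in this toy model.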

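2.2.3 When will multi-queue replace single queue

This may still take some time, because nothing new is perfect. Red Hat found performance problems with mq-deadline in its internal storage tests, and some other companies also saw performance regressions in testing. Still, it is only a matter of time, and not a long one.

Unfortunately, many books describe only the single-queue case, including the schedulers they cover. Fortunately, this article covers multi-queue; feel free to share it with your colleagues.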

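2.3 bio based driver

In earlier days, the way to get around the single-queue bottleneck was to bypass the request layer. A driver that goes through the request layer is called a request-based driver; one that skips it is called a bio based driver. How is the request layer skipped?

A device driver can register a make_request_fn function by calling blk_queue_make_request, and make_request_fn can then handle bios directly. generic_make_request calls the make_request_fn of the device the bio is addressed to, thereby skipping the request layer below the bio layer. Some devices, such as SSDs, do not need the request layer's merging and sorting.

In fact, this mechanism was not designed for high-performance SSDs. It was designed for MD RAID, which processes requests and passes them down to the real underlying hardware devices.

bio based drivers were a way around the single-queue bottleneck in the kernel, but they brought a problem of their own: every driver has to handle, and reinvent, everything itself, so the code is not reusable. In response, the multi-queue mechanism was introduced into the kernel, and bio based drivers will gradually disappear.

blk-mq: new multi-queue block IO queueing mechanism

Overall, the bio layer is thin: it only builds I/O requests into bio structures and passes them to the appropriate make_request_fn() function. The request layer is considerably thicker, since it contains the schedulers, request merging, and more.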

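3 Request dispatch logic

3.1 Multi-queue

3.1.1 Request submission

The make_request function used by multi-queue is blk_mq_make_request. Plugging applies when the device has a single hardware queue or the request is asynchronous; with multiple hardware queues there is no plugging, or it is greatly reduced. If the request is synchronous, it is not plugged.

The make_request function performs request merging. If the device allows plugging, it also searches the plug list for a suitable merge candidate. Finally it maps the request to the software queue of the current CPU. The submission path does not involve I/O scheduler callbacks.

make_request sends synchronous requests to the corresponding hardware queue immediately, whereas asynchronous or flush requests may be delayed so that they can be merged and dispatched more efficiently.

make_request therefore handles synchronous and asynchronous requests somewhat differently.

3.1.2 Request dispatch

If the I/O request is synchronous (which the multi-queue layer does not plug), dispatch is carried out in the context of the request itself.

If it is asynchronous or a flush, dispatch may be carried out in the context of another request associated with the same hardware queue, or by delayed work that is scheduled later.

In multi-queue, the function blk_mq_run_hw_queue implements this: synchronous requests are dispatched to the driver immediately, while asynchronous requests are deferred. For a synchronous request it calls the internal function __blk_mq_run_hw_queue, which first gathers the software queues associated with the current hardware queue and then the entries already on the dispatch list. Once the entries are collected, each request is dispatched to the driver and finally handled by queue_rq.

The code for the whole flow is in blk_mq_make_request.

The multi-queue logic is shown in the figure below:

A high-resolution version of the blk_mq diagram is at:

https://github.com/kernel-z/filesystem/blob/master/blk_mq.png

3.2 Single queue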

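In the single-queue case generic_make_request calls blk_queue_bio to handle the bio structure. It is one of the most important functions in the block layer and deserves close attention; it is also very dense, and anyone reading it for the first time is bound to get lost.

blk_queue_bio performs the elevator scheduling work: it tries a front merge or a back merge, and if neither is possible it creates a new request. Along the way blk_account_io_start is called to record the start of I/O processing; much I/O monitoring and accounting starts from this function.

The logic is much simpler than the code. First check whether the bio can be merged into the process's plug list; if not, check whether it can be merged into the block layer's request queue. If no merge is possible, create a new request for the bio. From here there are two cases, depending on whether plugging is in effect. If it is, check whether the existing plug list needs to be flushed; if not, the request is simply attached to the plug list and the function returns. If plugging is not in effect, the request is added to the request queue and __blk_run_queue is called, which calls q->request_fn (set by the device driver; for scsi it is scsi_request_fn) and leaves the block layer. That is the overall logic of blk_queue_bio, shown in the figure below:

A high-resolution version is at:

https://github.com/kernel-z/filesystem/blob/master/blk_single.png

Besides being flushed from the blk_queue_bio call chain (through blk_flush_plug_list), the requests held in a plug can also be flushed by the process scheduler:

schedule -> sched_submit_work -> blk_schedule_flush_plug() -> blk_flush_plug_list(plug, true) -> queue_unplugged -> blk_run_queue_async

This wakes the kblockd workqueue to perform the unplug.

Requests on the plug list must first be flushed to the request queue, and in the end they are all sent down by __blk_run_queue, which calls the ->request_fn function. That function differs from driver to driver (for the scsi driver it is scsi_request_fn).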
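The plug behaviour described here, collecting requests per process and flushing them either when the list grows too long or when the process is about to sleep, can be modelled in a few lines of user-space C. This is a sketch under assumptions: struct toy_plug, toy_plug_add, toy_flush_plug and the threshold TOY_PLUG_MAX are hypothetical, and the value 4 is chosen only to keep the example short.

```c
#include <assert.h>

#define TOY_PLUG_MAX 4  /* hypothetical flush threshold, for illustration */

struct toy_plug {
    int pending[TOY_PLUG_MAX]; /* request ids held back in the plug */
    int count;                 /* how many are currently held */
    int flushed;               /* total requests pushed to the queue so far */
    int flush_calls;           /* how many flushes actually moved requests */
};

/* Push everything held in the plug down to the request queue. */
static void toy_flush_plug(struct toy_plug *plug)
{
    if (plug->count == 0)
        return;                /* nothing to do */
    plug->flushed += plug->count;
    plug->count = 0;
    plug->flush_calls++;
}

/* Hold a new request in the plug, flushing first if the plug is full. */
static void toy_plug_add(struct toy_plug *plug, int rq_id)
{
    if (plug->count == TOY_PLUG_MAX)
        toy_flush_plug(plug);
    plug->pending[plug->count++] = rq_id;
}
```

In this model the explicit toy_flush_plug call plays the role of the scheduler-triggered flush: whatever is still plugged when the process would block is pushed down so that it cannot be stranded.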

3.2.1 Function summary

Insertion: __elv_add_request

Splitting: blk_queue_split

Merging: bio_attempt_front_merge / bio_attempt_back_merge, blk_attempt_plug_merge

Issuing I/O: __blk_run_queue

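4 Block-layer initialization analysis (scsi)

During initialization a driver has to determine, from the hardware device, whether it can use multi-queue. That in turn fixes, at initialization time, the function through which requests enter the block layer (blk_queue_bio or blk_mq_make_request) and the function through which they finally leave it (scsi_request_fn or scsi_queue_rq).

4.1 Using scsi as an example

4.1.1 scsi_alloc_sdev

While probing a scsi device the driver calls scsi_alloc_sdev. It allocates and initializes the device for I/O and returns a pointer to a scsi_device structure. The scsi_device stores the host, channel, id, and lun, and is added to the appropriate lists.

The function makes the following decision:

	if (shost_use_blk_mq(shost))
		sdev->request_queue = scsi_mq_alloc_queue(sdev);
	else
		sdev->request_queue = scsi_old_alloc_queue(sdev);

If the device can use multi-queue, scsi_mq_alloc_queue is called; otherwise single queue is used and scsi_old_alloc_queue is called. The parameter sdev is the scsi_device.

scsi_mq_alloc_queue calls blk_mq_init_queue, which ends up registering blk_mq_make_request.

The initialization logic is shown below; the diagram was too wide, so it has been turned on its side:

A high-resolution version is at:

https://github.com/kernel-z/filesystem/blob/master/scsi-init.png

What follows explains some of the structures and functions in the actual code; read together with the description above, it should give a better understanding of the block layer.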

5 Structures: key structures

5.1 request

The request structure describes a request to operate on a block device. It is placed on a request_queue and processed at a suitable time.

The structure is defined in include/linux/blkdev.h:

struct request {
	struct request_queue *q;	/* queue this request belongs to */
	struct blk_mq_ctx *mq_ctx;

	int cpu;
	unsigned int cmd_flags;		/* op and common flags */
	req_flags_t rq_flags;

	int internal_tag;

	/* the following two fields are internal, NEVER access directly */
	unsigned int __data_len;	/* total data len */
	int tag;
	sector_t __sector;		/* sector cursor */

	struct bio *bio;
	struct bio *biotail;

	struct list_head queuelist;	/* links the request into a queue list */

	/*
	 * The hash is used inside the scheduler, and killed once the
	 * request reaches the dispatch list. The ipi_list is only used
	 * to queue the request for softirq completion, which is long
	 * after the request has been unhashed (and even removed from
	 * the dispatch list).
	 */
	union {
		struct hlist_node hash;	/* merge hash */
		struct list_head ipi_list;
	};

	/*
	 * The rb_node is only used inside the io scheduler, requests
	 * are pruned when moved to the dispatch queue. So let the
	 * completion_data share space with the rb_node.
	 */
	union {
		struct rb_node rb_node;	/* sort/lookup */
		struct bio_vec special_vec;
		void *completion_data;
		int error_count;	/* for legacy drivers, don't use */
	};

	/*
	 * Three pointers are available for the IO schedulers, if they need
	 * more they have to dynamically allocate it. Flush requests are
	 * never put on the IO scheduler. So let the flush fields share
	 * space with the elevator data.
	 */
	union {
		struct {
			struct io_cq *icq;
			void *priv[2];
		} elv;

		struct {
			unsigned int seq;
			struct list_head list;
			rq_end_io_fn *saved_end_io;
		} flush;
	};

	struct gendisk *rq_disk;
	struct hd_struct *part;
	unsigned long start_time;
	struct blk_issue_stat issue_stat;
	/* Number of scatter-gather DMA addr+len pairs after
	 * physical address coalescing is performed.
	 */
	unsigned short nr_phys_segments;

#if defined(CONFIG_BLK_DEV_INTEGRITY)
	unsigned short nr_integrity_segments;
#endif

	unsigned short write_hint;
	unsigned short ioprio;

	unsigned int timeout;

	void *special;		/* opaque pointer available for LLD use */

	unsigned int extra_len;	/* length of alignment and padding */

	/*
	 * On blk-mq, the lower bits of ->gstate (generation number and
	 * state) carry the MQ_RQ_* state value and the upper bits the
	 * generation number which is monotonically incremented and used to
	 * distinguish the reuse instances.
	 *
	 * ->gstate_seq allows updates to ->gstate and other fields
	 * (currently ->deadline) during request start to be read
	 * atomically from the timeout path, so that it can operate on a
	 * coherent set of information.
	 */
	seqcount_t gstate_seq;
	u64 gstate;

	/*
	 * ->aborted_gstate is used by the timeout to claim a specific
	 * recycle instance of this request. See blk_mq_timeout_work().
	 */
	struct u64_stats_sync aborted_gstate_sync;
	u64 aborted_gstate;

	/* access through blk_rq_set_deadline, blk_rq_deadline */
	unsigned long __deadline;

	struct list_head timeout_list;

	union {
		struct __call_single_data csd;
		u64 fifo_time;
	};

	/*
	 * completion callback.
	 */
	rq_end_io_fn *end_io;
	void *end_io_data;

	/* for bidi */
	struct request *next_rq;

#ifdef CONFIG_BLK_CGROUP
	struct request_list *rl;		/* rl this rq is alloced from */
	unsigned long long start_time_ns;
	unsigned long long io_start_time_ns;	/* when passed to hardware */
#endif
};

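It represents an I/O request at the block device driver layer: I/O requests, once transformed by the I/O scheduling layer, are sent to the block device driver layer for processing.

5.2 request_queue

Every block device has a queue; when the device needs to be operated on, the request is put on that queue. Because block-device I/O is slow and cannot complete immediately, all requests are placed on the queue and processed at a suitable time.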

struct request_queue {
	/*
	 * Together with queue_head for cacheline sharing
	 */
	struct list_head queue_head;		/* list of pending requests */
	struct request *last_merge;		/* request to try first when merging */
	struct elevator_queue *elevator;	/* pointer to the elevator object */
	int nr_rqs[2];			/* # allocated [a]sync rqs */
	int nr_rqs_elvpriv;		/* # allocated rqs w/ elvpriv */

	atomic_t shared_hctx_restart;

	struct blk_queue_stats *stats;
	struct rq_wb *rq_wb;

	/*
	 * If blkcg is not used, @q->root_rl serves all requests. If blkcg
	 * is used, root blkg allocates from @q->root_rl and all other
	 * blkgs from their own blkg->rl. Which one to use should be
	 * determined using bio_request_list().
	 */
	struct request_list root_rl;

	request_fn_proc *request_fn;	/* entry point of the driver's strategy routine */
	make_request_fn *make_request_fn;
	poll_q_fn *poll_fn;
	prep_rq_fn *prep_rq_fn;
	unprep_rq_fn *unprep_rq_fn;
	softirq_done_fn *softirq_done_fn;
	rq_timed_out_fn *rq_timed_out_fn;
	dma_drain_needed_fn *dma_drain_needed;
	lld_busy_fn *lld_busy_fn;
	/* Called just after a request is allocated */
	init_rq_fn *init_rq_fn;
	/* Called just before a request is freed */
	exit_rq_fn *exit_rq_fn;
	/* Called from inside blk_get_request() */
	void (*initialize_rq_fn)(struct request *rq);

	const struct blk_mq_ops *mq_ops;

	unsigned int *mq_map;

	/* sw queues */
	struct blk_mq_ctx __percpu *queue_ctx;
	unsigned int nr_queues;

	unsigned int queue_depth;

	/* hw dispatch queues */
	struct blk_mq_hw_ctx **queue_hw_ctx;
	unsigned int nr_hw_queues;

	/*
	 * Dispatch queue sorting
	 */
	sector_t end_sector;
	struct request *boundary_rq;

	/*
	 * Delayed queue handling
	 */
	struct delayed_work delay_work;

	struct backing_dev_info *backing_dev_info;

	/*
	 * The queue owner gets to use this for whatever they like.
	 * ll_rw_blk doesn't touch it.
	 */
	void *queuedata;

	/*
	 * various queue flags, see QUEUE_* below
	 */
	unsigned long queue_flags;

	/*
	 * ida allocated id for this queue. Used to index queues from
	 * ioctx.
	 */
	int id;

	/*
	 * queue needs bounce pages for pages above this limit
	 */
	gfp_t bounce_gfp;

	/*
	 * protects queue structures from reentrancy. ->__queue_lock should
	 * _never_ be used directly, it is queue private. always use
	 * ->queue_lock.
	 */
	spinlock_t __queue_lock;
	spinlock_t *queue_lock;

	/*
	 * queue kobject
	 */
	struct kobject kobj;

	/*
	 * mq queue kobject
	 */
	struct kobject mq_kobj;

#ifdef CONFIG_BLK_DEV_INTEGRITY
	struct blk_integrity integrity;
#endif	/* CONFIG_BLK_DEV_INTEGRITY */

#ifdef CONFIG_PM
	struct device *dev;
	int rpm_status;
	unsigned int nr_pending;
#endif

	/*
	 * queue settings
	 */
	unsigned long nr_requests;	/* Max # of requests */
	unsigned int nr_congestion_on;
	unsigned int nr_congestion_off;
	unsigned int nr_batching;

	unsigned int dma_drain_size;
	void *dma_drain_buffer;
	unsigned int dma_pad_mask;
	unsigned int dma_alignment;

	struct blk_queue_tag *queue_tags;
	struct list_head tag_busy_list;

	unsigned int nr_sorted;
	unsigned int in_flight[2];

	/*
	 * Number of active block driver functions for which blk_drain_queue()
	 * must wait. Must be incremented around functions that unlock the
	 * queue_lock internally, e.g. scsi_request_fn().
	 */
	unsigned int request_fn_active;

	unsigned int rq_timeout;
	int poll_nsec;

	struct blk_stat_callback *poll_cb;
	struct blk_rq_stat poll_stat[BLK_MQ_POLL_STATS_BKTS];

	struct timer_list timeout;
	struct work_struct timeout_work;
	struct list_head timeout_list;

	struct list_head icq_list;
#ifdef CONFIG_BLK_CGROUP
	DECLARE_BITMAP (blkcg_pols, BLKCG_MAX_POLS);
	struct blkcg_gq *root_blkg;
	struct list_head blkg_list;
#endif

	struct queue_limits limits;

	/*
	 * Zoned block device information for request dispatch control.
	 * nr_zones is the total number of zones of the device. This is always
	 * 0 for regular block devices. seq_zones_bitmap is a bitmap of nr_zones
	 * bits which indicates if a zone is conventional (bit clear) or
	 * sequential (bit set). seq_zones_wlock is a bitmap of nr_zones
	 * bits which indicates if a zone is write locked, that is, if a write
	 * request targeting the zone was dispatched. All three fields are
	 * initialized by the low level device driver (e.g. scsi/sd.c).
	 * Stacking drivers (device mappers) may or may not initialize
	 * these fields.
	 */
	unsigned int nr_zones;
	unsigned long *seq_zones_bitmap;
	unsigned long *seq_zones_wlock;

	/*
	 * sg stuff
	 */
	unsigned int sg_timeout;
	unsigned int sg_reserved_size;
	int node;
#ifdef CONFIG_BLK_DEV_IO_TRACE
	struct blk_trace *blk_trace;
	struct mutex blk_trace_mutex;
#endif
	/*
	 * for flush operations
	 */
	struct blk_flush_queue *fq;

	struct list_head requeue_list;
	spinlock_t requeue_lock;
	struct delayed_work requeue_work;

	struct mutex sysfs_lock;

	int bypass_depth;
	atomic_t mq_freeze_depth;

#if defined(CONFIG_BLK_DEV_BSG)
	bsg_job_fn *bsg_job_fn;
	struct bsg_class_device bsg_dev;
#endif

#ifdef CONFIG_BLK_DEV_THROTTLING
	/* Throttle data */
	struct throtl_data *td;
#endif
	struct rcu_head rcu_head;
	wait_queue_head_t mq_freeze_wq;
	struct percpu_ref q_usage_counter;
	struct list_head all_q_node;

	struct blk_mq_tag_set *tag_set;
	struct list_head tag_set_list;
	struct bio_set *bio_split;

#ifdef CONFIG_BLK_DEBUG_FS
	struct dentry *debugfs_dir;
	struct dentry *sched_debugfs_dir;
#endif

	bool mq_sysfs_init_done;

	size_t cmd_size;
	void *rq_alloc_data;

	struct work_struct release_work;

#define BLK_MAX_WRITE_HINTS 5
	u64 write_hints[BLK_MAX_WRITE_HINTS];
};

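This structure is enormous, almost approaching sk_buff in size.

It maintains the queue of I/O requests for the block device driver layer; every request is inserted into it. Each disk device has exactly one queue, even when it has several partitions.

A request_queue holds many requests, and each request may hold several bios. Request merging means adding multiple bios to the same request according to various rules.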

5.3 elevator_queue

The elevator scheduling queue; every request queue has one.

struct elevator_queue
{
	struct elevator_type *type;
	void *elevator_data;
	struct kobject kobj;
	struct mutex sysfs_lock;
	unsigned int registered:1;
	unsigned int uses_mq:1;
	DECLARE_HASHTABLE(hash, ELV_HASH_BITS);
};

5.4 elevator_type

The elevator type is really the type of scheduling algorithm.

struct elevator_type
{
	/* managed by elevator core */
	struct kmem_cache *icq_cache;

	/* fields provided by elevator implementation */
	union {
		struct elevator_ops sq;
		struct elevator_mq_ops mq;
	} ops;
	size_t icq_size;	/* see iocontext.h */
	size_t icq_align;	/* ditto */
	struct elv_fs_entry *elevator_attrs;
	char elevator_name[ELV_NAME_MAX];
	const char *elevator_alias;
	struct module *elevator_owner;
	bool uses_mq;
#ifdef CONFIG_BLK_DEBUG_FS
	const struct blk_mq_debugfs_attr *queue_debugfs_attrs;
	const struct blk_mq_debugfs_attr *hctx_debugfs_attrs;
#endif

	/* managed by elevator core */
	char icq_cache_name[ELV_NAME_MAX + 6];	/* elvname + "_io_cq" */
	struct list_head list;
};

5.4.1 iosched_cfq

For example, the cfq scheduler structure specifies all the functions that belong to that scheduler.

static struct elevator_type iosched_cfq = {
	.ops.sq = {
		.elevator_merge_fn		= cfq_merge,
		.elevator_merged_fn		= cfq_merged_request,
		.elevator_merge_req_fn		= cfq_merged_requests,
		.elevator_allow_bio_merge_fn	= cfq_allow_bio_merge,
		.elevator_allow_rq_merge_fn	= cfq_allow_rq_merge,
		.elevator_bio_merged_fn		= cfq_bio_merged,
		.elevator_dispatch_fn		= cfq_dispatch_requests,
		.elevator_add_req_fn		= cfq_insert_request,
		.elevator_activate_req_fn	= cfq_activate_request,
		.elevator_deactivate_req_fn	= cfq_deactivate_request,
		.elevator_completed_req_fn	= cfq_completed_request,
		.elevator_former_req_fn		= elv_rb_former_request,
		.elevator_latter_req_fn		= elv_rb_latter_request,
		.elevator_init_icq_fn		= cfq_init_icq,
		.elevator_exit_icq_fn		= cfq_exit_icq,
		.elevator_set_req_fn		= cfq_set_request,
		.elevator_put_req_fn		= cfq_put_request,
		.elevator_may_queue_fn		= cfq_may_queue,
		.elevator_init_fn		= cfq_init_queue,
		.elevator_exit_fn		= cfq_exit_queue,
		.elevator_registered_fn		= cfq_registered_queue,
	},
	.icq_size	= sizeof(struct cfq_io_cq),
	.icq_align	= __alignof__(struct cfq_io_cq),
	.elevator_attrs	= cfq_attrs,
	.elevator_name	= "cfq",
	.elevator_owner	= THIS_MODULE,
};

5.5 gendisk

Now look at the disk data structure gendisk (defined in <include/linux/genhd.h>), the kernel's representation of an individual disk drive. It is the most important data structure in the block I/O subsystem.

struct gendisk {
	/* major, first_minor and minors are input parameters only,
	 * don't use directly. Use disk_devt() and disk_max_parts().
	 */
	int major;			/* major number of driver */
	int first_minor;
	int minors;			/* maximum number of minors, =1 for
					 * disks that can't be partitioned. */

	char disk_name[DISK_NAME_LEN];	/* name of major driver */
	char *(*devnode)(struct gendisk *gd, umode_t *mode);

	unsigned int events;		/* supported events */
	unsigned int async_events;	/* async events, subset of all */

	/* Array of pointers to partitions indexed by partno.
	 * Protected with matching bdev lock but stat and other
	 * non-critical accesses use RCU. Always access through
	 * helpers.
	 */
	struct disk_part_tbl __rcu *part_tbl;
	struct hd_struct part0;

	const struct block_device_operations *fops;
	struct request_queue *queue;
	void *private_data;

	int flags;
	struct rw_semaphore lookup_sem;
	struct kobject *slave_dir;

	struct timer_rand_state *random;
	atomic_t sync_io;		/* RAID */
	struct disk_events *ev;
	struct kobject integrity_kobj;
	int node_id;
	struct badblocks *bb;
	struct lockdep_map lockdep_map;
};

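The structure holds the major number, the minor numbers (which identify the partitions), the disk name (as it appears in /proc/partitions and sysfs), the device's operation set (block_device_operations), the device's I/O request queue, drive state, drive capacity, the driver's private data pointer private_data, and so on.

Among the gendisk-related functions, alloc_disk allocates a disk and del_gendisk drops a reference to the structure.

Allocating a gendisk structure does not make the disk available to the system. You must also initialize the structure and call add_disk. Once add_disk is called the disk is "live" and its methods can be called at any time; the kernel may start touching the device from that moment. In fact the first calls will probably happen before add_disk returns: the kernel reads the first few bytes looking for a partition table. So do not call add_disk until the driver is fully initialized and ready to respond to requests on that disk.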

5.6 hd_struct

The disk partition structure.

struct hd_struct {
	sector_t start_sect;
	/*
	 * nr_sects is protected by sequence counter. One might extend a
	 * partition while IO is happening to it and update of nr_sects
	 * can be non-atomic on 32bit machines with 64bit sector_t.
	 */
	sector_t nr_sects;
	seqcount_t nr_sects_seq;
	sector_t alignment_offset;
	unsigned int discard_alignment;
	struct device __dev;
	struct kobject *holder_dir;
	int policy, partno;
	struct partition_meta_info *info;
#ifdef CONFIG_FAIL_MAKE_REQUEST
	int make_it_fail;
#endif
	unsigned long stamp;
	atomic_t in_flight[2];
#ifdef CONFIG_SMP
	struct disk_stats __percpu *dkstats;
#else
	struct disk_stats dkstats;
#endif
	struct percpu_ref ref;
	struct rcu_head rcu_head;
};

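5.7 bio

Kernels up to 2.4 used buffer heads. With that approach each I/O request was broken into 512-byte blocks, so a high-performance I/O subsystem could not be built. A major piece of work in 2.5 was supporting high-performance I/O, which gave us today's bio structure.

The bio structure carries the actual data of a request: a request contains one or more bios, and at the lowest level the device is operated on bio by bio as they are passed to the driver.

The code either merges a bio into an existing request or, if necessary, creates a new request for it. The bio structure contains all the information the driver needs to carry out the request.

A bio contains multiple pages, which correspond to one contiguous range on disk. Because a file is not necessarily stored contiguously on disk, file I/O is very likely to be split into several bios before it is submitted to the block device.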
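The relationship between a bio, its page segments, and the contiguous range it covers on disk can be made concrete with a small user-space model. It is a sketch under assumptions: struct toy_bio, struct toy_bio_vec and the helper functions are hypothetical simplifications (the page pointer is omitted), and a 4096-byte page and 512-byte sector are assumed.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_PAGE_SIZE   4096u
#define TOY_SECTOR_SIZE 512u

/* Simplified segment: a length and an offset inside one page. */
struct toy_bio_vec {
    unsigned int bv_len;    /* length in bytes */
    unsigned int bv_offset; /* offset within the page, in bytes */
};

/* Simplified bio: a start sector plus an array of segments. */
struct toy_bio {
    unsigned long long sector;      /* first sector on disk */
    const struct toy_bio_vec *vecs; /* memory segments, in order */
    size_t vcnt;                    /* number of segments */
};

/*
 * Total payload in bytes. Returns 0 for an empty bio or if any segment
 * would run past the end of its page.
 */
static unsigned int toy_bio_bytes(const struct toy_bio *bio)
{
    unsigned int total = 0;
    size_t i;

    for (i = 0; i < bio->vcnt; i++) {
        const struct toy_bio_vec *bv = &bio->vecs[i];

        if (bv->bv_offset + bv->bv_len > TOY_PAGE_SIZE)
            return 0;
        total += bv->bv_len;
    }
    return total;
}

/* First sector after the bio: the memory may be scattered, the disk range is not. */
static unsigned long long toy_bio_end_sector(const struct toy_bio *bio)
{
    return bio->sector + toy_bio_bytes(bio) / TOY_SECTOR_SIZE;
}
```

Three segments of 4096, 2048 and 1024 bytes starting at sector 1000 cover 14 sectors, so the next contiguous bio would have to start at sector 1014.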

The structure is defined in include/linux/blk_types.h. Unfortunately it has changed considerably over time and, in particular, no longer matches the description in the LDD book.

/*
 * main unit of I/O for the block layer and lower layers (ie drivers and
 * stacking drivers)
 */
struct bio {
	struct bio *bi_next;		/* request queue link */
	struct gendisk *bi_disk;
	unsigned int bi_opf;		/* bottom bits req flags,
					 * top bits REQ_OP. Use
					 * accessors.
					 */
	unsigned short bi_flags;	/* status, etc and bvec pool number */
	unsigned short bi_ioprio;
	unsigned short bi_write_hint;
	blk_status_t bi_status;
	u8 bi_partno;

	/* Number of segments in this BIO after
	 * physical address coalescing is performed.
	 */
	unsigned int bi_phys_segments;

	/*
	 * To keep track of the max segment size, we account for the
	 * sizes of the first and last mergeable segments in this bio.
	 */
	unsigned int bi_seg_front_size;
	unsigned int bi_seg_back_size;

	struct bvec_iter bi_iter;

	atomic_t __bi_remaining;
	bio_end_io_t *bi_end_io;

	void *bi_private;
#ifdef CONFIG_BLK_CGROUP
	/*
	 * Optional ioc and css associated with this bio. Put on bio
	 * release. Read comment on top of bio_associate_current().
	 */
	struct io_context *bi_ioc;
	struct cgroup_subsys_state *bi_css;
#ifdef CONFIG_BLK_DEV_THROTTLING_LOW
	void *bi_cg_private;
	struct blk_issue_stat bi_issue_stat;
#endif
#endif
	union {
#if defined(CONFIG_BLK_DEV_INTEGRITY)
		struct bio_integrity_payload *bi_integrity; /* data integrity */
#endif
	};

	unsigned short bi_vcnt;		/* how many bio_vec's */

	/*
	 * Everything starting with bi_max_vecs will be preserved by bio_reset()
	 */
	unsigned short bi_max_vecs;	/* max bvl_vecs we can hold */

	atomic_t __bi_cnt;		/* pin count */

	struct bio_vec *bi_io_vec;	/* the actual vec list */

	struct bio_set *bi_pool;

	/*
	 * We can inline a number of vecs at the end of the bio, to avoid
	 * double allocations for a small number of bio_vecs. This member
	 * MUST obviously be kept at the very end of the bio.
	 */
	struct bio_vec bi_inline_vecs[0];
};

5.8 bio_vec

The bio_vec structure is defined in include/linux/bvec.h:

struct bio_vec {
	struct page *bv_page;		/* physical page that holds the buffer */
	unsigned int bv_len;		/* length in bytes */
	unsigned int bv_offset;		/* offset within the page, in bytes */
};

5.9

Elevator scheduling types, for example the AS or deadline scheduler types.

5.10 Multi-queue structures

5.10.1 blk_mq_ctx

Represents the software staging queues.

struct blk_mq_ctx {
	struct {
		spinlock_t lock;
		struct list_head rq_list;
	} ____cacheline_aligned_in_smp;

	unsigned int cpu;
	unsigned int index_hw;

	/* incremented at dispatch time */
	unsigned long rq_dispatched[2];
	unsigned long rq_merged;

	/* incremented at completion time */
	unsigned long ____cacheline_aligned_in_smp rq_completed[2];

	struct request_queue *queue;
	struct kobject kobj;
} ____cacheline_aligned_in_smp;

5.10.2 blk_mq_hw_ctx

The hardware queue of multi-queue. Its mapping to blk_mq_ctx is established through map_queues in blk_mq_ops, and the mapping is also stored in mq_map in the request_queue.

/**
 * struct blk_mq_hw_ctx - State for a hardware queue facing the hardware block device
 */
struct blk_mq_hw_ctx {
	struct {
		spinlock_t lock;
		struct list_head dispatch;
		unsigned long state;		/* BLK_MQ_S_* flags */
	} ____cacheline_aligned_in_smp;

	struct delayed_work run_work;
	cpumask_var_t cpumask;
	int next_cpu;
	int next_cpu_batch;

	unsigned long flags;		/* BLK_MQ_F_* flags */

	void *sched_data;
	struct request_queue *queue;
	struct blk_flush_queue *fq;

	void *driver_data;

	struct sbitmap ctx_map;

	struct blk_mq_ctx *dispatch_from;

	struct blk_mq_ctx **ctxs;
	unsigned int nr_ctx;

	wait_queue_entry_t dispatch_wait;
	atomic_t wait_index;

	struct blk_mq_tags *tags;
	struct blk_mq_tags *sched_tags;

	unsigned long queued;
	unsigned long run;
#define BLK_MQ_MAX_DISPATCH_ORDER 7
	unsigned long dispatched[BLK_MQ_MAX_DISPATCH_ORDER];

	unsigned int numa_node;
	unsigned int queue_num;

	atomic_t nr_active;
	unsigned int nr_expired;

	struct hlist_node cpuhp_dead;
	struct kobject kobj;

	unsigned long poll_considered;
	unsigned long poll_invoked;
	unsigned long poll_success;

#ifdef CONFIG_BLK_DEBUG_FS
	struct dentry *debugfs_dir;
	struct dentry *sched_debugfs_dir;
#endif

	/* Must be the last member - see also blk_mq_hw_ctx_size(). */
	struct srcu_struct srcu[0];
};

struct blk_mq_tag_set {
	unsigned int *mq_map;
	const struct blk_mq_ops *ops;
	unsigned int nr_hw_queues;
	unsigned int queue_depth;	/* max hw supported */
	unsigned int reserved_tags;
	unsigned int cmd_size;		/* per-request extra data */
	int numa_node;
	unsigned int timeout;
	unsigned int flags;		/* BLK_MQ_F_* */
	void *driver_data;

	struct blk_mq_tags **tags;

	struct mutex tag_list_lock;
	struct list_head tag_list;
};

5.11 Operation structures

5.11.1 elevator_ops

The set of scheduler operations.

struct elevator_ops
{
	elevator_merge_fn *elevator_merge_fn;
	elevator_merged_fn *elevator_merged_fn;
	elevator_merge_req_fn *elevator_merge_req_fn;
	elevator_allow_bio_merge_fn *elevator_allow_bio_merge_fn;
	elevator_allow_rq_merge_fn *elevator_allow_rq_merge_fn;
	elevator_bio_merged_fn *elevator_bio_merged_fn;

	elevator_dispatch_fn *elevator_dispatch_fn;
	elevator_add_req_fn *elevator_add_req_fn;
	elevator_activate_req_fn *elevator_activate_req_fn;
	elevator_deactivate_req_fn *elevator_deactivate_req_fn;

	elevator_completed_req_fn *elevator_completed_req_fn;

	elevator_request_list_fn *elevator_former_req_fn;
	elevator_request_list_fn *elevator_latter_req_fn;

	elevator_init_icq_fn *elevator_init_icq_fn;	/* see iocontext.h */
	elevator_exit_icq_fn *elevator_exit_icq_fn;	/* ditto */

	elevator_set_req_fn *elevator_set_req_fn;
	elevator_put_req_fn *elevator_put_req_fn;

	elevator_may_queue_fn *elevator_may_queue_fn;

	elevator_init_fn *elevator_init_fn;
	elevator_exit_fn *elevator_exit_fn;
	elevator_registered_fn *elevator_registered_fn;
};

5.11.2 elevator_mq_ops

The set of multi-queue scheduler operations.

struct elevator_mq_ops {
	int (*init_sched)(struct request_queue *, struct elevator_type *);
	void (*exit_sched)(struct elevator_queue *);
	int (*init_hctx)(struct blk_mq_hw_ctx *, unsigned int);
	void (*exit_hctx)(struct blk_mq_hw_ctx *, unsigned int);

	bool (*allow_merge)(struct request_queue *, struct request *, struct bio *);
	bool (*bio_merge)(struct blk_mq_hw_ctx *, struct bio *);
	int (*request_merge)(struct request_queue *q, struct request **, struct bio *);
	void (*request_merged)(struct request_queue *, struct request *, enum elv_merge);
	void (*requests_merged)(struct request_queue *, struct request *, struct request *);
	void (*limit_depth)(unsigned int, struct blk_mq_alloc_data *);
	void (*prepare_request)(struct request *, struct bio *bio);
	void (*finish_request)(struct request *);
	void (*insert_requests)(struct blk_mq_hw_ctx *, struct list_head *, bool);
	struct request *(*dispatch_request)(struct blk_mq_hw_ctx *);
	bool (*has_work)(struct blk_mq_hw_ctx *);
	void (*completed_request)(struct request *);
	void (*started_request)(struct request *);
	void (*requeue_request)(struct request *);
	struct request *(*former_request)(struct request_queue *, struct request *);
	struct request *(*next_request)(struct request_queue *, struct request *);
	void (*init_icq)(struct io_cq *);
	void (*exit_icq)(struct io_cq *);
};

5.11.3

5.11.3.1 blk_mq_ops

The multi-queue operations. They are the bridge between block-layer multi-queue and the block device, and are very important.

struct blk_mq_ops {
	/*
	 * Queue request
	 */
	queue_rq_fn *queue_rq;		/* hands a request to the driver */

	/*
	 * Reserve budget before queue request, once .queue_rq is
	 * run, it is driver's responsibility to release the
	 * reserved budget. Also we have to handle failure case
	 * of .get_budget for avoiding I/O deadlock.
	 */
	get_budget_fn *get_budget;
	put_budget_fn *put_budget;

	/*
	 * Called on request timeout
	 */
	timeout_fn *timeout;

	/*
	 * Called to poll for completion of a specific tag.
	 */
	poll_fn *poll;

	softirq_done_fn *complete;

	/*
	 * Called when the block layer side of a hardware queue has been
	 * set up, allowing the driver to allocate/init matching structures.
	 * Ditto for exit/teardown.
	 */
	init_hctx_fn *init_hctx;
	exit_hctx_fn *exit_hctx;

	/*
	 * Called for every command allocated by the block layer to allow
	 * the driver to set up driver specific data.
	 *
	 * Tag greater than or equal to queue_depth is for setting up
	 * flush request.
	 */
	init_request_fn *init_request;
	exit_request_fn *exit_request;
	/* Called from inside blk_get_request() */
	void (*initialize_rq_fn)(struct request *rq);

	map_queues_fn *map_queues;	/* maps blk_mq_ctx to blk_mq_hw_ctx */

	/*
	 * Used by the debugfs implementation to show driver-specific
	 * information about a request.
	 */
	void (*show_rq)(struct seq_file *m, struct request *rq);
};

For example, the scsi multi-queue operation set.

5.11.3.1.1 scsi_mq_ops

The multi-queue operation set used by the current scsi driver; the old single-queue handler is scsi_request_fn.

static const struct blk_mq_ops scsi_mq_ops = {
	.get_budget	= scsi_mq_get_budget,
	.put_budget	= scsi_mq_put_budget,
	.queue_rq	= scsi_queue_rq,
	.complete	= scsi_softirq_done,
	.timeout	= scsi_timeout,
	.show_rq	= scsi_show_rq,
	.init_request	= scsi_mq_init_request,
	.exit_request	= scsi_mq_exit_request,
	.initialize_rq_fn = scsi_initialize_rq,
	.map_queues	= scsi_map_queues,
};

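6 Functions: main functions

6.1

6.1.1 blk_mq_flush_plug_list

The multi-queue function that flushes the requests held on the plug list. It is called by blk_flush_plug_list.

6.1.2 blk_mq_make_request: multi-queue entry point

This function is the counterpart of the single-queue blk_queue_bio and is the entry point of multi-queue. Its logic reflects the whole path an I/O takes through multi-queue.

The overall logic closely resembles that of blk_queue_bio.

If the bio can be merged into the process's plug list, it is merged and the function returns. Otherwise blk_mq_sched_bio_merge tries to merge it into the request queue. Wherever the merge happens, it is either a front merge or a back merge.

If the bio cannot be merged, a request structure has to be created for it. The new request takes one of several branches depending on the bio; the conditions include flush, sync, and plug. In the end they all lead to blk_mq_run_hw_queue, which starts the I/O on the device.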
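The order in which the branch conditions are tested matters, so it helps to see it in isolation. The following user-space sketch mirrors the if / else if chain of the listing in this section; struct toy_ctx, enum toy_path and toy_pick_path are hypothetical names, and each enum value merely labels what the corresponding kernel branch does.

```c
#include <assert.h>
#include <stdbool.h>

enum toy_path {
    TOY_PATH_FLUSH,         /* insert as flush, then run the hw queue */
    TOY_PATH_PLUG,          /* park the request on the plug list */
    TOY_PATH_LIMITED_PLUG,  /* plug one request, issue the previous one */
    TOY_PATH_ISSUE_DIRECT,  /* try to issue straight to the driver */
    TOY_PATH_SCHED_INSERT,  /* hand the request to the I/O scheduler */
    TOY_PATH_QUEUE_AND_RUN  /* put on the software queue, run the hw queue */
};

/* The inputs the branch decision depends on. */
struct toy_ctx {
    bool is_flush_fua;          /* bio is a flush/FUA operation */
    bool is_sync;               /* bio is synchronous */
    bool has_plug;              /* current process has an active plug */
    bool nomerges;              /* merging is disabled on the queue */
    bool has_elevator;          /* an I/O scheduler is attached */
    unsigned int nr_hw_queues;  /* number of hardware dispatch queues */
};

/* Same test order as the if / else if chain in the listing. */
static enum toy_path toy_pick_path(const struct toy_ctx *c)
{
    if (c->is_flush_fua)
        return TOY_PATH_FLUSH;
    if (c->has_plug && c->nr_hw_queues == 1)
        return TOY_PATH_PLUG;
    if (c->has_plug && !c->nomerges)
        return TOY_PATH_LIMITED_PLUG;
    if (c->nr_hw_queues > 1 && c->is_sync)
        return TOY_PATH_ISSUE_DIRECT;
    if (c->has_elevator)
        return TOY_PATH_SCHED_INSERT;
    return TOY_PATH_QUEUE_AND_RUN;
}
```

Note that a flush wins over everything else, and that a synchronous request on a multi-hardware-queue device is issued directly only when no plug is active or merging is disabled.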

static blk_qc_t blk_mq_make_request(struct request_queue *q, struct bio *bio)
{
	const int is_sync = op_is_sync(bio->bi_opf);
	const int is_flush_fua = op_is_flush(bio->bi_opf);
	struct blk_mq_alloc_data data = { .flags = 0 };
	struct request *rq;
	unsigned int request_count = 0;
	struct blk_plug *plug;
	struct request *same_queue_rq = NULL;
	blk_qc_t cookie;
	unsigned int wb_acct;

	blk_queue_bounce(q, &bio);

	blk_queue_split(q, &bio);	/* split the bio to fit the device's hardware limits */

	if (!bio_integrity_prep(bio))
		return BLK_QC_T_NONE;

	if (!is_flush_fua && !blk_queue_nomerges(q) &&
	    blk_attempt_plug_merge(q, bio, &request_count, &same_queue_rq))
		return BLK_QC_T_NONE;	/* merged into the process's plug list */

	if (blk_mq_sched_bio_merge(q, bio))
		return BLK_QC_T_NONE;	/* merged into the request queue; done */

	wb_acct = wbt_wait(q->rq_wb, bio, NULL);

	trace_block_getrq(q, bio, bio->bi_opf);

	/* no merge possible: allocate a new request */
	rq = blk_mq_get_request(q, bio, bio->bi_opf, &data);
	if (unlikely(!rq)) {
		__wbt_done(q->rq_wb, wb_acct);
		if (bio->bi_opf & REQ_NOWAIT)
			bio_wouldblock_error(bio);
		return BLK_QC_T_NONE;
	}

	wbt_track(&rq->issue_stat, wb_acct);

	cookie = request_to_qc_t(data.hctx, rq);

	plug = current->plug;
	if (unlikely(is_flush_fua)) {	/* flush operation */
		blk_mq_put_ctx(data.ctx);
		/* fill the request from the bio, then continue down to the hardware queue */
		blk_mq_bio_to_request(rq, bio);

		/* bypass scheduler for flush rq */
		blk_insert_flush(rq);
		blk_mq_run_hw_queue(data.hctx, true);	/* start I/O on the device */
	} else if (plug && q->nr_hw_queues == 1) {
		/* plugging is active and there is a single hardware queue */
		struct request *last = NULL;

		blk_mq_put_ctx(data.ctx);
		blk_mq_bio_to_request(rq, bio);

		/*
		 * @request_count may become stale because of schedule
		 * out, so check the list again.
		 */
		if (list_empty(&plug->mq_list))
			request_count = 0;
		else if (blk_queue_nomerges(q))
			request_count = blk_plug_queued_count(q);

		if (!request_count)
			trace_block_plug(q);
		else
			last = list_entry_rq(plug->mq_list.prev);

		if (request_count >= BLK_MAX_REQUEST_COUNT || (last &&
		    blk_rq_bytes(last) >= BLK_PLUG_FLUSH_SIZE)) {
			blk_flush_plug_list(plug, false);
			trace_block_plug(q);
		}

		list_add_tail(&rq->queuelist, &plug->mq_list);
	} else if (plug && !blk_queue_nomerges(q)) {
		blk_mq_bio_to_request(rq, bio);

		/*
		 * We do limited plugging. If the bio can be merged, do that.
		 * Otherwise the existing request in the plug list will be
		 * issued. So the plug list will have one request at most
		 * The plug list might get flushed before this. If that happens,
		 * the plug list is empty, and same_queue_rq is invalid.
		 */
		if (list_empty(&plug->mq_list))
			same_queue_rq = NULL;
		if (same_queue_rq)
			list_del_init(&same_queue_rq->queuelist);
		list_add_tail(&rq->queuelist, &plug->mq_list);

		blk_mq_put_ctx(data.ctx);

		if (same_queue_rq) {
			data.hctx = blk_mq_map_queue(q,
					same_queue_rq->mq_ctx->cpu);
			blk_mq_try_issue_directly(data.hctx, same_queue_rq,
					&cookie);
		}
	} else if (q->nr_hw_queues > 1 && is_sync) {
		blk_mq_put_ctx(data.ctx);
		blk_mq_bio_to_request(rq, bio);
		blk_mq_try_issue_directly(data.hctx, rq, &cookie);
	} else if (q->elevator) {
		blk_mq_put_ctx(data.ctx);
		blk_mq_bio_to_request(rq, bio);
		blk_mq_sched_insert_request(rq, false, true, true);
	} else {
		blk_mq_put_ctx(data.ctx);
		blk_mq_bio_to_request(rq, bio);
		blk_mq_queue_io(data.hctx, data.ctx, rq);
		blk_mq_run_hw_queue(data.hctx, true);
	}

	return cookie;
}

6.2

6.2.1 blk _flush_plug_list

對應多隊列的blk_mq_flush_plug_list函數,負責将程序中plug鍊上的bio通過函數__elv_add_request刷到排程隊列中,并調用__blk_run_queue函數發起io。

6.2.2 blk_queue_bio單隊列塊層入口

這個函數是單隊列的請求處理函數,負責将bio放入到隊列中。由generic_make_request函數調用。将來如果多隊列完全體會了單隊列,那麼這個函數就成為曆史了。

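A bio submitted through this function is handled in one of the following ways:

- The current process is in the plugged state: try to merge the bio into the process's plugged list, i.e. current->plug.list.

- The current process is in the unplugged state: use the I/O scheduler code to find a suitable I/O request and merge the bio into it.

- The bio cannot be merged into any existing I/O request: fall through to the logic that allocates a free I/O request just for this bio.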

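Whether the merge happens in the plugged list or in the I/O scheduler, there are two cases, a back merge and a front merge:

A back merge is done by bio_attempt_back_merge.

A front merge is done by bio_attempt_front_merge.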
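The difference between the two directions comes down to sector arithmetic. The sketch below works in user space on hypothetical types (extent, merge_direction and merge_apply are illustrative names, not kernel APIs). It checks only contiguity, whereas the real code also checks data direction, the target device and the queue limits.

```c
#include <assert.h>

enum merge_dir { NO_MERGE, FRONT_MERGE, BACK_MERGE };

/* Hypothetical stand-in for the sector range covered by a request or a bio. */
struct extent {
    unsigned long long start; /* first sector */
    unsigned int nr_sectors;  /* length in sectors */
};

/* Decide how the bio relates to an existing request. */
enum merge_dir merge_direction(const struct extent *rq, const struct extent *bio)
{
    assert(rq->nr_sectors > 0 && bio->nr_sectors > 0);

    if (rq->start + rq->nr_sectors == bio->start)
        return BACK_MERGE;  /* bio begins where the request ends */
    if (bio->start + bio->nr_sectors == rq->start)
        return FRONT_MERGE; /* bio ends where the request begins */
    return NO_MERGE;
}

/* Grow the request so that it also covers the merged bio. */
void merge_apply(struct extent *rq, const struct extent *bio, enum merge_dir dir)
{
    if (dir == NO_MERGE)
        return;
    if (dir == FRONT_MERGE)
        rq->start = bio->start;
    rq->nr_sectors += bio->nr_sectors;
}
```

For a request covering sectors 100 to 107, a bio starting at sector 108 is a back merge and a bio covering sectors 92 to 99 is a front merge.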

static blk_qc_t blk_queue_bio(struct request_queue *q, struct bio *bio)
{
    struct blk_plug *plug; // plug structure of the current process
    int where = ELEVATOR_INSERT_SORT;
    struct request *req, *free;
    unsigned int request_count = 0;
    unsigned int wb_acct;

    /*
     * low level driver can indicate that it wants pages above a
     * certain limit bounced to low memory (ie for highmem, or even
     * ISA dma in theory)
     */
    blk_queue_bounce(q, &bio);

    // split the bio according to the queue's limits.max_sectors and limits.max_segments
    // so that it fits the device; the defaults are set in blk_set_default_limits
    blk_queue_split(q, &bio);

    if (!bio_integrity_prep(bio)) // set up the bio's data-integrity payload; give up if that fails
        return BLK_QC_T_NONE;

    if (op_is_flush(bio->bi_opf)) { // REQ_PREFLUSH or REQ_FUA bios need special handling
        spin_lock_irq(q->queue_lock);
        where = ELEVATOR_INSERT_FLUSH;
        goto get_rq;
    }

    /*
     * Check if we can merge with the plugged list before grabbing
     * any locks.
     */
    if (!blk_queue_nomerges(q)) { // merging is allowed unless QUEUE_FLAG_NOMERGES is set
        // try to merge the bio into the process's plug list; on success return
        // at once and let a later unplug deal with the plugged requests
        if (blk_attempt_plug_merge(q, bio, &request_count, NULL))
            return BLK_QC_T_NONE;
    } else
        request_count = blk_plug_queued_count(q); // only count the requests on the plug list

    spin_lock_irq(q->queue_lock);

    switch (elv_merge(q, &req, bio)) { // single-queue I/O scheduler layer: ask the elevator for a merge candidate
    case ELEVATOR_BACK_MERGE: // back merge: append the bio to an existing request; the helper ends by calling blk_account_io_start
        if (!bio_attempt_back_merge(q, req, bio)) // back merge helper
            break;
        elv_bio_merged(q, req, bio);
        free = attempt_back_merge(q, req);
        if (free)
            __blk_put_request(q, free);
        else
            elv_merged_request(q, req, ELEVATOR_BACK_MERGE);
        goto out_unlock;
    case ELEVATOR_FRONT_MERGE: // front merge: prepend the bio to an existing request; the helper ends by calling blk_account_io_start
        if (!bio_attempt_front_merge(q, req, bio))
            break;
        elv_bio_merged(q, req, bio);
        free = attempt_front_merge(q, req);
        if (free)
            __blk_put_request(q, free);
        else
            elv_merged_request(q, req, ELEVATOR_FRONT_MERGE);
        goto out_unlock;
    default:
        break;
    }

get_rq:
    wb_acct = wbt_wait(q->rq_wb, bio, q->queue_lock);

    /*
     * Grab a free request. This is might sleep but can not fail.
     * Returns with the queue unlocked.
     */
    blk_queue_enter_live(q);
    req = get_request(q, bio->bi_opf, bio, 0); // no merge was possible in the plug list or the request queue, so allocate a new request
    if (IS_ERR(req)) {
        blk_queue_exit(q);
        __wbt_done(q->rq_wb, wb_acct);
        if (PTR_ERR(req) == -ENOMEM)
            bio->bi_status = BLK_STS_RESOURCE;
        else
            bio->bi_status = BLK_STS_IOERR;
        bio_endio(bio);
        goto out_unlock;
    }

    wbt_track(&req->issue_stat, wb_acct);

    /*
     * After dropping the lock and possibly sleeping here, our request
     * may now be mergeable after it had proven unmergeable (above).
     * We don't worry about that case for efficiency. It won't happen
     * often, and the elevators are able to handle it.
     */
    blk_init_request_from_bio(req, bio); // initialize the request from the bio

    if (test_bit(QUEUE_FLAG_SAME_COMP, &q->queue_flags))
        req->cpu = raw_smp_processor_id();

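    plug = current->plug;
    if (plug) {
        /*
         * If this is the first request added after a plug, fire
         * of a plug trace.
         *
         * @request_count may become stale because of schedule
         * out, so check plug list again.
         */
        if (!request_count || list_empty(&plug->list))
            trace_block_plug(q);
        else {
            struct request *last = list_entry_rq(plug->list.prev);

            if (request_count >= BLK_MAX_REQUEST_COUNT ||
                blk_rq_bytes(last) >= BLK_PLUG_FLUSH_SIZE) {
                // too many requests, or the last one is too large: flush the plugged I/O.
                // The second argument (false) says the flush was triggered here and not
                // by the scheduler. The requests go into the elevator queue through
                // __elv_add_request.
                blk_flush_plug_list(plug, false);
                trace_block_plug(q);
            }
        }
        list_add_tail(&req->queuelist, &plug->list); // add the request to the plug list
        blk_account_io_start(req, true); // start the I/O statistics for this request
    } else {
        spin_lock_irq(q->queue_lock);
        // calls blk_account_io_start and __elv_add_request to put the request
        // on the request queue, ready to be processed
        add_acct_request(q, req, where);
        __blk_run_queue(q); // not plugged: run the queue right away to start the I/O
out_unlock:
        spin_unlock_irq(q->queue_lock);
    }

    return BLK_QC_T_NONE;
}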

6.3

Initialization functions

6.3.1 blk_mq_init_queue

This function initializes the software staging queues and the hardware dispatch queues, and sets up the mapping between them.

It also installs blk_mq_make_request as the queue's entry function by calling blk_queue_make_request.

struct request_queue *blk_mq_init_queue(struct blk_mq_tag_set *set)
{
    struct request_queue *uninit_q, *q;

    uninit_q = blk_alloc_queue_node(GFP_KERNEL, set->numa_node, NULL);
    if (!uninit_q)
        return ERR_PTR(-ENOMEM);

    q = blk_mq_init_allocated_queue(set, uninit_q);
    if (IS_ERR(q))
        blk_cleanup_queue(uninit_q);

    return q;
}
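The mapping set up during this initialization can be pictured as a table from CPU number to hardware queue index. The sketch below is a deliberately simplified assumption: it spreads CPUs round-robin, while the kernel's default mapping also takes CPU topology into account. The function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Fill map[cpu] with the index of the hardware dispatch queue that serves
 * the software staging queue of that CPU. Plain round-robin, for
 * illustration only.
 */
void map_sw_to_hw(unsigned int *map, size_t nr_cpus, unsigned int nr_hw_queues)
{
    size_t cpu;

    assert(nr_hw_queues > 0);
    for (cpu = 0; cpu < nr_cpus; cpu++)
        map[cpu] = (unsigned int)(cpu % nr_hw_queues);
}
```

With a single hardware queue every CPU maps to index 0. With at least as many hardware queues as CPUs, each CPU gets a queue of its own.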

6.3.2 blk_mq_init_request

This function calls the driver's .init_request callback, if the driver provides one.

static int blk_mq_init_request(struct blk_mq_tag_set *set, struct request *rq,
                               unsigned int hctx_idx, int node)
{
    int ret;

    if (set->ops->init_request) {
        ret = set->ops->init_request(set, rq, hctx_idx, node);
        if (ret)
            return ret;
    }

    seqcount_init(&rq->gstate_seq);
    u64_stats_init(&rq->aborted_gstate_sync);
    /*
     * start gstate with gen 1 instead of 0, otherwise it will be equal
     * to aborted_gstate, and be identified timed out by
     * blk_mq_terminate_expired.
     */
    WRITE_ONCE(rq->gstate, MQ_RQ_GEN_INC);

    return 0;
}

6.3.3 blk_init_queue

This is the queue initialization function of the single-queue path. It simply calls blk_init_queue_node.

struct request_queue *blk_init_queue(request_fn_proc *rfn, spinlock_t *lock)
{
    return blk_init_queue_node(rfn, lock, NUMA_NO_NODE);
}

blk_init_queue_node in turn calls blk_init_allocated_queue.

struct request_queue *
blk_init_queue_node(request_fn_proc *rfn, spinlock_t *lock, int node_id)
{
    struct request_queue *q;

    q = blk_alloc_queue_node(GFP_KERNEL, node_id, lock);
    if (!q)
        return NULL;

    q->request_fn = rfn;
    if (blk_init_allocated_queue(q) < 0) {
        blk_cleanup_queue(q);
        return NULL;
    }

    return q;
}

6.3.4 blk_queue_make_request

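blk_queue_make_request installs a queue's entry function (make_request_fn). The multi-queue path passes blk_mq_make_request; the single-queue path passes blk_queue_bio.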
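In other words, the entry point is just a function pointer stored in the queue and called later by the generic submission code. Here is a minimal user-space sketch of that pattern; every name ending in _sketch, and both entry functions, are hypothetical stand-ins rather than kernel symbols.

```c
#include <assert.h>
#include <stddef.h>

struct queue_sketch;
struct bio_sketch { int id; };

/* Same idea as the kernel's make_request_fn typedef, simplified. */
typedef int (make_request_fn_sketch)(struct queue_sketch *q,
                                     struct bio_sketch *bio);

struct queue_sketch {
    make_request_fn_sketch *make_request_fn; /* entry installed at init time */
    int last_bio_id;                         /* last bio seen, for the demo */
};

enum { VIA_SINGLE_QUEUE = 1, VIA_MULTI_QUEUE = 2 };

/* Stand-in for blk_queue_bio. */
int single_queue_entry(struct queue_sketch *q, struct bio_sketch *bio)
{
    q->last_bio_id = bio->id;
    return VIA_SINGLE_QUEUE;
}

/* Stand-in for blk_mq_make_request. */
int multi_queue_entry(struct queue_sketch *q, struct bio_sketch *bio)
{
    q->last_bio_id = bio->id;
    return VIA_MULTI_QUEUE;
}

/* Stand-in for blk_queue_make_request: remember the entry function. */
void queue_set_make_request(struct queue_sketch *q, make_request_fn_sketch *fn)
{
    assert(fn != NULL);
    q->make_request_fn = fn;
}

/* The generic layer never needs to know which flavour the queue uses. */
int submit_sketch(struct queue_sketch *q, struct bio_sketch *bio)
{
    return q->make_request_fn(q, bio);
}
```

The submitting code only ever calls through the pointer, which is why retiring the single-queue path would not have to change any caller.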

6.4

Key functions linking the upper and lower layers

6.4.1 generic_make_request

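This function links the layers above and below it, so its definition carries a large explanatory comment to help developers understand it.

generic_make_request is the entry point of the bio layer. It hands the bio to the block layer and places it on the request queue: through blk_queue_bio when single-queue is in use, through blk_mq_make_request when multi-queue is in use.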

/**
 * generic_make_request - hand a buffer to its device driver for I/O
 * @bio:  The bio describing the location in memory and on the device.
 *
 * generic_make_request() is used to make I/O requests of block
 * devices. It is passed a &struct bio, which describes the I/O that needs
 * to be done.
 *
 * generic_make_request() does not return any status.  The
 * success/failure status of the request, along with notification of
 * completion, is delivered asynchronously through the bio->bi_end_io
 * function described (one day) else where.
 *
 * The caller of generic_make_request must make sure that bi_io_vec
 * are set to describe the memory buffer, and that bi_dev and bi_sector are
 * set to describe the device address, and the
 * bi_end_io and optionally bi_private are set to describe how
 * completion notification should be signaled.
 *
 * generic_make_request and the drivers it calls may use bi_next if this
 * bio happens to be merged with someone else, and may resubmit the bio to
 * a lower device by calling into generic_make_request recursively, which
 * means the bio should NOT be touched after the call to ->make_request_fn.
 */

blk_qc_t generic_make_request(struct bio *bio)
{
    /*
     * bio_list_on_stack[0] contains bios submitted by the current
     * make_request_fn.
     * bio_list_on_stack[1] contains bios that were submitted before
     * the current make_request_fn, but that haven't been processed
     * yet.
     */
    struct bio_list bio_list_on_stack[2];
    blk_mq_req_flags_t flags = 0;
    struct request_queue *q = bio->bi_disk->queue; // queue of the device the bio targets
    blk_qc_t ret = BLK_QC_T_NONE;

    if (bio->bi_opf & REQ_NOWAIT) // a REQ_NOWAIT bio must not block: set flags accordingly
        flags = BLK_MQ_REQ_NOWAIT;
    if (blk_queue_enter(q, flags) < 0) { // check that the queue can accept requests and take a reference on it
        if (!blk_queue_dying(q) && (bio->bi_opf & REQ_NOWAIT))
            bio_wouldblock_error(bio);
        else
            bio_io_error(bio);
        return ret;
    }

    if (!generic_make_request_checks(bio)) // sanity-check the bio
        goto out;

    /*
     * We only want one ->make_request_fn to be active at a time, else
     * stack usage with stacked devices could be a problem.  So use
     * current->bio_list to keep a list of requests submited by a
     * make_request_fn function.  current->bio_list is also used as a
     * flag to say if generic_make_request is currently active in this
     * task or not.  If it is NULL, then no make_request is active.  If
     * it is non-NULL, then a make_request is active, and new requests
     * should be added at the tail
     */
    // current is the task_struct of the running process; its bio_list field holds
    // the stacked block device info (e.g. MD). If a make_request_fn is already
    // active in this task, as happens with stacked devices such as MD, the bio is
    // appended to the list and we return.
    if (current->bio_list) {
        bio_list_add(&current->bio_list[0], bio);
        goto out;
    }

    /* following loop may be a bit non-obvious, and so deserves some
     * explanation.
     * Before entering the loop, bio->bi_next is NULL (as all callers
     * ensure that) so we have a list with a single bio.
     * We pretend that we have just taken it off a longer list, so
     * we assign bio_list to a pointer to the bio_list_on_stack,
     * thus initialising the bio_list of new bios to be
     * added.  ->make_request() may indeed add some more bios
     * through a recursive call to generic_make_request.  If it
     * did, we find a non-NULL value in bio_list and re-enter the loop
     * from the top.  In this case we really did just take the bio
     * of the top of the list (no pretending) and so remove it from
     * bio_list, and call into ->make_request() again.
     */
    BUG_ON(bio->bi_next);
    bio_list_init(&bio_list_on_stack[0]); // initialize the list of bios being submitted now
    current->bio_list = bio_list_on_stack; // publish it in task_struct->bio_list; reset to NULL before the function returns

    do { // loop over the bios, handing each one to make_request_fn
        bool enter_succeeded = true;

        if (unlikely(q != bio->bi_disk->queue)) { // this bio targets a different queue than the previous one
            if (q)
                blk_queue_exit(q); // drop the queue reference; inverse of blk_queue_enter
            q = bio->bi_disk->queue; // switch to the queue of the bio being processed
            flags = 0;
            if (bio->bi_opf & REQ_NOWAIT)
                flags = BLK_MQ_REQ_NOWAIT;
            if (blk_queue_enter(q, flags) < 0) {
                enter_succeeded = false;
                q = NULL;
            }
        }

        if (enter_succeeded) { // the queue was entered successfully
            struct bio_list lower, same;

            /* Create a fresh bio_list for all subordinate requests */
            bio_list_on_stack[1] = bio_list_on_stack[0]; // bios submitted by earlier make_request_fn calls move to bio_list_on_stack[1]
            bio_list_init(&bio_list_on_stack[0]); // bio_list_on_stack[0] will collect the bios submitted by this call
            ret = q->make_request_fn(q, bio); // the key call: ->make_request_fn

            /* sort new bios into those for a lower level
             * and those for the same level
             */
            bio_list_init(&lower); // initialize the two bio lists
            bio_list_init(&same);
            while ((bio = bio_list_pop(&bio_list_on_stack[0])) != NULL) // walk the bios submitted by this call
                if (q == bio->bi_disk->queue)
                    bio_list_add(&same, bio);
                else
                    bio_list_add(&lower, bio);
            /* now assemble so we handle the lowest level first */
            bio_list_merge(&bio_list_on_stack[0], &lower); // splice the lists back together
            bio_list_merge(&bio_list_on_stack[0], &same);
            bio_list_merge(&bio_list_on_stack[0], &bio_list_on_stack[1]);
        } else {
            if (unlikely(!blk_queue_dying(q) &&
                    (bio->bi_opf & REQ_NOWAIT)))
                bio_wouldblock_error(bio);
            else
                bio_io_error(bio);
        }
        bio = bio_list_pop(&bio_list_on_stack[0]); // fetch the next bio and keep going
    } while (bio);
    current->bio_list = NULL; /* deactivate */

out:
    if (q)
        blk_queue_exit(q);
    return ret;
}
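The trick of turning recursion into iteration through current->bio_list is worth trying out in isolation. The following user-space sketch keeps only that idea. It assumes a single pending list handled first-in first-out, and it leaves out the lower/same sorting, the queue reference counting and all error handling. Every name in it is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_PENDING 16
#define MAX_LOG     16

struct dev_sketch {
    int id;
    struct dev_sketch *lower; /* NULL for a bottom-level device */
};

struct bio_sketch {
    struct dev_sketch *dev;   /* device the bio currently targets */
};

/* Stand-in for current->bio_list: NULL means no submission loop is active. */
static struct bio_sketch **active_list;
static size_t pending_head, pending_tail;

int handled_log[MAX_LOG];     /* device ids in the order they were handled */
size_t handled_len;
int depth, max_depth;         /* nesting of make_request calls */

void submit_bio_sketch(struct bio_sketch *bio);

/* Stand-in for ->make_request_fn of a stacked device. */
static void make_request_sketch(struct bio_sketch *bio)
{
    depth++;
    if (depth > max_depth)
        max_depth = depth;
    assert(handled_len < MAX_LOG);
    handled_log[handled_len++] = bio->dev->id;
    if (bio->dev->lower) {
        bio->dev = bio->dev->lower; /* remap to the lower device */
        submit_bio_sketch(bio);     /* looks recursive, only queues the bio */
    }
    depth--;
}

void submit_bio_sketch(struct bio_sketch *bio)
{
    struct bio_sketch *pending[MAX_PENDING];

    if (active_list) {              /* a loop is already running: queue and return */
        assert(pending_tail < MAX_PENDING);
        active_list[pending_tail++] = bio;
        return;
    }

    active_list = pending;          /* mark the loop as active */
    pending_head = pending_tail = 0;
    do {
        make_request_sketch(bio);
        bio = pending_head < pending_tail ? active_list[pending_head++] : NULL;
    } while (bio);
    active_list = NULL;             /* deactivate */
}
```

Submitting one bio to a three-level stack visits the three devices from top to bottom while make_request_sketch never nests deeper than one level, which is the stack-usage guarantee the kernel comment describes.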

7

References

An article that comes without reference links is just fooling around.

https://lwn.net/Articles/736534/

https://lwn.net/Articles/738449/

https://www.thomas-krenn.com/en/wiki/Linux_Multi-Queue_Block_IO_Queueing_Mechanism_(blk-mq)

https://miuv.blog/2017/10/21/linux-block-mq-simple-walkthrough/

https://hyunyoung2.github.io/2016/09/14/Multi_Queue/

http://ari-ava.blogspot.com/2014/07/opw-linux-block-io-layer-part-4-multi.html

Linux Block IO: Introducing Multi-queue SSD Access on Multi-core Systems

The multiqueue block layer