I. Environment overview

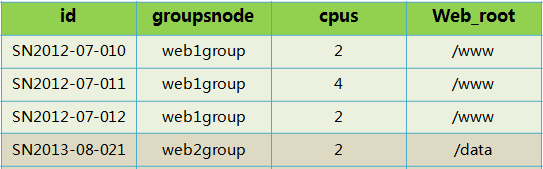

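There are two groups of web application servers, named web1group and web2group. The groups differ in hardware configuration and in web root directory, as shown in the figure.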

II. Master configuration

1. Key configuration settings:

nodegroups:
  web1group: 'L@SN2012-07-010,SN2012-07-011,SN2012-07-012'
  web2group: 'L@SN2013-08-021,SN2013-08-022'

file_roots:
  base:
    - /srv/salt

pillar_roots:
  base:
    - /srv/pillar
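The `L@` prefix in the nodegroup definitions is Salt's list matcher: the rest of the string is a comma-separated list of minion IDs. The minimal Python sketch below handles only the plain `L@` form used here and shows which group each minion falls into. The helper names `expand_list_matcher` and `groups_of` are hypothetical and are not Salt APIs.

```python
from typing import Dict, List

# Nodegroup definitions copied from the master config above
NODEGROUPS: Dict[str, str] = {
    "web1group": "L@SN2012-07-010,SN2012-07-011,SN2012-07-012",
    "web2group": "L@SN2013-08-021,SN2013-08-022",
}


def expand_list_matcher(expr: str) -> List[str]:
    """Expand an 'L@id1,id2,...' expression into minion IDs (hypothetical helper).

    Only the plain L@ form is handled; other compound matchers
    (G@, E@, and/or/not) are out of scope for this sketch.
    """
    prefix = "L@"
    if not expr.startswith(prefix):
        raise ValueError("only L@ list expressions are supported: %r" % expr)
    return [mid.strip() for mid in expr[len(prefix):].split(",") if mid.strip()]


def groups_of(minion_id: str) -> List[str]:
    """Return the names of the nodegroups that list this minion ID."""
    return [name for name, expr in NODEGROUPS.items()
            if minion_id in expand_list_matcher(expr)]


if __name__ == "__main__":
    print(groups_of("SN2013-08-021"))  # ['web2group']
```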

2. File tree layout (the individual files are described later)

III. Custom grains module

1)#vi /srv/salt/_grains/nginx_config.py

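import subprocess


def NginxGrains():
    '''
    Return Nginx config grains value
    '''
    grains = {}
    # Fallback used if the limit cannot be read from the shell
    max_open_file = 65536
    #Worker_info={'cpus2':'01 10','cpus4':'1000 0100 0010 0001','cpus8':'10000000 01000000 00100000 00010000 00001000 00000100 00000010 00000001'}
    try:
        # Load /etc/profile (POSIX "." instead of bash-only "source"), then print the open-file limit
        status, output = subprocess.getstatusoutput('. /etc/profile; ulimit -n')
        if status == 0:
            # Use the last line in case /etc/profile itself prints something
            max_open_file = int(output.strip().splitlines()[-1])
    except (OSError, ValueError, IndexError):
        # Keep the fallback, e.g. for "unlimited" or empty output
        pass
    grains['max_open_file'] = max_open_file
    return grains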
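The module above shells out to read the limit of a login shell. As an alternative sketch, and not the author's approach, Python's standard `resource` module can read the soft `RLIMIT_NOFILE` of the salt-minion process itself. That value can differ from what `ulimit -n` reports after sourcing /etc/profile. The function name `NginxRlimitGrains` is hypothetical. It is meant to replace `NginxGrains` rather than sit beside it, because both set the same key.

```python
import resource

DEFAULT_MAX_OPEN_FILE = 65536  # same fallback value as NginxGrains above


def max_open_file_from_rlimit(default: int = DEFAULT_MAX_OPEN_FILE) -> int:
    """Return the soft open-file limit of the current (minion) process."""
    soft, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    # An unlimited or nonsensical soft limit falls back to the default
    if soft == resource.RLIM_INFINITY or soft <= 0:
        return default
    return soft


def NginxRlimitGrains():
    """Hypothetical alternative grains function returning the same key."""
    return {"max_open_file": max_open_file_from_rlimit()}
```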

2) Sync the grains module to the minions

salt '*' saltutil.sync_all

3) Reload the modules (so that the minions load the newly synced module)

salt '*' sys.reload_modules

4) Verify the value of the max_open_file key

[root@SN2013-08-020 _grains]# salt '*' grains.item max_open_file
SN2013-08-022:
    max_open_file: 1024
SN2013-08-021:
SN2012-07-011:
SN2012-07-012:
SN2012-07-010:

IV. Configure pillar

In this example, pillar data is assigned by nodegroup: each group references its own SLS file.

1) Define the entry file top.sls

#vi /srv/pillar/top.sls

base:
  web1group:
    - match: nodegroup
    - web1server
  web2group:
    - match: nodegroup
    - web2server

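2) Define the per-group data. This example only sets the web root, but the data can be customized for other needs. It is written as YAML "key: value" pairs, which Salt loads into a Python dictionary.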

#vi /srv/pillar/web1server.sls

nginx:
  root: /www

#vi /srv/pillar/web2server.sls

nginx:
  root: /data
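The way top.sls and the two SLS files combine can be modeled in a few lines of Python. This is a simplified sketch, not Salt's pillar compiler:

- The membership tables are expanded by hand from the nodegroups above.
- `pillar_for` is a hypothetical helper.
- A plain dict update stands in for Salt's configurable merging (`pillar_source_merging_strategy`).

```python
import copy
from typing import Any, Dict, Set

# Mirrors /srv/pillar/top.sls: nodegroup -> pillar SLS name
PILLAR_TOP: Dict[str, str] = {
    "web1group": "web1server",
    "web2group": "web2server",
}

# Mirrors the contents of web1server.sls and web2server.sls
PILLAR_SLS: Dict[str, Dict[str, Any]] = {
    "web1server": {"nginx": {"root": "/www"}},
    "web2server": {"nginx": {"root": "/data"}},
}

# Nodegroup membership from the master config (L@ lists expanded by hand)
NODEGROUP_MEMBERS: Dict[str, Set[str]] = {
    "web1group": {"SN2012-07-010", "SN2012-07-011", "SN2012-07-012"},
    "web2group": {"SN2013-08-021", "SN2013-08-022"},
}


def pillar_for(minion_id: str) -> Dict[str, Any]:
    """Return the pillar data a minion would receive (simplified model)."""
    data: Dict[str, Any] = {}
    for group, sls in PILLAR_TOP.items():
        if minion_id in NODEGROUP_MEMBERS[group]:
            # Shallow update; copy so callers cannot mutate the SLS tables
            data.update(copy.deepcopy(PILLAR_SLS[sls]))
    return data


if __name__ == "__main__":
    print(pillar_for("SN2013-08-021")["nginx"]["root"])  # /data
    print(pillar_for("SN2012-07-010")["nginx"]["root"])  # /www
```

The two printed values match the `pillar.data nginx` results for those hosts.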

3) Verify the result:

#salt 'SN2013-08-021' pillar.data nginx
----------
root:
    /data

#salt 'SN2012-07-010' pillar.data nginx
    /www

V. Configure states

1) Define the entry file top.sls

#vi /srv/salt/top.sls

base:
  '*':
    - nginx

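2) Define the SLS that installs nginx, manages its configuration file, and reloads the service when the configuration changes. salt://nginx/nginx.conf is the location of the configuration template.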

#vi /srv/salt/nginx.sls

nginx:
  pkg:
    - installed
  file.managed:
    - source: salt://nginx/nginx.conf
    - name: /etc/nginx/nginx.conf
    - user: root
    - group: root
    - mode: 644
    - template: jinja
  service.running:
    - enable: True
    - reload: True
    - watch:
      - file: /etc/nginx/nginx.conf
      - pkg: nginx
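The `watch` requisite is what ties these pieces together. When a watched state (here the managed file or the package) reports changes, `service.running` acts on the service, and `reload: True` makes that action a reload instead of a restart. The simplified Python model below captures that decision. The function `watch_action` is hypothetical, and its result dicts are only loosely shaped like Salt state returns.

```python
from typing import Any, Dict


def watch_action(watched: Dict[str, Dict[str, Any]], reload: bool = True) -> str:
    """Decide what a watching service.running state would do (simplified model).

    `watched` maps a requisite such as "file: /etc/nginx/nginx.conf" to that
    state's result dict with "result" and "changes" keys.
    """
    # A failed requisite prevents the watching state from running at all
    if any(r.get("result") is False for r in watched.values()):
        return "requisite_failed"
    # No watched state changed anything: the service is only kept running
    if not any(r.get("changes") for r in watched.values()):
        return "none"
    # Something changed: reload when reload is True, otherwise restart
    return "reload" if reload else "restart"


if __name__ == "__main__":
    results = {
        "file: /etc/nginx/nginx.conf": {"result": True, "changes": {"diff": "worker_processes changed"}},
        "pkg: nginx": {"result": True, "changes": {}},
    }
    print(watch_action(results))                # reload
    print(watch_action(results, reload=False))  # restart
```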

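3) Nginx configuration file (a Jinja template)

Features:

1. worker_processes uses the value reported by grains['num_cpus'], which matches the number of CPU cores on the server;

2. worker_cpu_affinity binds workers to cores according to the server's core count, with separate cases for 2, 4, and 8 or more cores; for any other core count no affinity is set;

3. worker_rlimit_nofile matches the system ulimit -n value obtained through grains['max_open_file'];

4. worker_connections is set to grains['max_open_file'], its theoretical upper limit, since every connection consumes at least one file descriptor;

5. root is the customized pillar['nginx']['root'] value.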
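The affinity masks in the template follow a simple rule. With N cores, each mask is N bits wide and has exactly one bit set, and nginx reads the rightmost bit as the first CPU. The minimal Python sketch below generates one mask per core for any N, which also covers the core counts that the template leaves without affinity. The function name `cpu_affinity_masks` is hypothetical.

```python
def cpu_affinity_masks(num_cpus: int) -> str:
    """Return a worker_cpu_affinity value that pins worker i to CPU i.

    Each mask is num_cpus bits wide; the rightmost bit stands for CPU0.
    """
    if num_cpus < 1:
        raise ValueError("num_cpus must be at least 1")
    width = "0{}b".format(num_cpus)
    return " ".join(format(1 << i, width) for i in range(num_cpus))


if __name__ == "__main__":
    for n in (2, 4, 8):
        print("worker_cpu_affinity {};".format(cpu_affinity_masks(n)))
```

How the output compares with the template:

- For 2 and 8 cores it reproduces the template's strings exactly.
- For 4 cores it yields `0001 0010 0100 1000`, the reverse of the template's `1000 0100 0010 0001`. Both are valid; only the worker-to-core order differs.
- Unlike the template's `>= 8` branch, it emits one mask per core, so on a 16-core host each worker gets its own CPU.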

#vi /srv/salt/nginx/nginx.conf

# For more information on configuration, see:
user nginx;
worker_processes {{ grains['num_cpus'] }};
{% if grains['num_cpus'] == 2 %}
worker_cpu_affinity 01 10;
{% elif grains['num_cpus'] == 4 %}
worker_cpu_affinity 1000 0100 0010 0001;
{% elif grains['num_cpus'] >= 8 %}
worker_cpu_affinity 00000001 00000010 00000100 00001000 00010000 00100000 01000000 10000000;
{% else %}
{% endif %}
worker_rlimit_nofile {{ grains['max_open_file'] }};

error_log /var/log/nginx/error.log;
#error_log /var/log/nginx/error.log notice;
#error_log /var/log/nginx/error.log info;

pid /var/run/nginx.pid;

events {
    worker_connections {{ grains['max_open_file'] }};
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    #tcp_nopush on;

    #keepalive_timeout 0;
    keepalive_timeout 65;

    #gzip on;

    # Load config files from the /etc/nginx/conf.d directory
    # The default server is in conf.d/default.conf
    #include /etc/nginx/conf.d/*.conf;

    server {
        listen 80 default_server;
        server_name _;

        #charset koi8-r;

        #access_log logs/host.access.log main;

        location / {
            root {{ pillar['nginx']['root'] }};
            index index.html index.htm;
        }

        error_page 404 /404.html;
        location = /404.html {
            root /usr/share/nginx/html;
        }

        # redirect server error pages to the static page /50x.html
        #
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root /usr/share/nginx/html;
        }
    }
}

4) Apply the configuration

#salt '*' state.highstate

(Because this is not the first run, the output does not show the configuration-file diff.)

5) Verify the result:

1. Log in to root@SN2013-08-021

#vi /etc/nginx/nginx.conf

2. Log in to root@SN2012-07-010
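A small line-based helper can compare the two hosts without reading each rendered file by eye. It pulls out the directives that the template fills in. This is a hypothetical sketch, not a full nginx parser. Per the pillar data above, the first `root` value (the one in `location /`) should be `/data` on SN2013-08-021 and `/www` on SN2012-07-010.

```python
import re
from typing import Dict, Iterable, List

# Directives whose values come from grains or pillar in the template
DEFAULT_NAMES = ("worker_processes", "worker_cpu_affinity",
                 "worker_rlimit_nofile", "worker_connections", "root")

# A directive name at the start of a line, followed by its value up to ';'
DIRECTIVE_RE = re.compile(r"^(\w+)\s+([^;]+);")


def read_directives(conf_text: str,
                    names: Iterable[str] = DEFAULT_NAMES) -> Dict[str, List[str]]:
    """Collect the values of selected simple directives from a rendered nginx.conf.

    Text after '#' is treated as a comment; only directives that start a
    line and end with ';' are recognised.
    """
    found: Dict[str, List[str]] = {name: [] for name in names}
    for raw in conf_text.splitlines():
        line = raw.split("#", 1)[0].strip()
        match = DIRECTIVE_RE.match(line)
        if match and match.group(1) in found:
            found[match.group(1)].append(match.group(2).strip())
    return found
```

On each host, call `read_directives(open("/etc/nginx/nginx.conf").read())` and compare the result with that host's grains and pillar values.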