1.apache提供的hadoop-2.4.1的安裝包是在32位作業系統編譯的,因為hadoop一些C++的本地庫,是以如果在64位的操作上安裝hadoop-2.4.1就需要重新在64作業系統上重新編譯

2.本次搭建使用了2.7.1,hadoop2.7.1是穩定版。

3.節點包括了namenode的高可用,jobtracker的高可用,zookeeper高可用叢集(後期更新)

4、3個節點的配置完全相同,隻要在master配置完成後SCP到其他2節點上即可

連結:http://pan.baidu.com/s/1i4LCmAp 密碼:302x hadoop+hive下載下傳

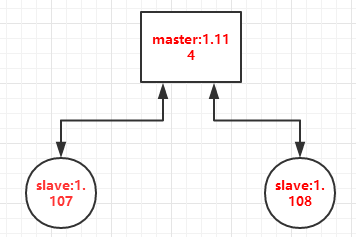

架構圖:

主機資訊

IP

主機名

MASTER

168.1.114

Mycat

SLAVE

168.1.107

Haproxy

168.1.108

Haproxy_slave

Hadoop版本

Version2.7.1

JDK版本

Version1.7.0_55

##三個節點的/etc/hosts一緻

添加ssh 之間的互信:ssh-keygen -t rsa

###若是原來存在的建議删除重新設定一次

# cd

#cd .ssh

#rm –rf ./*

1、 生成authorized_keys 檔案

cat id_rsa.pub>> authorized_keys

2、 把其他節點的id_rsa.pub的内容拷貝到第一節點的authorized_keys檔案裡

3、 然後把第一節點的authorized_keys複制到2個SLAVE中去:

#scpauthorized_keys [email protected]:~/.ssh/

scp authorized_keys [email protected]:~/.ssh/

4、 設定.ssh的權限為700,authorized_keys的權限為600

#chmod 700 ~/.ssh

#chmod 600 ~/.ssh/authorized_keys

設定jdk環境變量vim/etc/profile

#注意jdk和hadoop包的路徑,3個節點配置檔案一緻

export JAVA_HOME=/usr/local/jdk

export HADOOP_INSTALL=/usr/local/hadoop

設定環境立即生效:source /etc/profile

#ln –s /usr/local/jdk/bin/*/usr/bin/

測試jdk是否OK

java -version

java version "1.7.0_09-icedtea"

OpenJDK Runtime Environment(rhel-2.3.4.1.el6_3-x86_64)

OpenJDK 64-Bit Server VM (build 23.2-b09,mixed mode)

若提示:/usr/bin/java: /lib/ld-linux.so.2: bad ELF interpreter:

安裝yum install glibc.i686 再次執行沒問題

配置、添加使用者hadoop

# useradd hadoop

#配置之前,先在本地檔案系統建立以下檔案夾:

/home/hadoop/tmp、/home/dfs/data、/home/dfs/name,3個節點一樣

Mkdir /home/hadoop/tmp

mkdir /home/hadoop/dfs/data -p

mkdir /home/hadoop/dfs/name -p

#hadoop的配置檔案在程式目錄下的etc/hadoop,主要涉及的配置檔案有7個:都在/hadoop/etc/hadoop檔案夾下

/usr/local/hadoop/etc/hadoop/hadoop-env.sh #記錄hadoop要用的環境變量

/usr/local/hadoop /etc/hadoop/yarn-env.sh#記錄YARN要用的環境變量

/usr/local/hadoop /etc/hadoop/slaves#運作DN和NM的機器清單(每行一個)

/usr/local/hadoop/etc/hadoop/core-site.xml#hadoopCORE的配置,如HDFS和MAPREDUCE常用的I/O設定

/usr/local/hadoop/etc/hadoop/hdfs-site.xml#hdfs守護程序的配置,包括NN和SNN DN

/usr/local/hadoop/etc/hadoop/mapred-site.xml#mapreduce計算架構的配置

/usr/local/hadoop/etc/hadoop/yarn-site.xml#YARN守護程序的配置,報錯RM和NM等

1、 修改hadoop-env.sh,設定jdk路徑,在第25行中修改:

2、 修改core-site.xml

fs.default.name是NameNode的URI。hdfs://主機名:端口/hadoop.tmp.dir:Hadoop的預設臨時路徑,這個最好配置,如果在新增節點或者其他情況下莫名其妙的DataNode啟動不了,就删除此檔案中的tmp目錄即可。不過如果删除了NameNode機器的此目錄,那麼就需要重新執行NameNode格式化的指令。

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://haproxy:9000</value>

</property>

<name>io.file.buffer.size</name>

<value>131072</value>

<name>hadoop.tmp.dir</name>

<value>file:/home/hadoop/tmp</value>

<description>abase for othertemporary directories</description>

<name>hadoop.proxyuser.spark.hosts</name>

<value>*</value>

<name>hadoop.proxyuser.spark.groups</name>

3、配置 hdfs-site.xml 檔案-->>增加hdfs配置資訊(namenode、datanode端口和目錄位置)

dfs.name.dir是NameNode持久存儲名字空間及事務日志的本地檔案系統路徑。當這個值是一個逗号分割的目錄清單時,nametable資料将會被複制到所有目錄中做備援備份。

dfs.data.dir是DataNode存放塊資料的本地檔案系統路徑,逗号分割的清單。當這個值是逗号分割的目錄清單時,資料将被存儲在所有目錄下,通常分布在不同裝置上。

dfs.replication是資料需要備份的數量,預設是3,如果此數大于叢集的機器數會出錯。

注意:此處的name1、name2、data1、data2目錄不能預先建立,hadoop格式化時會自動建立,如果預先建立反而會有問題。

<name> dfs.namenode.name.dir </name>

<value>/home/hadoop/dfs/name/name1</value>

<final>true</final>

</property>

<name>dfs.datanode.data.dir</name>

<value>/home/hadoop/dfs/data/data1</value>

<name>dfs.replication</name>

<value>2</value>

</configuration>

4、mapred-site.xml檔案

<name>mapreduce.framework.name</name>

<value>yarn</value>

<name>mapreduce.jobhistory.address</name>

<value>haproxy:10020</value>

<name>mapreduce.jobhistory.webapp.address</name>

<value>haproxy:19888</value>

4、修改yarn-site.xml

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

<name>yarn.nodemanager.aux-services.mapreduce.shufle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

<name>yarn.resourcemanager.address</name>

<value>haproxy:8032</value>

<name>yarn.resourcemanager.scheduler.address</name>

<value>haproxy:8030</value>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>haproxy:8035</value>

<name>yarn.resourcemanager.admin.address</name>

<value>haproxy:8033</value>

<name>yarn.resourcemanager.webapp.address</name>

<value>haproxy:8088</value>

5、配置masters和slaves主從結點

6、格式檔案系統

這一步在主結點master上進行操作

報錯

./hdfs: /usr/local/jdk/bin/java: /lib/ld-linux.so.2: bad ELFinterpreter: No such file or directory

yum install glibc.i686

#cd /usr/local/hadoop/bin/

./hdfs namenode -format

SHUTDOWN_MSG: Shutting down NameNode athaproxy/192.168.1.107

7、啟動主節點

#/usr/local/hadoop/sbin/start-dfs.sh

問題1:

啟動的時候日志有:

It's highly recommended that you fix the library with'execstack -c <libfile>', or link it with '-z noexecstack'.

經過修改主要是環境變量設定問題:

#vi /etc/profile或者vi~/.bash_profile

export HADOOP_HOME=/usr/local/hadoop

exportHADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

exportHADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

問題2:

WARN util.NativeCodeLoader: Unable toload native-hadoop library for your platform... using builtin-java classeswhere applicable

測試發現:

/usr/local/hadoop/bin/hadoop fs -ls /

16/11/16 16:16:42 WARN util.NativeCodeLoader: Unable to loadnative-hadoop library for your platform... using builtin-java classes whereapplicable

ls: Call From haproxy/192.168.1.107 to haproxy:9000 failed onconnection exception: java.net.ConnectException: Connection refused; For moredetails see: http://wiki.apache.org/hadoop/ConnectionRefused

增加調試資訊設定

$ export HADOOP_ROOT_LOGGER=DEBUG,console

啟動日志:标志紅色的需要按上面的錯誤提示做相應的處理

16/11/19 15:45:27 WARNutil.NativeCodeLoader: Unable to load native-hadoop library for yourplatform... using builtin-java classes where applicable

Starting namenodes on [mycat]

The authenticity of host 'mycat(127.0.0.1)' can't be established.

RSA key fingerprint is3f:44:d6:f4:31:b0:5b:ff:86:b2:5d:87:f2:d9:b8:9d.

Are you sure you want to continueconnecting (yes/no)? yes

mycat: Warning: Permanently added'mycat' (RSA) to the list of known hosts.

mycat: starting namenode, logging to/usr/local/hadoop/logs/hadoop-root-namenode-mycat.out

mycat: Java HotSpot(TM) Client VMwarning: You have loaded library /usr/local/hadoop/lib/native/libhadoop.so.1.0.0which might have disabled stack guard. The VM will try to fix the stack guardnow.

mycat: It's highly recommended that you fix the library with'execstack -c <libfile>', or link it with '-z noexecstack'.

haproxy: starting datanode, logging to/usr/local/hadoop/logs/hadoop-root-datanode-haproxy.out

haproxy_slave: starting datanode,logging to /usr/local/hadoop/logs/hadoop-root-datanode-haproxy_slave.out

haproxy: /usr/local/hadoop/bin/hdfs: line 304:/usr/local/jdk/bin/java: No such file or directory

haproxy: /usr/local/hadoop/bin/hdfs: line 304: exec:/usr/local/jdk/bin/java: cannot execute: No such file or directory

haproxy_slave: /usr/local/hadoop/bin/hdfs:/usr/local/jdk/bin/java: /lib/ld-linux.so.2: bad ELF interpreter: No such fileor directory

haproxy_slave:/usr/local/hadoop/bin/hdfs: line 304: /usr/local/jdk/bin/java: Success

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0(0.0.0.0)' can't be established.

0.0.0.0: Warning: Permanently added'0.0.0.0' (RSA) to the list of known hosts.

0.0.0.0: starting secondarynamenode,logging to /usr/local/hadoop/logs/hadoop-root-secondarynamenode-mycat.out

0.0.0.0: Java HotSpot(TM) Client VMwarning: You have loaded library/usr/local/hadoop/lib/native/libhadoop.so.1.0.0 which might have disabled stackguard. The VM will try to fix the stack guard now.

0.0.0.0: It's highly recommended thatyou fix the library with 'execstack -c <libfile>', or link it with '-znoexecstack'.

16/11/19 15:46:01 WARNutil.NativeCodeLoader: Unable to load native-hadoop library for yourplatform... usi

問題3:若在datanode看不到節點的資訊

若看不到2個SLAVE資訊,有可能是配置檔案問題

1、看看/usr/local/hadoop/logs下的日志

2、檢查/usr/local/hadoop/etc/hadoop/hdfs-site.xml的配置資訊

3、檢查下/etc/hosts的配置,測試的時候,勿把127.0.0.1和hostname綁定一起導緻問題,借鑒

問題的處理方法:

(1)停掉叢集服務

(2)在出問題的datanode節點上删除data目錄,data目錄即是在hdfs-site.xml檔案中配置的dfs.data.dir目錄,本機器上那個是/var/lib/hadoop-0.20/cache/hdfs/dfs/data/ (注:我們當時在所有的datanode和namenode節點上均執行了該步驟。以防删掉後不成功,可以先把data目錄儲存一個副本).

(3)格式化namenode.

(4)重新啟動叢集。

##到此3主備叢集OK